Sliding Window Technique and Algorithm

The sliding window algorithm is a general technique in computer science and algorithm design that is also widely used in natural language processing (NLP). The Sliding Window Technique is useful for problems involving arrays or lists where a program must process a contiguous subset of the elements. The idea behind the Sliding Window Algorithm is to maintain a window that slides over the data structure and to perform operations on that smaller data segment, often reusing the result from the previous window position, rather than iterating over the entire data structure multiple times. In NLP, the Sliding Window Algorithm processes text data in chunks or windows of a fixed size for text segmentation, feature extraction, and pattern recognition tasks.

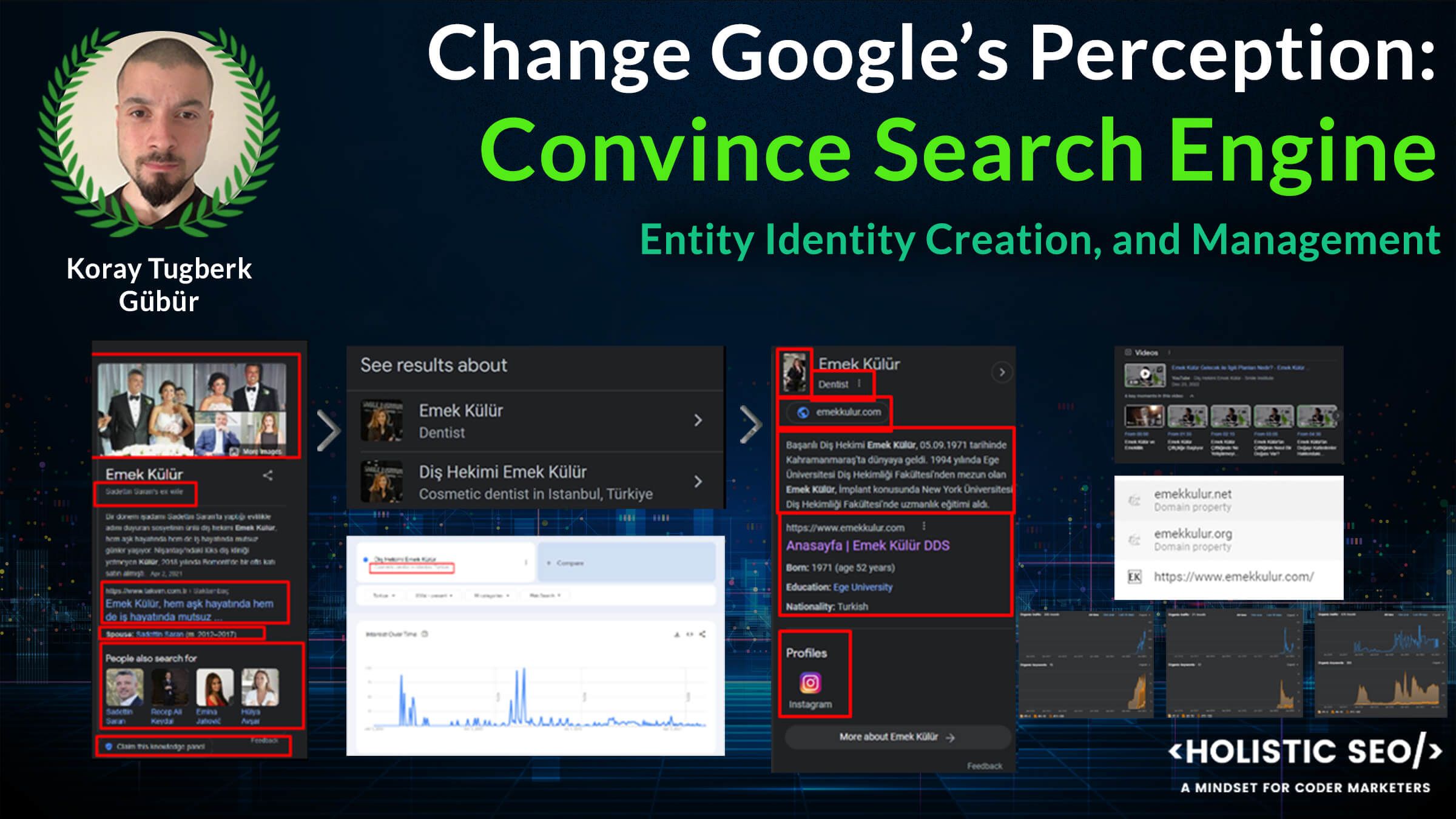

Sliding Window Algorithm Definition

The Sliding Window Algorithm efficiently processes a contiguous subset of elements (the window), usually of fixed size, that moves sequentially over a data structure. Some variants let the window grow and shrink as it moves.

Sliding Window Technique Invention

The sliding window technique, originating from early signal processing and data transmission, evolved into a fundamental algorithmic concept for efficiently processing sequential data by maintaining a fixed-size window that moves across the dataset.

Sliding Window Algorithm in Programming

The sliding window algorithm needs only basic data structures such as arrays, lists, and queues. It can therefore be implemented in any mainstream programming language, including Python, Java, C++, and JavaScript.

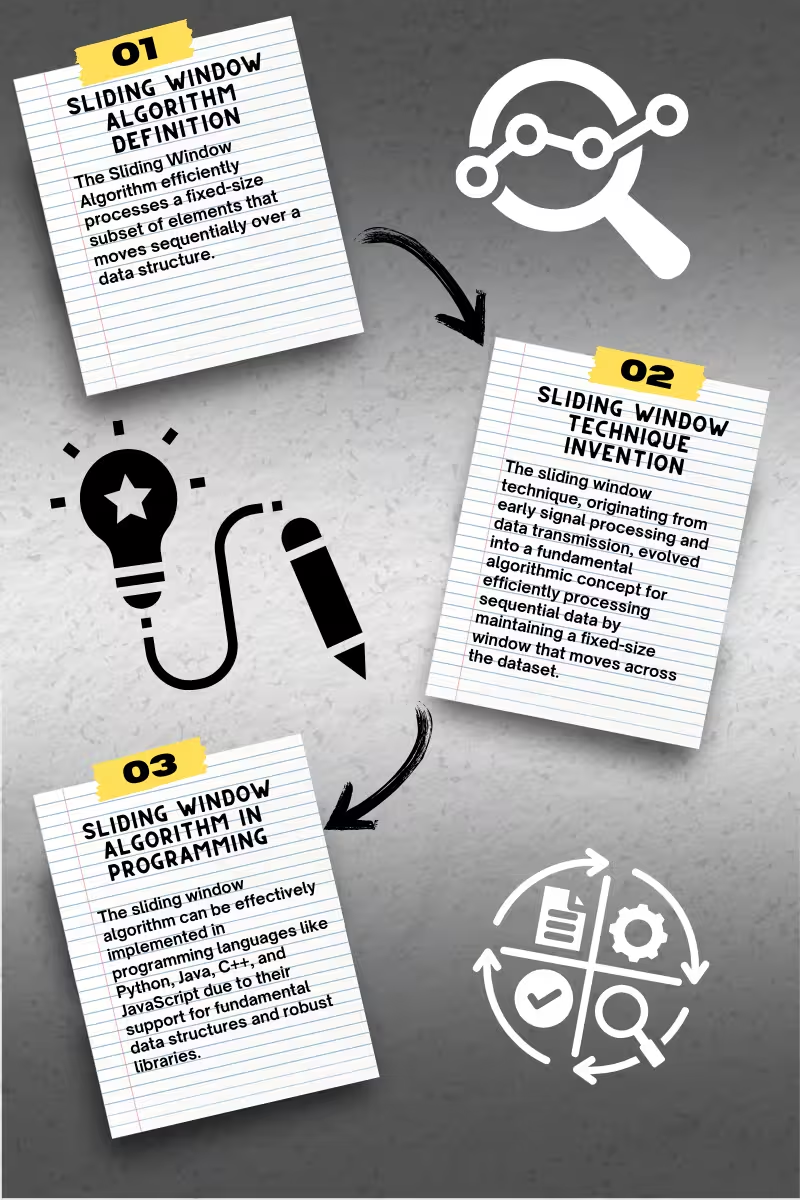

How does the Sliding Window Algorithm Work in Natural Language Processing (NLP)?

The Sliding Window algorithm helps process natural language in tasks such as n-gram generation, feature extraction for machine learning models, contextual embeddings, sentiment analysis, and named entity recognition.

- N-gram Generation: The sliding window generates n-grams, sequences of ‘n’ contiguous words or characters from a text.

- Feature Extraction: Extract features from text for machine learning models. For example, in text classification, features like word frequencies or TF-IDF scores can be computed within each window.

- Contextual Embeddings: When generating embeddings for words or sentences, the sliding window can help create context windows around each word, generating context-sensitive embeddings.

- Sentiment Analysis: Analyzing sentiment over different text parts by examining each window to capture local sentiment variations.

- Named Entity Recognition (NER): Identifying named entities within different parts of the text by examining windows of words around potential entities.
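Three of the uses above can be sketched in a few lines of Python: word and character n-grams, per-window word counts as simple features, and symmetric context windows around each word (the kind of word/context pairs used when training embeddings). This is a minimal sketch under stated assumptions. Tokenization is plain whitespace splitting with `str.split()`. The function names `word_ngrams`, `char_ngrams`, `window_term_counts`, and `context_windows` are hypothetical and do not come from any library.

```python
from collections import Counter


def word_ngrams(tokens, n):
    """Return every contiguous n-gram (as a tuple) in a list of tokens."""
    if n <= 0:
        raise ValueError("n must be greater than 0")
    # Window i covers tokens[i:i + n]; an input shorter than n yields no n-grams
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def char_ngrams(text, n):
    """Return every contiguous character n-gram in a string."""
    if n <= 0:
        raise ValueError("n must be greater than 0")
    return [text[i:i + n] for i in range(len(text) - n + 1)]


def window_term_counts(tokens, window_size):
    """Word frequencies inside each window, usable as simple per-window features."""
    return [Counter(window) for window in word_ngrams(tokens, window_size)]


def context_windows(tokens, radius):
    """Pair each token with up to `radius` neighbours on each side of it."""
    pairs = []
    for i, center in enumerate(tokens):
        left = tokens[max(0, i - radius):i]
        right = tokens[i + 1:i + 1 + radius]
        pairs.append((center, left + right))
    return pairs


tokens = "the cat sat on the mat".split()
print(word_ngrams(tokens, 2))
print(char_ngrams("text", 2))         # ['te', 'ex', 'xt']
print(window_term_counts(tokens, 5)[0])
print(context_windows(tokens, 1)[1])  # ('cat', ['the', 'sat'])
```

The same slicing pattern serves every task. Only the window length (`n`, `window_size`, or `2 * radius + 1`) and what is computed on each window change.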

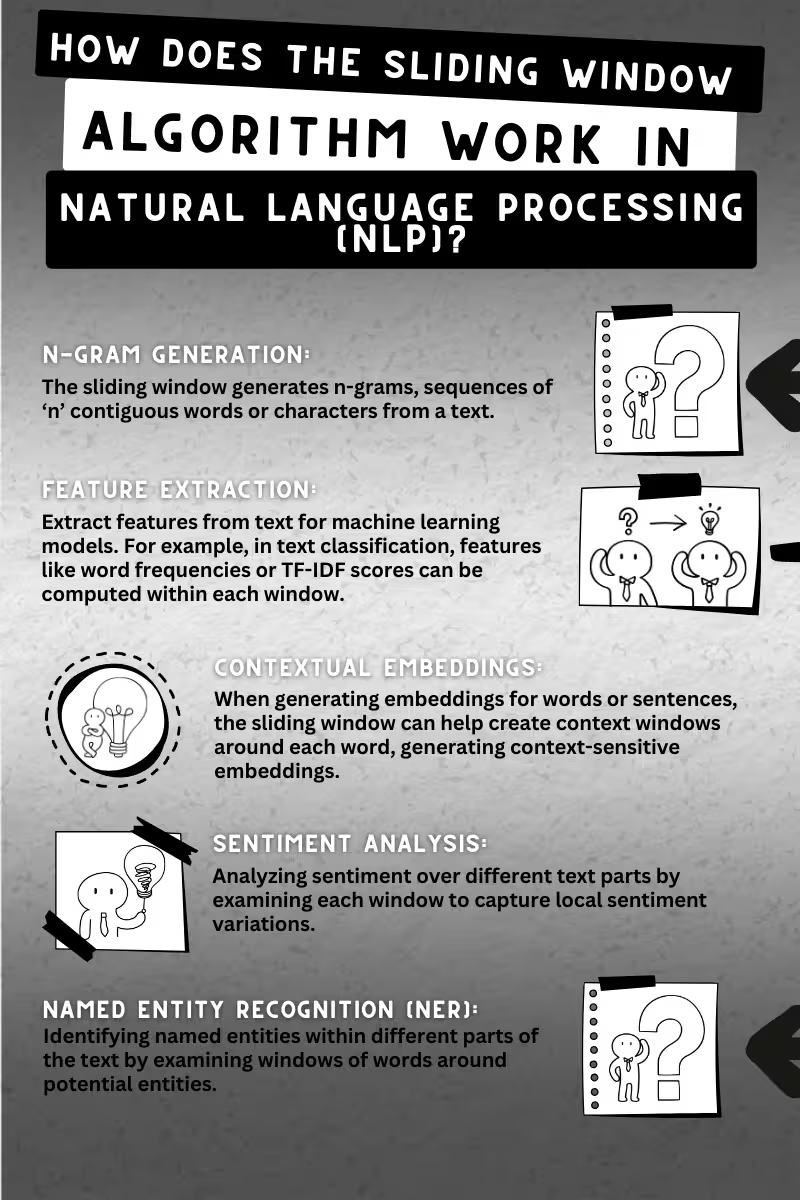

How to Use Sliding Window Algorithm for Natural Language Understanding (NLU)?

To use the Sliding Window Algorithm for Natural Language Understanding (NLU), first determine the range of the sliding window, then analyze the connections between lexemes and word units inside each window. Sliding windows also appear alongside transformer models. Google's BERT (Bidirectional Encoder Representations from Transformers) accepts only a limited number of tokens per input (512 in the original models), so longer documents are commonly split into overlapping windows before they are passed to the model. Below are the four main steps to implement the Sliding Window Technique for NLU.

- Define the Window Size:

- Determine the appropriate window size based on the specific task and the length of the text segments. The window size can vary depending on whether you work with tokens (words) or characters.

- For example, if you’re analyzing sentences, a window size of 5-10 words might be appropriate.

- Initialize the Window:

- Start at the beginning of the text and create the first window by selecting the initial set of words or characters based on the defined window size.

- For instance, if your text is “Natural Language Understanding is crucial,” and your window size is 3, your initial window would be [“Natural”, “Language”, “Understanding”].

- Slide the Window and Analyze:

- Move the window across the text one position at a time (or by a predefined stride) and analyze the content within each window.

- Extract relevant features, such as n-grams, part-of-speech tags, named entities, and syntactic dependencies, within each window.

- Identify connections between lexemes and word units by examining how words within the window relate contextually.

- Update and Repeat:

- Continue sliding the window across the text until the entire text is processed. At each step, update the window by adding the next word and removing the first word from the previous window.

- For example, the window after [“Natural”, “Language”, “Understanding”] would be [“Language”, “Understanding”, “is”].
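The four steps above can be sketched in Python with a fixed-length `collections.deque`. Appending the next word to a full deque automatically removes the oldest one, which is exactly the "Update and Repeat" step. The function name `token_windows` and its `stride` handling are illustrative assumptions rather than a standard API. The analysis of each window (step 3) is left to the caller.

```python
from collections import deque


def token_windows(tokens, window_size, stride=1):
    """Yield windows of `window_size` tokens, advancing `stride` tokens per step.

    Works on any iterable (including a stream), keeping only one window in memory.
    """
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be greater than 0")
    # Steps 1-2: the window size is fixed; a deque with maxlen drops its oldest item on append
    window = deque(maxlen=window_size)
    for i, token in enumerate(tokens):
        window.append(token)  # Step 4: add the next word (the oldest falls out)
        start = i - window_size + 1  # index of the first token in the current window
        # Emit only full windows whose start position is a multiple of the stride
        if start >= 0 and start % stride == 0:
            yield list(window)


text = "Natural Language Understanding is crucial"
for window in token_windows(text.split(), window_size=3):
    print(window)  # Step 3: analyze each window here
```

With `stride=1`, this reproduces the windows in the example above. With `stride=2`, the window skips one position between steps.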

What are the Advantages of Sliding Window Algorithm?

The six main advantages of the sliding window algorithm are efficiency, decreased memory usage, real-time processing, simplicity, versatility, and contextual analysis.

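- Efficiency: For aggregates such as sums or counts, the sliding window algorithm does not recompute the result from scratch for every window. It updates the result incrementally: each slide adds the element that enters the window and removes the one that leaves it. For a sequence of n elements and a window of size k, this reduces the work from roughly n × k operations to roughly n, which leads to faster processing times, especially for large datasets.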

- Decreased Memory Usage: The sliding window algorithm is memory efficient, maintaining only a small subset of the data in memory at any given time. This is particularly beneficial when working with limited memory resources.

- Real-Time Processing: The algorithm is well-suited for real-time applications that continuously stream data. It allows for immediate processing and analysis of incoming data without waiting for the entire dataset to be available.

- Simplicity: The sliding window algorithm’s concept and implementation are straightforward, making it accessible and easy to implement for a wide range of problems.

- Versatility: The sliding window technique can be applied to various data structures and problems, including time series analysis, signal processing, and text processing in NLP.

- Contextual Analysis: In NLP, the sliding window allows for detailed contextual analysis within each window, improving the understanding of word relationships and enhancing tasks such as named entity recognition and sentiment analysis.
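The efficiency, memory, and real-time advantages above can be seen together in a streaming moving average. This is a minimal Python sketch that assumes a stream of numbers; `moving_averages` is a hypothetical helper name. Each new value updates a running total in constant time. Only `window_size` values are held in memory, and a result is produced as soon as each window is full, so the input can be an unbounded generator.

```python
from collections import deque


def moving_averages(stream, window_size):
    """Yield the mean of the last `window_size` values for every full window in `stream`.

    The running total is updated in O(1) per element (add the new value,
    subtract the value that leaves the window) instead of re-summing the
    whole window, and only `window_size` values are kept in memory.
    """
    if window_size <= 0:
        raise ValueError("window_size must be greater than 0")
    window = deque()
    total = 0.0
    for value in stream:
        window.append(value)
        total += value
        if len(window) > window_size:
            total -= window.popleft()  # remove the value that slid out of the window
        if len(window) == window_size:
            yield total / window_size


print(list(moving_averages([1, 2, 3, 4, 5], 2)))  # [1.5, 2.5, 3.5, 4.5]
```

Because the function is a generator, a caller can consume results one at a time as data arrives instead of waiting for the full dataset.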

How to Understand the Benefits of the Sliding Window Technique?

Use the dictionary definitions for the concepts below to better understand the benefits of the Sliding Window Technique in the Natural Language Processing, Understanding, and Generation fields.

- Sliding Window Technique: A computational method used to process a subset of elements in a data structure by maintaining a fixed-size window that “slides” over the data, performing operations on each subset.

- Window Size: The number of elements included in each subset or window. The window size determines the scope of the analysis performed at each step of the sliding window algorithm.

- Stride: The number of positions by which the window moves after each operation. A stride of one means the window moves one element at a time, while larger strides mean the window skips elements between operations.

- Contextual Analysis: Examining the context in which data elements (such as words in a text) appear to understand their relationships, meanings, and implications.

- Real-Time Processing: The ability to process data as received, without delay, allowing for immediate analysis and response.

- Feature Extraction: Identifying and extracting relevant features or attributes from the data within each window. In NLP, this can include n-grams, part-of-speech tags, and named entities.

- Named Entity Recognition (NER): A subtask of information extraction that seeks to locate and classify named entities in text into predefined categories such as person names, organizations, locations, and more.

- Sentiment Analysis: The process of determining the emotional tone or sentiment expressed in a text segment, often used to gauge opinions and attitudes.

- Bidirectional Encoder Representations from Transformers (BERT): A transformer-based model developed by Google that uses self-attention over its whole input sequence for natural language understanding. Its input length is limited (512 tokens in the original models), so long texts are often processed as overlapping sliding windows.
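The Window Size and Stride definitions above determine how many full windows fit over a sequence. For a sequence of length n, window size w, and stride s (with n ≥ w), there are floor((n − w) / s) + 1 windows. The short Python sketch below computes their start indices. The helper name `window_starts` is hypothetical.

```python
def window_starts(n, window_size, stride=1):
    """Return the start index of every full window over a sequence of length n."""
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be greater than 0")
    if n < window_size:
        return []
    # Number of full windows: floor((n - window_size) / stride) + 1
    count = (n - window_size) // stride + 1
    return [i * stride for i in range(count)]


print(window_starts(8, 3))            # [0, 1, 2, 3, 4, 5]
print(window_starts(8, 3, stride=2))  # [0, 2, 4]
```

With a stride of 2, the element at index 7 is never covered. Implementations that must see every element often pad the sequence or add a final partial window.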

Who Invented the Sliding Window Algorithm?

How to Use Sliding Window Technique with Python for NLP?

To use the Sliding Window technique in NLP and NLU, start with the Python script below. It validates its input with if statements and documents its parameters in a docstring.

```python
def sliding_window(elements, window_size):
    """
    Generate a list of sliding windows from the input list.

    Parameters:
    elements (list): The input list to generate windows from.
    window_size (int): The size of each sliding window.

    Returns:
    list: A list of lists, where each sublist is a sliding window.
    """
    # A window must contain at least one element
    if window_size <= 0:
        raise ValueError("Window size must be greater than 0")
    # A list shorter than the window cannot produce any full window
    if len(elements) < window_size:
        return []
    # Each start index i yields the slice elements[i:i + window_size]
    return [elements[i:i + window_size] for i in range(len(elements) - window_size + 1)]


# Example usage with a list
lst = [1, 2, 3, 4, 5, 6, 7, 8]
window_size = 3
windows = sliding_window(lst, window_size)
for window in windows:
    print(window)
```

The description of the Python code example above for the Sliding Window technique is below.

- Define the Function: The function sliding_window generates sliding windows from an input list. It takes two parameters: elements (the input list) and window_size (the size of each sliding window).

- Parameter Validation: The function first checks if window_size is less than or equal to zero. If it is, it raises a ValueError because the window size must be positive. It then checks if the length of the elements list is smaller than window_size. If so, it returns an empty list because no window of the specified size can be created from the list.

- Generate Sliding Windows:

- If the input parameters are valid, the function generates the sliding windows.

- It uses a list comprehension to create the sliding windows. The list comprehension iterates over the start indices of the elements list, from 0 up to and including len(elements) - window_size, which is what range(len(elements) - window_size + 1) produces.

- For each index, it creates a sublist (window) that starts at that index and contains window_size elements.

- Return the Result: The function returns a list of sublists, each containing a sliding window of the specified size.

- Example Usage: An example of the sliding_window function is provided, where the lst variable is a list of integers from 1 to 8, and window_size is set to 3.

- The sliding_window function is called with lst and window_size, and the result is stored in the variable windows.

- A for loop iterates over the windows list and prints each sliding window.

The output of the Python script for the Sliding Window usage example is below.

```
[1, 2, 3]
[2, 3, 4]
[3, 4, 5]
[4, 5, 6]
[5, 6, 7]
[6, 7, 8]
```

To use the Sliding Window technique for textual data instead of integers, follow and examine the Python script below.

```python
def sliding_window_words(text, window_size):
    """
    Generate a list of sliding windows from the input text, based on words.

    Parameters:
    text (str): The input text to generate windows from.
    window_size (int): The size of each sliding window in terms of words.

    Returns:
    list: A list of strings, where each string is a sliding window of words.
    """
    if window_size <= 0:
        raise ValueError("Window size must be greater than 0")
    # Split the text on whitespace to get the words
    words = text.split()
    if len(words) < window_size:
        return []
    # Join the words of each window back into a single string
    return [' '.join(words[i:i + window_size]) for i in range(len(words) - window_size + 1)]


# Example usage with a string
s = "Koray Tugberk GUBUR explains sliding window concept to help SEOs around the globe."
window_size = 3
windows = sliding_window_words(s, window_size)
for window in windows:
    print(window)
```

The output of the Python script for using the sliding window technique on textual data is below.

```
Koray Tugberk GUBUR
Tugberk GUBUR explains
GUBUR explains sliding
explains sliding window
sliding window concept
window concept to
concept to help
to help SEOs
help SEOs around
SEOs around the
around the globe.
```

How to Use Sliding Window with Java Programming Language?

```java
import java.util.ArrayList;
import java.util.List;

public class SlidingWindow {

    public static List<List<Integer>> slidingWindow(int[] elements, int windowSize) {
        // Check if window size is valid
        if (windowSize <= 0) {
            throw new IllegalArgumentException("Window size must be greater than 0");
        }
        // Check if elements array is shorter than the window size
        if (elements.length < windowSize) {
            return new ArrayList<>();
        }
        List<List<Integer>> result = new ArrayList<>();
        for (int i = 0; i <= elements.length - windowSize; i++) {
            List<Integer> window = new ArrayList<>();
            for (int j = i; j < i + windowSize; j++) {
                window.add(elements[j]);
            }
            result.add(window);
        }
        return result;
    }

    public static void main(String[] args) {
        int[] lst = {1, 2, 3, 4, 5, 6, 7, 8};
        int windowSize = 3;
        List<List<Integer>> windows = slidingWindow(lst, windowSize);
        for (List<Integer> window : windows) {
            System.out.println(window);
        }
    }
}
```

The description of the code example with Java for the Sliding Window algorithm is below.

- Define the Method:

- slidingWindow: This method generates sliding windows from an input array.

- Parameters: int[] elements (input array) and int windowSize (size of each sliding window).

- Returns: A list of lists, where each inner list represents a sliding window.

- Perform Parameter Validation:

- The method checks if windowSize is less than or equal to zero. If it is, it throws an IllegalArgumentException.

- It checks if the length of the elements array is smaller than windowSize. If so, it returns an empty list.

- Generate Sliding Windows:

- It creates a result list to store the sliding windows.

- It iterates over the indices of the elements array from 0 to elements.length - windowSize.

- For each index, it creates a new window list, adds the next windowSize elements from the elements array to this window list, and then adds the window list to the result list.

- Use Main Method:

- The main method provides an example usage of the slidingWindow method.

- It defines an array lst and sets windowSize to 3.

- It calls the slidingWindow method with lst and windowSize, storing the result in windows.

- It iterates over the windows list and prints each sliding window.

The Java Code example for the Sliding Window Technique above creates the output below.

```
[1, 2, 3]
[2, 3, 4]
[3, 4, 5]
[4, 5, 6]
[5, 6, 7]
[6, 7, 8]
```

How to Use Sliding Window with C++ Programming Language?

The C++ example below finds the maximum sum of k consecutive elements in an array. There are 7 main steps to use a sliding window for this task in the C++ programming language.

- Include Necessary Headers:

- #include <iostream> for input and output operations.

- #include <vector> for using the vector container.

- #include <algorithm> for the max function.

- Define a function maxSumOfKConsecutiveElements that takes a constant reference to a vector of integers and an integer k.

- Check if the array’s size is less than k. If so, print an error message and return -1.

- Compute the sum of the first window of size k.

- Slide the window from the start of the array to the end. For each new element in the window, update the window sum by adding the new element and subtracting the element that is no longer in the window.

- Update Maximum Sum: After updating the window sum, check if it is greater than the current maximum sum and update accordingly.

- Create a sample array and call the maxSumOfKConsecutiveElements function to get the maximum sum of k consecutive elements.

```cpp
#include <iostream>
#include <vector>
#include <algorithm> // for max function

using namespace std;

int maxSumOfKConsecutiveElements(const vector<int>& arr, int k) {
    int n = arr.size();
    // The window must be non-empty and must fit inside the array
    if (k <= 0 || n < k) {
        cout << "Invalid" << endl;
        return -1;
    }
    // Compute the sum of the first window of size k
    int max_sum = 0;
    for (int i = 0; i < k; i++) {
        max_sum += arr[i];
    }
    // Compute the sums of remaining windows by removing the first element
    // of the previous window and adding the next element in the array
    int window_sum = max_sum;
    for (int i = k; i < n; i++) {
        window_sum += arr[i] - arr[i - k];
        max_sum = max(max_sum, window_sum);
    }
    return max_sum;
}

int main() {
    vector<int> arr = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10};
    int k = 3;
    cout << "Maximum sum of " << k << " consecutive elements is " << maxSumOfKConsecutiveElements(arr, k) << endl;
    return 0;
}
```

The C++ code example above creates the output below.

```
Maximum sum of 3 consecutive elements is 27
```

The second C++ example uses a variable-size window. The window grows and shrinks to find the length of the longest substring in which all characters are unique.

```cpp
#include <iostream>
#include <unordered_set>
#include <string>
#include <algorithm> // for max function

using namespace std;

int longestUniqueSubstring(const string& str) {
    int n = str.length();
    unordered_set<char> charSet; // characters in the current window
    int maxLength = 0;
    int left = 0, right = 0;
    while (right < n) {
        if (charSet.find(str[right]) == charSet.end()) {
            // Expand: str[right] is new, so add it and record the window length
            charSet.insert(str[right]);
            maxLength = max(maxLength, right - left + 1);
            right++;
        } else {
            // Shrink: drop the leftmost character until str[right] is no longer a duplicate
            charSet.erase(str[left]);
            left++;
        }
    }
    return maxLength;
}

int main() {
    string str = "abcabcbb";
    cout << "Length of the longest substring with all unique characters: " << longestUniqueSubstring(str) << endl;
    return 0;
}
```

- Include Necessary Headers:

- #include <iostream> for input and output operations.

- #include <unordered_set> for using the unordered set container.

- #include <string> for using the string class.

- Define a function longestUniqueSubstring that takes a constant reference to a string.

- Initialize the Sliding Window:

- Use two pointers, left and right, to represent the current window.

- Use an unordered set to keep track of characters in the current window.

- Slide the Window:

- Move the right pointer to expand the window. If the character is not in the set, add it and update the maximum length.

- If the character is already in the set, move the left pointer to shrink the window until the character can be added.

- Create a sample string and call the longestUniqueSubstring function to find the length of the longest substring with all unique characters.

The output of the C++ program above is below.

```
Length of the longest substring with all unique characters: 3
```

What are the Alternatives to the Sliding Window Algorithm for NLP?

Recurrent Neural Networks (1980s), Long Short-Term Memory Networks (1997), Convolutional Neural Networks (late 1980s-1990s), Attention Mechanisms (2014), and Transformer Models (2017) are key alternatives to the sliding window algorithm in NLP, each offering unique advantages in handling sequential data and capturing context.

| Sliding Window Alternatives | Definition | Use Cases | Advantages | Disadvantages |
|---|---|---|---|---|
| Recurrent Neural Networks (RNNs) | Neural networks designed to handle sequential data and maintain memory of previous inputs | Language modeling, machine translation, sentiment analysis | Captures dependencies in sequences, effective for sequential data | Struggles with long-term dependencies (vanishing gradient problem) |
| Long Short-Term Memory Networks (LSTMs) | A type of RNN that mitigates the vanishing gradient problem and captures long-term dependencies | Text generation, time series prediction, speech recognition | Handles long-range dependencies better than standard RNNs | Computationally intensive, slower training |
| Transformer Models | Models that use self-attention to process the entire input sequence simultaneously | Question answering, text classification, summarization | Captures global dependencies, allows parallel processing | Requires large datasets, high computational cost |
| Convolutional Neural Networks (CNNs) | Networks that apply convolutional filters to text sequences to extract local features | Text classification, sentiment analysis, phrase extraction | Efficiently captures local features like n-grams | Limited in capturing long-range dependencies |
| Attention Mechanisms | Mechanisms that dynamically focus on specific parts of the input sequence during prediction | Machine translation, document summarization, QA | Provides flexible and accurate context understanding | Adds computation and memory cost that grows with input length |
| Markov Models | Probabilistic models in which the next state depends only on the current state (or a fixed number of previous states) | Part-of-speech tagging, named entity recognition | Simple, interpretable, and effective for clear state transitions | Assumes limited context, may miss long-range dependencies; can be complex to tune |
| n-Gram Models | Statistical models predicting the next item based on the previous (n-1) items | Language modeling, text prediction, auto-completion | Simple to implement, effective for short-range dependencies | Suffers from sparsity, limited in capturing longer contexts |
| Bidirectional Models | Models that process input sequences in both directions for comprehensive context | Named entity recognition, text classification | Captures context from both ends of a sequence | More computationally expensive than unidirectional models |
| CRF (Conditional Random Fields) | Structured prediction models considering the context of the entire sequence | Sequence labeling, part-of-speech tagging, NER | Takes entire sequence context into account | Requires feature engineering, can be slower to train |
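The n-Gram Models row shows how closely that alternative relates to the sliding window. A bigram (n = 2) model is built by sliding a window of two words over the text and counting which word follows each one-word context. This is a minimal Python sketch with no smoothing, assuming whitespace tokenization. The function names `train_bigram_model` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(tokens):
    """Count next-word frequencies using a sliding window of size 2."""
    counts = defaultdict(Counter)
    # Each window (tokens[i], tokens[i + 1]) is one (context, next word) observation
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


tokens = "the cat sat on the mat and the cat slept".split()
model = train_bigram_model(tokens)
print(predict_next(model, "the"))  # cat (seen twice, versus mat once)
print(predict_next(model, "dog"))  # None
```

The fixed two-word window is also the model's main limitation: nothing outside the window influences the prediction. That limitation is why the neural alternatives in the table exist.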

Natural Language Processing (NLP) is a field of AI that enables machines to understand and generate human language. The sliding window algorithm remains relevant for processing text in smaller segments that capture localized context. More advanced models, such as those in the table above, are often needed to handle long-range dependencies.

- Sliding Window - August 12, 2024
