TF-IDF analysis is one of the oldest methods for estimating what a document is about. Calculating the weight of each word in a document can help SEOs understand the documents that rank on a SERP for a specific query, or the content structure and methodology of a web entity. TF-IDF, short for Term Frequency and Inverse Document Frequency, is useful for extracting related entities and topical phrases. By scanning all the documents in a collection, it weights down common words such as “stop words” and surfaces the terms that distinguish each document. Performing a quick and efficient TF-IDF analysis via Python is easy and useful. We recommend that you do not “focus on numbers” at the expense of user-friendliness when optimizing content, but you can use these techniques for quick analysis at scale.

In this article, we will use Python’s Scikit-Learn library, with the classes, methods, and functions we need from it, along with the Pandas library.

How to Perform TF-IDF Analysis for Text Valuation via Python

The Term Frequency-Inverse Document Frequency (TF-IDF) methodology tries to infer a text’s purpose and topic from how frequently its words occur, both within the text and across other documents. Computers can’t understand texts, but they can turn words into numbers and extract the relationships between them. In TF-IDF analysis, the raw usage count of a word is not what matters most, because the most used words in a text are usually “stop words” such as “will”, “and”, or “you”. These words say nothing about the purpose of the text, so we will try to work out the content’s context by weighting them down.

To learn more about Python SEO, you may read the related guidelines:

- How to resize images in bulk with Python

- How to crawl and analyze a Website via Python

- How to perform text analysis via Python

- How to test a robots.txt file via Python

- How to Compare and Analyse Robots.txt File via Python

- How to Categorize URL Parameters and Queries via Python?

- How to Perform a Content Structure Analysis via Python and Sitemaps

What is the Term Frequency?

Term frequency is how often a word occurs in a text. In its simplest form, it is the raw count of the word’s occurrences in that text.
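As a minimal sketch of the idea, raw term frequency can be counted with Python’s standard library alone. The sample sentence and the simple letters-only tokenizer are assumptions made for illustration; they are not the tokenizer that Scikit-Learn uses.

```python
from collections import Counter
import re

# Illustrative sentence (an assumption, not one of the article's passages).
text = "Turkey signed joint agreements and the agreements aligned with the policy of Turkey"

# Lowercase the text and keep runs of letters as tokens.
tokens = re.findall(r"[a-z]+", text.lower())

# Term frequency as a raw count of each token.
term_frequency = Counter(tokens)

print(term_frequency["agreements"])  # raw count of one word
print(term_frequency.most_common(3))  # the most frequent words
```

Note that the stop word “the” is counted just as often as the topical words here, which is why raw counts alone are not enough.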

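What is Inverse Document Frequency?

Inverse Document Frequency measures how rare a word is across a collection of documents. A word that appears in only a few documents gets a high IDF value, and a word that appears in almost every document gets a low one.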
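The following sketch computes IDF by hand with the smoothed formula that Scikit-Learn documents for its default settings, idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing the term. The three short documents and the `smoothed_idf` helper are hypothetical and exist only for this example.

```python
import math

# Three tiny, made-up documents (already lowercased, split on spaces).
documents = [
    "turkey signed joint agreements",
    "turkey joined the balkan pact",
    "the balkan states signed the pact",
]

def smoothed_idf(term, documents):
    """Smoothed IDF: ln((1 + n) / (1 + df)) + 1."""
    n_docs = len(documents)
    # Document frequency: in how many documents does the term occur?
    doc_freq = sum(1 for doc in documents if term in doc.split())
    return math.log((1 + n_docs) / (1 + doc_freq)) + 1

for term in ["turkey", "joint", "the"]:
    print(term, round(smoothed_idf(term, documents), 3))
```

“joint” occurs in one document and gets the highest value, while “turkey” and “the” occur in two documents each and get a lower one.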

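How do Inverse Document Frequency and Term Frequency Work Together?

Term Frequency is multiplied by Inverse Document Frequency. A word scores highly when it is used often in one document but appears in few of the others, so we can surface the words that reveal the content’s purpose while the stop words are weighted down.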
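A small hand-rolled sketch of the multiplication is shown here, under the assumption of three made-up documents and the smoothed IDF formula ln((1 + n) / (1 + df)) + 1. It skips the length normalization that Scikit-Learn applies by default, so the numbers are not the ones the library would return.

```python
import math
from collections import Counter

# Made-up documents; "the" and "states" occur in all three.
documents = [
    "the balkan pact united balkan states",
    "the states signed the agreements",
    "the empire ruled the states",
]
tokenized = [doc.split() for doc in documents]
n_docs = len(tokenized)

def idf(term):
    # Smoothed inverse document frequency.
    doc_freq = sum(1 for tokens in tokenized if term in tokens)
    return math.log((1 + n_docs) / (1 + doc_freq)) + 1

# Raw term counts for the first document, each multiplied by its IDF.
counts = Counter(tokenized[0])
scores = {term: count * idf(term) for term, count in counts.items()}

# Rank the words of the first document by TF-IDF score.
ranked = sorted(scores, key=scores.get, reverse=True)
for term in ranked:
    print(term, round(scores[term], 3))
```

“the” and “pact” both occur once in the first document, but “the” appears in every document and ends up with a lower score than “pact”.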

To learn more, read our TF-IDF Analysis Guideline.

TF-IDF Analysis Implementation with Python’s SKLearn Library

To perform TF-IDF analysis via Python, we will use the Scikit-Learn (sklearn) library. Scikit-Learn is one of the most frequently used Python libraries for machine learning and scientific work. It provides tools such as regression, classification, and clustering so that developers can give decision-making ability to machines. Scikit-Learn also has a “feature extraction” component for text documents. Thanks to it, we will turn raw documents into a matrix and calculate every word’s weight in a document. After the calculation, we will turn every matrix element into a data frame row.

To start with, I have chosen five example passages from a history book that describes the creation of the “Balkan Pact” in the mid-1930s under Mustafa Kemal Atatürk, founder of the Turkish Republic. First, we need to import the Python modules and define the passages we want to use.

import pandas as pd

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.feature_extraction.text import TfidfTransformer

from sklearn.feature_extraction.text import TfidfVectorizer

Below, we create our documents as a list of passages for TF-IDF analysis in Python.

docs=["Until the early 1930s, Turkey followed a neutral foreign policy with the West by developing joint friendship and neutrality agreements. ",

"These bilateral agreements aligned with Atatürk's worldview. By the end of 1925, Turkey had signed fifteen joint agreements with Western states.",

"In the early 1930s, changes and developments in world politics required Turkey to make multilateral agreements to improve its security. Atatürk strongly believed that close cooperation between the Balkan states based on the principle of equality would have an important effect on European politics.",

"These states had been ruled by the Ottoman Empire for centuries and had proved to be a powerful force. While the origins of the Balkan agreement may date as far back as 1925, the Balkan Pact came into being in the mid-1930s.",

"Several important developments in Europe helped the original idea materialize, such as improvements in the Turkish-Greek alliance and the rapprochement between Bulgaria and Yugoslavia. The most important factor in driving Turkish foreign policy from the mid-1930s onwards was the fear of Italy."]

We have imported CountVectorizer, TfidfTransformer, and TfidfVectorizer to calculate the TF-IDF score of every word in the passages, and Pandas to create the data frames. CountVectorizer turns raw documents into a matrix of token counts.

doc = CountVectorizer()

word_count=doc.fit_transform(docs)

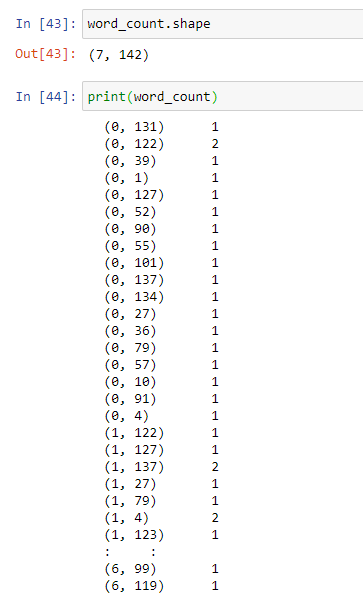

print(word_count.shape)

print(word_count)

The explanation of the code block above can be found in the list below.

- We have created a variable with the “CountVectorizer()” class.

- We have used the “fit_transform” method on our passages via our class object. The main reason for using “CountVectorizer()” is that the “TfidfTransformer” class takes its count matrix as input.

- We have printed its shape and the matrix itself.

You may see the result below:

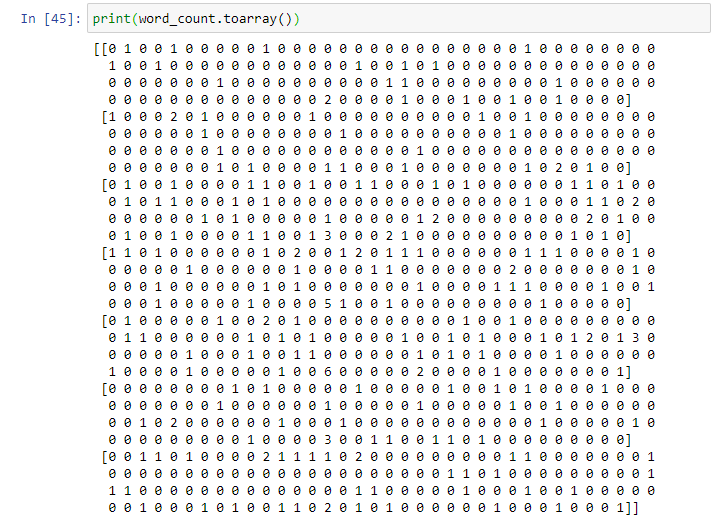

We see the matrix and its shape (documents by unique words) along with the occurrence count of each word in each document. You may also view it as a dense array.

print(word_count.toarray())

It means little to a human, but a computer can work with it. First, we will use TfidfTransformer to calculate the IDF values on their own.

tfidf_transformer=TfidfTransformer(smooth_idf=True,use_idf=True)

tfidf_transformer.fit(word_count)

df_idf = pd.DataFrame(tfidf_transformer.idf_, index=doc.get_feature_names_out(),columns=["idf_weights"])

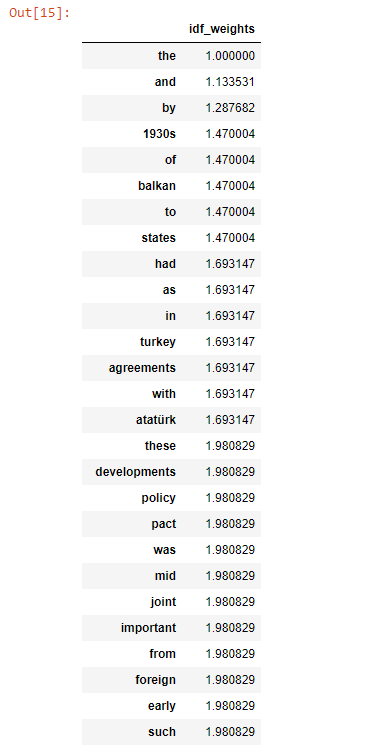

df_idf.sort_values(by=['idf_weights']).head(40)

Below, you can see the explanation of the code block.

- The first line creates a TfidfTransformer object with the “smooth_idf” and “use_idf” parameters. “smooth_idf” adds one to every document frequency, as if one extra document containing every word had been seen, which prevents division by zero. “use_idf” enables the IDF weighting in our calculation.

- In the second line, we use the “fit” method from Scikit-Learn to learn the IDF values from the count matrix.

- In the third line, we turn the output into a data frame, using the words from the vectorizer’s vocabulary as the index and “idf_weights” as the column.

- In the last line, we sort the values by “idf_weights” in ascending order and show the first 40 rows, which are the words with the lowest IDF.
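The smoothing described in the list can be checked by hand. The sketch below recomputes the IDF values with ln((1 + n) / (1 + df)) + 1 and compares them with the `idf_` attribute of the fitted transformer. The three `sample_docs` are a shortened stand-in corpus assumed for this example, not the article’s `docs` list.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer

# Shortened stand-in corpus (an assumption for this example).
sample_docs = [
    "Turkey signed joint agreements with Western states",
    "Turkey made multilateral agreements to improve its security",
    "The Balkan Pact came into being in the mid-1930s",
]

vectorizer = CountVectorizer()
counts = vectorizer.fit_transform(sample_docs)

transformer = TfidfTransformer(smooth_idf=True, use_idf=True)
transformer.fit(counts)

# Document frequency: number of documents in which each word occurs.
n_docs = counts.shape[0]
doc_freq = (counts.toarray() > 0).sum(axis=0)

# Smoothed IDF computed by hand.
manual_idf = np.log((1 + n_docs) / (1 + doc_freq)) + 1

# True when the manual values match the library's values.
print(np.allclose(manual_idf, transformer.idf_))
```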

The data frame lists the IDF values for our passages. As you can see, the less important words, which tell little about the context, have a lower IDF because they appear in most of the passages. The Inverse Document Frequency score falls as the number of documents containing the word rises. Now, we can calculate the TF-IDF scores based on the IDF values.

tf_idf_vector=tfidf_transformer.transform(word_count)

feature_names = doc.get_feature_names_out()

first_document_vector=tf_idf_vector[1]

df = pd.DataFrame(first_document_vector.T.todense(), index=feature_names, columns=["tfidf"])

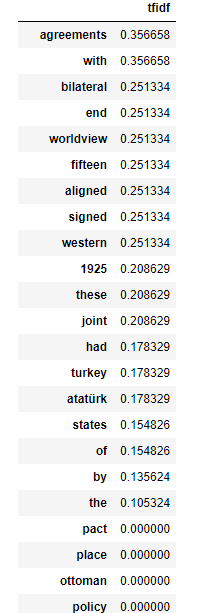

df.sort_values(by=["tfidf"],ascending=False).head(45)

The explanation of the TF-IDF code block is below.

- The first line passes the count matrix to the “transform(word_count)” method, which applies the learned IDF values and stores the result in tf_idf_vector. Each value is TF multiplied by IDF, and with the default settings every document row is then scaled to unit length.

- In the second line, we assign the feature names (words) to another variable.

- In the third line, we assign one document’s row to a variable so that we can show its results in a data frame. Note that index 1 selects the second passage, because Python counts from zero.

- In the fourth line, we create a data frame from our “first_document_vector” variable. We swap the rows and columns with the “.T” attribute and convert the sparse row to a dense matrix with “todense()”. The index comes from the feature names and the column is named “tfidf”. You may see the TF-IDF values for the selected passage below.

Words that occur only in the other passages have a score of 0.0, because the “first_document_vector=tf_idf_vector[1]” line keeps a single passage and they do not appear in it. If you compare the selected passage in our “docs” variable with its scores, you may see that words shared with many passages have lower TF-IDF scores and words unique to it have higher ones. In this way, we weight the stop words down.
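To make the multiplication concrete, the sketch below rebuilds the TF-IDF matrix by hand: raw counts times IDF, then each row divided by its Euclidean length, which is the default `norm="l2"` behavior of TfidfTransformer. The `sample_docs` corpus is a shortened stand-in assumed for this example.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer

# Shortened stand-in corpus (an assumption for this example).
sample_docs = [
    "Turkey signed joint agreements with Western states",
    "Turkey made multilateral agreements to improve its security",
    "The Balkan Pact came into being in the mid-1930s",
]

counts = CountVectorizer().fit_transform(sample_docs)
transformer = TfidfTransformer(smooth_idf=True, use_idf=True)
tfidf = transformer.fit_transform(counts).toarray()

# Step 1: raw count (TF) multiplied by IDF, word by word.
raw = counts.toarray() * transformer.idf_

# Step 2: scale every document row to unit Euclidean length.
manual = raw / np.linalg.norm(raw, axis=1, keepdims=True)

print(np.allclose(manual, tfidf))      # manual result matches the library
print(np.linalg.norm(tfidf, axis=1))   # length of each document row
```

A word that is absent from a document has a count of zero, so its score in that row stays at 0.0 whatever its IDF is.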

Now, we will perform the same process in an easier way thanks to TfidfVectorizer.

tfidf_vectorizer=TfidfVectorizer(use_idf=True)

tfidf_vectorizer_vectors=tfidf_vectorizer.fit_transform(docs)

first_vector_tfidfvectorizer=tfidf_vectorizer_vectors[1]

df = pd.DataFrame(first_vector_tfidfvectorizer.T.todense(), index=tfidf_vectorizer.get_feature_names_out(), columns=["tfidf"])

df.sort_values(by=["tfidf"],ascending=False).head(45)

We have created a “tfidf_vectorizer” object via the “TfidfVectorizer” class.

We have run the fit and transform steps at the same time on our “docs” variable via its “fit_transform” method. The rest is the same as the code block above. You may see the same result below:
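The equivalence of the two approaches can also be checked in code. This sketch, which assumes a shortened stand-in corpus named `sample_docs`, compares the two-step pipeline (CountVectorizer followed by TfidfTransformer) with the one-step TfidfVectorizer.

```python
import numpy as np
from sklearn.feature_extraction.text import (
    CountVectorizer,
    TfidfTransformer,
    TfidfVectorizer,
)

# Shortened stand-in corpus (an assumption for this example).
sample_docs = [
    "Turkey signed joint agreements with Western states",
    "Turkey made multilateral agreements to improve its security",
    "The Balkan Pact came into being in the mid-1930s",
]

# Two steps: count the words, then weight the counts.
count_vectorizer = CountVectorizer()
word_counts = count_vectorizer.fit_transform(sample_docs)
two_step = TfidfTransformer(smooth_idf=True, use_idf=True).fit_transform(word_counts)

# One step: TfidfVectorizer does both internally.
one_step_vectorizer = TfidfVectorizer(use_idf=True)
one_step = one_step_vectorizer.fit_transform(sample_docs)

# Same vocabulary and same scores.
same_vocabulary = count_vectorizer.vocabulary_ == one_step_vectorizer.vocabulary_
same_scores = np.allclose(two_step.toarray(), one_step.toarray())
print(same_vocabulary, same_scores)
```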

Last Thoughts on TF-IDF Analysis via Python

There are hundreds of different methods and possibilities for text analysis via Python. According to Google’s own statements, it doesn’t use TF-IDF analysis in its algorithms, but comparing the content profiles of different HTML documents via TF-IDF analysis for certain queries may still help an SEO see what competitors focus on for a topic. When optimizing content, you shouldn’t care about the numbers in algorithms; you should care about user-friendliness. For now, our TF-IDF analysis article has many missing points, and we will improve it over time.
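As a rough sketch of such a comparison, the snippet below lists the highest-scoring terms for each of several texts. The `pages` dictionary and the `top_terms` helper are hypothetical and stand in for page content you would collect yourself. The `stop_words="english"` option, which the walkthrough above does not use, removes Scikit-Learn’s built-in English stop word list before scoring.

```python
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical page texts; replace them with the content you want to compare.
pages = {
    "page_a": "Balkan Pact history and the Balkan states in the 1930s",
    "page_b": "Turkish foreign policy and Turkish security agreements",
    "page_c": "The Ottoman Empire ruled the Balkan states for centuries",
}

def top_terms(texts, n=3):
    """Return the n highest TF-IDF terms for every text in the dict."""
    vectorizer = TfidfVectorizer(stop_words="english")
    matrix = vectorizer.fit_transform(list(texts.values()))
    # Order the vocabulary by column index so it lines up with the matrix.
    terms = sorted(vectorizer.vocabulary_, key=vectorizer.vocabulary_.get)
    scores = pd.DataFrame(matrix.toarray(), index=list(texts), columns=terms)
    return {name: row.nlargest(n).index.tolist() for name, row in scores.iterrows()}

top = top_terms(pages)
for name, terms in top.items():
    print(name, terms)
```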

Sources:

- https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.CountVectorizer.html#sklearn.feature_extraction.text.CountVectorizer

- https://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.TfidfTransformer.html#sklearn.feature_extraction.text.TfidfTransformer.fit_transform


Hi Koray,

I’ve read an article by Bill Slawski on SEMrush, where he mentioned that TF-IDF analysis has been replaced by the BM-25 algorithm.

I’m just wondering whether you think TF-IDF analysis still has any value, or whether it is just an old-school tactic.

Hello Ahmad Khan,

TF-IDF and BM-25 are both distributional semantics-related methodologies for finding the words that carry the most contextual weight inside a document. The main difference is that BM-25 accounts for document length and term saturation inside the document, while TF-IDF relies on raw term frequency and gives more weight to the less frequently occurring terms. Also, TF-IDF gives an advantage to longer documents since they have more space to include specific terms.

Well done, brother. When I saw Mustafa Kemal Atatürk in the article, the first thing I did was check who the author was. I wish you continued success, brother.

Thank you.