Unsupervised learning is an approach in machine learning that enables algorithms to learn patterns, structures, and relationships from unlabeled data. Unlike supervised learning, where models are trained on labeled examples, unsupervised learning focuses on exploring the inherent structure and information within the data itself. It plays a crucial role in extracting insights, discovering hidden patterns, and making sense of datasets that are not annotated.

Learning refers to the process by which an entity, such as a human or a machine, acquires knowledge, skills, or understanding through experience, study, or practice. It involves the ability to perceive, assimilate, and retain information, and to use that information to adapt, make decisions, and solve problems.

Unsupervised learning works in several stages. It starts with data preparation, followed by exploration and representation, which typically involves clustering or dimensionality reduction. Model training comes next, followed by evaluation and interpretation of the results.

The importance of unsupervised learning lies in its ability to uncover hidden patterns and structures within unlabeled data. By analyzing the data without relying on predefined labels or guidance, unsupervised learning algorithms reveal underlying relationships, clusters, and dependencies that are not apparent in the raw data. This leads to valuable insights, new discoveries, and a broader comprehension of the data, which inform decision-making and drive further analysis and exploration.

Unsupervised learning is used for various purposes. It aids in learning informative features from unlabeled data, which improves supervised learning models. It enables domain adaptation by transferring knowledge from one domain to another. It is valuable for data preprocessing, including tasks like data cleaning, imputation, and outlier detection. It is applied in clustering to group similar data points together. Unsupervised learning techniques also help in dimensionality reduction, reducing the number of variables in high-dimensional datasets while preserving essential information.

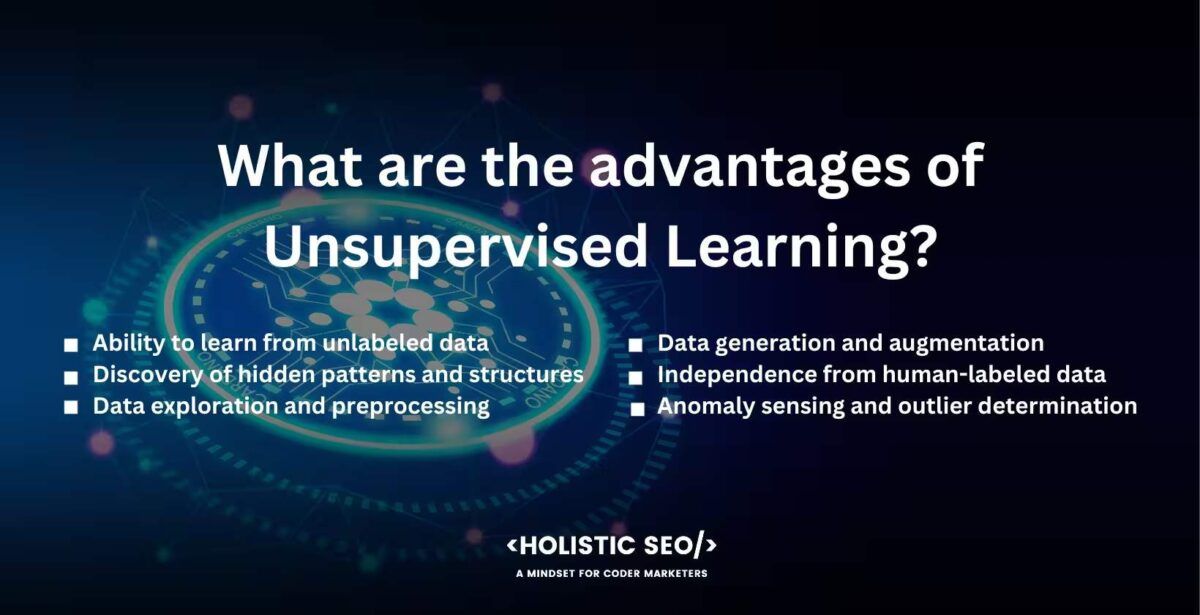

The advantages of unsupervised learning are the ability to learn from unlabeled data, the discovery of hidden patterns and structures, and support for data exploration and preprocessing. Further advantages include data generation and augmentation, independence from human-labeled data, and anomaly and outlier detection.

The disadvantages are a lack of labeled data for evaluation, ambiguity and subjectivity in interpreting results, and difficulty in handling high-dimensional data. Other drawbacks include limited guidance or explicit learning objectives, sensitivity to data preprocessing, and scalability and computational complexity.

What is Unsupervised Learning?

The term unsupervised learning refers to a form of machine learning in which an algorithm learns patterns, structures, or relationships in a dataset with no explicit direction or labeled samples. The algorithm analyzes the data and determines intrinsic patterns or groups with no prior knowledge of the desired outcome. The primary purpose of unsupervised learning is to find the underlying structure or distribution of the data. It aids in the discovery of previously unseen patterns, similarities, or anomalies, and it is frequently used for exploratory data analysis, data visualization, and data preprocessing activities.

What is the other term for Unsupervised Learning?

A term often used alongside unsupervised learning, and sometimes interchangeably with it, is “self-supervised” learning, although strictly it describes a specific family of methods rather than an exact synonym. The phrase “self-supervised learning” emphasizes the view that the learning method is driven by the data itself, with no reliance on explicit labels supplied by human annotators. Self-supervised learning leverages the inherent structure, patterns, or relationships within the unlabeled data to create supervisory signals instead of using labeled information.

Self-supervised learning algorithms normally define pretext tasks or auxiliary objectives that are solved using unlabeled data. The model learns meaningful representations from the data, without requiring explicit annotations, by being trained to predict certain aspects of the data, such as missing parts of an image or the next word in a sentence.

Self-supervised learning has shown favorable results in diverse disciplines, such as computer vision, natural language processing, and audio processing. It allows a model to learn useful representations and serves as a pretraining step before fine-tuning on specific downstream tasks using smaller labeled datasets.

How does Unsupervised Learning work?

Unsupervised learning works by going through various stages.

Data preparation is the first step, in which the dataset is gathered and preprocessed. It involves removing any irrelevant or redundant features, handling missing values, and ensuring that the data is in a suitable format for analysis.

Exploration and representation are the next steps, in which an unsupervised learning algorithm analyzes the dataset to discover patterns or relationships. The exploration involves various techniques, such as clustering or dimensionality reduction.

Clustering is where the algorithm groups similar data points together based on their feature similarities. The algorithm identifies clusters or groups within the data, aiming to maximize the similarity of the data points within each cluster and minimize the similarity between different clusters. Dimensionality reduction techniques aim to reduce the number of input features while preserving important information. The algorithm finds a lower-dimensional representation of the data that captures its essential structure or variance.

Model training is done once the exploration or representation step is complete. The unsupervised learning algorithm trains the model based on the identified patterns or reduced dimensionality, adjusting its parameters or internal representation to best capture the structure of the data.

Evaluation and interpretation come next. Unsupervised learning algorithms do not have explicit target outputs to evaluate against, so evaluation is typically performed using intrinsic metrics instead. Assessing the quality of the results often requires human analysis and domain expertise.

Application and insights are the last steps, as the learned patterns, clusters, or reduced representations are applied to various tasks or used for gaining insight into the data. This involves tasks such as anomaly detection, data visualization, recommender systems, or other downstream applications.

Does Unsupervised Learning involve the prediction of target variables?

No, unsupervised learning does not involve the prediction of target variables. Unsupervised learning concentrates on finding patterns, structures, or associations in the data with no direct guidance or labeled data points. This is the opposite of supervised learning, where algorithms learn from labeled samples to predict a specific target variable.

The algorithms in unsupervised learning investigate the data and, depending on the input properties, find internal patterns or clusters. The intent is to comprehend the data’s fundamental form or distribution, as opposed to making predictions about specific target variables.

Clustering and dimensionality reduction are examples of unsupervised learning strategies that do not require labeled data for training. They rely on the fundamental properties of the data to reveal latent patterns or reduce the dimensionality of the feature space.

Even though unsupervised learning does not involve predicting target variables, the discovered patterns or representations are beneficial for later tasks. For instance, clustering groups similar data points together based on their attributes, which aids in understanding the data’s natural groupings. The reduced representations produced by dimensionality reduction are used for visualization or as input for subsequent algorithms.

Does Unsupervised Learning rely on labeled data for training?

No, unsupervised learning does not rely on labeled data for training. Unsupervised learning pertains to the utilization of unlabeled data, which refers to data lacking explicit target or output values. The main objective of unsupervised learning algorithms is to identify patterns, structures, or relationships within the data without relying on predefined labels.

The algorithms examine the data’s inherent characteristics based solely on the input features. They do not have access to predetermined target variables during the training process. Instead, they concentrate on uncovering the inherent structure or distribution of the data without any explicit guidance.

Unsupervised learning proves particularly valuable when labeled data is scarce or when the primary goal is to explore and comprehend the underlying structure of the data. It enables data-driven insights and uncovers patterns that are not immediately evident through manual examination.

What is the primary purpose of Unsupervised Learning?

The primary purpose of unsupervised learning is to find patterns, structures, and connections inside a dataset with no reliance on labeled or pre-classified data. Unlike supervised learning, which learns from labeled examples to establish an input-output mapping, unsupervised learning concentrates on extracting meaningful insights from unlabeled data.

The goal of unsupervised learning algorithms is to reveal natural structures and groupings in the data, such as clusters or patterns, with no prior knowledge or direction. By identifying similarities, dissimilarities, or commonalities among data points, unsupervised learning enables the discovery of hidden insights, identification of unknown patterns, anomaly detection, or data compression.

Common techniques employed in unsupervised learning include clustering algorithms such as k-means clustering, hierarchical clustering, and density-based clustering. Dimensionality reduction methods, such as principal component analysis (PCA) and t-SNE (t-Distributed Stochastic Neighbor Embedding), are frequently used to extract essential features or reduce data complexity.

Why is Unsupervised Learning important in Machine Learning?

Unsupervised learning is important in machine learning because of the benefits described below.

Data exploration and pattern discovery are possible because unsupervised learning algorithms enable people to explore and analyze huge datasets with no prior knowledge or labeled examples. The algorithms help to reveal hidden patterns, structures, and connections within the data. By identifying these patterns, people acquire valuable insights into the data, comprehend its underlying distribution, and make informed decisions.

Anomaly detection uses unsupervised learning to identify anomalies or outliers in a dataset. By learning the normal behavior of the data, unsupervised algorithms flag instances that deviate significantly from the norm. Anomaly detection has applications in fraud detection, network intrusion detection, and quality control, among others.

Data preprocessing and feature engineering are other benefits that make unsupervised learning important in machine learning. Unsupervised learning techniques are frequently employed in the preprocessing stage of machine learning pipelines. They aid with tasks such as data cleaning, dimensionality reduction, and feature extraction. By decreasing the dimensionality of the data or transforming it into a more suitable representation, unsupervised learning enhances the efficiency and effectiveness of subsequent supervised learning algorithms.

Clustering and segmentation are a common use of unsupervised learning. Clustering involves grouping similar data points together based on their intrinsic attributes. Clustering techniques are useful for market segmentation, customer profiling, image segmentation, and document organization, among others. They allow individuals to find meaningful subgroups within a dataset with no prior knowledge of the groups.

Recommendation systems, which are prevalent in e-commerce, online streaming, and content platforms, build on unsupervised learning methods. The algorithms analyze user behavior and patterns to group users with similar preferences and provide personalized recommendations. Techniques such as collaborative filtering rely on unsupervised learning to identify similar users or items.

Generative modeling depends on unsupervised learning, since the goal is to learn the underlying probability distribution of the data. By learning from examples with no labels, generative models produce new samples that resemble the original data distribution. Generative models have applications in image synthesis, text generation, and data augmentation.

What are the Types of Unsupervised Learning Algorithms?

Unsupervised learning algorithms are machine learning algorithms that learn patterns, structures, or connections from unlabeled data. They work on data that is not annotated and aim to extract meaningful information without explicit directions. Unsupervised learning algorithms are commonly grouped by the type of problem they solve, and the two types covered here are clustering and association rules.

The types of unsupervised learning algorithms are listed below.

- Clustering: Clustering is a fundamental concept in unsupervised learning, where data points are grouped based on their inherent attributes or properties. The algorithm identifies natural clusters or patterns in the data without predefined class labels. It aids in data exploration, pattern recognition, anomaly detection, and data compression by maximizing similarity within clusters and minimizing similarity between different clusters.

- Association Rules: Association rules are a technique used by unsupervised learning algorithms to discover relationships among variables in a dataset. The rules capture regularities or dependencies between items or attributes without relying on predefined class labels. The algorithm examines the presence, absence, and co-occurrence of elements to identify frequent itemsets and generate association rules with antecedents and consequents. Association rules have applications in market basket analysis, decision-making, recommendation systems, and data exploration, aiding in cross-selling, knowledge discovery, and pattern recognition.

1. Clustering

Clustering is a fundamental concept in unsupervised learning algorithms. Clustering involves grouping similar data points together based on their innate attributes or properties. It helps to pinpoint natural clusters or patterns in a particular set of data with no requirement for predefined class labels or target values.

The algorithm in clustering examines the data and allocates data points to separate clusters based on their similarities or distances from one another. The objective is to maximize the similarity within clusters and minimize the similarity between distinct clusters. The algorithm normally adjusts the cluster assignments iteratively until they converge. Clustering is significant because it supports data exploration, pattern recognition, anomaly detection, and data compression.

2. Association Rules

Association rules are a technique employed by unsupervised learning algorithms to discover interesting relationships or associations between variables in a dataset. Association rules seek to identify patterns or dependencies between distinct items or attributes with no dependence on predefined class identifiers or target variables.

The algorithm in association rule mining investigates the presence, absence, and co-occurrence of items or attributes within transactions or observations. It attempts to identify frequent itemsets, which are combinations of items that appear together frequently in the dataset. Association rules are derived from the frequent itemsets to indicate the likelihood of certain items occurring together.

Association rules include an antecedent (or premise) and a consequent (or conclusion). They are frequently expressed as “IF antecedent THEN consequent.” The antecedent is the item or characteristic that serves as a condition, whereas the consequent is the item or characteristic that is predicted or associated with the antecedent.

There are two main stages in association rule mining, namely the generation of frequent itemsets and rule generation. In the first stage, the algorithm examines the dataset to determine itemsets that occur frequently together, typically by requiring a minimum support. In the second stage, the algorithm generates association rules from the frequent itemsets and keeps those that meet thresholds such as minimum support and minimum confidence.
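The two measures named above can be illustrated with a small sketch. The transactions and item names below are hypothetical, and the helper functions `support` and `confidence` are illustrative names rather than part of any particular library. Support is taken here as the fraction of transactions containing an itemset, and confidence as the support of the whole rule divided by the support of its antecedent.

```python
# Hypothetical toy transactions; the item names are illustrative only.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)

def confidence(antecedent, consequent, transactions):
    """Support of antecedent and consequent together, divided by support of the antecedent."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)

# Rule: IF bread THEN milk
rule_support = support({"bread", "milk"}, transactions)
rule_confidence = confidence({"bread"}, {"milk"}, transactions)
print(rule_support, rule_confidence)
```

In this toy data, bread and milk appear together in two of four transactions, and bread appears in three, so the rule holds in two of the three transactions that contain bread.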

Association rules are essential for unsupervised learning because they support market basket analysis, decision-making, cross-selling and recommendation systems, and data exploration and knowledge discovery.

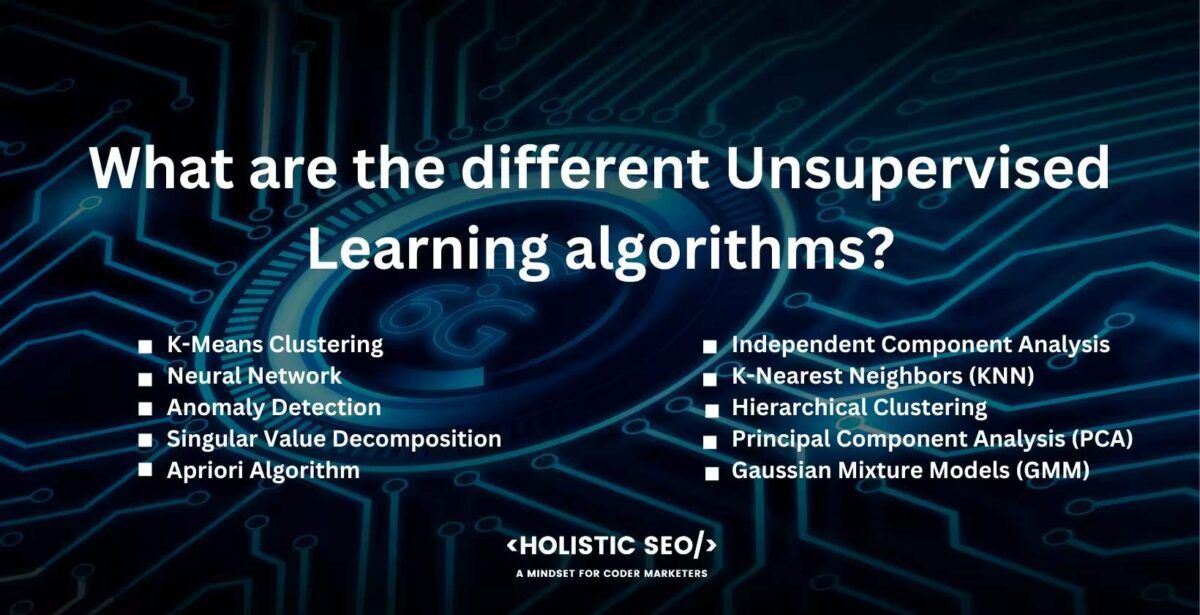

What are the different Unsupervised Learning algorithms?

The different unsupervised learning algorithms are listed below.

- K-Means Clustering: K-Means clustering is an unsupervised learning technique that groups similar data points into K clusters based on their attributes. It involves initializing K centroids, assigning data points to the nearest centroid, and updating the centroids by recalculating the mean. It is commonly used for document clustering, anomaly detection, image compression, and customer segmentation, but requires specifying the number of clusters in advance and works best with continuous numerical data.

- Neural Network: A neural network is a computational model inspired by the human brain, used in unsupervised learning to discover patterns in unlabeled data. The model consists of interconnected neurons organized in layers, with weights assigned to their connections. Neural networks are utilized for tasks like clustering, dimensionality reduction, and generative modeling, with a key role in learning meaningful representations from raw data for various applications.

- Anomaly Detection: Anomaly detection is the process of identifying rare or abnormal instances in data without prior knowledge of what constitutes normal behavior. It uses statistical methods, clustering algorithms, density estimates, and neural networks to identify patterns that deviate from the norm. Anomaly detection is crucial in unsupervised learning as it helps identify unusual occurrences in data, making it valuable in various domains such as fraud detection, network intrusion detection, and quality control in manufacturing.

- Singular Value Decomposition: Singular Value Decomposition (SVD) is a mathematical technique used in unsupervised learning for dimensionality reduction and data analysis. It breaks down a matrix into three matrices, allowing the original data to be represented in a lower-dimensional space while retaining important information. SVD is valuable for tasks such as feature extraction, data analysis, and pattern detection, and is applied in various domains including recommendation systems, image compression, and text mining.

- Apriori Algorithm: The Apriori algorithm is an unsupervised learning method used for frequent itemset mining and association rule learning. It discovers relationships and patterns among items in transactional datasets based on support and confidence metrics. The algorithm generates candidate itemsets, prunes them based on support thresholds, and iteratively identifies frequent itemsets. Apriori is valuable in various applications, including market basket analysis, customer behavior analysis, and recommendation systems, where identifying associations and dependencies is important.

- Independent Component Analysis (ICA): Independent Component Analysis (ICA) is an unsupervised learning algorithm used to separate mixed signals into their original independent components. It leverages statistical independence and non-Gaussianity to identify the transformation that maximizes the independence of the components. ICA is valuable in blind source separation, signal processing, and feature extraction applications, particularly in fields like image and speech processing, where it separates mixed signals or images into their underlying sources.

- K-Nearest Neighbors (KNN): K-Nearest Neighbors (KNN) is an instance-based learning algorithm that assigns labels to new data points based on the majority vote of their k nearest neighbors in the training set. It calculates the similarity between data points using distance metrics and selects the k closest neighbors to determine the label of a new data point. Strictly speaking, KNN is a supervised method because it needs labeled training data, but its nearest-neighbor search is also used in unsupervised settings to find data points that resemble or lie close to each other.

- Hierarchical Clustering: Hierarchical clustering is an unsupervised learning method that creates a hierarchical decomposition of data by merging or splitting groups based on similarity. It has two approaches: agglomerative clustering, which starts with individual data points as clusters and progressively merges similar clusters, and divisive clustering, which begins with all data points in one cluster and recursively divides them. Hierarchical clustering is widely used to identify natural groupings and structures in various domains, including biology, social sciences, and market segmentation.

- Principal Component Analysis (PCA): Principal Component Analysis (PCA) is a mathematical method used in unsupervised learning to transform high-dimensional data into a lower-dimensional representation. It captures the maximum variance in the data by finding orthogonal directions called principal components. The components are the eigenvectors of the data’s covariance matrix, with higher-ranked components capturing more variance. PCA is valuable for data exploration, visualization, feature extraction, and as a pre-processing step for other unsupervised learning tasks.

- Gaussian Mixture Models (GMM): A Gaussian Mixture Model (GMM) is a probabilistic model used in unsupervised learning for clustering and density estimation. It represents the data as a mixture of Gaussian distributions, where each Gaussian component corresponds to a cluster. GMM assigns probabilities to data points to determine their cluster assignment and estimates model parameters using Maximum Likelihood Estimation, typically via the Expectation-Maximization (EM) algorithm. GMM is applied in various tasks such as clustering, density estimation, and data generation, enabling the discovery of underlying data structures and identification of clusters or modes.
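As an illustration of the PCA entry in the list above, the following is a minimal sketch that assumes NumPy is available. It centers the data, takes the eigenvectors of the covariance matrix as principal components, and projects onto the first one. The data values and the variable names (`X`, `components`, `explained_ratio`, `X_reduced`) are hypothetical and chosen only for the example.

```python
import numpy as np

# Hypothetical 2-D data whose spread lies mostly along one diagonal direction.
X = np.array([
    [2.0, 1.9], [0.5, 0.7], [3.1, 3.0],
    [1.0, 1.2], [4.0, 3.8], [2.5, 2.6],
])

# Center the data so the covariance describes spread around the mean.
X_centered = X - X.mean(axis=0)
cov = np.cov(X_centered, rowvar=False)

# eigh handles symmetric matrices and returns eigenvalues in ascending order.
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]          # reorder so the largest variance comes first
eigvals, components = eigvals[order], eigvecs[:, order]

explained_ratio = eigvals / eigvals.sum()  # share of variance per component
X_reduced = X_centered @ components[:, :1] # project onto the first principal component
print(explained_ratio)
```

Keeping only the first component reduces each two-dimensional point to a single coordinate while retaining most of the variance in this toy data.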

1. K-Means Clustering

K-Means clustering is a well-known unsupervised learning procedure that is used to arrange similar data points into groups. K-Means clustering attempts to split data into K clusters, where K is a user-specified parameter. Each cluster is represented by a centroid, which is the average or middle point of the data points within that cluster.

K-Means clustering is an iterative, step-by-step procedure. The initialization stage involves randomly placing K centroids in the feature space. The assignment step involves assigning each data point to the nearest centroid based on a distance metric, most commonly Euclidean distance. The centroids are recalculated in the update stage by taking the mean of the data points assigned to each cluster. The stages are iterated until a convergence criterion is reached, such as a defined number of iterations or the centroids no longer changing appreciably. The technique generates K clusters, with each data point assigned to the cluster indicated by its closest centroid.
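The stages above can be sketched in plain Python. This is a minimal illustration rather than a production implementation. It assumes that the initial centroids are sampled from the data points themselves, which is one common way to place them, and the function name `kmeans` and its parameters `max_iters` and `seed` are hypothetical.

```python
import random

def kmeans(points, k, max_iters=100, seed=0):
    """Plain k-means on a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    # Initialization: pick k distinct data points as starting centroids (an assumption).
    centroids = rng.sample(points, k)
    labels = []
    for _ in range(max_iters):
        # Assignment: each point joins its nearest centroid (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Update: move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        # Convergence: stop once no centroid has moved.
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, labels

# Hypothetical toy data with two well-separated groups.
points = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
centroids, labels = kmeans(points, k=2)
print(centroids, labels)
```

Which cluster receives index 0 depends on the random initialization, so only the grouping itself is meaningful, not the label numbers.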

K-Means is widely used in a variety of unsupervised learning applications. It is used to cluster documents, identify anomalies, compress images, and segment customers.

The distinction between K-Means and many other clustering algorithms is that it requires the number of clusters to be specified in advance. K-Means is based on the premise that clusters are roughly spherical and of comparable size. It is affected by the initial placement of centroids, so different starting points lead to different results. K-Means works with continuous numerical data.

2. Neural Networks

A neural network in unsupervised learning is a computational model inspired by the structure and function of the human brain. A neural network is used to discover patterns and relationships in unlabeled data without the need for explicit guidance or labeled examples.

Neural networks are made up of interrelated nodes called neurons that are structured in layers. Each neuron receives information, transforms it, and creates an output. Weights are allocated to neuronal connections, which impact the strength of influence one neuron has on another. The network learns by altering the weights in response to patterns in the incoming data.

Neural networks are extensively employed in unsupervised learning for tasks such as clustering, dimensionality reduction, and generative modeling. Feature learning, or representation learning, is one of the distinctive roles of neural networks in unsupervised learning. Neural networks learn meaningful representations or characteristics from raw data automatically, which are subsequently used for a variety of downstream applications.

One key difference between neural networks and other unsupervised learning algorithms is their flexibility in modeling complex relationships.

3. Anomaly Detection

Anomaly detection refers to the identification of rare or abnormal instances or patterns in data with no prior knowledge of what constitutes normal behavior. Anomalies are data points or observations that differ markedly from most of the data, indicating unusual or potentially interesting circumstances or outliers.

The goal of anomaly detection is to distinguish anomalous instances from normal ones without relying on labeled data or specific anomalies to train on. It aims to detect previously unseen or unknown anomalies.

Anomaly detection employs a variety of approaches, including statistical methods, clustering algorithms, density estimation, and neural networks. These approaches examine the data’s features and distributions to uncover patterns that depart from the norm.
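As one simple instance of the statistical methods mentioned above, the sketch below flags values whose z-score (distance from the mean in units of standard deviation) exceeds a threshold. The readings, the function name `zscore_outliers`, and the threshold of 2.0 are illustrative assumptions, not values taken from any specific system.

```python
from statistics import mean, pstdev

def zscore_outliers(values, threshold=2.0):
    """Return (index, value) pairs lying more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []           # all values identical, so nothing deviates
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical sensor readings with one obviously unusual value.
readings = [10.1, 9.8, 10.0, 10.2, 9.9, 10.0, 25.0, 10.1]
outliers = zscore_outliers(readings)
print(outliers)
```

A z-score rule assumes the normal data is roughly bell-shaped and that anomalies are rare. With very small samples a single extreme value also inflates the standard deviation, so the threshold has to be chosen with that in mind.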

Anomaly detection is critical in unsupervised learning because it aids in the identification of odd or possibly interesting patterns or occurrences in data. It is especially useful when labeled data for training supervised algorithms is limited or non-existent. Fraud detection, network intrusion detection, defect detection in industrial systems, outlier detection in data cleansing, and quality control in manufacturing are all applications of anomaly detection.

Anomaly detection differs from other unsupervised learning methods in that it focuses on recognizing outliers or anomalies in the data rather than discovering broad patterns or structures. Anomaly detection seeks to find cases that vary from the norm while clustering algorithms seek to group similar examples together and dimensionality reduction techniques seek to minimize data complexity. Anomaly detection methods are specially developed to address the difficulty of finding unusual and unexpected patterns, whereas other unsupervised learning algorithms are primarily concerned with understanding the broad distribution or structure of the data.

4. Singular Value Decomposition

Singular Value Decomposition (SVD) is a mathematical technique for dimensionality reduction and data analysis in unsupervised learning. SVD is a factorization method that decomposes a given matrix into three matrices: U, Σ, and V^T. The original matrix A is written as A = UΣV^T, where U and V are orthogonal matrices, and Σ is a diagonal matrix containing the singular values. This factorization allows the original data to be represented in a lower-dimensional space while keeping crucial information.

Singular Value Decomposition (SVD) is most commonly used for dimensionality reduction, which is the process of reducing the number of features or variables in a dataset while maintaining the most significant information. A lower-dimensional representation of the data is generated by keeping the largest singular values and the corresponding columns of U and V.
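A minimal sketch of this truncation, assuming NumPy is available, is shown below. The matrix values and the choice of `k = 2` are hypothetical. The variable names follow the A = UΣV^T notation used above, with `s` holding the diagonal of Σ.

```python
import numpy as np

# Hypothetical 4x3 data matrix (rows are samples, columns are features).
A = np.array([
    [1.0, 2.0, 3.0],
    [2.0, 4.0, 6.5],
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.5],
])

# Thin SVD: A = U @ diag(s) @ Vt, with the singular values in s sorted in descending order.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

k = 2  # number of singular values to keep (an illustrative choice)
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]  # rank-k approximation of A
reduced = U[:, :k] * s[:k]                   # k-dimensional coordinates of each row

print(s)
print(np.linalg.norm(A - A_k))  # reconstruction error after truncation
```

The reconstruction error printed at the end equals the discarded singular value here, which shows how the size of the dropped singular values measures the information lost.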

Singular Value Decomposition is useful for data analysis and feature extraction. Important patterns and correlations within the data are detected by examining the singular values and their accompanying singular vectors, which are the columns of U and V. In this way, SVD reveals underlying structures, clusters, or patterns in the data.

Singular Value Decomposition is widely used in various unsupervised learning tasks, including recommendation systems, image compression, text mining, and collaborative filtering. For example, SVD is used in recommendation systems to evaluate user-item interaction matrices to find latent characteristics and produce individualized suggestions.

What distinguishes Singular Value Decomposition from other unsupervised learning methods is its emphasis on matrix decomposition and dimensionality reduction. SVD is concerned with expressing data in a lower-dimensional space by capturing the most significant characteristics or patterns, while clustering algorithms like k-means or hierarchical clustering try to group similar instances together based on distance or similarity metrics.

5. Apriori Algorithm

The Apriori algorithm is an unsupervised learning algorithm applied to frequent itemset mining and association rule learning. It is specifically designed to discover relationships, associations, and patterns among items in a transactional dataset.

The Apriori algorithm is based on two important metrics, which are support and confidence. Support is the relative frequency of an itemset in the dataset, whereas confidence measures the strength of an association rule. The algorithm selects relevant itemsets and association rules by applying minimum thresholds for support and confidence.

The Apriori algorithm works by generating candidate itemsets and pruning them repeatedly based on the minimum support threshold. It first examines the dataset for frequent individual items. The items are then combined to generate bigger candidate itemsets, which are pruned if any of their subsets do not meet the minimum support threshold. The process is repeated until no more frequent itemsets are produced.
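The generate-and-prune loop described above can be sketched as follows. This is a simplified illustration that assumes transactions are given as Python sets. The function name `apriori`, the parameter `min_support`, and the toy transactions are hypothetical, and the sketch stops at frequent itemsets without generating rules.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every frequent itemset."""
    n = len(transactions)

    def support(itemset):
        return sum(1 for t in transactions if itemset <= t) / n

    # Level 1: frequent individual items.
    items = {item for t in transactions for item in t}
    current = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {}
    k = 1
    while current:
        for itemset in current:
            frequent[itemset] = support(itemset)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into candidate k-itemsets.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: drop candidates with an infrequent (k-1)-subset, then check support.
        current = {
            c for c in candidates
            if all(frozenset(sub) in frequent for sub in combinations(c, k - 1))
            and support(c) >= min_support
        }
    return frequent

# Hypothetical toy transactions; the item names are illustrative only.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]
frequent_itemsets = apriori(transactions, min_support=0.5)
for itemset, sup in sorted(frequent_itemsets.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), sup)
```

In this toy data the pair milk and butter falls below the threshold, so the three-item candidate containing it is pruned without its own support ever being counted.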

The Apriori algorithm plays a significant role in unsupervised learning as it helps to discover associations and dependencies in transactional data without the need for labeled examples. It is used to identify frequently co-occurring items in market basket analysis, customer behavior analysis, recommendation systems, and other domains where understanding associations between items is valuable.

6. Independent Component Analysis

Independent component analysis (ICA) is an unsupervised learning algorithm used to split a set of mixed signals into their original independent components or sources. ICA aims to find a linear transformation of the data that maximizes the statistical independence of the transformed components.

The key concept in independent component analysis (ICA) is statistical independence. ICA assumes that the source signals are mutually independent, meaning that knowing one source gives no information about another, which is a stronger condition than being uncorrelated. ICA separates the mixed signals into their original sources by exploiting this independence.

Independent component analysis (ICA) operates by iteratively finding a demixing matrix that linearly transforms the mixed signals into statistically independent components. The algorithm typically seeks to maximize the non-Gaussianity of the transformed components, because a mixture of independent signals tends to be closer to a Gaussian distribution than the individual sources are.

Independent component analysis (ICA) plays a crucial role in unsupervised learning, especially in blind source separation, signal processing, and feature extraction tasks. It is commonly used in areas such as image and speech processing, where it helps separate mixed signals or images into their constituent parts. ICA is particularly useful in scenarios where the sources or the mixing process are unknown or complex.

7. K-Nearest Neighbors (KNN)

K-Nearest Neighbors (KNN) is an instance-based learning algorithm that makes predictions based on the similarity of the input to the labeled data points in the training set. Given a new, unlabeled data point, KNN identifies the k nearest labeled data points (neighbors) in the feature space and assigns a label to the new point based on the majority vote of those neighbors.

The K-Nearest Neighbors (KNN) algorithm works by calculating the distance or similarity between data points in a feature space. It computes a distance, such as Euclidean distance or Manhattan distance, between the new data point and all the labeled data points in the training set. It then selects the k nearest neighbors based on the shortest distances. The label of the new data point is determined by the majority label of its k nearest neighbors.
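The distance-then-vote procedure can be sketched in a few lines of Python using only the standard library. The function name `knn_predict`, the toy points, and the labels "a" and "b" are assumptions made for illustration.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Label `query` by majority vote among its k nearest labeled points."""
    # Euclidean distance from the query to every labeled point.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbors.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote over the neighbors' labels.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Two well-separated toy groups, invented for illustration.
train_points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
train_labels = ["a", "a", "a", "b", "b", "b"]

prediction_near_a = knn_predict(train_points, train_labels, (1.1, 1.0), k=3)
prediction_near_b = knn_predict(train_points, train_labels, (5.0, 4.8), k=3)
print(prediction_near_a, prediction_near_b)
```

An odd value of k is a common choice for two-class problems because it avoids tied votes.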

K-Nearest Neighbors (KNN) classification itself relies on labeled data, but the same nearest-neighbor idea is applied in unsupervised clustering. Data points are grouped based on the distance or resemblance among them, with each data point assigned to the cluster represented by its k nearest neighbors.

8. Hierarchical Clustering

Hierarchical clustering is an unsupervised learning method that builds a hierarchical decomposition of the data by repeatedly merging or splitting clusters based on their similarity or dissimilarity. The output is a dendrogram that depicts the clusters’ hierarchical structure.

Hierarchical clustering has two major approaches, which are agglomerative (bottom-up) and divisive (top-down). Agglomerative clustering starts with every data point as its own cluster and iteratively merges the most similar clusters until a stopping criterion is satisfied. Divisive clustering starts with all data points in one cluster and recursively divides them into smaller clusters.
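The agglomerative (bottom-up) approach can be sketched as follows. This is a minimal illustration that assumes single linkage (the distance between the closest pair of members) and a target number of clusters as the stopping criterion. The function name `agglomerative` and the toy points are hypothetical, and the sketch does not build a full dendrogram.

```python
import math


def agglomerative(points, num_clusters):
    """Single-linkage agglomerative clustering; returns a list of clusters."""
    # Start with every point as its own cluster.
    clusters = [[p] for p in points]

    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(p, q) for p in a for q in b)

    # Stopping criterion (an assumption here): a target number of clusters.
    while len(clusters) > num_clusters:
        # Find the pair of clusters with the smallest linkage distance.
        i, j = min(
            ((i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters))),
            key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        # Merge cluster j into cluster i (j > i, so index i stays valid).
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


# Toy 2D points forming two visible groups, invented for illustration.
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 5.2)]
clusters = agglomerative(points, num_clusters=2)
print(clusters)
```

Other linkage rules, such as complete or average linkage, change only the `linkage` function. Recording each merge and its distance is what a dendrogram visualizes.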

Hierarchical clustering is widely used in unsupervised learning for various applications. It helps identify natural groupings or structures in the data and provides insights into the connections between data points. It is commonly employed in fields such as biology, social sciences, and market segmentation.

9. Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a statistical method that converts a high-dimensional dataset into a lower-dimensional representation. PCA aims to capture the greatest variance in the data by finding orthogonal directions, referred to as principal components, along which the data varies the most.

Principal Component Analysis (PCA) determines the principal components by computing the data’s covariance matrix and then identifying its eigenvectors. The directions of greatest variance in the data correspond to the eigenvectors with the highest eigenvalues. The selected eigenvectors define the coordinate axes of the lower-dimensional subspace.

The principal components are sorted in order of importance, with the first principal component capturing the most variance, followed by the second, third, and so on. The dimensionality of the data is reduced by selecting a subset of the principal components. The new feature space retains as much information as possible while decreasing redundancy and noise.
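The covariance-and-eigenvector procedure can be sketched without external libraries. The example below is a simplified illustration that finds only the first principal component, using power iteration as a stand-in for a full eigendecomposition. The function names and the toy data are assumptions.

```python
import math


def covariance_matrix(rows):
    """Return the sample covariance matrix and the mean-centered rows."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [
        [sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)]
        for a in range(d)
    ]
    return cov, centered


def first_principal_component(cov, iterations=200):
    """Approximate the eigenvector with the largest eigenvalue by power iteration."""
    d = len(cov)
    # Starting vector (an assumption): it must not be orthogonal to the answer.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # The Rayleigh quotient gives the eigenvalue, i.e. the variance along v.
    eigenvalue = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
    return v, eigenvalue


# Toy data lying close to the line y = x (values invented for illustration).
data = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8), (5.0, 5.0)]
cov, centered = covariance_matrix(data)
pc1, variance_along_pc1 = first_principal_component(cov)

# Project each centered point onto the first component: 2D -> 1D.
projected = [sum(c[j] * pc1[j] for j in range(len(pc1))) for c in centered]

# Share of the total variance captured by the first component.
total_variance = sum(cov[j][j] for j in range(len(cov)))
explained_ratio = variance_along_pc1 / total_variance
print(pc1, explained_ratio)
```

Because the toy points lie almost on a straight line, a single component captures nearly all of the variance, which is the situation in which dropping the remaining components loses little information.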

Principal Component Analysis (PCA) is an essential algorithm in unsupervised learning, particularly in data exploration, visualization, and feature extraction. It helps identify the most informative features and reduces the computational complexity of subsequent analysis. PCA is used as a pre-processing step for clustering, classification, or anomaly detection algorithms.

10. Gaussian Mixture Models (GMM)

A Gaussian Mixture Model (GMM) is a probabilistic model used in unsupervised learning for clustering and density estimation. A GMM represents the data as a mixture of Gaussian distributions, where every Gaussian component represents a cluster in the data.

Gaussian Mixture Models (GMM) assign a probability to every data point, indicating the likelihood of it belonging to each Gaussian component. The probabilities are calculated using Bayes’ theorem and the model parameters. The data point is normally assigned to the Gaussian component with the highest probability.

The parameters of a Gaussian Mixture Model (GMM) are estimated using the Maximum Likelihood Estimation (MLE) approach, typically with the Expectation-Maximization (EM) algorithm. The algorithm iteratively updates the model parameters to maximize the likelihood of the observed data given the model.
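The iterative estimation can be sketched for one-dimensional data with the Expectation-Maximization (EM) procedure, a common way to carry out maximum likelihood estimation for mixture models. The function names, the toy data, and the starting parameters below are assumptions chosen for illustration.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1D Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_gmm_1d(data, means, variances, weights, iterations=50):
    """Fit a 1D Gaussian mixture with EM, starting from the given parameters."""
    k = len(means)
    means, variances, weights = list(means), list(variances), list(weights)
    for _ in range(iterations):
        # E-step: probability of each component given each point (Bayes' theorem).
        resp = []
        for x in data:
            joint = [weights[j] * gaussian_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(joint)
            resp.append([p / total for p in joint])
        # M-step: re-estimate the parameters from the weighted points.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            variances[j] = max(
                sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj,
                1e-6,  # floor that keeps a component from collapsing to zero width
            )
    return means, variances, weights, resp


# Toy data drawn around 1.0 and 5.0 (values invented for illustration).
data = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
means, variances, weights, resp = fit_gmm_1d(
    data, means=[0.0, 6.0], variances=[1.0, 1.0], weights=[0.5, 0.5]
)

# Hard assignment: pick the component with the highest probability per point.
assignments = [max(range(len(r)), key=lambda j: r[j]) for r in resp]
print(means, weights, assignments)
```

EM converges to a local optimum, so the result depends on the starting parameters. Practical implementations usually try several initializations.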

Gaussian Mixture Models (GMM) have several applications in unsupervised learning, including clustering, density estimation, and data generation. They automatically discover the underlying structure of the data and identify clusters or modes of the data distribution.

How is Unsupervised Learning used in AI?

Unsupervised learning is used in AI in several ways.

Unsupervised learning algorithms are used to learn informative features from unlabeled data. The learned features are then used as inputs for supervised learning models, enhancing their performance. Techniques like Restricted Boltzmann Machines (RBMs) and Deep Belief Networks (DBNs) are commonly used for unsupervised feature learning.

Unsupervised learning is applicable to domain adaptation, where models trained on one domain are adapted to perform well on a different, but related, domain. Unsupervised learning algorithms help in transferring knowledge and adapting models to new tasks or environments by leveraging the unlabeled data from the target domain.

Unsupervised learning techniques are utilized for data preprocessing tasks like data cleaning, imputation of missing values, and outlier detection. The algorithms help improve the quality and reliability of the dataset used for subsequent tasks by analyzing the patterns in the data.

Unsupervised learning is applied in clustering, where the algorithms group data points based on their inherent similarities. Clustering is useful for various applications, such as customer segmentation, anomaly detection, document clustering, and image segmentation. Algorithms like k-means, hierarchical clustering, and Gaussian mixture models are commonly used for clustering.

Unsupervised learning is employed to help decrease the number of variables or features in a dataset while preserving important information. Dimensionality reduction is beneficial when dealing with high-dimensional data, as it simplifies the data representation and reduces computational complexity. Principal Component Analysis (PCA) and t-SNE (t-Distributed Stochastic Neighbor Embedding) are popular dimensionality reduction techniques.

How is Unsupervised Learning Used in AI Newsletter?

Unsupervised learning is used in AI newsletters in several ways.

Firstly, unsupervised learning is used in topic modeling, where algorithms such as Latent Dirichlet Allocation (LDA) or Non-negative Matrix Factorization (NMF) are applied to extract latent topics or themes from a collection of articles or texts. The algorithms analyze the textual content and identify underlying topics, enabling the newsletter to categorize articles based on the discovered themes.

Secondly, unsupervised learning is used in clustering and similarity analysis, where algorithms such as k-means or hierarchical clustering group similar articles or topics together based on their content or other features. It helps in organizing the newsletter by identifying related articles or providing different sections based on the discovered themes.

Thirdly, unsupervised learning techniques are useful for anomaly detection, as they are employed to identify unusual or outlier articles that deviate from the typical content of an AI newsletter. It helps the newsletter identify articles that are significantly different or novel compared to the regular topics covered.

Lastly, unsupervised learning powers recommender systems, where methods such as collaborative filtering are used to recommend relevant articles or related content to readers based on their history or preferences. It enhances the personalization and engagement of the newsletter.

What are the advantages of Unsupervised Learning?

The advantages of Unsupervised Learning are listed below.

- Ability to learn from unlabeled data: Unsupervised learning enables machines to learn from unlabeled data, which is often more abundant and easier to obtain compared to data with labels. The flexibility enables the utilization of vast amounts of unlabeled data available in many domains, leading to potentially richer and more comprehensive models.

- Discovery of hidden patterns and structures: Unsupervised learning algorithms are adept at discovering hidden patterns, structures, and relationships in the data. The algorithms uncover clusters, anomalies, or latent factors that are not explicitly defined or known beforehand by exploring the inherent structure of the data. It provides valuable insights and drives decision-making processes.

- Data exploration and preprocessing: Unsupervised learning techniques, such as clustering and dimensionality reduction, enable analysts and data scientists to explore and understand the data better. Clustering algorithms group similar instances together, helping to identify natural groupings or segments in the data. Dimensionality reduction techniques reduce the dimensionality of the data while retaining important features, making subsequent analysis more efficient and effective.

- Data generation and augmentation: Unsupervised learning algorithms, particularly generative models such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), generate new synthetic data instances that resemble the training data. It is a valuable capability in data augmentation, where synthetic samples are generated to supplement the labeled data, leading to improved model performance and generalization.

- Independence from human-labeled data: Unsupervised learning frees the learning process from the need for labeled examples, reducing the dependency on human-labeled data. It is particularly advantageous in domains where obtaining labeled data is expensive, time-consuming, or simply not possible. It allows for autonomous learning and the discovery of insights without supervision.

- Anomaly detection and outlier identification: Unsupervised learning algorithms are effective in identifying anomalies or outliers in the data. The algorithms flag instances that deviate significantly from the norm, indicating potentially interesting or unusual events, by learning the normal patterns and structures. Anomaly detection has crucial uses in fraud detection, network intrusion detection, and quality control.
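The idea of learning what is normal and flagging deviations, mentioned in the last item above, can be sketched with a simple z-score rule. This is only one of many possible approaches. The function name `flag_outliers`, the threshold of two standard deviations, and the sensor readings are assumptions made for illustration.

```python
import statistics


def flag_outliers(values, threshold=2.0):
    """Return the values lying more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    return [v for v in values if abs(v - mean) > threshold * stdev]


# Toy sensor readings with one obvious anomaly (values invented for illustration).
readings = [10.1, 9.8, 10.0, 10.2, 9.9, 10.0, 25.0, 10.1]
outliers = flag_outliers(readings)
print(outliers)
```

No labels are involved: the notion of "normal" is estimated from the data itself, which is what makes the approach unsupervised.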

What are the disadvantages of Unsupervised Learning?

The disadvantages of Unsupervised Learning are listed below.

- Lack of labeled data for evaluation: The absence of labeled data for evaluating the performance of the model is one of the main drawbacks of unsupervised learning. It is difficult to objectively assess the quality and accuracy of the learned representations or clusters. Evaluation in unsupervised learning normally relies on subjective judgments or domain knowledge, which introduces biases or limitations.

- Ambiguity and subjectivity: Unsupervised learning is inherently ambiguous and subjective. The discovery of patterns or structures in the data depends on the choice of algorithms, parameters, or similarity measures. Different unsupervised learning algorithms yield different results, making it challenging to determine the “correct” or optimal solution. Interpretation of the discovered patterns is subjective and requires domain expertise.

- Difficulty in handling high-dimensional data: Unsupervised learning algorithms struggle with high-dimensional data due to the curse of dimensionality. The amount of available data decreases relative to the feature space’s size as the number of features or dimensions increases. It leads to issues such as increased computational complexity, sparsity of data points, and challenges in finding meaningful patterns or clusters.

- Limited guidance or explicit learning objectives: Unsupervised learning lacks explicit guidance or predefined learning objectives, unlike supervised learning. It makes it more challenging to steer the learning process toward specific goals or desired outcomes. The absence of explicit feedback or labels leads to ambiguity in what the model must learn and what constitutes success.

- Sensitivity to data preprocessing: Unsupervised learning algorithms are sensitive to the preprocessing and normalization steps applied to the data. Variations in scaling, feature selection, or handling missing data significantly impact the discovered patterns or clusters. Careful data preprocessing and feature engineering are necessary to obtain meaningful and reliable results.

- Scalability and computational complexity: Some unsupervised learning algorithms are computationally demanding, especially when dealing with large datasets or high-dimensional data. The complexity of the algorithms, such as clustering algorithms with quadratic time complexity, poses challenges in terms of memory requirements and computational efficiency.

What are examples of Unsupervised Learning applications?

There are numerous examples of unsupervised learning applications.

Customer segmentation is an application of unsupervised learning in which the algorithms group customers into distinct segments based on their purchasing patterns, behavior, demographics, or preferences. The information helps businesses tailor their marketing strategies, personalize recommendations, and optimize customer experiences.

Image clustering and retrieval is another application as unsupervised learning is used to cluster images based on similarities, such as colors, textures, or shapes. It enables tasks like image organization, content-based image retrieval, and automated image tagging.

Natural language processing (NLP) is another application, as unsupervised learning techniques are employed for tasks like text clustering, topic modeling, sentiment analysis, and word embeddings. The techniques help in organizing text corpora, extracting key themes, and understanding the semantic relationships between words.

Genetic sequencing is another field in which unsupervised learning is applied, as the techniques are used to analyze genetic data and identify patterns or clusters in genomic sequences. It aids in understanding genetic variations, identifying disease markers, and guiding personalized medicine.

Autonomous driving uses unsupervised learning in various aspects, such as object detection, scene understanding, and path planning. Unsupervised algorithms learn to recognize objects, predict road conditions, and make informed driving decisions by analyzing sensor data from cameras, lidars, and radars.

Is ChinAI Newsletter an example of Unsupervised Learning?

No, the ChinAI Newsletter is not an example of Unsupervised Learning. The ChinAI Newsletter is a curated newsletter written by Jeff Ding, which provides insights, analysis, and updates on the developments in artificial intelligence (AI) in China. It primarily involves human curation and analysis of information rather than utilizing unsupervised learning algorithms. It does not employ unsupervised learning for its curation or analysis procedure, even though the newsletter references or discusses unsupervised learning algorithms or methods.

The ChinAI Newsletter covers a wide range of topics related to artificial intelligence (AI) in China, including policy developments, technological advancements, and social implications. Its focus is not specifically on unsupervised learning, although the newsletter touches upon various AI techniques, including unsupervised learning.

What is the difference between Unsupervised and Supervised Learning?

The difference between unsupervised and supervised learning lies in the availability of labeled data and the learning objectives of the algorithms.

Unsupervised learning algorithms do not have access to labeled data during training. The algorithm discovers trends, structures, or connections in the data without human supervision or predetermined labels. Supervised learning systems have access to labeled data during the training phase. Labeled examples pair the input data with the corresponding target outputs or labels.

The main intent of unsupervised learning is to explore the data and search for hidden trends, groups, or correlations. The objective of the algorithm is to extract relevant information from data without requiring specified outputs or predictions. The goal of supervised learning is to learn a mapping or function that predicts the correct output or label for previously unseen inputs. The method is designed to generalize from labeled instances and generate good predictions on new, unlabeled data.

Unsupervised learning algorithms often yield clusters, association rules, or reduced-dimensional representations. The emphasis is on comprehending the data structure rather than making specific predictions or classifications. Supervised learning algorithms generate output in the form of predictions or classifications. They learn to map input data to the corresponding target outputs, allowing them to make predictions on new, unlabeled data.

Unsupervised learning is commonly used for tasks such as clustering, anomaly detection, dimensionality reduction, and data exploration. It helps identify hidden patterns or groupings in data without relying on explicit labels. Supervised learning is widely used in tasks such as regression (predicting continuous values), classification (assigning discrete labels), and pattern recognition. It is applicable in various domains, including image recognition, speech recognition, sentiment analysis, and recommendation systems.

Unsupervised and supervised learning are two completely different ways of machine learning, but they are both highly useful in training artificial intelligence.
