Supervised learning is a key concept in machine learning that entails training a model to make predictions or judgments using labeled training data. It is an area of artificial intelligence that seeks to replicate how people pick up information from experience and apply it to fresh circumstances.

Learning, in the context of supervised learning, is the process of teaching a model to spot patterns, make predictions, or take actions based on input data. A set of input-output pairs, referred to as labeled training data, is given to the model, with each input paired with its correct output. The objective is for the model to learn the underlying relationship between the inputs and outputs and to predict the outputs accurately for unseen inputs.

Supervised learning works by iteratively adjusting a model’s parameters to minimize the difference between predicted and true outputs on the labeled training data. An optimization method such as gradient descent is typically used, which updates the parameters in the direction that reduces the error between predicted and actual outputs. The procedure is repeated until the model’s error on the training set is acceptably low.

Supervised learning is essential in many real-world situations. It lets machines carry out operations, including image and audio recognition, natural language processing, fraud detection, and recommendation systems without the need for human participation. Supervised learning algorithms generalize from the training data and generate precise predictions on new, unobserved data by learning from labeled instances, increasing efficiency and improving decision-making processes.

Applications for supervised learning are found in many fields. It is used to diagnose medical disorders or forecast the course of diseases in the healthcare industry. It supports market trend analysis, anomaly detection, and investment forecasting in finance. It helps autonomous vehicles with object detection and path planning. It even supports sentiment analysis for social media monitoring, improves email spam screening, and drives tailored recommendations in streaming and e-commerce platforms.

One of the main strengths of supervised learning is that it applies to a wide range of problems and makes accurate predictions when given good-quality labeled training data. With simpler models, it also supports interpretability because the connection between inputs and outputs can be examined and understood. However, it depends on labeled data, which is difficult and time-consuming to collect. The accuracy and representativeness of the training data play a critical role in the model’s success. Supervised learning models can also struggle in new or unfamiliar settings when they overfit the training data, which reduces their ability to generalize.

Supervised learning is an effective and popular method in machine learning. It lets machines learn from cases with labels and make accurate predictions about data they haven’t seen yet. Supervised learning’s many uses and advantages mean it’s still at the forefront of AI research and development, helping to automate complicated processes across a wide range of industries.

What is Supervised Learning?

Supervised learning is a subfield of machine learning in which an algorithm learns to predict or classify new data based on labeled training examples. Each training example consists of an input, whose components are often called features or attributes, and an associated output, commonly called the target or label. The goal is to train a model that generalizes from the examples given and correctly predicts or classifies data it hasn’t seen before.

The labeled training data serves as a teacher in supervised learning, guiding the learning process. The algorithm learns by looking at the patterns and connections between the inputs and the outputs. It tries to find the underlying function or decision boundary that maps the inputs to the desired outputs. Once trained, the model applies the learned function to new, unlabeled data to produce predictions or classifications.

Supervised learning needs labeled training data to work. The labels are the ground truth, so they are used to judge how well the model is doing during training and to adjust its parameters to reduce prediction errors. Training entails iteratively modifying the model’s parameters to minimize a predefined loss or error function, so that its accuracy improves over time.

Supervised learning algorithms fall into different groups based on the type of result they predict. Classification algorithms are used when the result is one of a finite set of discrete categories or classes, such as deciding whether an email is spam or not. Regression algorithms are used when the result is a continuous value, such as when predicting the price of a house based on factors including location, size, and number of rooms.

What is the other term for Supervised Learning?

“Supervised machine learning” is another name for “supervised learning.” The term covers several methods and strategies for learning patterns from labeled data and is sometimes described as pattern labeling and analysis.

The word “supervised” emphasizes the role of guidance in the learning process. The system learns from labeled instances, each consisting of an input and its output label. This supervision helps the algorithm find patterns and correlations between inputs and outputs.

The phrase is used in academic writing, research papers, and technical discourse to describe the approaches and algorithms that fall under its purview. It helps set this kind of learning apart from others, such as unsupervised or reinforcement learning, which do not depend on labeled examples or direct supervision.

Practitioners and researchers use it to draw attention to algorithms that require labeled training data and operate under the supervision of known results. The terminology helps clarify the precise context and methods of discussing machine learning technologies.

What is the importance of Supervised Learning?

Supervised learning is central to machine learning and AI. It is the main way models are trained to make predictions and decisions from labeled training data.

Supervised learning automates difficult processes that would otherwise require human involvement. Supervised learning algorithms apply what they learn from labeled examples to new circumstances. This lets machines handle image and speech recognition, natural language processing, recommendation systems, and fraud detection. Supervised learning allows AI systems to evaluate massive volumes of data, detect patterns, and make informed decisions, improving efficiency and productivity across industries.

Supervised learning improves AI systems. Training models on data labeled by human specialists transfers expert knowledge to those systems. It allows machines to emulate human decision-making in specific fields and helps AI systems forecast, classify, and perform jobs that previously needed human judgment. With suitable models, supervised learning also supports interpretability by enabling analysis and explanation of the AI system’s decision-making process.

Autonomous vehicles, medical diagnostics, and tailored recommendations all rely on supervised learning. Supervised learning helps autonomous vehicles recognize objects and lanes and make decisions using real-time sensor data. In medical diagnostics, it helps predict disease outcomes, identify biomarkers, and improve patient care. In recommendation systems, supervised learning algorithms assess user preferences and historical data to adapt recommendations, improving user experience.

Supervised learning is a core part of machine learning and AI. It lets machines learn from labeled instances, automate operations, and make accurate predictions or decisions. Supervised learning allows AI systems to emulate human judgment on specific tasks, perform complex work, and advance numerous fields.

What is the goal of Supervised Learning?

Supervised learning aims to train a model to accurately predict or classify new data based on labeled training examples. The goal is to enable the model to generalize from labeled data and accurately predict unknown cases.

During training, supervised learning minimizes the difference between the model’s predicted outputs and the true outputs in the labeled training data by iteratively modifying the model’s parameters. An optimization method such as gradient descent adjusts the parameters based on the difference between predicted and true outputs.

Supervised learning algorithms learn patterns and correlations in labeled training data through iterative optimization. By examining input features and labels, the model identifies the relevant features and builds a functional relationship between inputs and outputs. The learned relationship allows the model to generalize its knowledge and accurately predict unseen examples.

Supervised learning’s success depends on many factors. The quality and representativeness of the labeled training data affect model performance. The model learns wrong patterns and produces false predictions if the training data is inadequate, biased, or contains errors. The diversity and complexity of the training data also limit what the model can learn.

Supervised learning achieves its purpose with high-quality and representative labeled training data. It has excelled in image classification, speech recognition, and natural language processing. It is vital to continuously analyze and enhance the model’s performance to ensure that it generalizes effectively to unforeseen examples and remains dependable in practical circumstances.

How does Supervised Learning work in Machine Learning?

Supervised learning helps machines learn patterns and correlations from labeled training data. Pairing input features with their output labels trains a model to make accurate predictions or classifications.

Supervised learning begins with labeled training data. Each training example has input features and a label. The input features describe the properties of the data, while the output label is the correct prediction or class for that input. Model training relies on this labeled data.

Supervised learning uses linear models, decision trees, support vector machines, or deep neural networks, depending on the problem. The type of data and the difficulty of the problem determine which model is most suitable.

The model trains on labeled examples. It makes predictions or classifications from the input features and compares them to the output labels. A loss or error function calculates the difference between predicted and actual outputs. The model then adjusts its internal parameters, for example using gradient descent, to reduce the error.

Optimization iteratively updates the model’s parameters to improve classifications or predictions. The model adjusts its parameters after each iteration until it achieves a suitable degree of accuracy on the training data. The model discovers data correlations and patterns to produce accurate predictions or classifications for new data.
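The training loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: it assumes a one-feature linear model, a mean squared error loss, and batch gradient descent. The function name `train_linear_model`, the learning rate, the epoch count, and the toy data are all hypothetical choices made for the example.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Difference between predicted and true outputs for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Move the parameters against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical labeled data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))
```

On this toy data the learned parameters approach the slope and intercept used to generate the labels. Real training loops usually add a held-out validation set and a stopping rule instead of a fixed number of epochs.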

Once trained, the model is used to predict or categorize brand-new, unlabeled data. The trained model produces predictions or classifications by applying the learned patterns and relationships to the input features of the unseen data.

What are the two Types of Supervised Learning?

Supervised learning problems are categorized into the two types listed below.

- Classification: Classification assigns categorical labels to new cases based on input features. The model learns a decision boundary or function to classify data, and each input instance receives a model-predicted class label. Some examples include spam detection, image classification, sentiment analysis, and disease diagnosis.

- Regression: Regression predicts continuous or numerical values. It learns a function that maps input features to a continuous target variable. Its tasks include forecasting sales, predicting stock prices, and estimating housing prices based on area, number of rooms, and location.

1. Classification

Classification means predicting categorical or discrete class labels for new instances based on their input features. Classification aims to learn a decision boundary or function that separates the classes in the data, allowing the model to assign the proper class label to previously unseen examples. It is accomplished by analyzing labeled training data, in which each sample is associated with a known class label. The model learns from the patterns and correlations between the input features and their corresponding class labels, allowing it to predict labels for new, unlabeled data.

Classification is critical because it allows machines to automate assigning class labels to distinct objects or instances. It has numerous uses, including image recognition, text classification, fraud detection, sentiment analysis, and disease diagnosis. Machines help in decision-making processes, extract essential insights, and solve complicated problems by effectively classifying data. It is critical in many real-world circumstances, contributing to efficiency, accuracy, and reliability across various sectors.

Email spam detection is an example of a classification job in which the model predicts whether an incoming email is spam based on its content and other factors. Another example is picture classification, in which the model recognizes and labels various objects or situations in photos, such as determining if an image contains a cat or a dog. Another example is text categorization, in which the model divides text documents into specified categories depending on their content, such as categorizing news stories into sports, politics, or entertainment. Classification algorithms are employed in medical diagnostics, where the model predicts whether disease exists based on patient symptoms and test findings.

2. Regression

Regression predicts continuous outcomes. It involves modeling the relationship between input features and a continuous target variable. Regression approximates the function that maps input data to output values and lets the model predict values for new inputs.

Regression analyzes labeled training data consisting of input features and continuous target values. The model fits a function that best describes the data by using the patterns in the input variables and their correlations with the target variable. The function is linear or nonlinear depending on the complexity of the relationship. The trained regression model predicts values for new data by applying the fitted function to the input features.

Regression supports the prediction of continuous target variables and the decisions based on them. It is used in the social sciences, healthcare, finance, and economics. Regression analysis helps identify key variables that impact the target variable, analyze their correlations, and make data-driven predictions. It is well suited to quantitative analysis, trend forecasting, estimation, and hypothesis testing.

Regression makes it possible to estimate the value of a property based on its location, size, number of rooms, and other criteria. Regression models predict product sales based on previous data, measure advertising’s impact on sales, and analyze economic indicators and stock market performance. In healthcare, regression models use clinical characteristics to predict patient outcomes such as illness progression and drug efficacy.

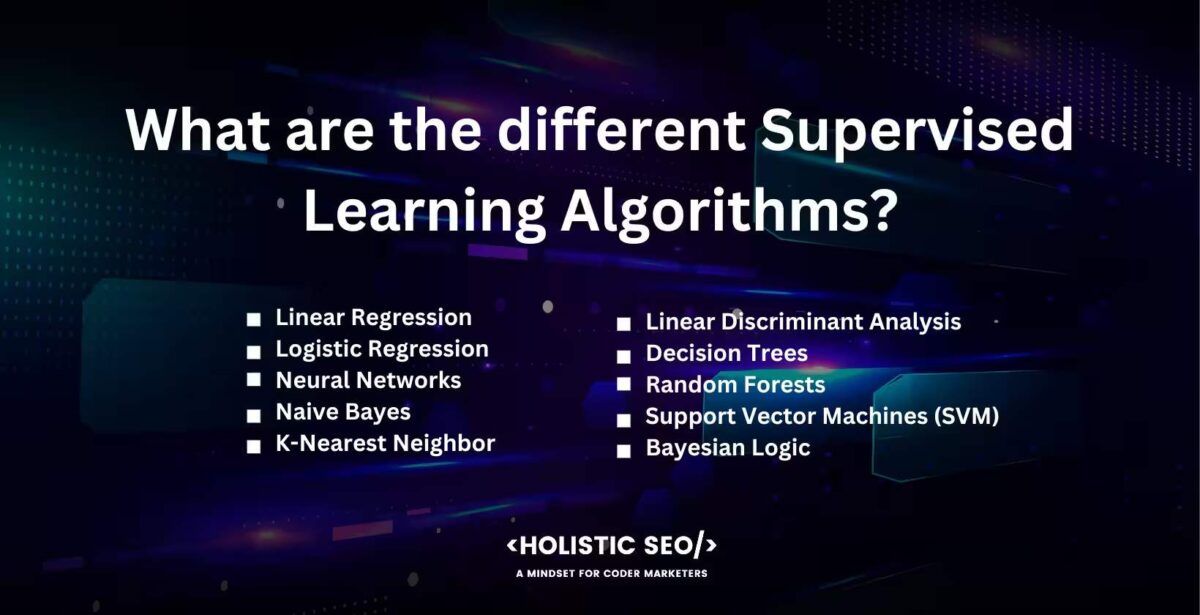

What are the different Supervised Learning Algorithms?

Supervised learning algorithms are a collection of computational approaches and techniques used to train models in supervised learning. The algorithms are designed to learn from labeled training data, with each example consisting of input attributes and associated output labels. Their purpose is to uncover patterns and relationships in labeled data and use them to produce accurate predictions or classifications for new, unseen cases.

Listed below are the different Supervised Learning Algorithms.

- Linear Regression: It is a basic approach that represents the connection between the input data and the target variable as a linear function. It seeks the best-fit line that minimizes the difference between predicted and actual values.

- Logistic Regression: It is used for binary classification tasks. It employs a logistic function to represent the relationship between the input features and the probability of belonging to a specific class.

- Neural Networks: They are a versatile family of algorithms inspired by the structure of the human brain. They are made up of interconnected nodes (“neurons”) that are organized in layers and are capable of learning complicated patterns and relationships.

- Naive Bayes: It is a probabilistic algorithm based on Bayes’ theorem. It assumes that the input features are independent of one another given the class and computes the probability of each class label given the features.

- K-Nearest Neighbor: It is a non-parametric algorithm that predicts new instances based on their resemblance to labeled training examples. For classification, it assigns the most common class label among the k nearest neighbors.

- Linear Discriminant Analysis: Linear discriminant analysis (LDA) is both a dimensionality reduction method and a classification algorithm. It projects the input features into a lower-dimensional space while maximizing the separation between classes.

- Decision Trees: It is a tree-like model of decisions and their consequences. It splits the data according to feature values and makes predictions by traversing the tree from the root to the appropriate leaf node.

- Random Forests: It is an ensemble method that combines several decision trees to make predictions. Each tree in the forest is trained on a random subset of the data and casts a vote for the final prediction.

- Support Vector Machines (SVM): It is a technique used for both classification and regression tasks. For classification, it finds the hyperplane that separates data points from distinct classes with the largest margin.

- Bayesian Logic: Bayesian networks, a type of probabilistic graphical model, use directed acyclic graphs to depict relationships between variables. They employ Bayesian inference to learn conditional dependencies and make predictions based on observed data.

1. Linear Regression

Linear regression predicts continuous or numerical values from input features. It assumes that there is a linear relationship between the input features and the target variable. It determines the best-fit line by minimizing the difference between the predicted values and the values that were actually observed.

Linear regression does this by calculating the coefficients of the linear equation that describes the relationship between the input data and the target variable. With a single input feature, the coefficients are the slope and the intercept. Ordinary Least Squares (OLS) is commonly used to calculate the coefficient values, since it minimizes the sum of the squared differences between the predicted and actual values.

The linear regression model calculates the predicted value for each input instance by multiplying the input features by their coefficients and adding the intercept term. Predictions for new cases are made by applying the learned coefficients to their features.
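For the single-feature case, the OLS coefficients have a closed-form solution, which the sketch below computes directly. The function names `fit_ols` and `predict_value` and the house-size data are hypothetical and chosen only for illustration. With several features, a linear algebra library would normally be used instead.

```python
def fit_ols(xs, ys):
    """Return (slope, intercept) minimizing the sum of squared errors."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums around the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_value(x, slope, intercept):
    """Apply the learned coefficients to a new input."""
    return slope * x + intercept


# Hypothetical data: house size in square meters and price in thousands.
sizes = [50.0, 70.0, 90.0, 110.0]
prices = [150.0, 200.0, 250.0, 300.0]
slope, intercept = fit_ols(sizes, prices)
estimate = predict_value(100.0, slope, intercept)
print(slope, intercept, estimate)
```

Because the toy data lies exactly on a line, the fitted line reproduces every training point. Real data would leave nonzero residuals.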

Linear regression predicts continuous variables in supervised learning. It offers a simple way to model the relationship between input features and the target variable. It is often used as a baseline against which more complex methods are compared.

Linear regression differs from other supervised learning methods in several ways. First, it assumes a linear relationship between input features and the target variable, which often does not hold in complicated datasets with nonlinear relationships. Neural networks and decision trees handle more complicated interactions. Second, classical linear regression inference assumes normally distributed residuals with constant variance, and violations of these assumptions affect the reliability of its estimates and predictions. Decision trees and random forests are non-parametric and make fewer assumptions about the data. Finally, linear regression’s interpretable coefficients show how each input feature impacts the target variable, in contrast to the opaque structure of neural networks.

2. Logistic Regression

Logistic regression is used for binary classification. It models how input features affect the probability of class membership. Despite its name, logistic regression is a classification method, not a regression method. It applies a logistic (sigmoid) function to a weighted combination of the input features. The logistic function converts any real-valued input to a value between 0 and 1, which is interpreted as the probability of the positive class. Maximum likelihood estimation is used to estimate the coefficients.

Logistic regression predicts the probability of positive class membership from input features. The instance is classified as positive if the predicted probability is above a preset threshold, which is typically 0.5, and negative otherwise. Logistic regression is essential in supervised learning, especially binary classification tasks. It is well suited to categorical target variables and class prediction. Logistic regression estimates class probabilities from input features in a straightforward manner.
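The prediction step can be sketched as follows. The weights and bias here are made-up values standing in for coefficients that would normally come from maximum likelihood training, which this sketch does not perform. The function names are likewise hypothetical.

```python
import math


def sigmoid(z):
    """Map any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(features, weights, bias):
    """Probability of the positive class for one instance."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def classify(features, weights, bias, threshold=0.5):
    """Return 1 (positive) if the probability is above the threshold, else 0."""
    return 1 if predict_probability(features, weights, bias) > threshold else 0


# Assumed, already-trained coefficients (illustrative values only).
weights = [1.5, -2.0]
bias = -0.5
print(classify([2.0, 0.5], weights, bias))  # weighted sum is 1.5, so positive
print(classify([0.0, 1.0], weights, bias))  # weighted sum is -2.5, so negative
```

Raising the threshold above 0.5 trades fewer false positives for more false negatives, which matters in applications such as fraud detection.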

Binary classification problems typically use logistic regression. It often serves as a benchmark against which more complicated algorithms are compared. Logistic regression’s interpretability lets one examine how each input feature affects the probability of the positive class. Logistic regression differs from other supervised learning algorithms in several ways. First, logistic regression is designed for binary classification, while linear regression predicts continuous values. Logistic regression models the link between input features and class probability, while linear regression models the target variable directly.

The form of the output differs as well. Logistic regression produces probabilities between 0 and 1, while linear regression produces unbounded continuous values. Logistic regression’s sigmoid function limits the output to the range between 0 and 1. Logistic regression assumes independent errors and a linear relationship between the log-odds of the outcome and the input variables. Violating these assumptions reduces prediction accuracy. Decision trees and support vector machines with nonlinear kernels handle nonlinear relationships and make fewer assumptions about the data.

3. Neural Networks

Neural networks are algorithms loosely modeled after the brain. They learn and represent complex data patterns and relationships. Neurons are organized into layers. Each neuron receives incoming signals, transforms them, and passes its output to the next layer. Neural networks learn patterns and correlations from labeled training data. They have input, hidden, and output layers. The input layer receives the input features, the hidden layers process them, and the output layer makes predictions or classifications.
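The flow of signals through the layers can be illustrated with a small forward pass. This sketch assumes a network with two inputs, one hidden layer of two neurons, one output neuron, and sigmoid activations throughout. The weights are arbitrary illustrative values, not trained ones, and training is omitted entirely.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Each neuron takes a weighted sum of its inputs, adds a bias, and applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = dense_layer(inputs, hidden_w, hidden_b)
    return dense_layer(hidden, output_w, output_b)


# Arbitrary, untrained parameters (assumptions for illustration).
hidden_w = [[0.5, -0.4], [0.3, 0.8]]  # one row of weights per hidden neuron
hidden_b = [0.0, -0.1]
output_w = [[1.2, -0.7]]              # a single output neuron
output_b = [0.05]

output = forward([1.0, 2.0], hidden_w, hidden_b, output_w, output_b)
print(output)
```

Each row of a weight matrix belongs to one neuron, so adding a neuron to a layer means adding a row and a bias.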

Neural networks reduce the discrepancy between predicted outputs and true labels in training data by adjusting neuron weights and biases. Backpropagation computes how much each weight and bias contributed to the error by propagating the error signal backward through the network, and the weights and biases are updated accordingly. Iterations continue until the model performs well.

Neural networks learn complex patterns and correlations from labeled data, making them essential to supervised learning. They excel at image recognition, natural language processing, and speech recognition on massive datasets. Neural networks handle high-dimensional data and capture intricate feature interactions by automatically extracting and representing features.

Neural networks changed computer vision, speech recognition, and natural language processing. They excel at image classification, object detection, machine translation, and sentiment analysis. They solve complicated supervised learning problems thanks to their flexibility and ability to learn from data.

Neural networks differ from other supervised learning methods in various ways. First, their structure and architecture are more sophisticated than linear regression or decision trees. The layers of neurons in neural networks capture and model complex interactions in the data.

Neural networks automatically learn features from data, in contrast to classic algorithms, which often require hand-crafted feature engineering. Training allows neural networks to learn feature representations and hierarchies, reducing the need for manual feature extraction. Neural networks excel at processing complex, nonlinear data, including images and sequences. They perform well in computer vision, natural language processing, and time series analysis because they detect subtle patterns and dependencies.

Training neural networks requires a lot of data and processing power. Their performance depends on choosing the right architecture and hyperparameters. Compared to linear regression, neural networks are complex black-box models, which makes them difficult to interpret.

4. Naive Bayes

Naive Bayes is a supervised learning technique based on Bayes’ theorem that presupposes feature independence. Despite its simplicity and naivety, it is widely employed for classification. Naive Bayes calculates the probability that an instance belongs to each class from the values of its features. Bayes’ theorem gives a class’s conditional probability given the input features. The “naive” assumption is that features are conditionally independent given the class.

Naive Bayes uses training data to estimate the probabilities needed for predictions. It calculates the prior probability of each class and the conditional probability of each feature value given the class. The predicted class label is the class with the highest posterior probability. Naive Bayes is a common choice in supervised learning, especially for classification. It handles high-dimensional data efficiently. Naive Bayes is typically used as a classification baseline.
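The estimation of priors and conditional probabilities can be sketched for categorical features as follows. This is a minimal illustration under stated assumptions: the function names and the tiny spam dataset are hypothetical, and add-one (Laplace) smoothing is an added choice, not something the text prescribes, used so that unseen feature values do not force a probability of zero.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count classes and per-class feature values from labeled rows."""
    class_counts = Counter(labels)
    # feature_counts[label][feature_index][value] -> number of occurrences
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    values_seen = defaultdict(set)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            feature_counts[label][i][value] += 1
            values_seen[i].add(value)
    return class_counts, feature_counts, values_seen


def nb_predict(row, model):
    """Return the class with the highest (unnormalized) posterior score."""
    class_counts, feature_counts, values_seen = model
    total = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = count / total  # prior probability of the class
        for i, value in enumerate(row):
            # Conditional probability with add-one smoothing (an assumption).
            numerator = feature_counts[label][i][value] + 1
            denominator = count + len(values_seen[i])
            score *= numerator / denominator
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical emails described by (contains_offer, has_attachment).
rows = [("yes", "no"), ("yes", "yes"), ("no", "no"),
        ("no", "yes"), ("yes", "no"), ("no", "no")]
labels = ["spam", "spam", "ham", "ham", "spam", "ham"]
model = train_naive_bayes(rows, labels)
print(nb_predict(("yes", "no"), model))
print(nb_predict(("no", "yes"), model))
```

With many features, implementations usually sum log probabilities instead of multiplying raw probabilities, which avoids numerical underflow.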

Naive Bayes’s simplicity and interpretability are advantages. It makes simple probabilistic predictions and lets people examine how features affect classification. Naive Bayes works best when the naive feature independence assumption holds or when training data is scarce.

Naive Bayes differs from other supervised learning algorithms in several ways. Naive Bayes presupposes feature independence, which rarely holds exactly in practice. The assumption results in inferior predictions when features are strongly interdependent. Decision trees and neural networks capture more complex feature interactions.

Naive Bayes is a generative probabilistic approach that models how features are distributed within each class, while logistic regression and support vector machines learn discriminative functions directly. Naive Bayes predicts class membership probabilities. Naive Bayes is computationally efficient and often requires less training data than other methods. However, imbalanced classes and feature independence violations degrade its performance.

5. K-Nearest Neighbor

K-Nearest Neighbor (KNN) is a supervised learning algorithm used for classification and regression. It classifies or predicts an instance based on its similarity, or proximity, to labeled examples in the training set. KNN assumes that instances with similar features belong to the same class or have similar output values.

K-Nearest Neighbor calculates the distance between the input instance and all instances in the labeled training data. Euclidean distance is the most popular distance metric. KNN then finds the k nearest neighbors, where k is a user-defined parameter. The input instance’s class label or output value is determined by majority voting (classification) or averaging (regression) among the k neighbors.

K-Nearest Neighbor makes predictions in classification problems using majority voting: the input instance receives the class label that is most common among its k nearest neighbors. In regression tasks, it predicts the output for the input instance as the average of the output values of its k nearest neighbors.
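Both prediction modes can be sketched with a brute-force neighbor search. The function names and the two-cluster toy data are hypothetical. Euclidean distance is used as in the description above, and ties in the vote are resolved by whichever label `Counter.most_common` returns first.

```python
import math
from collections import Counter


def k_nearest(train_points, query, k):
    """Indices of the k training points closest to the query (Euclidean distance)."""
    order = sorted(range(len(train_points)),
                   key=lambda i: math.dist(train_points[i], query))
    return order[:k]


def knn_classify(train_points, train_labels, query, k=3):
    """Majority vote among the k nearest neighbors."""
    neighbors = k_nearest(train_points, query, k)
    votes = Counter(train_labels[i] for i in neighbors)
    return votes.most_common(1)[0][0]


def knn_regress(train_points, train_values, query, k=3):
    """Average of the output values of the k nearest neighbors."""
    neighbors = k_nearest(train_points, query, k)
    return sum(train_values[i] for i in neighbors) / k


# Hypothetical training data forming two well-separated clusters.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
labels = ["a", "a", "a", "b", "b", "b"]
values = [10.0, 12.0, 11.0, 50.0, 55.0, 52.0]

print(knn_classify(points, labels, (1.2, 1.4)))
print(knn_regress(points, values, (1.2, 1.4)))
```

The full sort makes each prediction cost grow with the size of the training set, which is the prediction-time expense that KNN is known for.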

K-Nearest Neighbor is important in supervised learning, especially when the decision boundary or feature-output relationship is not well-defined or linear. It makes no assumptions regarding data distribution. It is flexible because of its simplicity and ability to do classification and regression tasks. It typically serves as a baseline for comparison with more complicated models. It captures local patterns and nonlinear interactions in small to intermediate datasets.

K-Nearest Neighbor has some significant differences from other supervised learning methods. KNN is a lazy learner: it doesn’t build a model during training. It memorizes the training instances and computes distances to them at prediction time. As a result, prediction is computationally expensive, especially with large datasets. Another difference is that KNN does not explicitly learn data patterns or correlations. Predictions are based only on the proximity of instances in feature space, so it is affected by outliers and noisy data points.

The number of neighbors, or k, must be chosen carefully in a KNN algorithm. A small k renders the model susceptible to noise, while a large k oversmooths and loses local patterns. KNN is also affected by high-dimensional feature spaces, where distance-based calculations become less meaningful, and by features measured on different scales. Feature scaling and dimensionality reduction are used to address these problems.
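Feature scaling matters for KNN because a feature with a large numeric range would otherwise dominate the distance. One common option, shown here as a sketch, is min-max scaling of every feature to the range 0 to 1. The function name `min_max_scale` and the sample rows are hypothetical, and other scalings such as standardization are equally valid choices.

```python
def min_max_scale(rows):
    """Rescale every feature (column) to the range 0 to 1."""
    columns = list(zip(*rows))
    lows = [min(column) for column in columns]
    highs = [max(column) for column in columns]
    scaled = []
    for row in rows:
        scaled.append([
            # A constant column has no spread, so map it to 0.0.
            (value - low) / (high - low) if high > low else 0.0
            for value, low, high in zip(row, lows, highs)
        ])
    return scaled


# Hypothetical rows: number of rooms and price, on very different scales.
rows = [[1.0, 1000.0], [2.0, 3000.0], [3.0, 5000.0]]
scaled_rows = min_max_scale(rows)
print(scaled_rows)
```

In practice, the minimum and maximum would be computed on the training data only and then reused to scale new instances.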

6. Linear Discriminant Analysis

Linear Discriminant Analysis (LDA) is a supervised learning technique for dimensionality reduction and classification. It seeks a linear combination of features that maximizes the separation between classes. It assumes input features have multivariate normal distributions and identical covariance matrices across classes.

Linear Discriminant Analysis maximizes class separation by projecting the high-dimensional feature space onto a lower-dimensional space. The projection maximizes between-class scatter relative to within-class scatter.

Linear Discriminant Analysis first calculates class mean vectors and covariance matrices. It then creates a between-class scatter matrix by computing the differences between each class mean and the overall mean and weighting them by the number of instances in each class. Summing the per-class scatter matrices yields a within-class scatter matrix. It then finds the eigenvectors and eigenvalues of the product of the inverse within-class scatter matrix and the between-class scatter matrix. The eigenvectors are the discriminant directions that maximize class separability. The eigenvalues show how much each discriminant direction contributes to separating the classes.
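For two classes, the procedure simplifies: the single discriminant direction is proportional to the inverse within-class scatter matrix applied to the difference of the class means, so no general eigenvalue solver is needed. The sketch below assumes this two-class special case with two features. The function names and data points are hypothetical.

```python
def mean_vector(points):
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]


def scatter_matrix(points, mean):
    """Sum of outer products of deviations from the class mean (2x2)."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - mean[0], p[1] - mean[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_direction(class_a, class_b):
    """Discriminant direction for two classes: inverse(S_W) times (mean_b - mean_a)."""
    mean_a, mean_b = mean_vector(class_a), mean_vector(class_b)
    s_a, s_b = scatter_matrix(class_a, mean_a), scatter_matrix(class_b, mean_b)
    # Within-class scatter is the sum of the per-class scatter matrices.
    s_w = [[s_a[i][j] + s_b[i][j] for j in range(2)] for i in range(2)]
    det = s_w[0][0] * s_w[1][1] - s_w[0][1] * s_w[1][0]
    inv = [[s_w[1][1] / det, -s_w[0][1] / det],
           [-s_w[1][0] / det, s_w[0][0] / det]]
    diff = [mean_b[0] - mean_a[0], mean_b[1] - mean_a[1]]
    return [inv[0][0] * diff[0] + inv[0][1] * diff[1],
            inv[1][0] * diff[0] + inv[1][1] * diff[1]]


def project(point, direction):
    """Reduce a 2D point to one dimension along the discriminant direction."""
    return point[0] * direction[0] + point[1] * direction[1]


# Hypothetical two-class data.
class_a = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]
class_b = [(6.0, 5.0), (7.0, 8.0), (8.0, 7.0), (7.0, 6.0)]
w = fisher_direction(class_a, class_b)
print([round(project(p, w), 3) for p in class_a])
print([round(project(p, w), 3) for p in class_b])
```

On this data, every projected point of the first class falls below every projected point of the second, so a single threshold on the one-dimensional projection separates them.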

Linear Discriminant Analysis is useful for supervised learning with numerous classes and for feature dimensionality reduction. It classifies data and decreases feature space dimensionality at the same time. It captures the most important class-separating information and improves downstream classification algorithms. It is used as a preprocessing step for classification methods, including logistic regression and support vector machines. Reduced feature dimensionality and improved class separability increase those algorithms’ efficiency and effectiveness.

Linear Discriminant Analysis has numerous differences from other supervised learning algorithms. LDA finds a linear combination of features that maximizes class separation rather than directly modeling the relationship between features and class labels, which is what logistic regression and decision trees do. It assumes multivariate normal distribution and identical covariance matrices across classes. LDA performs poorly if the assumptions are badly violated. Decision trees and SVMs make fewer data distribution assumptions.

Linear Discriminant Analysis reduces dimensionality by projecting the data onto a lower-dimensional subspace chosen using class labels, while principal component analysis (PCA) captures the directions of highest variance without class information. LDA, a linear classifier, performs poorly when class boundaries are nonlinear. In such cases, support vector machines with kernel functions or neural networks work better.

7. Decision Trees

Decision trees are supervised learning methods for classification and regression. They organize decisions and outcomes in a tree structure based on input features. Each internal node represents a test on a feature, and each leaf node holds a class label or output value.

Decision trees split the feature space recursively using input features. The algorithm builds the tree top-down, choosing at each step the feature that best partitions the data according to a criterion such as information gain or Gini impurity. Splitting continues until a stopping condition is met, such as reaching a maximum depth or all instances in a node belonging to the same class. Decision trees make predictions clear and interpretable in supervised learning. Their simplicity and capacity to perform classification and regression tasks make them a common baseline algorithm.
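A single splitting step can be sketched with Gini impurity as the criterion. The example assumes one numeric feature and candidate splits of the form "value <= threshold". The function names and data are hypothetical, and a full tree builder would apply this step recursively to each resulting partition.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure node, higher for mixed nodes."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Return (threshold, weighted_gini) for the best 'value <= threshold' split."""
    n = len(values)
    best = (None, gini(labels))  # no split: impurity of the unsplit node
    # The largest value is skipped because it would leave the right side empty.
    for threshold in sorted(set(values))[:-1]:
        left = [l for v, l in zip(values, labels) if v <= threshold]
        right = [l for v, l in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical feature values and class labels.
values = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
labels = ["no", "no", "no", "yes", "yes", "yes"]
print(gini(labels))                # 0.5 for a balanced two-class node
print(best_split(values, labels))  # (3.0, 0.0): both sides become pure
```

Information gain works the same way with entropy in place of Gini impurity.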

Decision trees capture complicated and nonlinear feature-target interactions. They handle numerical and categorical features and outliers. It handles missing data through surrogate splits or imputation. It reveals the most important decision-making aspects by measuring feature importance or relevance. They are used to prioritizing features.

Decision trees differ from other supervised learning methods in various ways. They organize decisions and outcomes hierarchically for clarity and interpretation, whereas neural networks and SVMs are often treated as black-box models. They partition the feature space by input features, capturing complicated and nonlinear relationships. Linear regression and logistic regression presuppose linearity and do not capture such relationships without additional feature engineering.

Overfitting occurs when decision trees get too complicated and capture noise or quirks in the training data. Pruning, stopping criteria, and ensembles such as random forests help reduce overfitting. Decision trees are also unstable: small changes in the training data can produce a very different tree structure. Combining numerous decision trees in algorithms such as random forests or gradient boosting makes predictions more accurate and consistent.

8. Random Forests

Random Forests make predictions by combining several decision trees. They are an ensemble learning strategy that reduces overfitting and increases generalization to improve decision tree performance and robustness. Random Forests are used for both classification and regression.

Random Forests use decision trees trained on diverse subsets of the training data and features. The approach uses bootstrapping, which randomly samples the training data with replacement, to build each decision tree. Each decision tree split considers a random selection of features. The ensemble therefore becomes random and diverse. Each decision tree in a Random Forest independently predicts the class label (classification) or output value (regression) for a given instance. The final prediction is the majority vote of the trees in classification or the average of their outputs in regression.
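
The bootstrapping, random feature selection, and majority voting described above can be sketched as follows. To keep the example short, each "tree" is a depth-1 stump, so choosing a random feature subset per tree is equivalent to choosing it per split; real Random Forest implementations grow deeper trees. All function names and the toy data are hypothetical.

```python
import random
from collections import Counter

def bootstrap_sample(rows, labels, rng):
    """Draw len(rows) examples with replacement."""
    idx = [rng.randrange(len(rows)) for _ in rows]
    return [rows[i] for i in idx], [labels[i] for i in idx]

def majority(labels):
    """Most common label in a list."""
    return Counter(labels).most_common(1)[0][0]

def train_stump(rows, labels, features):
    """Depth-1 tree: best threshold split among the given features."""
    best = None
    for f in features:
        values = sorted({x[f] for x in rows})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for x, y in zip(rows, labels) if x[f] <= t]
            right = [y for x, y in zip(rows, labels) if x[f] > t]
            l_lab, r_lab = majority(left), majority(right)
            errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:  # no valid split: fall back to a constant prediction
        label = majority(labels)
        return lambda x: label
    _, f, t, l_lab, r_lab = best
    return lambda x: l_lab if x[f] <= t else r_lab

def train_forest(rows, labels, n_trees=25, n_features=1, seed=0):
    """Train each stump on its own bootstrap sample and random feature subset."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample_rows, sample_labels = bootstrap_sample(rows, labels, rng)
        features = rng.sample(range(len(rows[0])), n_features)
        forest.append(train_stump(sample_rows, sample_labels, features))
    return forest

def forest_predict(forest, x):
    """Classification: majority vote over the individual tree predictions."""
    return majority([tree(x) for tree in forest])

# Hypothetical toy data with two well-separated classes
rows = [[1, 1], [2, 1], [1, 2], [2, 2], [8, 9], [9, 8], [8, 8], [9, 9]]
labels = ["low"] * 4 + ["high"] * 4

forest = train_forest(rows, labels)
print(forest_predict(forest, [0, 0]), forest_predict(forest, [10, 10]))
```

For regression, `forest_predict` would average the numeric outputs of the trees instead of taking a vote.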

Random Forests help address overfitting and increase generalization in supervised learning. They model complicated relationships and interactions between features and are much harder to overfit than individual decision trees.

Random Forests work well with high-dimensional data. They handle categorical and numerical features and are less sensitive to outliers and noisy data. Random Forests measure feature importance, revealing feature relevance in prediction. By averaging many decision tree predictions, Random Forests mainly reduce variance, which improves prediction accuracy, stability, and resilience.

Random Forests differ from conventional supervised learning techniques in several ways. They are an ensemble method that integrates many decision trees. Random Forests add randomization through bootstrapping and feature subset selection. The randomness promotes diversity among the trees, lowering overfitting and enhancing generalization. Individual decision trees tend to overfit because they fit the training data as closely as they can.

Random Forests estimate feature importance as a by-product of training, which many other methods do not provide directly. Random Forests require more computational resources and training time than individual decision trees due to their ensemble nature. The gains in performance and robustness often outweigh the computational costs.

9. Support Vector Machines (SVM)

Support Vector Machines (SVM) are supervised learning algorithms used for both classification and regression tasks. In classification, an SVM aims to find the hyperplane that separates instances of different classes with the largest margin. In regression, it fits a function that keeps as many instances as possible within a margin of tolerance around the prediction. SVMs handle linear and non-linear classification and regression problems.

Support Vector Machines (SVM) locate the hyperplane that best distinguishes classes or maximizes the margin, optionally after mapping the input data into a higher-dimensional space. The instances nearest to the hyperplane, called support vectors, determine its position. To identify the best hyperplane, the algorithm maximizes the margin between the support vectors and the decision boundary while minimizing misclassifications or regression errors. The optimization problem is solved with quadratic programming.

A kernel function implicitly maps input features into a higher-dimensional space when the classes are not separable by a linear hyperplane in the original space. It lets the SVM handle complex interactions with non-linear decision boundaries. The SVM predicts new instances based on their position relative to the learned hyperplane. Instances on one side of the hyperplane are assigned to one class, and instances on the other side are assigned to the other.
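
The implicit mapping performed by a kernel can be checked numerically. The sketch below is an illustration under assumptions, not part of any SVM library: it uses a degree-2 polynomial kernel on two-dimensional inputs and a hypothetical explicit feature map `poly2_features`, and shows that the kernel value equals the dot product in the higher-dimensional space without ever constructing that space. An RBF kernel is included for comparison.

```python
from math import exp, sqrt, isclose

def dot(a, b):
    """Ordinary dot product of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))

def poly2_kernel(x, z):
    """Degree-2 polynomial kernel: k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2

def poly2_features(x):
    """Explicit 3-D feature map matching poly2_kernel for 2-D inputs."""
    x1, x2 = x
    return [x1 * x1, sqrt(2) * x1 * x2, x2 * x2]

def rbf_kernel(x, z, gamma=0.5):
    """RBF kernel: exp(-gamma * squared Euclidean distance)."""
    return exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

x, z = [1.0, 2.0], [3.0, 0.5]

implicit = poly2_kernel(x, z)                           # computed in 2-D
explicit = dot(poly2_features(x), poly2_features(z))    # computed in 3-D

print(isclose(implicit, explicit))  # True
print(rbf_kernel(x, x))             # 1.0
```

The saving is small here, but the RBF kernel corresponds to an infinite-dimensional feature space, where the explicit computation is not possible at all.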

Support Vector Machines (SVM) handle complex decision boundaries and generalize well, especially when the number of features exceeds the number of instances. They work for binary and multiclass classification. They are used in image analysis, text classification, bioinformatics, and finance. They are powerful in supervised learning because they handle non-linear relationships and control overfitting through the margin and its regularization parameter.

Support Vector Machines (SVM) differ from other supervised learning algorithms in various ways. An SVM finds the hyperplane that maximizes the margin between classes, unlike decision trees and neural networks, which model the relationship between features and class labels in other ways. It implicitly maps data into a higher-dimensional space using kernel functions to accommodate non-linear relationships. The kernel allows complex decision boundaries without explicitly computing the higher-dimensional feature space.

Support Vector Machines (SVM) are a margin-based technique that maximizes the distance between the decision boundary and the support vectors. Because an SVM focuses on the margin, it is often less sensitive to outliers far from the boundary and more resilient to noise than logistic regression or k-nearest neighbors. The linear, polynomial, and radial basis function (RBF) kernels allow for flexible data modeling and decision boundaries. The choice of kernel function affects SVM performance and the patterns the model captures.

10. Bayesian Logic

Bayesian Logic uses probability to measure uncertainty in formal reasoning. It relies on conditional probability, Bayes’ theorem, and prior knowledge to update beliefs based on new data.

Bayesian logic begins with a prior belief, expressed as a probability distribution over an event or hypothesis. Bayes’ theorem calculates the posterior probability from the prior and new evidence. The posterior probability is the revised belief based on the evidence. Bayesian logic updates probabilities each time new evidence arrives. It combines prior knowledge with data-driven belief revision for rational decision-making under uncertainty.
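
A small worked example may make the update rule concrete. For a hypothesis H and evidence E, Bayes’ theorem gives P(H|E) = P(E|H)P(H) / (P(E|H)P(H) + P(E|not H)P(not H)). The sketch below applies the rule twice, feeding the first posterior back in as the next prior. The numbers (a 1% prior, a 90% true-positive rate, and a 5% false-positive rate) are made up for illustration.

```python
def bayes_update(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Posterior P(H | E) for a binary hypothesis via Bayes' theorem."""
    numerator = p_evidence_given_h * prior
    evidence = numerator + p_evidence_given_not_h * (1 - prior)
    return numerator / evidence

# Hypothetical numbers: 1% prior, 90% true-positive rate, 5% false-positive rate
prior = 0.01
after_one = bayes_update(prior, 0.9, 0.05)      # belief after one positive result
after_two = bayes_update(after_one, 0.9, 0.05)  # posterior reused as the new prior

print(round(after_one, 4))  # 0.1538
print(round(after_two, 4))  # 0.766
```

A single positive result raises the belief from 1% to about 15%, and a second independent positive result raises it to about 77%, which shows how evidence accumulates on top of a low prior.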

Bayesian logic estimates model parameters and makes predictions in supervised learning. Bayesian approaches incorporate prior information or beliefs into the model, which is advantageous when training data is scarce. Bayesian linear regression and Bayesian neural networks use Bayesian inference. It provides a principled way to estimate model parameters, quantify uncertainty, and make probabilistic predictions.

Bayesian logic differs from classical or propositional logic, which uses deductive reasoning and truth values. Bayesian logic updates beliefs and makes rational decisions under uncertainty using probability and inference. Bayesian approaches include prior information or beliefs, update probabilities depending on observed data, and produce probabilistic predictions with quantified uncertainties in supervised learning. Frequentist (non-Bayesian) methods estimate parameters using just the observed data.

Bayesian reasoning and procedures have benefits, but choosing a prior and selecting a model are difficult, and inference is often computationally demanding. The choice between Bayesian and non-Bayesian techniques depends on the task, the data, and the trade-offs between interpretability, flexibility, and processing resources.

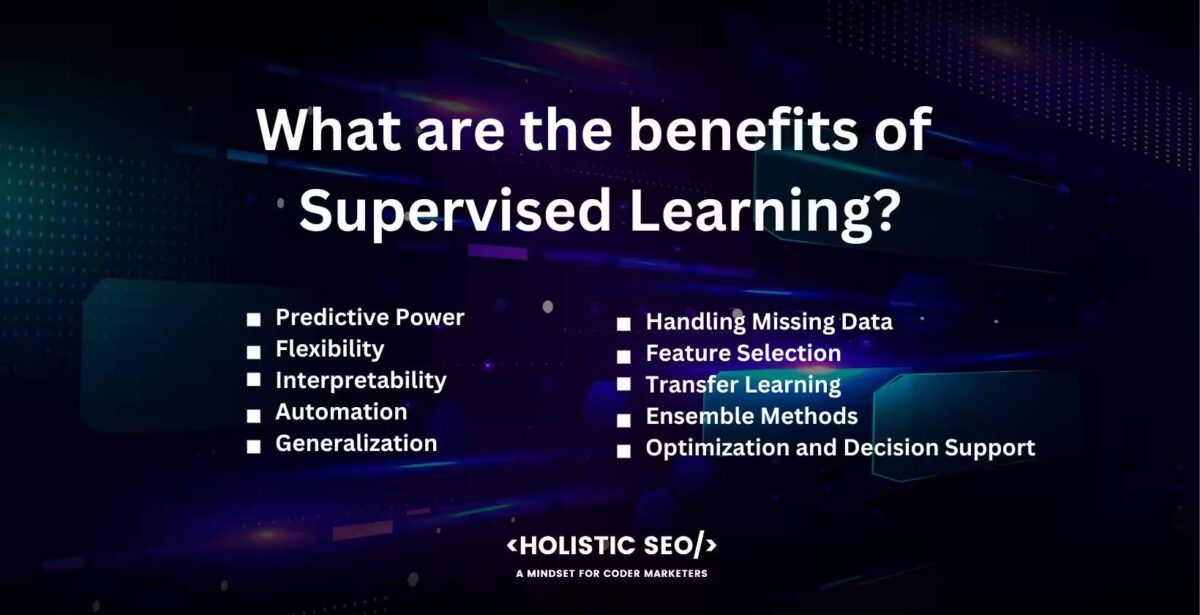

What are the benefits of Supervised Learning?

Listed below are the benefits of Supervised Learning.

- Predictive Power: Supervised learning algorithms learn from labeled training data and make accurate predictions or classifications on fresh, unseen instances. They provide precise predictions based on input attributes because they capture complex patterns and relationships in the data.

- Flexibility: Supervised learning algorithms handle a wide range of tasks, including classification, regression, and time series forecasting. They are adaptable across fields since they handle numerical and categorical data.

- Interpretability: Some supervised learning methods, such as decision trees or linear regression, offer models that are easy to understand. They enable a better understanding and interpretation of the findings by providing insights into how input features affect predicted outcomes.

- Automation: Trained models generate predictions or categorize new instances without human intervention. It saves time and resources in activities where manual analysis is too time-consuming or unfeasible.

- Generalization: The goal of supervised learning is generalization, transferring knowledge from training data to new situations. Models discover patterns and connections that are used to analyze fresh data and predict future or previously unseen events.

- Handling Missing Data: Supervised learning workflows manage missing data through imputation or by treating missingness as a separate category. It permits the use of incomplete datasets while limiting the loss of prediction accuracy.

- Feature Selection: Supervised learning algorithms help select the characteristics or variables that are most helpful for the prediction or classification task. The feature selection procedure boosts interpretability, decreases dimensionality, and improves model performance.

- Transfer Learning: Models trained on one task or domain are sometimes applied to, or fine-tuned for, related tasks or domains. It saves time and costs when labeled data for a certain task is scarce or expensive to collect.

- Ensemble Methods: Supervised learning supports ensemble approaches, such as random forests or gradient boosting, which combine many models to increase prediction accuracy and robustness. Ensemble approaches handle complicated relationships in the data and reduce overfitting.

- Optimization and Decision Support: Supervised learning models are used to streamline procedures and support informed decision-making across various areas. They help with crucial applications, including resource allocation, risk assessment, fraud detection, and medical diagnostics.

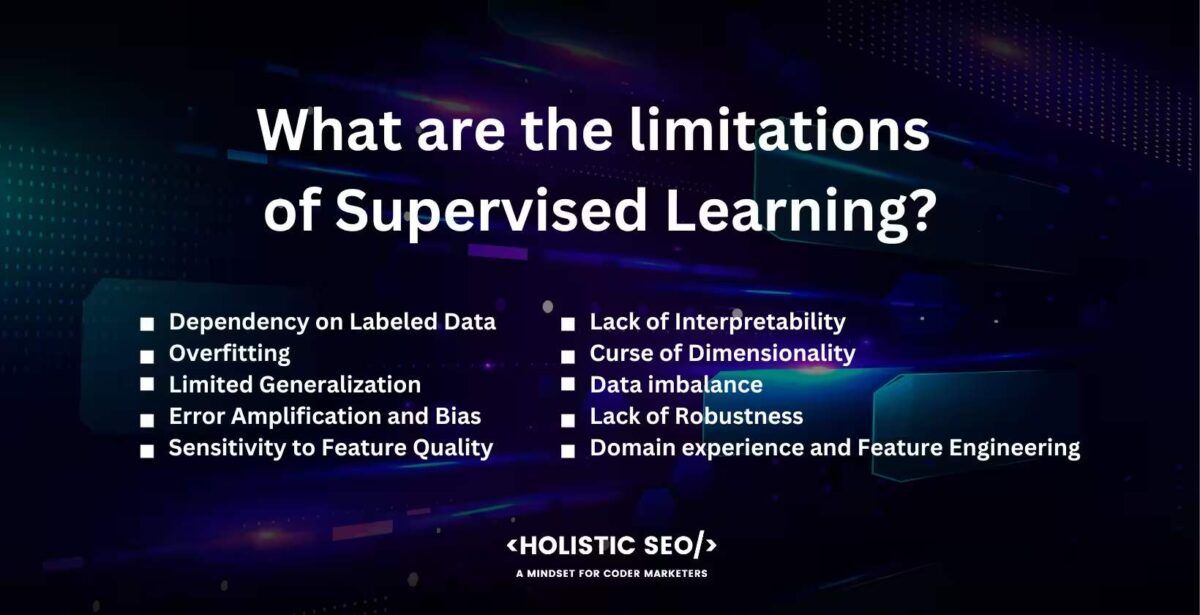

What are the limitations of Supervised Learning?

Listed below are the limitations of Supervised Learning.

- Dependency on Labeled Data: Supervised learning needs a lot of labeled training data, where each instance is linked to the output value or class label that corresponds to it. It is time-consuming, expensive, or impracticable in some domains to gather and label a significant amount of high-quality training data.

- Overfitting: Supervised learning models are prone to overfitting, particularly if they are overly complicated or if the training data is noisy or contains outliers. Overfitting is when a model fits the training data too closely, negatively affecting generalization and performance on new data.

- Limited Generalization: Supervised learning models frequently generalize well to examples similar to the training data but have difficulty with examples beyond the training data’s range. Models also miss complex relationships or patterns that are present in the data but poorly represented in the training set.

- Error Amplification and Bias: Supervised learning algorithms are biased if the training data is skewed or has inherent biases. Predictions made with biased training data reinforce preexisting societal or cultural biases. Inaccurate predictions result from errors or mislabeling in the training data that are propagated and magnified by the learning system.

- Sensitivity to Feature Quality: The quality and applicability of the chosen features significantly impact how well supervised learning models perform. The model’s accuracy is hampered if the features do not capture the information that matters for the task or contain irrelevant or noisy data.

- Lack of Interpretability: It is difficult to explain the learned correlations between features and predictions when using some supervised learning algorithms, such as neural networks or ensemble approaches, which are frequently referred to as “black-box models.” The lack of interpretability hinders confidence, comprehension, and acceptance of models in domains where interpretability is critical.

- Curse of Dimensionality: The curse of dimensionality refers to the exponential growth in data requirements as the dimensionality of the feature space increases. The performance of supervised learning models suffers in high-dimensional spaces due to data sparsity and the greater danger of overfitting.

- Data Imbalance: Supervised learning models find it difficult to learn effectively from the underrepresented class or to predict it correctly when the distribution of class labels or output values in the training data is significantly unbalanced. It results in inaccurate predictions and poorer performance on minority classes.

- Lack of Robustness: Supervised learning models are sensitive to minor changes in the training data, which lead to changes in the model’s predictions or results. Models are not very robust in real-world circumstances since they do not always generalize well to new or slightly modified cases.

- Domain Expertise and Feature Engineering: Supervised learning frequently necessitates domain expertise and manual feature engineering to find significant features and generate useful data representations. The ability of the data scientist or domain expert to create meaningful features determines the success of supervised learning models.
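
The data imbalance limitation in the list above is easy to demonstrate. The sketch below uses made-up labels with 5 positives out of 100 and compares a classifier that always predicts the majority class with one that catches most positives at the cost of some false alarms. The helper names are hypothetical. Accuracy looks strong for the first classifier even though it never finds a positive case, which is why precision, recall, and F1 are usually reported alongside accuracy on imbalanced data.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for the positive class (0.0 when undefined)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Made-up imbalanced labels: 5 positives, 95 negatives
y_true = [1] * 5 + [0] * 95

# Classifier A always predicts the majority class
always_negative = [0] * 100

# Classifier B finds 4 of the 5 positives but raises 10 false alarms
some_positives = [1, 1, 1, 1, 0] + [1] * 10 + [0] * 85

print(accuracy(y_true, always_negative))             # 0.95
print(precision_recall_f1(y_true, always_negative))  # (0.0, 0.0, 0.0)
print(accuracy(y_true, some_positives))              # 0.89
```

Classifier B has lower accuracy than classifier A but a recall of 0.8, so it is the more useful model whenever missing a positive case is costly.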

How to use Supervised Learning?

Listed below are the steps on how to use Supervised learning.

- First, Define the Issue. Define the problem to solve, whether it is a classification, regression, or another form of supervised learning task.

- Second, Gather Labeled Training Data. Gather a sizable, representative dataset with examples that have been labeled. Make sure the labels for each instance appropriately represent the desired results.

- Third, Prepare the Data. Clean the data by dealing with missing values, handling outliers, and scaling or normalizing the features as required. Divide the data into training and testing sets for evaluation.

- Fourth, Select a Suitable Algorithm. Choose an appropriate supervised learning algorithm based on the nature of the problem, the type of data, and the available resources. Consider factors such as performance, scalability, and interpretability.

- Fifth, Develop the Model. Input the training data into the selected algorithm, then train the model by tuning its internal parameters. Use techniques such as gradient descent or optimization strategies specific to the chosen algorithm.

- Sixth, Evaluate Model Performance. Use the testing set to evaluate the model’s performance. Measure metrics such as accuracy, precision, recall, or mean squared error based on the type of problem. Adjust the model or conduct tests with various hyperparameters if necessary.

- Seventh, Fine-tune the Model. Optimize the model’s performance by adjusting it. Find the ideal hyperparameters or configurations using cross-validation, grid search, or Bayesian optimization methods.

- Eighth, Make Predictions. Use the trained model to classify or predict fresh data that hasn’t been seen before. Ensure the input data is properly preprocessed and prepared for the model’s specifications.

- Ninth, Evaluate Predictions. Determine the accuracy of the model’s predictions by comparing them to the ground truth labels or the desired outputs. Calculate assessment metrics to assess the precision and dependability of the model.

- Tenth, Iterate and Improve. Re-examine the data, model, and evaluation findings while iterating on the process. Improve performance by modifying the features, changing the model architecture, or investigating ensemble techniques.

- Eleventh, Deploy and Monitor. Deploy the model in a production environment to make predictions in real time once its performance is satisfactory. Maintain accuracy by regularly assessing the model’s performance and updating the model as necessary.
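
The core of the steps above (prepare the data, train, evaluate, predict) can be sketched end to end with a deliberately simple model. The example below is a minimal illustration using only the Python standard library: it generates synthetic data around the line y = 3x + 1, holds out 20% for testing, fits a line by gradient descent on the mean squared error, and evaluates on the held-out set. The data, function names, learning rate, and epoch count are all assumptions chosen for the example.

```python
import random

def train_test_split(xs, ys, test_fraction=0.2, seed=42):
    """Shuffle indices and hold out a fraction of the data for testing."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_test = int(len(xs) * test_fraction)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    pick = lambda data, ids: [data[i] for i in ids]
    return pick(xs, train_idx), pick(ys, train_idx), pick(xs, test_idx), pick(ys, test_idx)

def fit_line(xs, ys, lr=0.1, epochs=5000):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w, b, n = 0.0, 0.0, len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mean_squared_error(ys, preds):
    """Average squared difference between targets and predictions."""
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

# Synthetic labeled data: y = 3x + 1 plus a small alternating disturbance
xs = [i / 20 for i in range(20)]
ys = [3 * x + 1 + (0.05 if i % 2 == 0 else -0.05) for i, x in enumerate(xs)]

x_train, y_train, x_test, y_test = train_test_split(xs, ys)
w, b = fit_line(x_train, y_train)
test_mse = mean_squared_error(y_test, [w * x + b for x in x_test])

print(f"w={w:.2f}, b={b:.2f}, test MSE={test_mse:.4f}")
print("prediction for x=0.5:", w * 0.5 + b)
```

In practice a library implementation would replace `fit_line`, and a separate validation set or cross-validation would be used for hyperparameter tuning so that the test set stays untouched until the final evaluation.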

How is Supervised Learning used in an AI Newsletter?

Supervised learning plays an important role in creating an AI newsletter, particularly in content recommendation, personalization, and user interaction. The email platform uses supervised learning algorithms to monitor user behavior, preferences, and interactions to customize the content and recommendations for specific subscribers.

Content recommendation is a typical application of supervised learning in an AI newsletter. The platform gathers information on user activity, such as click-through rates, reading duration, or article ratings. It uses that labeled information to train a recommendation model. The model forecasts and recommends articles, news, or resources that are expected to be of interest to each subscriber based on their previous interactions.

Personalization is another application of supervised learning in an AI newsletter. The platform uses user demographics, historical reading habits, and explicit feedback to tailor newsletters to specific tastes. The system produces newsletters customized to the preferences of each subscriber by training a model on labeled data that links user attributes with particular content categories or themes.

Supervised learning helps with churn prediction and user engagement. The platform trains a model to anticipate whether subscribers are going to churn or become disengaged by examining user behavior and historical data. It allows the AI newsletter provider to proactively target those subscribers with relevant offers or content to boost retention.

Supervised learning is very important in producing an AI newsletter. It is crucial to remember that additional methods, including natural language processing (NLP), sentiment analysis, or unsupervised learning, further enhance the whole system. Integrating several methodologies improves content curation and the user experience and raises the relevance and value of the newsletter for its subscribers.

How to use Supervised Learning to filter Email Spam?

Listed below are the steps to use Supervised Learning to filter Email Spam.

- First, Collect and Label Training Data. Compile a varied dataset of emails, both spam and legitimate (“ham”), and label each email as “spam” or “ham” according to its true status.

- Second, Preprocess Email Data. Remove extraneous data from the emails, such as irrelevant header fields or HTML markup. Convert the text into a format from which features are extracted and analyzed.

- Third, Extract Relevant Attributes. Find attributes in the email data that help tell spam from legitimate messages. Words, phrases, email headers, or structural characteristics are examples. Bag-of-words representation and Term Frequency-Inverse Document Frequency (TF-IDF) are frequent methods for feature extraction.

- Fourth, Divide the Data. Create training and test sets from the labeled email dataset. The supervised learning model is trained using the training set, and its effectiveness is assessed using the testing set.

- Fifth, Select a Suitable Supervised Learning Method. Pick a suitable supervised learning method for email spam filtering, such as logistic regression, support vector machines, or naive Bayes. Consider factors such as handling high-dimensional data, interpretability, and performance.

- Sixth, Develop the Model. Feed the selected supervised learning algorithm with the preprocessed email data and associated labels. Utilize the training set to train the model by refining its parameters.

- Seventh, Evaluate Model Performance. Evaluate the trained model’s performance using the testing set. Determine assessment measures such as accuracy, precision, recall, or F1 score to assess how well the model distinguishes between spam and real emails.

- Eighth, Adjust Model Parameters. Fine-tune the model by modifying its parameters or experimenting with various hyperparameter configurations. Finding the best options for the selected algorithm requires methods such as grid search or cross-validation.

- Ninth, Apply the Model to New Emails. Use the model to classify new, unseen emails as either legitimate or spam once its performance is satisfactory. Preprocess the new emails in the same manner as the training data before making predictions.

- Tenth, Update and Refine the Model Periodically. The model must be updated and refined to account for evolving spam methods and patterns. Monitor the model’s performance and gather user feedback to increase accuracy and reduce false positives and negatives.
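
The steps above can be sketched with a small naive Bayes classifier, one of the methods named in the fifth step. This is a minimal illustration under assumptions, not a production filter: the six training emails are invented, the features are plain bag-of-words counts, and the function names are hypothetical. Laplace (add-one) smoothing keeps a word that is unseen in one class from zeroing out that class’s probability.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens (bag-of-words)."""
    return re.findall(r"[a-z']+", text.lower())

def train_naive_bayes(emails, labels):
    """Count how often each word appears in each class."""
    class_counts = Counter(labels)
    word_counts = {c: Counter() for c in class_counts}
    for text, label in zip(emails, labels):
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab

def classify_email(model, text):
    """Pick the class with the highest log-probability under the model."""
    class_counts, word_counts, vocab = model
    total = sum(class_counts.values())
    scores = {}
    for c in class_counts:
        score = math.log(class_counts[c] / total)  # class prior
        denom = sum(word_counts[c].values()) + len(vocab)
        for w in tokenize(text):
            if w in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[c][w] + 1) / denom)
        scores[c] = score
    return max(scores, key=scores.get)

# Invented training emails with their labels
emails = [
    "win a free prize now",
    "free money click now",
    "claim your free prize",
    "meeting agenda for monday",
    "lunch at noon tomorrow",
    "project report attached for review",
]
labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

model = train_naive_bayes(emails, labels)
print(classify_email(model, "free prize inside"))      # spam
print(classify_email(model, "monday meeting agenda"))  # ham
```

A real filter would train on thousands of labeled emails, hold out a test set to compute precision, recall, and F1, and be retrained periodically as spam patterns change.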

Can Supervised Learning be Used in Email Marketing?

Yes, supervised learning is used in Email Marketing. Supervised learning benefits user experience, content, and email marketing methods. Marketers use supervised learning algorithms to examine email marketing data and customer behavior to develop data-driven projections. Understanding which campaigns are effective requires building a model from labeled data, such as email opens, clicks, or conversions.

Marketers use supervised learning to segment email lists according to demographics, preferences, and prior interactions. Personalized email messages targeted at specific customer groups boost engagement and conversion rates. Marketers also employ supervised learning to personalize content based on client information such as browsing history, shopping behavior, and email interactions. Dynamic email content increases engagement and revenue.

Predictive analytics for email marketing takes advantage of supervised learning. Marketers use customer data to forecast email open, click-through, and purchase probabilities. Email campaigns are optimized by identifying high-value consumers, optimizing send times, and customizing offers based on anticipated preferences.

Can Supervised Learning be used to drive traffic to a website?

No, supervised learning does not directly drive traffic to a website. Supervised learning alone is unable to increase website traffic. It is a machine learning technique that trains models to make predictions or categorizations based on labeled data, and it is not intended primarily to attract or direct visitors to a website.

Regression or classification models are supervised learning algorithms for user behavior prediction, content personalization, or marketing campaign optimization. They do not directly create or drive traffic to a website, but they offer insights into user preferences, segment users, or predict user behavior.

Other methods and strategies for increasing website traffic include search engine optimization (SEO), content marketing, social media marketing, online advertising, influencer alliances, and referral schemes. They center on boosting exposure, luring users, and promoting the website through focused marketing initiatives.

Supervised learning is used to evaluate user behavior, improve conversion rates, or customize the user experience on the website once traffic has been produced. Website owners or marketers improve user engagement, enhance content recommendations, or optimize user flows to boost conversions and retention by utilizing information from supervised learning models.

Can Supervised Learning be used to add voice commands to an AI?

Yes, voice commands are added to an AI system using supervised learning. A voice recognition model is trained with supervised learning methods to enable spoken commands in an AI system, and the trained model is then used for inference.

The supervised learning model is trained on labeled audio data, which consists of voice command audio samples paired with their labels. The model learns the audio patterns that match each speech command. Deep learning architectures, including recurrent neural networks (RNNs) and convolutional neural networks (CNNs), are commonly trained on the labeled data.

The trained model processes real-time voice commands during inference. The model takes audio input from a user and predicts the command based on learned patterns and features. The AI system then acts on the command.

Supervised learning allows the voice recognition model to generalize from training data to accurately recognize a wide range of spoken commands, including utterances it has not heard before. The model better recognizes voice commands from different people and surroundings with more diverse and representative training data.

What are examples of Supervised Learning applications?

There are numerous fields where supervised learning is used, including email spam filtering, image classification, credit scoring, medical diagnosis, and sentiment analysis.

The first example is Email Spam Filtering. Supervised learning algorithms are trained on labeled data to reliably categorize emails as either spam or legitimate “ham,” enabling efficient email spam filtering and preventing unwanted emails from reaching users’ inboxes.

The second example is Image Classification. It uses supervised learning techniques to sort images into predetermined categories. Models are trained to recognize objects, animals, or facial expressions in images, which makes applications such as object recognition and emotion detection achievable.

The next example is Credit Scoring. Supervised learning models examine historical credit data, including income, credit history, and financial records, to determine the probability that a consumer defaults on a loan. Lenders make informed decisions using the information, which helps with risk assessment and credit rating.

Another example is Medical Diagnosis. Supervised learning algorithms learn from labeled data, such as patient symptoms, medical records, and diagnostic outcomes. The resulting models aid in the diagnosis of diseases or ailments by helping to predict them.

Sentiment analysis is another example of a supervised learning application. It analyzes text data, such as customer reviews, social media posts, or survey replies, to ascertain whether the sentiment expressed is positive, negative, or neutral and how strong that sentiment is. Supervised learning is a key component of the process. Businesses learn more about the attitudes and opinions of their customers by using the data.

The examples highlight the wide range of supervised learning applications and the technology’s capacity to solve problems in several fields. Other applications for supervised learning include fraud detection, speech recognition, natural language processing, and recommendation systems.

Is the Exponential View Newsletter an example of Supervised Learning?

No, the Exponential View Newsletter is not an example of supervised learning. Azeem Azhar is the editor and author of Exponential View, a weekly email newsletter that covers subjects in technology, society, and the economy. It is a human-driven content curation and distribution process rather than an application or system that employs supervised learning algorithms.

Supervised learning is a machine learning technique that involves training models to generate predictions or classifications based on labeled data. It frequently appears in various applications, including sentiment analysis, picture recognition, and spam filtering. The content generation and delivery processes for the Exponential View Newsletter do not rely on supervised learning algorithms.

Azeem Azhar chooses and writes the newsletter’s material based on his own study, analysis, and curation. The selected content offers insights, research, and perspectives that spark discussion about the meeting point of technology and society.

Some email newsletters use supervised learning algorithms for personalization and content recommendation. Such features are more likely to be implemented in the infrastructure of the email delivery platform than in the content curation process for the Exponential View Newsletter.

What is the difference between Supervised and Unsupervised Learning?

Supervised and unsupervised machine learning employ distinct data-driven learning methodologies. A supervised learning algorithm is given labeled training data, where each instance is paired with a target or output value. The goal is to find a mapping function that uses the patterns in the labeled data to predict or categorize unseen instances. Unsupervised learning employs data without labels and has no target values. It discovers data patterns, structures, and relationships without using class labels or outputs. Unsupervised learning techniques, such as dimensionality reduction, generative modeling, and clustering, find groupings and latent components.

Listed below are the differences between supervised and unsupervised learning.

- Labeled vs. Unlabeled Data: Supervised learning uses labeled training data with known output values, while unsupervised learning uses unlabeled data to find patterns and structures without target values.

- Predictive vs. Descriptive: Supervised learning learns a mapping from input features to labeled outputs in order to predict or classify. Unsupervised learning describes the structure of the data without predicting a labeled target.

- Evaluation: Metrics such as accuracy, precision, and mean squared error are used to evaluate supervised learning models. Unsupervised learning evaluation uses cluster quality, cohesiveness, and visual inspection.

- Task Complexity: Supervised learning uses known output labels to structure and guide learning. Unsupervised learning is often more complicated, since it entails finding hidden patterns or structures without guidance.

- Data Availability: Supervised learning requires labeled training data, which is expensive or time-consuming to obtain. Unsupervised learning works with unlabeled data, making it better for situations with little labeled data.
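
The contrast in the list above can be sketched on the same six numbers. In the minimal example below, a nearest-neighbor classifier uses the labels to predict a class for a new value, while a two-cluster k-means procedure groups the values without seeing any labels. Both functions, the data, and the label names are hypothetical and chosen only for illustration.

```python
def nearest_neighbor_predict(train_x, train_y, x):
    """Supervised: return the label of the closest labeled training value."""
    i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
    return train_y[i]

def two_means_1d(values, iterations=10):
    """Unsupervised: k-means with k=2 on 1-D data, started at the min and max."""
    centroids = [min(values), max(values)]
    clusters = [[], []]
    for _ in range(iterations):
        clusters = [[], []]
        for v in values:
            # Assign each value to its nearest centroid
            nearest = 0 if abs(v - centroids[0]) <= abs(v - centroids[1]) else 1
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster (keep it if empty)
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

values = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
labels = ["small", "small", "small", "large", "large", "large"]

# Supervised: the labels guide the prediction for an unseen value
print(nearest_neighbor_predict(values, labels, 4.5))  # large

# Unsupervised: the same values are grouped with no labels at all
centroids, clusters = two_means_1d(values)
print(clusters)  # [[1.0, 1.2, 0.8], [5.0, 5.3, 4.9]]
```

The clustering recovers the two groups, but it cannot name them "small" and "large". Attaching meaning to the groups requires labels, which is what supervised learning consumes.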

Supervised and unsupervised learning each have advantages. Supervised learning helps make particular predictions or classifications with labeled data, while unsupervised learning helps understand data structures and gain significant insights when labeled data is scarce. Supervised and unsupervised learning are different learning approaches, yet both are helpful.
