This article is a tutorial that shows how to retrieve Google SERPs at regular intervals and animate the differences between them to see the Google Search Engine's preferences and algorithm experiments. In this article, we will use semantic search queries to understand the topical coverage and topical authority of certain domains. We will retrieve all of the related Search Engine Results Pages (SERPs) for these queries, crawl the landing pages, and blend the crawl data with Google's SERP dimensions and third-party page information to find different correlations and insights for SEO via Data Science.

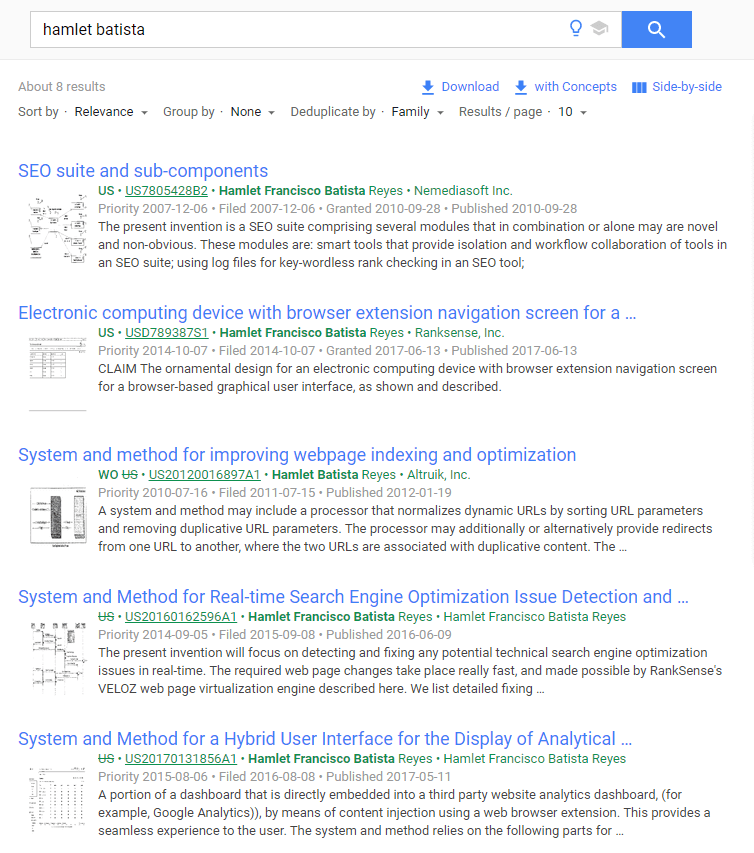

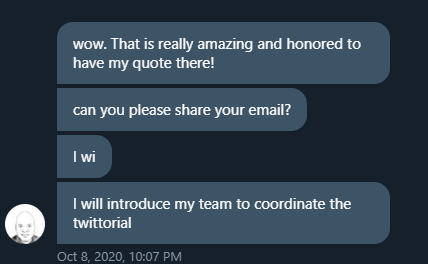

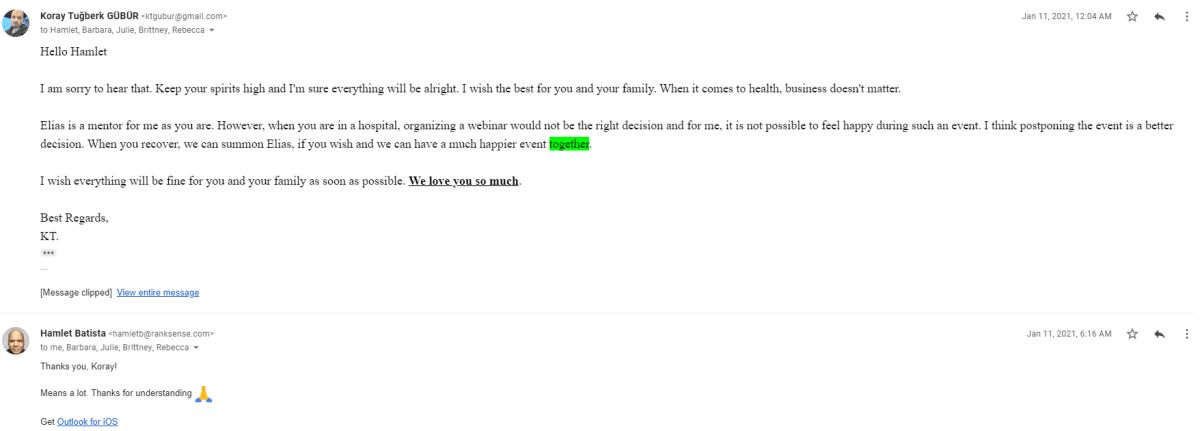

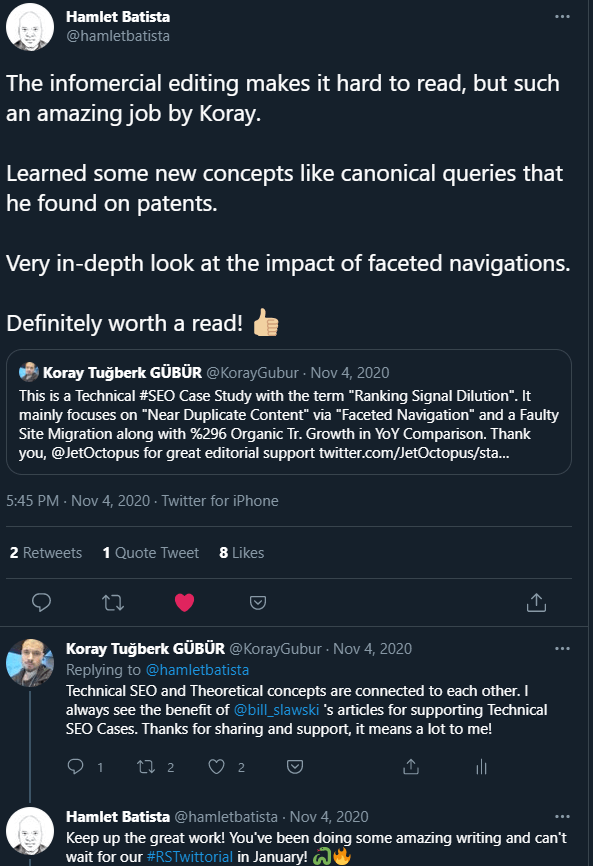

You can watch the related RankSense webinar, which was performed with Dear Elias Dabbas (a.k.a. the Professor) and Koray Tuğberk GÜBÜR. This whole Jupyter notebook was originally prepared in December for a planned, and later canceled, webinar with Genius and Dear Hamlet Batista.

The webinar recording is below.

This Data Science and Visualization tutorial is a comprehensive guideline for analyzing algorithms, Search Engines' decision trees, and the on-page, off-page, technical and non-technical features of landing pages, along with text analysis, SERP data insights, and topical search engine features. This mini Python and Data Science for SEO book has been written in memory of Dear Hamlet Batista. At the end of this educational SEO Data Science article, you will find a complete tribute to him.

If you want to support his family, you can join Lily Ray’s campaign: https://www.gofundme.com/f/in-memory-of-hamlet-batista

The Data Science and Visualization for SEO webinar has been published with Koray Tuğberk GÜBÜR and Elias Dabbas. You can find the webinar video below.

By examining the Data Science and Visualization for SEO Twittorial with RankSense, you can see a summary of the instructions and visualizations in this tutorial.

Once Elias Dabbas and Koray Tuğberk Gübür perform the Data Science for SEO webinar with RankSense and OnCrawl, the video of the webinar will be added here. If you want to learn what RankSense is, you can click the image below to support it.

I have been a Star Author at OnCrawl for the last 2 years, and I thank OnCrawl for all their support during my SEO journey. I also recommend checking OnCrawl's SEO and Data Science vision, which is supported by Dear Vincent Terrasi.

You can see the table of contents for the Data Science, Visualization and SEO tutorial/guideline below.

Connecting to the Google Custom Search API (Programmable Search Engine API)

To connect to the Google Custom Search API and retrieve Google Search Results with Python, you need three things.

- Google Developer Console Account

- Custom Search API Key

- Custom Search Engine ID

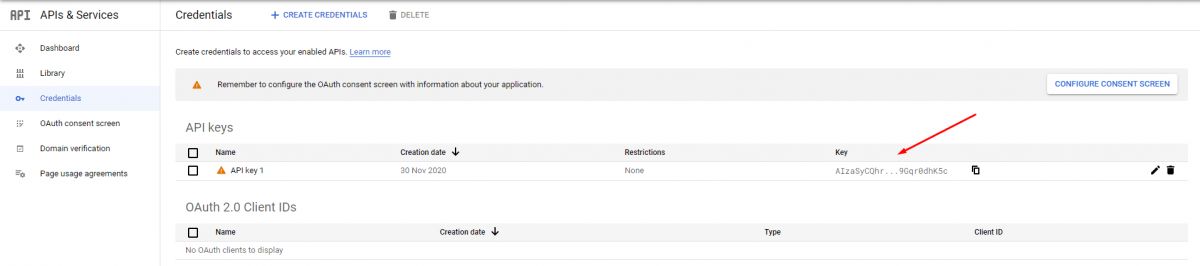

Below, you will see the section where you can create and copy a Custom Search API Key from Google Developer Console.

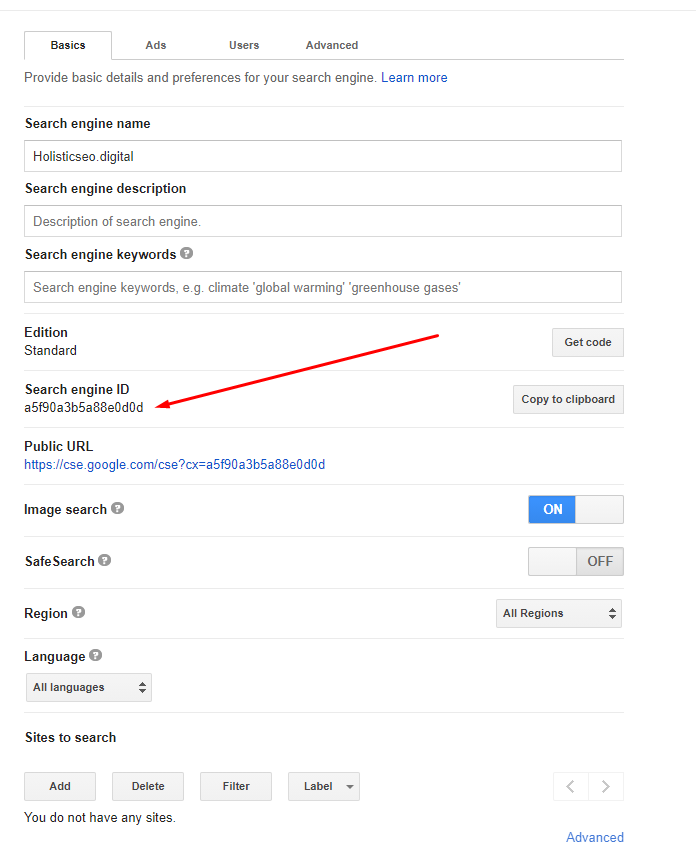

Below, you will see how to create and find your Custom Search Engine ID.

Note: I recommend not changing any settings in your Custom Search Engine, because they might affect the results that we will get from Google via Python's Advertools package.
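Advertools sends the requests to the Custom Search JSON API for you. To make the three requirements above concrete, here is a minimal sketch that only builds the HTTPS GET URL of a single request. `build_cse_url` is a hypothetical helper, and `key`, `cx`, `q`, `gl` and `start` are the API's query parameters as I understand them from Google's documentation; nothing is sent over the network.

```python
from urllib.parse import urlencode

# Endpoint of the Custom Search JSON API (the API behind Programmable Search Engine)
CSE_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_cse_url(api_key, cse_id, query, gl=None, start=1):
    """Return the GET URL for one Custom Search JSON API request.

    This helper only builds the URL; it does not send any request.
    """
    params = {"key": api_key, "cx": cse_id, "q": query, "start": start}
    if gl:
        params["gl"] = gl  # two-letter country code, e.g. "us"
    # urlencode() percent-encodes the values and turns spaces into "+"
    return f"{CSE_ENDPOINT}?{urlencode(params)}"


print(build_cse_url("YOUR_API_KEY", "YOUR_CSE_ID", "calories in pizza", gl="us"))
```

With a real key and engine ID, such a URL should return the JSON response that `serp_goog` turns into a data frame, which is also why the key should be kept out of shared notebooks.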

Using Google Custom Search API via Advertools to Retrieve the SERP Data

To use Google's Custom Search API via Advertools, you only need the three lines of code below.

import advertools as adv

api_key, cse_id = "YOUR API KEY", "YOUR CSE ID"

serp_df = adv.serp_goog(key=api_key, cx=cse_id, q="Example Query", gl=["us"])  # gl: two-letter country code

With these three lines of code, you can connect to the Google Custom Search API, use a custom search engine, and retrieve Search Engine Results Page data from Google as a Pandas data frame. Below, you will see a live example:

Retrieving the Search Engine Results Pages with Regular Periods with Python

To retrieve the Google Search Engine Results Pages at regular intervals, you will need a timer. In Python, there are different methods for timing a function call. Below, you will see a representative example.

import schedule

import advertools as adv

from datetime import datetime

api_key, cse_id = "YOUR API KEY", "YOUR CSE ID"

def SERPheartbeat():

    """

    Calls serp_goog for the determined queries; schedule it with the determined frequency.

    Creates a CSV output named after the date and time at which the function was called.

    """

    date = datetime.now()

    date = date.strftime("%d%m%Y%H_%M_%S")  # day, month, year, hour _ minute _ second

    df = adv.serp_goog(

        q=['Credit Calculation', "Insurance", "Medical Coding Services", "Lawyer", "Business Services"], key=api_key, cx=cse_id, gl=["us"], start=[1,11,21,31,41,51,61,71,81,91])

    df.to_csv(f'serp_recording_{date}__scheduled_serp.csv')

# Registers the job; schedule.run_pending() must be called in a loop to actually run it
schedule.every(10).minutes.do(SERPheartbeat)

With Python's "schedule" package, you can schedule Python function calls. In this example, we have created a function called "SERPheartbeat()". Below, you can see the explanation of the "SERPheartbeat()" function.

- In the first line, we have created a variable called "date" and assigned "datetime.now()" to it.

- In the second line, we have changed the format of the date to "Day, Month, Year, Hour, Minute and Second". Then, we have called our "serp_goog" function for the queries "Credit Calculation", "Insurance", "Medical Coding Services", "Lawyer" and "Business Services". These are among the most expensive queries on Google.

- We have extracted all of the results for the "United States" with the "gl" parameter.

- We have taken the first 100 search results with the "start" parameter and its values.

- We have assigned the result to the variable "df" and saved it as a CSV file.

- We have used the "schedule.every(10).minutes.do()" method to call our function every 10 minutes.
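The `start` values in SERPheartbeat() follow a simple pattern: each request returns at most 10 results, so page n starts at result 10 * (n - 1) + 1, and the API does not return results beyond the 100th. According to the advertools documentation, serp_goog sends one request for every combination of its list-valued parameters, so the sketch below (with the hypothetical helper `start_positions`) also counts how many requests one SERPheartbeat() call makes. That number matters because every request counts toward your Custom Search API quota.

```python
from itertools import product


def start_positions(total_results, page_size=10):
    """Return the 1-based `start` values that page through `total_results` results."""
    return list(range(1, total_results + 1, page_size))


queries = ["Credit Calculation", "Insurance", "Medical Coding Services", "Lawyer", "Business Services"]
starts = start_positions(100)
countries = ["us"]

# One API request per combination of query, start value and country
requests = list(product(queries, starts, countries))
print(starts)         # [1, 11, 21, 31, 41, 51, 61, 71, 81, 91]
print(len(requests))  # 50
```

At 50 requests every 10 minutes, a long recording consumes quota quickly, so it is worth checking your quota in the Google Developer Console before scheduling.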

There is also another method to call a function at regular intervals. You can see it below.

import time

n = 0

while n < 99:

    SERPheartbeat()

    time.sleep(1)  # seconds to wait before the next SERP retrieval call

    n += 1

A simple explanation of this code block is below.

- Set "n" to 0.

- While "n" is less than 99 (so that the function runs 99 times),

- Call the "SERPheartbeat()" function.

- Wait for the determined number of seconds (here, 1) before the next SERP retrieval call.

- Increase "n" by 1.
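The while loop above can also be written as a bounded for loop. In this minimal sketch, the function name `record_periodically` is hypothetical; it takes the job, the number of runs and the waiting time as arguments, so the same loop works for a 10-second test and a 10-minute recording, and it skips the pointless wait after the last call.

```python
import time


def record_periodically(job, runs, interval_seconds):
    """Call `job` `runs` times, waiting `interval_seconds` between calls.

    Returns the values returned by `job`, in call order.
    """
    results = []
    for run in range(runs):
        results.append(job())
        if run < runs - 1:  # no need to wait after the last call
            time.sleep(interval_seconds)
    return results


# Usage with the function above: record_periodically(SERPheartbeat, runs=99, interval_seconds=600)
```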

How to Unite All of the Outputs of SERP Retrieving Python Script?

There are various ways to unite multiple CSV or XLSX files in Python. The first method for uniting multiple CSV outputs is a little more mechanical; you can see it in the code block below.

import pandas as pd

df_1 = pd.read_csv("first_csv_output.csv")

df_2 = pd.read_csv("second_csv_output.csv")

df_3 = pd.read_csv("third_csv_output.csv")

df_4 = pd.read_csv("fourth_csv_output.csv")

united_serp_df = pd.concat([df_1, df_2, df_3, df_4])

united_serp_df.drop(columns="Unnamed: 0", inplace=True)  # the unnamed index column written by to_csv()

united_serp_df.set_index("queryTime", inplace=True)

The explanation of the first method is below.

- We have created four different variables for four different "pd.read_csv()" calls.

- We have united all of the data frames with the "pd.concat()" function and a list argument. ("DataFrame.append()" was deprecated and has been removed in pandas 2.0.)

- We have dropped an unnecessary column, "Unnamed: 0", which is the index that "to_csv()" writes.

- We have set "queryTime" as the index column of the united data frame. This is important because it will be used while animating the result differences.

Still, this is too many lines of code, and the number of data frames to be united will vary with the number of SERP retrieving calls. To avoid this, there is a more practical method with the "glob" module. You can see it below.

import glob

serp_csvs = sorted(glob.glob("*scheduled_serp.csv"))

serp_csv = pd.concat((pd.read_csv(file) for file in serp_csvs), ignore_index=True)

serp_csv = serp_csv.drop(columns="Unnamed: 0").set_index("queryTime")

- With "sorted(glob.glob("*scheduled_serp.csv"))", we have taken all of the CSV files whose names end with "scheduled_serp.csv" and sorted them by file name. This matches the recording order only within a single month, because the day comes first in the file name.

- We have concatenated all of the retrieved SERP files with "pd.concat()", "pd.read_csv()" and a generator expression, then set "queryTime" as the index for the animations.
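To sort the recordings by the time they were taken, regardless of how many months they span, one option is to parse the timestamp that SERPheartbeat() writes into each file name. This is a minimal sketch assuming file names like "serp_recording_2812202021_44_27__scheduled_serp.csv"; the helper `recording_time` is hypothetical. Alternatively, a year-first format such as "%Y%m%d_%H_%M_%S" makes plain alphabetical sorting chronological.

```python
import re
from datetime import datetime

# Matches the timestamp written by strftime("%d%m%Y%H_%M_%S"), e.g. "2812202021_44_27"
TIMESTAMP = re.compile(r"(\d{10}_\d{2}_\d{2})")


def recording_time(file_name):
    """Parse the recording time embedded in a SERP CSV file name."""
    match = TIMESTAMP.search(file_name)
    if match is None:
        raise ValueError(f"No timestamp found in {file_name!r}")
    return datetime.strptime(match.group(1), "%d%m%Y%H_%M_%S")


files = [
    "serp_recording_0101202109_00_00__scheduled_serp.csv",
    "serp_recording_2812202021_44_27__scheduled_serp.csv",
]
print(sorted(files))                      # alphabetical: 1 January 2021 comes first
print(sorted(files, key=recording_time))  # chronological: 28 December 2020 comes first
```

In the glob example, this would mean `sorted(glob.glob("*scheduled_serp.csv"), key=recording_time)`.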

How to Animate Search Result Differences Based on Periodic Search Engine Results Page Records

To animate the Search Engine Results Page ranking differences based on different domains, dates and queries, you can use Python's Plotly library with its "Express" sub-module. Below, you will see a code example and its explanation for using Plotly Express animations.

import plotly.express as px

query_serp = serp_csv[serp_csv['searchTerms'].str.contains("your query", regex=True, case=False)].copy()  # copy() avoids SettingWithCopyWarning

query_serp['bubble_size'] = 35

fig = px.scatter(query_serp, x="displayLink", y="rank", animation_frame=query_serp.index, animation_group="displayLink",

color="displayLink", hover_name="link", hover_data=["searchTerms","title","rank"],

log_y=True,

height=900, width=1100, range_x=[-1,11], range_y=[1,11], size="bubble_size", text="displayLink", template="plotly_white")

#fig['layout'].pop('updatemenus')

fig.layout.updatemenus[0].buttons[0].args[1]["frame"]["duration"] = 2500

fig.layout.updatemenus[0].buttons[0].args[1]["transition"]["duration"] = 1000

fig.update_layout(

margin=dict(l=20, r=20, t=20, b=20),

paper_bgcolor="white",

)

fig.show(renderer='notebook')

Most Plotly functions have many parameters, but they follow a consistent, semantic structure and logic. So, their documentation can be very long, but it is also human-readable. Below, you can find an explanation of this code block.

- In the first line, we have filtered one search term to create a ranking change animation for only that query. In this example, it is "your query", which stands for whichever query you want.

- In the second line, we have created a new "bubble_size" column, which sets the size of the bubbles that we will animate.

- In the third line, we have called the "px.scatter()" function with the data frame ("query_serp"), the x and y axes, the animation frame (our "queryTime" index), the text displayed on the data points, and the column that determines the color palette of the chart.

- In the fourth and fifth lines, we have used the "updatemenus" property to change the frame duration and the transition duration between frames.

- We have updated the layout of the chart for its background color and margins.

- We have shown our Plotly animation chart, which displays the SERP ranking differences as an animation, inline in the notebook.
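The animation shows the movement; if you also want it as numbers, one option is to pivot one query's recordings into a domain × snapshot rank matrix and take the difference between consecutive snapshots. In this minimal sketch, the toy data frame only mimics the `queryTime`, `searchTerms`, `displayLink` and `rank` columns of the serp_goog output, and the domain names are placeholders. `aggfunc="min"` keeps a domain's best rank when it ranks with several URLs.

```python
import pandas as pd

# Toy recording: two snapshots of the same query (values are made up for illustration)
records = pd.DataFrame({
    "queryTime": ["2020-12-28 21:44", "2020-12-28 21:44", "2020-12-28 21:54", "2020-12-28 21:54"],
    "searchTerms": ["calories in pizza"] * 4,
    "displayLink": ["www.a.com", "www.b.com", "www.a.com", "www.b.com"],
    "rank": [1, 2, 2, 1],
})

# One row per domain, one column per snapshot, cell = best rank in that snapshot
rank_matrix = records.pivot_table(index="displayLink", columns="queryTime", values="rank", aggfunc="min")

# Positive values mean the domain moved down (a larger rank number) since the previous snapshot
rank_change = rank_matrix.diff(axis=1)
print(rank_matrix)
print(rank_change)
```

With the real data, filter a single query first (as `query_serp` does above) and reset the index so that `queryTime` is a column again.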

Basically, we have now shown all of the steps for retrieving and animating Search Engine Results Pages. In the next section, you will see a practical example with real-world data.

Retrieving and Animating the Search Engine Results Page Ranking Changes to See the General Situation

First, we need to import the necessary libraries.

import schedule

import advertools as adv

import pandas as pd

import plotly.express as px

import glob

import time

from datetime import datetime

Define the Custom Search API Key and Search Engine ID as below.

cse_id = "YOUR CSE ID"  # Custom Search Engine ID

api_key = "YOUR API KEY"  # API Key for the Custom Search API of Google

Define the function that we need for recording the SERP.

def SERPHeartbeat():

    date = datetime.now()

    date = date.strftime("%d%m%Y%H_%M_%S")

    df = adv.serp_goog(

        q=['Calories in Pizza', "Calories in BigMac"], key=api_key, cx=cse_id)

    df.to_csv(f'serp{date}' + '_' + 'scheduled_serp.csv')

Call the related function with "schedule" and a while loop.

schedule.every(10).seconds.do(SERPHeartbeat)

# Keep the scheduler running: each pass runs the jobs that are due.
# Interrupt the kernel (or stop the script) to end the recording.
while True:

    schedule.run_pending()

    time.sleep(1)

OUTPUT>>>

2020-12-28 21:44:27,994 | INFO | __init__.py:465 | run | Running job Every 10 seconds do SERPHeartbeat() (last run: [never], next run: 2020-12-28 21:44:27)

2020-12-28 21:44:27,998 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:44:28,640 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:44:39,451 | INFO | __init__.py:465 | run | Running job Every 10 seconds do SERPHeartbeat() (last run: 2020-12-28 21:44:29, next run: 2020-12-28 21:44:39)

2020-12-28 21:44:39,452 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:44:40,074 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:44:50,872 | INFO | __init__.py:465 | run | Running job Every 10 seconds do SERPHeartbeat() (last run: 2020-12-28 21:44:40, next run: 2020-12-28 21:44:50)

2020-12-28 21:44:50,873 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:44:51,417 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:02,195 | INFO | __init__.py:465 | run | Running job Every 10 seconds do SERPHeartbeat() (last run: 2020-12-28 21:44:52, next run: 2020-12-28 21:45:02)

2020-12-28 21:45:02,196 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:02,737 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:13,553 | INFO | __init__.py:465 | run | Running job Every 10 seconds do SERPHeartbeat() (last run: 2020-12-28 21:45:03, next run: 2020-12-28 21:45:13)

2020-12-28 21:45:13,553 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:14,205 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:17,915 | INFO | __init__.py:465 | run | Running job Every 1 minute at 00:00:17 do SERPHeartbeat() (last run: [never], next run: 2020-12-28 21:45:17)

2020-12-28 21:45:17,916 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:18,680 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:25,531 | INFO | __init__.py:465 | run | Running job Every 10 seconds do SERPHeartbeat() (last run: 2020-12-28 21:45:14, next run: 2020-12-28 21:45:24)

2020-12-28 21:45:25,532 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:26,074 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:36,880 | INFO | __init__.py:465 | run | Running job Every 10 seconds do SERPHeartbeat() (last run: 2020-12-28 21:45:26, next run: 2020-12-28 21:45:36)

2020-12-28 21:45:36,880 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in Pizza, cx=7b8760de16d1e86bc, key=<redacted>

2020-12-28 21:45:37,712 | INFO | serp.py:698 | serp_goog | Requesting: q=Calories in BigMac, cx=7b8760de16d1e86bc, key=<redacted>

Up to this point, we have retrieved the related search results. Below, you will see the SERP difference animation phase of this tutorial.

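pizza_serp = serp_csv[serp_csv['searchTerms'].str.contains("pizza", case=False)].assign(bubble_size=35)  # one query, constant bubble size

fig = px.scatter(pizza_serp, x="displayLink", y="rank", animation_frame=pizza_serp.index, animation_group="displayLink",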

color="displayLink", hover_name="link", hover_data=["searchTerms","title","rank"],

log_y=False,

height=900, width=1700, range_x=[-1,11], range_y=[1,11], size="bubble_size", text="displayLink", template="plotly_white", title="Heartbeat of SERP for 'Calories in Pizza'", labels={"rank":"Rankings","displayLink":"Domain Names"})

#fig['layout'].pop('updatemenus')

fig.layout.updatemenus[0].buttons[0].args[1]["frame"]["duration"] = 500

fig.layout.updatemenus[0].buttons[0].args[1]["transition"]["duration"] = 1000

"""fig.update_layout(

margin=dict(l=20, r=20, t=20, b=20),

paper_bgcolor="white",

)"""

fig.update_xaxes(

title_font = {"size": 20},

title_standoff = 45)

fig.update_yaxes(

title_font = {"size": 20},

title_standoff = 45)

fig.show(renderer='notebook')

With "fig.update_xaxes" and "fig.update_yaxes", we have changed the title style of the x and y axes. Below, you can watch the animation of the SERP ranking changes.

As you can see, certain sources (websites and domains) regularly switch rankings with certain other types of sites. You can also check how these changes correlate with the sites' update frequency, link velocity, or Google's own algorithm updates. Below, you will see another visualization and animation example for the query "calories in BigMac".

bigmac_serp = serp_csv[serp_csv['searchTerms'].str.contains("bigmac", regex=True, case=False)].copy()  # copy() avoids SettingWithCopyWarning

bigmac_serp['bubble_size'] = 35

bigmac_serp  # display the filtered data frame

fig = px.scatter(bigmac_serp, x="displayLink", y="rank", animation_frame=bigmac_serp.index, animation_group="displayLink",

color="displayLink", hover_name="link", hover_data=["title"],

log_y=False,

height=900, width=1100, range_x=[-1,11], range_y=[1,11], size="bubble_size", text="displayLink", template="plotly_dark", title="Heartbeat of SERP for 'Calories in BigMac'", labels={"rank":"Rankings","displayLink":"Domain Names"})

#fig['layout'].pop('updatemenus')

fig.layout.updatemenus[0].buttons[0].args[1]["frame"]["duration"] = 450

fig.layout.updatemenus[0].buttons[0].args[1]["transition"]["duration"] = 1500

"""fig.update_layout(

margin=dict(l=20, r=20, t=20, b=20)

)"""

fig.update_xaxes(

title_font = {"size": 20},

title_standoff = 45)

fig.update_yaxes(

title_font = {"size": 20},

title_standoff = 45)

fig.show(renderer='notebook')

You can see the result below.

You can also plot the SERP changes from different dates vertically, without animation. You can see an example below.

Below, you will see an example of multiple SERP animations in one chart.

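# Assumption: filtered_serp holds the recorded SERPs of the queries you want to compare (replace the placeholder list)
filtered_serp = serp_csv.reset_index()

filtered_serp = filtered_serp[filtered_serp['searchTerms'].isin(["your first query", "your second query"])].copy()

# Assumption: queryDay is the calendar day of each recording, used as the animation frame
filtered_serp['queryDay'] = pd.to_datetime(filtered_serp['queryTime']).dt.strftime("%Y-%m-%d")

filtered_serp['bubble_size'] = 15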

fig = px.scatter(filtered_serp,

x="displayLink",

y="rank",

color="displayLink",

hover_name="link",

hover_data=["title","link","searchTerms"],

log_y=False, size="bubble_size", size_max=15,

height=1000,

width=1000,range_y=[0,10],

template="plotly_white",

animation_group="displayLink",

animation_frame="queryDay",

opacity=0.60,

facet_col="searchTerms",

facet_col_wrap=1,facet_row_spacing=0.01,

text="displayLink",

title="Multiple SERP Animating for the Ranking Differences",

labels={"rank":"Rankings", "displayLink":"Domain Names"})

#fig['layout'].pop('updatemenus')

"""

fig.update_layout(

margin=dict(l=20, r=20, t=20, b=20)

)"""

fig.update_yaxes(categoryorder="total ascending", title_font=dict(size=25))

fig.update_xaxes(categoryorder="total ascending", title_font=dict(size=25), title_standoff=45)

fig.layout.updatemenus[0].buttons[0].args[1]["frame"]["duration"] = 1500

fig.layout.updatemenus[0].buttons[0].args[1]["transition"]["duration"] = 2000

fig.show(renderer='notebook')

Below, you will see an example of multiple SERP animations at the same time. These Search Engine Results Page changes are more intense because they were retrieved on the 5th of December 2020, during Google's December 2020 Broad Core Algorithm Update.

Besides these, you can also animate the ranking changes of more than 10 SERPs, but after a point, the Plotly graphical interface breaks down. That's why I recommend animating the SERP changes of at most 4 queries across different dates at the same time. Below, you will see that I am animating the SERPs of more than 20 queries at the same time, and Plotly does not handle it very well.

This tutorial is not just about retrieving Search Engine Results Pages at regular intervals and animating the ranking changes across days or hours. It is also about using Data Science and Data Visualization to analyze Google's algorithms with data blending and analytical thinking. The next section will be about more Data Science for SEO.

Extracting Semantic Entities from Wikipedia and Creating Related Search Queries based on Semantic Search Intents

To extract semantic queries from the web and examine their SERPs with Data Science for SEO, we need to install and import the related Python modules and libraries as below.

#Install them if you haven't installed them before.

"""

!pip install advertools

!pip install spacy

!python -m spacy download en_core_web_sm

!pip install nltk

!pip install plotly

!pip install pandas

!pip install termcolor

!pip install seaborn

!pip install matplotlib

!pip install numpy

!pip install scikit-learn

"""

# string, collections, re, datetime, time and urllib.parse are part of Python's standard library, so they don't need to be installed.

import advertools as adv

from termcolor import colored

import pandas as pd

import plotly.express as px

import matplotlib.pyplot as plt

from matplotlib.cm import tab10

from matplotlib.ticker import EngFormatter

import seaborn as sns

import numpy as np

from nltk.corpus import stopwords

import datetime

import nltk

import spacy

from spacy import displacy

from collections import Counter

import en_core_web_sm

from nltk.stem.porter import PorterStemmer

import string

from urllib.parse import urlparse

import re

from sklearn.feature_extraction.text import CountVectorizer

import time

import plotly.graph_objects as go

from plotly.subplots import make_subplots

Below, you can find out why we need these Python libraries and modules.

- "Advertools" is for extracting Google Search Results, crawling landing pages, connecting to the Google Knowledge Graph, and taking word frequencies.

- "Termcolor" is for coloring the printed output so that we can distinguish different outputs.

- "Matplotlib" is for creating static plots.

- "Plotly" is for creating interactive plots and animating the frames in charts.

- "Matplotlib.cm.tab10" is a qualitative colormap for coloring different plots and plot sections.

- "Seaborn" is for finding and visualizing quick correlations.

- "Numpy" is for numerical operations alongside Pandas.

- "Pandas" is for aggregating, uniting and blending the data across different dimensions.

- "Spacy" is for Natural Language Processing.

- "NLTK" is also for Natural Language Processing, such as stop word lists and stemming.

- "Datetime" is for using dates in the related Python code blocks.

- "Re" is for using regex.

- "Sklearn" (scikit-learn) is for vectorizing the sentences from the landing pages' content with "CountVectorizer".

- "Displacy" is for visualizing the NLP relations (such as dependencies and entities) in the contents.

- "String" is for helping with data cleaning.

- "EngFormatter" is for formatting the axis tick labels of Matplotlib charts in engineering notation (for example, "10 k" instead of "10000").

- "PorterStemmer" is for stemming the targeted content blocks, that is, reducing words to their stems (which is different from lemmatization).

- "urlparse" (from "urllib.parse") is for parsing the URLs of the landing pages in the retrieved SERP.

Since this is a long and detailed tutorial, each of these modules and libraries is used for multiple purposes. In the second phase, you need to add the Custom Search Engine ID and the Custom Search API Key.

cse_id = "YOUR CSE ID"  # Custom Search Engine ID

api_key = "YOUR API KEY"  # Custom Search API Key

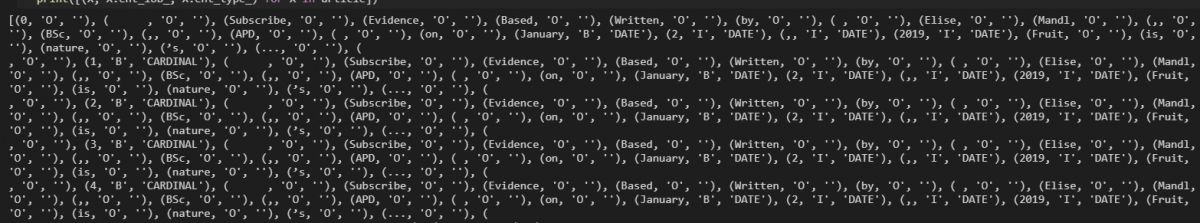

#I have used multiple IDs and API Keys in this tutorial.

In the third phase, you need to extract semantic queries from the web. Every topic has its own semantic queries. In this example, I have chosen "culinary fruits", and I have extracted the list of culinary fruits from Wikipedia with the help of Pandas' "read_html" method.

culinary_fruits_df = pd.read_html("https://en.wikipedia.org/wiki/List_of_culinary_fruits", header=0)

culinary_fruits_merge_df = pd.concat(culinary_fruits_df)  # read_html returns a list of data frames, one per HTML table

"header=0" means that the first row of each table is used as the header. With "pd.concat", I have concatenated all of the HTML tables into a single Pandas data frame, "culinary_fruits_merge_df". Now, we can generate some Google queries with these fruit names.

culinary_fruits_queries = ["calories in " + i.lower() for i in culinary_fruits_merge_df['Common name']] + ["nutrition in " + i.lower() for i in culinary_fruits_merge_df['Common name']]

culinary_fruits_queries

The explanation is below.

- We have used a list comprehension and the "str.lower()" method to create semantic queries.

- We have used "calories in X" and "nutrition in X" types of queries.

Below, you can see the result.

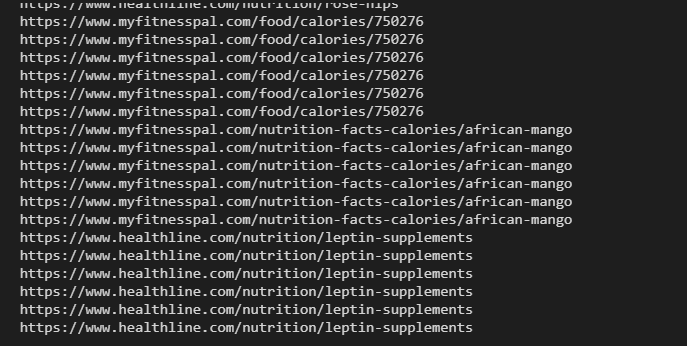

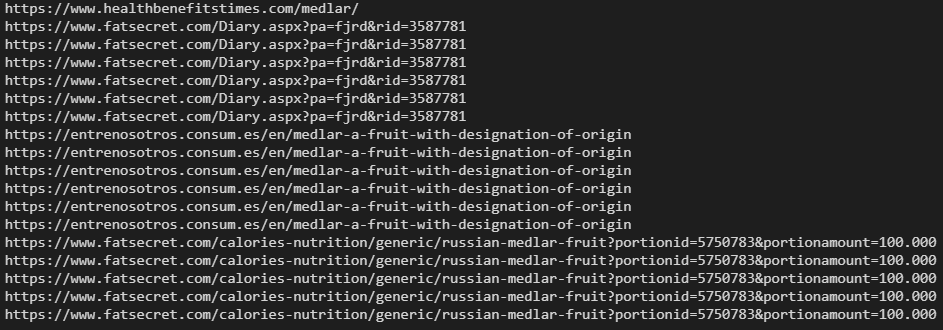
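The list comprehension above would fail if the "Common name" column contained empty cells (a NaN value has no ".lower()" method), and names repeated across the merged tables would produce duplicate queries, which cost API quota. A minimal sketch of a more defensive version, where the function name `make_queries` is hypothetical and the two templates are the same query patterns as above:

```python
def make_queries(names, templates=("calories in {}", "nutrition in {}")):
    """Build lower-cased search queries from entity names and query templates.

    Missing or empty names are skipped, and duplicates are removed while keeping order.
    """
    queries, seen = [], set()
    for template in templates:
        for name in names:
            if not isinstance(name, str) or not name.strip():
                continue  # e.g. NaN cells from an HTML table
            query = template.format(name.strip().lower())
            if query not in seen:
                seen.add(query)
                queries.append(query)
    return queries


print(make_queries(["Apple", "Banana", float("nan"), "apple"]))
# ['calories in apple', 'calories in banana', 'nutrition in apple', 'nutrition in banana']
```

With the Wikipedia data, the call would be `make_queries(culinary_fruits_merge_df['Common name'])`.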

Since we have queries from the same topic, we can now extract the Search Engine Results Pages for these semantic search queries.

serp_df = adv.serp_goog(cx=cse_id, key=api_key, q=culinary_fruits_queries[0:30], gl=["us"])  # uses API quota; comment out once the CSV is saved

serp_df.to_csv("serp_calories.csv") # Taking Output of Our SERP Data as CSV

serp_df = pd.read_csv("serp_calories.csv") # Assigning our SERP Data into a Variable

serp_df = pd.read_csv("serp_calories_full.csv")  # the full recording used in the rest of this tutorial

serp_df.drop(columns=["Unnamed: 0"], inplace=True)

The explanation of the code block is below.

- We have extracted the Search Engine Results Pages for more than 1000 queries in 10 seconds.

- We have assigned them to a variable.

- We have exported all of the information to "serp_calories.csv".

- We have read the data back and dropped an unnecessary column.

serp_df.shape

OUTPUT>>>

(8910, 633)

We have 8910 rows and 633 columns. All of these columns come from Google's Programmable Search Engine response, and some of them have rarely been discussed by Search Engine Optimization experts before. I recommend examining these column names and their data in the context of Holistic SEO.

serp_df.info()

OUTPUT>>>

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 8910 entries, 0 to 8909

Columns: 633 entries, Unnamed: 0.1 to branch:deeplink:canonical_identifier

dtypes: float64(68), int64(5), object(560)

memory usage: 43.0+ MB

Our data frame is more than 43 MB, and most of its columns have the object data type. Let's print all of these columns as below.

from itertools import zip_longest

chunk = -(-len(serp_df.columns) // 4)  # ceiling division: 633 columns -> 159 names per printed column

chunks = [serp_df.columns[i * chunk:(i + 1) * chunk] for i in range(4)]

for col_1, col_2, col_3, col_4 in zip_longest(*chunks, fillvalue=""):

    print(colored(f'{col_1:<40}', "green"), colored(f'{col_2:<40}', "yellow"), colored(f'{col_3:<40}', "white"), colored(f'{col_4:<40}', "red"))

We have used the "zip_longest()" function over four equal slices of our SERP data frame's columns, so you can see all of the column names side by side in four differently colored columns. (Plain "zip()" over unequal slices stops at the shortest slice and silently skips columns.)

For instance, we have the "google-signin-cookiepolicy" and "citation_date" columns. As a Holistic SEO, I care about everything that affects a site: every pixel, every piece of code, every byte, letter and color. And I am happy to see such a detailed Search Engine perspective from the Custom Search API. We see that Google takes every result and uses many different types of dimensions while aggregating the data, and these dimensions can be very detailed. Let's call a single row with every aspect to examine how it looks.

pd.set_option("display.max_colwidth", 90) #For changing the max Column Width.

serp_df.loc[35].head(50)

OUTPUT>>>

Unnamed: 0.1 35

gl us

searchTerms calories in cocky apple

rank 6

title Ciders – Buskey Cider

snippet 100% virginia apples fermented the right way. this cider is refreshing and \ndrinkable...

displayLink www.buskeycider.com

link https://www.buskeycider.com/pages/ciders

queryTime 2021-01-03 19:30:09.489752+00:00

totalResults 1370000

cacheId njJYPs0wr1EJ

count 10

cseName Holisticseo.digital

cx 7b8760de16d1e86bc

fileFormat NaN

formattedSearchTime 0.350000

formattedTotalResults 1,370,000

formattedUrl https://www.buskeycider.com/pages/ciders

htmlFormattedUrl https://www.buskeycider.com/pages/ciders

htmlSnippet 100% virginia <b>apples</b> fermented the right way. this cider is refreshing and <br>...

htmlTitle Ciders – Buskey Cider

inputEncoding utf8

kind customsearch#result

mime NaN

outputEncoding utf8

pagemap {'cse_thumbnail': [{'src': 'https://encrypted-tbn2.gstatic.com/images?q=tbn:ANd9GcRF7y...

safe off

searchTime 0.347557

startIndex 1

cse_thumbnail [{'src': 'https://encrypted-tbn2.gstatic.com/images?q=tbn:ANd9GcRF7yzgNJEAqYI2d2ZBKixW...

metatags [{'og:image': 'https://cdn.shopify.com/s/files/1/0355/2621/3768/files/Buskey_RVA_Cider...

cse_image [{'src': 'https://cdn.shopify.com/s/files/1/0355/2621/3768/files/Buskey_RVA_Cider_Can_...

hcard NaN

thumbnail NaN

person NaN

speakablespecification NaN

Organization NaN

techarticle NaN

BreadcrumbList NaN

organization [{'logo': 'https://cdn.shopify.com/s/files/1/0355/2621/3768/files/Buskey_logo_design_n...

product NaN

hproduct NaN

nutritioninformation NaN

breadcrumb NaN

imageobject NaN

blogposting NaN

listitem NaN

postaladdress NaN

offer NaN

videoobject NaN

Name: 35, dtype: object

We have changed the column width with the "pd.set_option" method.

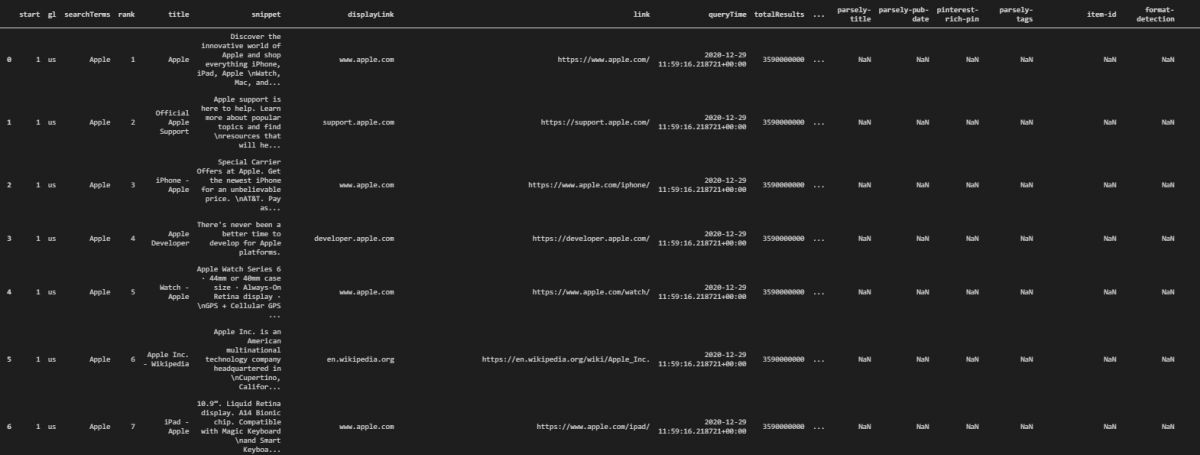

We have called the first 50 columns of the row with the index label 35. You can see that it doesn't have breadcrumb, BreadcrumbList, product, list item, postal address or person data. But it has organization data (a logo), Open Graph metatags, a thumbnail image, and title and encoding information. Below, you will see the general view of the united SERP output.

serp_df

We have called our data frame.
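The row above shows that structured-data columns such as "organization" or "breadcrumb" come from the "pagemap" field of each result. After a round trip through CSV, that field is a string like "{'cse_thumbnail': [...], ...}". A minimal sketch, assuming that string form (the helper `pagemap_types` is hypothetical), lists the pagemap types of one cell with `ast.literal_eval`, so that the check we did by eye for one row can run over every row.

```python
import ast


def pagemap_types(pagemap_cell):
    """Return the sorted structured-data types found in one `pagemap` cell.

    Empty cells come back from CSV as NaN (a float), so non-strings yield [].
    """
    if not isinstance(pagemap_cell, str):
        return []
    try:
        pagemap = ast.literal_eval(pagemap_cell)  # safely parses Python literals only
    except (ValueError, SyntaxError):
        return []  # truncated or otherwise unparsable cell
    return sorted(pagemap) if isinstance(pagemap, dict) else []


cell = "{'cse_thumbnail': [{'src': 'x'}], 'metatags': [{'og:image': 'y'}], 'organization': [{'logo': 'z'}]}"
print(pagemap_types(cell))  # ['cse_thumbnail', 'metatags', 'organization']
```

On the full data, `serp_df["pagemap"].apply(pagemap_types).explode().value_counts()` would count how often each type appears across the SERPs.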

The introduction section of SEO with Data Science ends here. In the next section, we will start to aggregate and visualize the general information that we have gathered from the Search Engine Results Pages for the semantic queries that we created from Wikipedia's "List of culinary fruits" page with the help of list comprehension and string manipulation.

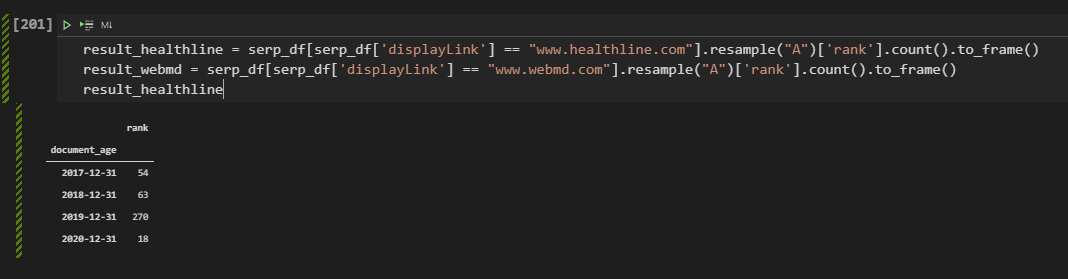

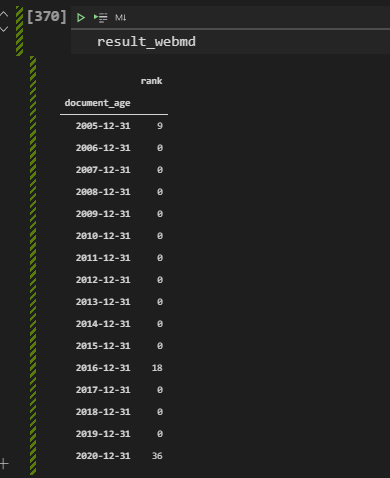

Calculating the Topical Coverage and Authority of Different Sources with Pandas

To aggregate data by the chosen dimensions, there are two different methods in Pandas.

- Pivoting the data frame.

- Grouping the data frame.

Below, you will see how to aggregate SERP data with Pandas' "pivot_table" method for SEO, and how to learn which domain has the best topical authority and coverage for a semantic search network.

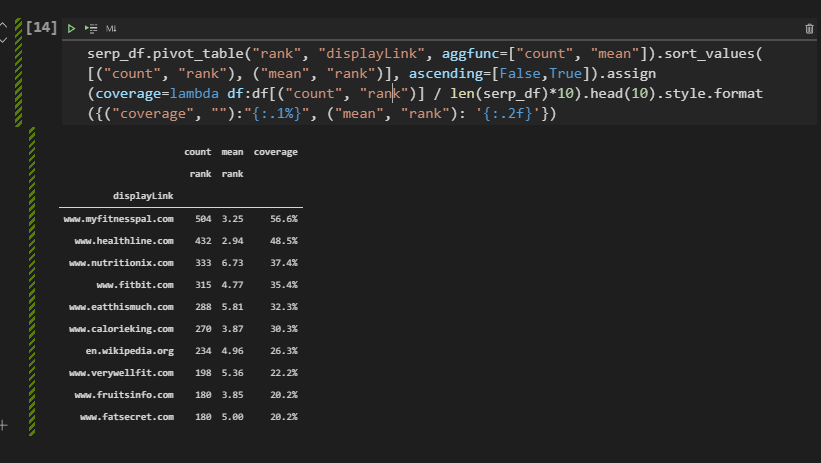

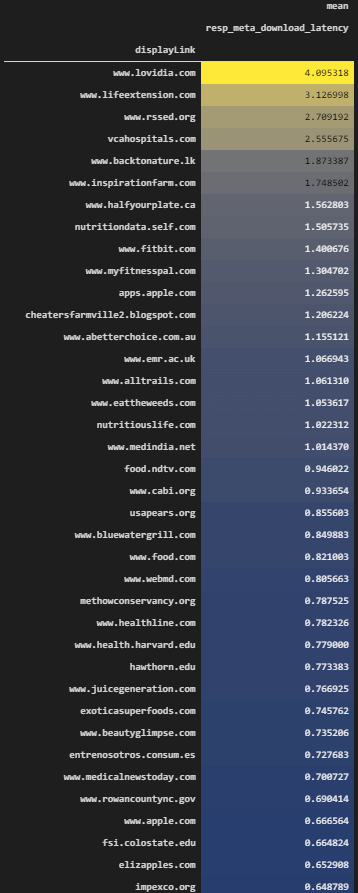

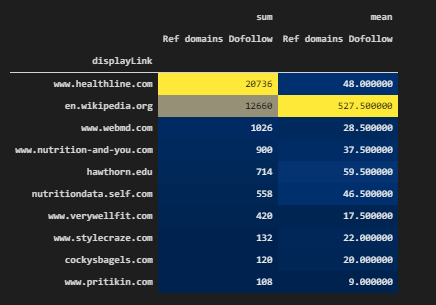

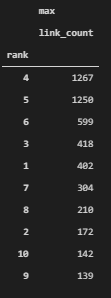

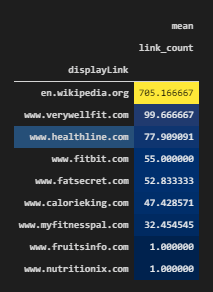

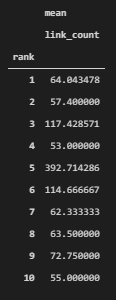

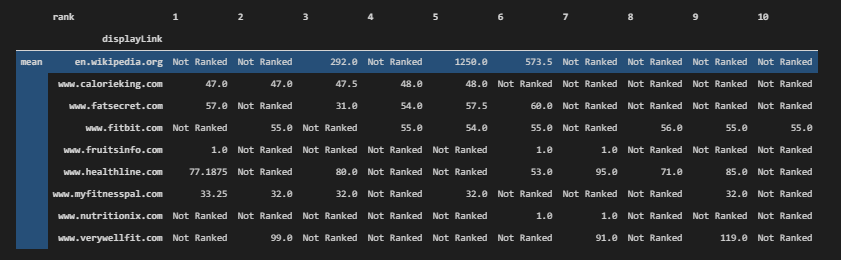

serp_df.pivot_table("rank", "displayLink", aggfunc=["count", "mean"]).sort_values([("count", "rank"), ("mean", "rank")], ascending=[False,True]).assign(coverage=lambda df:df[("count", "rank")] / len(serp_df)*10).head(10).style.format({("coverage", ""):"{:.1%}", ("mean", "rank"): '{:.2f}'})

The explanation of this code block is below.

- Call the "pivot_table" method on the data frame.

- Choose the values and the index to pivot ("rank" as the values, "displayLink" as the index).

- Choose the aggregation functions ("count" and "mean").

- Sort the values by ranking count (descending) and average rank (ascending).

- Create a new "coverage" column with the "assign()" method.

- Use a lambda function to compute the new column.

- Use the data frame's columns to aggregate the data.

- Style the data of the newly created column.

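We have divided the number of results in which each domain ranks by the total length of our data frame and multiplied it by 10. Since each query returns up to 10 results, the length of the data frame divided by 10 approximates the total query count, so "coverage" shows the share of the queries for which the domain ranks. Below, you will see the output.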

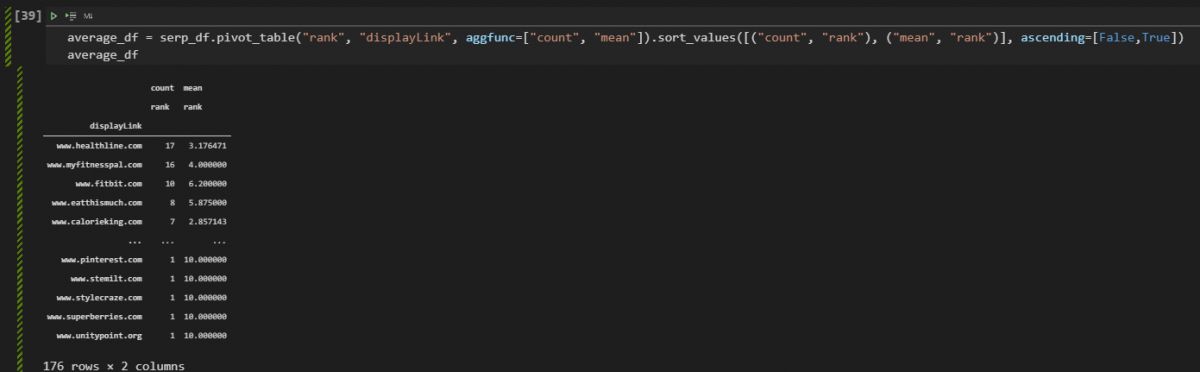

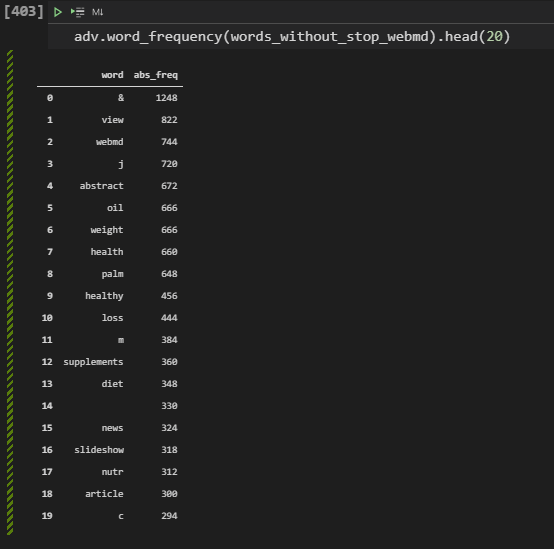

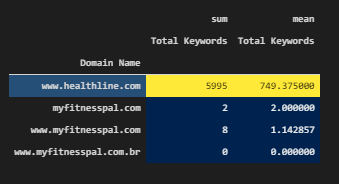

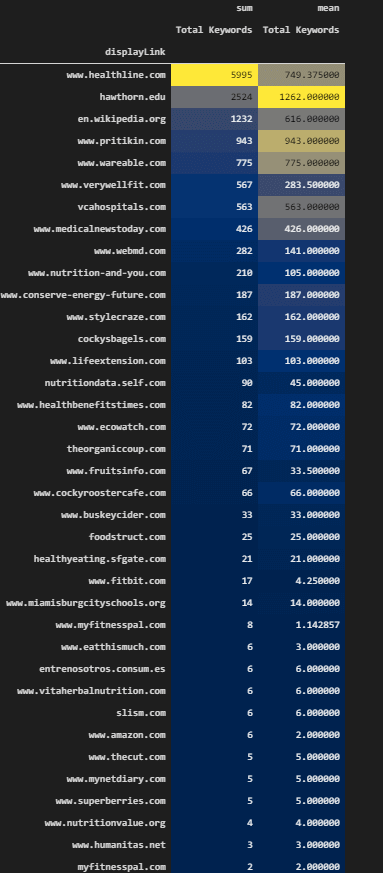

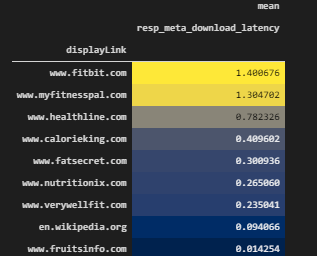
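The "divide by the row count, multiply by 10" shortcut drifts when some queries return fewer than 10 results or when a domain ranks with several URLs for the same query. A minimal sketch on toy data (the domains are placeholders) that divides the number of distinct queries a domain ranks for by the number of distinct queries instead; it uses pandas named aggregation rather than the author's exact pivot table.

```python
import pandas as pd

# Toy SERP data: 2 queries x 3 results (the real output has up to 10 results per query)
serp = pd.DataFrame({
    "searchTerms": ["calories in apple"] * 3 + ["calories in kiwi"] * 3,
    "displayLink": ["www.a.com", "www.b.com", "www.c.com", "www.a.com", "www.c.com", "www.d.com"],
    "rank": [1, 2, 3, 2, 1, 3],
})

n_queries = serp["searchTerms"].nunique()

summary = serp.groupby("displayLink").agg(
    count=("rank", "count"),               # number of ranking results
    mean=("rank", "mean"),                 # average rank
    queries=("searchTerms", "nunique"),    # distinct queries the domain ranks for
)
summary = summary.assign(coverage=summary["queries"] / n_queries).sort_values(
    ["count", "mean"], ascending=[False, True]
)
print(summary)
```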

After explaining Pandas' pivot table method for measuring the topical coverage of the domains (sources), we can also show the same calculation with the "groupby" method.

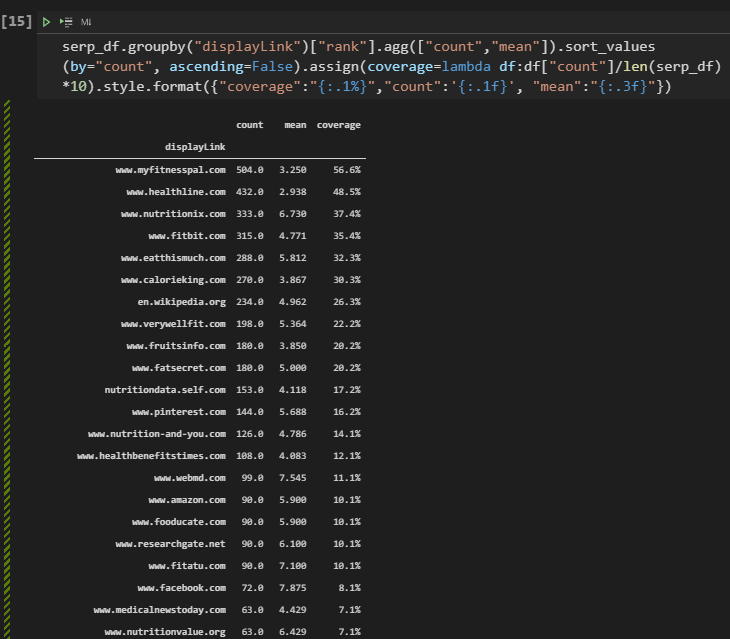

serp_df.groupby("displayLink")["rank"].agg(["count","mean"]).sort_values(by="count", ascending=False).assign(coverage=lambda df:df["count"]/len(serp_df)*10).style.format({"coverage":"{:.1%}","count":'{:.1f}', "mean":"{:.3f}"})

The explanation of the "groupby" method is below.

- Group the data frame by the “displayLink” column values.

- Use the “agg()” method to aggregate the “rank” column with “count” and “mean”.

- Sort by “count” in descending order with the “sort_values” method.

- Use “assign()” to create a new column.

- Use the lambda function to create the value of the new column.

- Use “style.format()” to format the newly created “coverage” column as a percentage, and the “count” and “mean” columns as decimals.

You can see the result below.

The “mean” column shows three decimal places because we have used the “{:.3f}” format for it. And, we have more than 10 rows because we didn’t use the “head(10)” method.

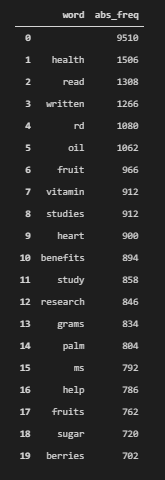

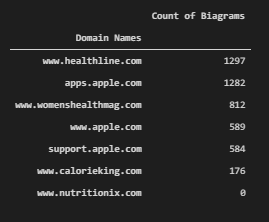

Conclusions from the Topical Coverage and Topical Authority measurement.

- Healthline has the second-best topical coverage.

- Healthline has the best average ranking.

- Healthline ranks for 432 of the queries.

- MyFitnessPal has the best topical coverage.

- MyFitnessPal has the second-best average ranking.

- MyFitnessPal ranks for 504 of the queries.

- We have “nutritionvalue”, “calorieking”, and “fatsecret” as alternative competitors.

- Facebook and Wikipedia also have some coverage.

- “WebMD” is also on the competitor list.

Now, we can check which queries Healthline ranks for while MyFitnessPal does not, or which queries don’t include any of the main competitors. We can also compare Healthline and MyFitnessPal in terms of content structure or response size. We will examine these and more in the context of SEO and Data Science.
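The query comparison described above comes down to set operations on the queries each domain ranks for. The sketch below uses hypothetical rows with the same “searchTerms” and “displayLink” columns as “serp_df”; the helper “queries_for” is illustrative, not part of the original notebook.

```python
import pandas as pd

# Hypothetical toy rows standing in for serp_df.
serp_df = pd.DataFrame({
    "searchTerms": [
        "calories in apple", "calories in apple",
        "calories in kiwi",
        "calories in mango", "calories in mango",
        "calories in fig",
    ],
    "displayLink": [
        "www.healthline.com", "www.myfitnesspal.com",
        "www.healthline.com",
        "www.myfitnesspal.com", "www.webmd.com",
        "www.fatsecret.com",
    ],
})


def queries_for(df: pd.DataFrame, domain: str) -> set:
    """Return the set of queries for which `domain` appears in the results."""
    return set(df.loc[df["displayLink"] == domain, "searchTerms"])


healthline = queries_for(serp_df, "www.healthline.com")
myfitnesspal = queries_for(serp_df, "www.myfitnesspal.com")

only_healthline = healthline - myfitnesspal    # Healthline ranks, MyFitnessPal does not
only_myfitnesspal = myfitnesspal - healthline  # and the other way around
neither = set(serp_df["searchTerms"]) - (healthline | myfitnesspal)

print(sorted(only_healthline), sorted(only_myfitnesspal), sorted(neither))
```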

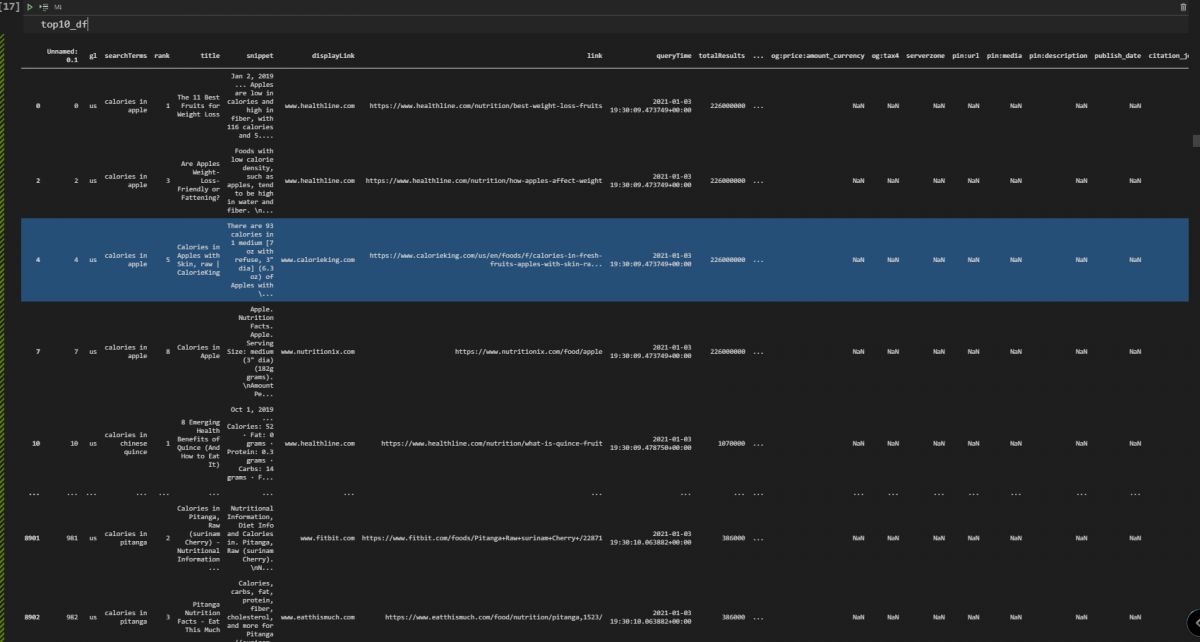

How to Extract the Top 10 Domains from SERP Data for Thousands of Queries?

Since we have acquired a lot of information, we can start visualizing some of its important aspects. To extract the top 10 domains from the SERP data, you can use the code block below. The purpose of this section is below.

- Taking the 10 domains with the most ranked results.

- Filtering “serp_df” for these domains.

top10_domains = serp_df.displayLink.value_counts()[:10].index

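top10_df = serp_df[serp_df['displayLink'].isin(top10_domains)].copy()

- We have created a variable named “top10_domains”. The “value_counts()” method counts how many results each value in the “displayLink” column has, sorted from most to least, and “[:10].index” takes the 10 most frequent domain names.

- We have filtered “serp_df” with the “isin” method to keep only the rows whose “displayLink” column contains one of the top 10 domains.

- The “copy()” call makes “top10_df” an independent data frame, so adding a column to it later does not trigger the Pandas SettingWithCopyWarning.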

Below, you will see the related output.

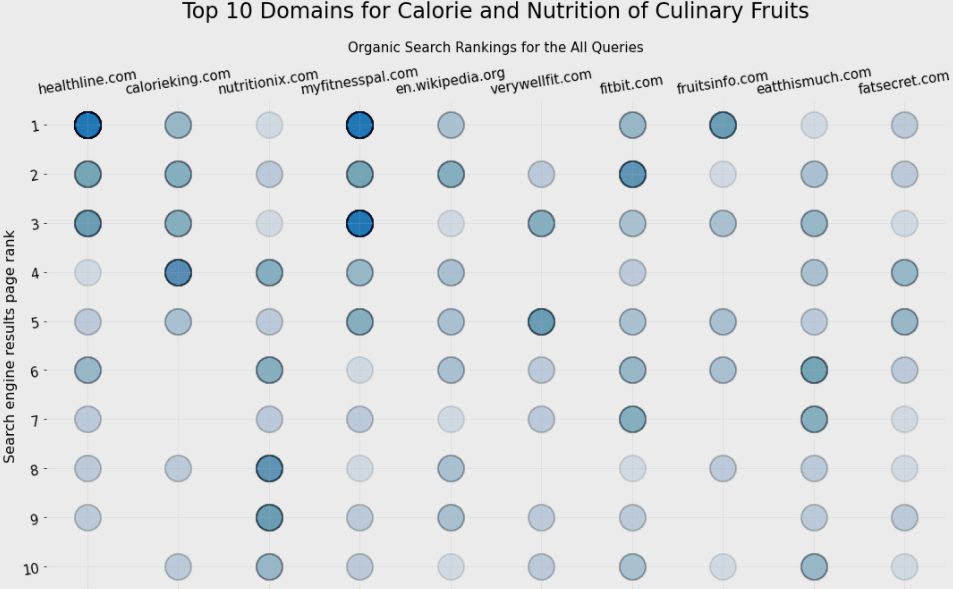

Visualization of the Top 10 Domains’ Organic Search Performance on the SERP with Matplotlib and Plotly

After extracting the top 10 domains and their organic search performance data with Pandas filtering methods, we can visualize the general situation. First, we will use Matplotlib for visualization.

fig, ax = plt.subplots(facecolor='#ebebeb')

fig.set_size_inches(18, 10)

ax.set_frame_on(False)

ax.scatter(top10_df['displayLink'].str.replace('www.', '', regex=False), top10_df['rank'], s=850, alpha=0.01, edgecolor='k', lw=2)

ax.grid(alpha=0.25)

ax.invert_yaxis()

ax.yaxis.set_ticks(range(1, 11))

ax.tick_params(labelsize=15, rotation=9, labeltop=True, labelbottom=False)

ax.set_ylabel('Search engine results page rank', fontsize=16)

ax.set_title('Top 10 Domains for Calorie and Nutrition of Culinary Fruits', pad=95, fontsize=24)

ax.text(4.5, -0.5, 'Organic Search Rankings for the All Queries',

ha='center', fontsize=15)

fig.savefig(ax.get_title() + '.png',

facecolor='#eeeeee', dpi=150, bbox_inches='tight')

plt.show()

A summary of the code block above is below.

- In the first line, we have created a figure and axes with “plt.subplots()” and set the figure’s face color.

- We have set the figure size to 18×10 inches.

- We have hidden the axes frame with “set_frame_on(False)”.

- We have used “ax.scatter” to create a scatter plot. Because the x values are domain names, each unique “displayLink” value becomes one category on the x-axis. The very low “alpha” value (0.01) makes each bubble nearly transparent, so bubbles get more intense as more results share the same domain and rank.

- We have inverted the y-axis so that rank 1 is at the top.

- We have set the y-axis ticks to the ranks 1 to 10.

- We have used “tick_params” to style the tick labels and show them at the top.

- We have used “set_ylabel” to label the y-axis.

- We have used “set_title” to create the plot title.

- We have used the “ax.text()” method to place a custom subtitle at the given coordinates.

- We have saved the figure, using its title as the file name.

- We have displayed the plot with “plt.show()”.

You can see the result below.

The graph above shows which domain has more results at which rank. It uses the “intensity of the bubbles” to represent the value count. We can see that “Healthline” and “MyFitnessPal” are competing with each other for the first three rankings.

Visualizing the Top Domains’ SEO Performance with Plotly Express

Instead of using a static chart, we can use interactive visuals in Python. Interactive graphs from Plotly give more opportunities to examine and grasp the value of the data, because we can hover over the data points to examine more dimensions. Below, you will see an example.

top10_df["size_of_values"] = 35 ## Giving size to the "bubbles"

fig = px.scatter(top10_df, x='displayLink', y="rank", hover_name=top10_df['displayLink'].str.replace("www.",""), title = "Top 10 Domains Average Ranking for Culinary Fruits", width=1000, height=700, opacity=0.01, labels={"displayLink": "Ranked Domain Name", "rank":"Ranking"},template="simple_white", size="size_of_values", hover_data=["searchTerms"])

fig['layout']['yaxis']['autorange'] = "reversed"

fig.update_yaxes(showgrid=True)

fig.update_xaxes(showgrid=True)

fig.update_layout(font_family="Open Sans", font=dict(size=13, color="Green"))

fig.layout.yaxis.tickvals=[1,2,3,4,5,6,7,8,9,10]

fig.show()

The explanation of the methodology is below.

- We have created a new column with the name “size_of_values”. It is for the sizes of the values on the plot.

- We have used “px.scatter” to create a scatter plot. We have used domain names at the “x-axis”.

- We have used “rank” values in the y axis.

- We have used domains’ names as the hover name.

- We have set the title, opacity, height, and width.

- We have changed the names of the labels.

- We have chosen “simple_white” as the template.

- We have chosen “searchTerms” as the hover data.

- We have reversed the y-axis.

- We have used grids on both axes.

- We have updated the font family, size, and color.

- We have set the y-axis tick values to the ranks 1 to 10.

- We have displayed the graph.

This methodology takes less code and shows more data, which echoes a Unique Mentor’s quote: “Do more, with less”. You can see the result below.

In the next section, we will take and compare the relevant page count of the sources.

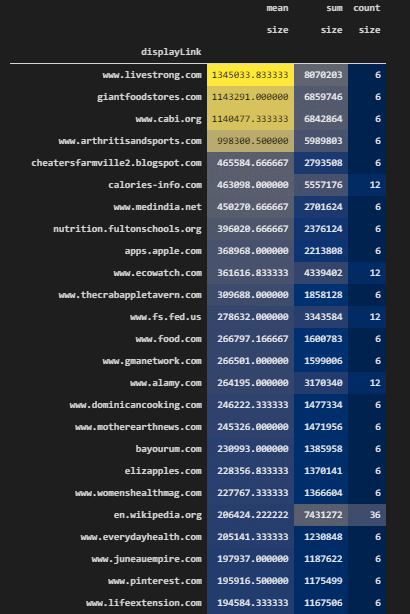

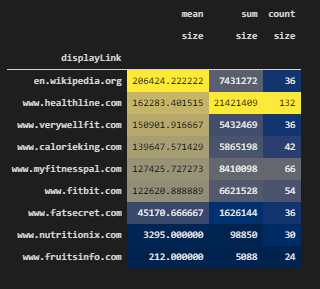

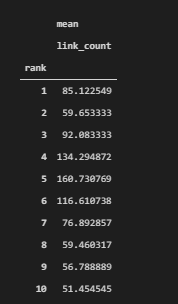

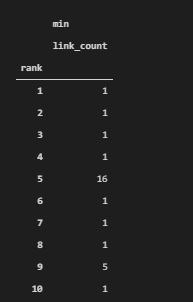

Taking the Relevant Total Result Count per Query for the Top 10 Domains

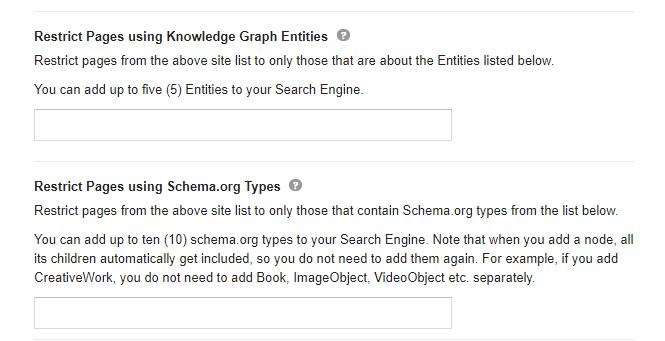

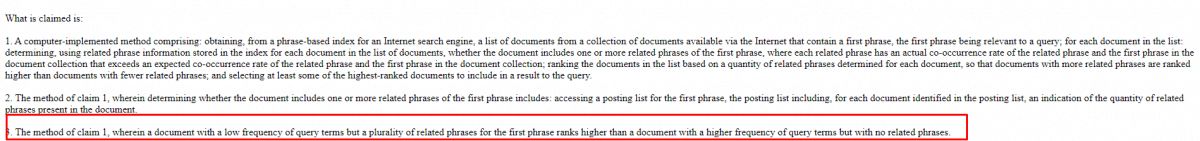

The total result count per domain is a signal for the overall relevance and prominence of a source for a specific topic or phrase. If you search for “apple” while filtering the results for only “Apple (Company)”, you will see a large number of results about that specific entity. Thus, if we filter search results by domain and by query, and sum them all, we can see how relevant these sources are to these semantic queries.

In other words, the total result count per query and domain can show how well a domain focuses on a specific topic. A high total result count suggests that the domain has more related pages and better overall relevance for the topic.

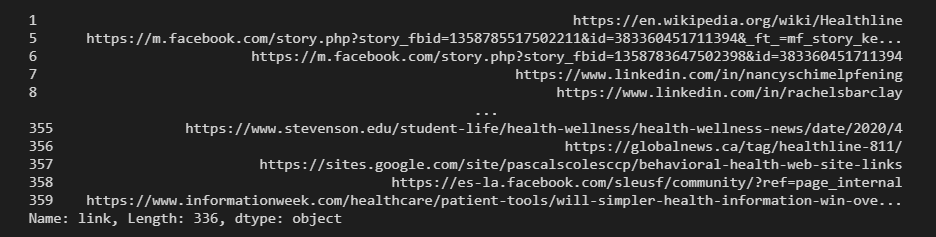

resultperdomain = adv.serp_goog(cx=cse_id, key=api_key, q=culinary_fruits_queries[:6], siteSearch=top10_domains.tolist(), siteSearchFilter="i")

We have created a new variable named “resultperdomain”. We have passed the top 10 domains as a list to the “siteSearch” parameter and set “siteSearchFilter” to “i”, which means “include”, so that the results are restricted to those domains. We use “top10_domains” here rather than the first 10 rows of “top10_df['displayLink']”, because those rows can repeat the same domain. Thus, we have acquired the top 10 domains’ results only for the first 6 queries from our semantic search query network.
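Assuming, as the advertools documentation describes, that “serp_goog” sends one request for every combination of its list-valued parameters, the number of API requests is the product of the list lengths (6 queries × 10 sites = 60 requests here). The sketch below, on hypothetical data, does not call the API; it only counts the combinations with “itertools.product” and shows why the “siteSearch” list should hold unique domains.

```python
from itertools import product

import pandas as pd

# Hypothetical stand-ins for the notebook's variables.
culinary_fruits_queries = ["calories in apple", "calories in banana", "calories in kiwi"]
top10_df = pd.DataFrame({"displayLink": [
    "www.healthline.com", "www.myfitnesspal.com", "www.healthline.com", "www.webmd.com",
]})

# The first rows of a column can repeat the same domain ...
first_rows = top10_df["displayLink"][:3].tolist()
# ... while value_counts().index lists every domain once, most frequent first.
unique_domains = top10_df["displayLink"].value_counts().index.tolist()

# One (query, site) pair per request, assuming serp_goog combines list parameters.
request_pairs = list(product(culinary_fruits_queries[:2], unique_domains))
print(first_rows)
print(len(request_pairs))
```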

resultperdomain.to_csv("resultperdomain.csv", index=False)

resultperdomain = pd.read_csv("resultperdomain.csv")

Above, we have exported the results to a CSV file and read them back, so that we can reuse them later without sending the same queries to the API again.
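A detail worth knowing when caching results this way: by default “to_csv()” also writes the index, which comes back as an extra “Unnamed: 0” column after “read_csv()”. The sketch below uses an in-memory buffer and a hypothetical one-row data frame to show the difference; passing “index=False” keeps the round trip clean.

```python
import io

import pandas as pd

# Hypothetical one-row stand-in for resultperdomain.
resultperdomain = pd.DataFrame({"displayLink": ["www.healthline.com"], "totalResults": [1230]})

# to_csv() without a path returns the CSV text; read it back from memory.
with_index = pd.read_csv(io.StringIO(resultperdomain.to_csv()))
without_index = pd.read_csv(io.StringIO(resultperdomain.to_csv(index=False)))

print(with_index.columns.tolist())     # ['Unnamed: 0', 'displayLink', 'totalResults']
print(without_index.columns.tolist())  # ['displayLink', 'totalResults']
```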

cm = sns.light_palette("blue", as_cmap=True)

pd.set_option("display.max_colwidth",30)

resultperdomain[["displayLink","title","snippet","totalResults","searchTerms"]].head(40).sort_values("totalResults", ascending=False).style.background_gradient(cmap=cm)

We have used the “sns.light_palette()” method to create a blue colormap, which “background_gradient()” uses to shade the numeric “totalResults” column. We have also changed the maximum column width (“display.max_colwidth”) and selected the most important columns. Below, you can see the relevant result page count per domain.

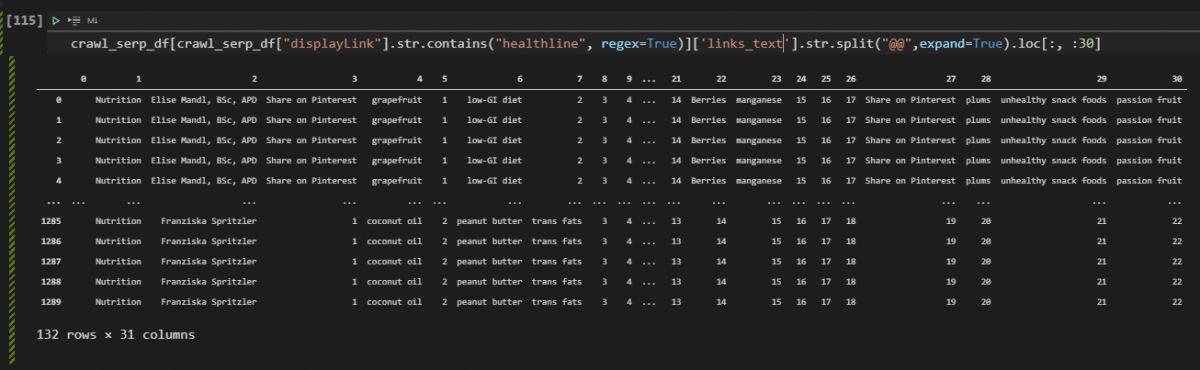

Taking Related Result Examples for Only One Domain

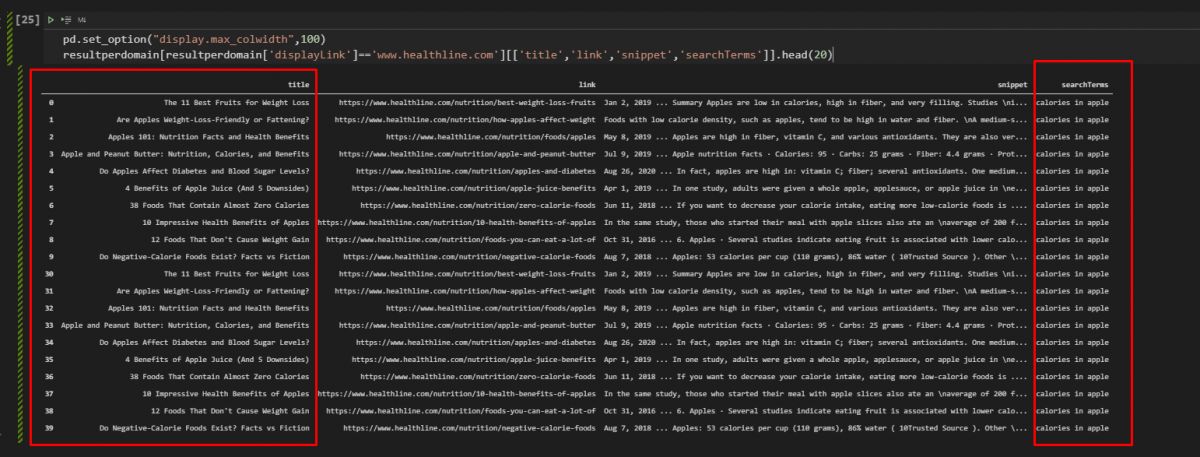

We can also take related results for only one source to see how many different pages it has and how it approaches the specific topic.

pd.set_option("display.max_colwidth",100)

resultperdomain[resultperdomain['displayLink']=='www.healthline.com'][['title','link','snippet','searchTerms']].head(20)

We have changed the maximum column width (“display.max_colwidth”) to 100.

We have filtered the data frame for only “healthline.com” and taken only the “title”, “link”, “snippet”, and “searchTerms” columns. Below, you can see the result.

How to Visualize Total Result Count per Domain for a Semantic Search Query Network?

Visualization helps us understand the data better. The same data can be explained with different sentences or different visuals, and SEO data visualization helps extract better, more descriptive insights from SEO data frames. In this example, we will use “Treemaps” and “Bar plots” to compare domains’ result counts for these semantic search queries. At the end of this visualization section, you will see that there are multiple effective ways to compare different results.

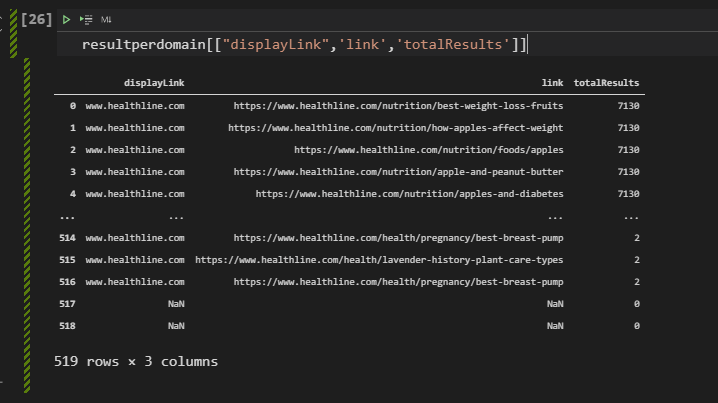

Filtering the SEO Performance Data for Visualization of Total Result Count Per Domain

To create successful SEO data visualizations, we should filter the data first. Below, you will see the relevant and necessary columns filtered.

resultperdomain[["displayLink",'link','totalResults']]

We have taken the “displayLink”, “link”, and “totalResults” columns while filtering results. Below, you will see that we are creating the necessary data frame for visualization.

top10_df[['displayLink','totalResults']].pivot_table("totalResults", "displayLink", aggfunc="sum").sort_values("totalResults",ascending=False)[:10]

We have created a new pivot table from the “totalResults” and “displayLink” columns, summing all of the total results per domain with the “aggfunc="sum"” parameter. We have sorted the values by “totalResults” in descending order and kept the first 10 rows.

Below, you can see the outcome of our code block.

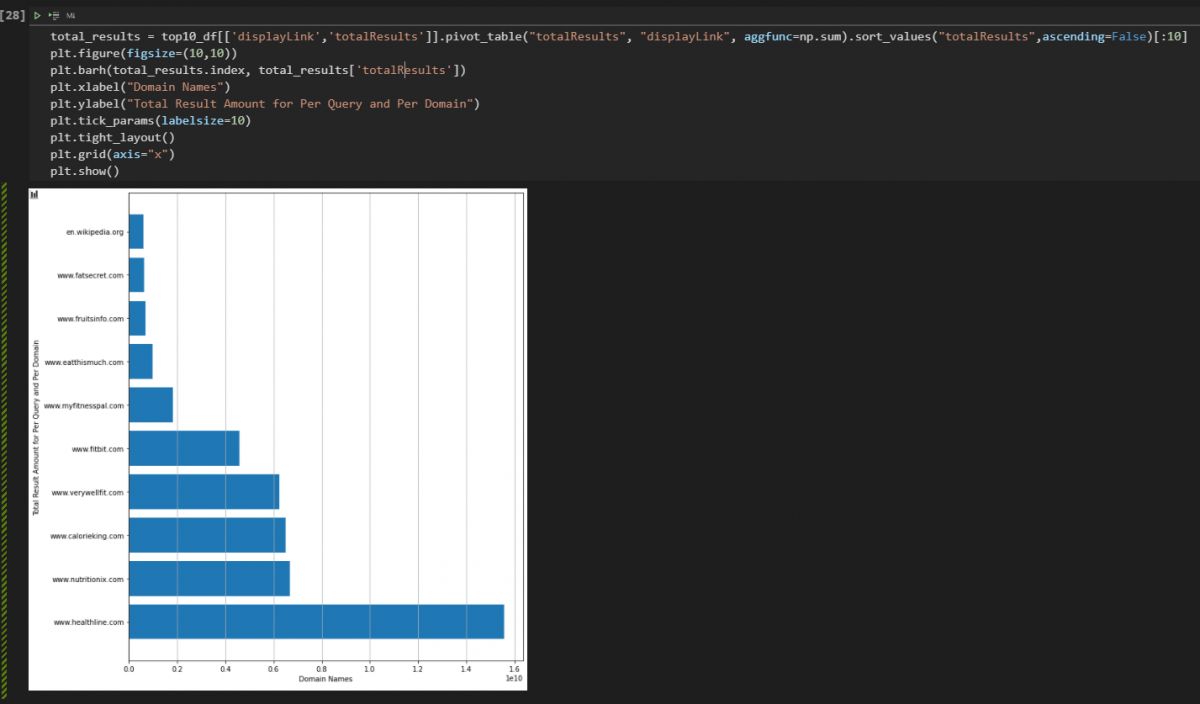

Visualization of Total Result Count Per Domain with Matplotlib

After filtering and aggregating the data, we can use Matplotlib to visualize it. Below, you will see a code block that visualizes the total result count per domain in a horizontal bar plot.

total_results = top10_df[['displayLink','totalResults']].pivot_table("totalResults", "displayLink", aggfunc="sum").sort_values("totalResults",ascending=False)[:10]

plt.figure(figsize=(10,10))

plt.barh(total_results.index, total_results['totalResults'])

plt.xlabel("Total Result Count per Query and per Domain")

plt.ylabel("Domain Names")

plt.tick_params(labelsize=10)

plt.tight_layout()

plt.grid(axis="x")

plt.show()

Below, you can see the explanation for this Matplotlib code.

- Created a new variable, called “total_results”.

- Assigned it the 10 domains with the highest summed “totalResults” values.

- Set the figure size to “10×10”.

- Used the “plt.barh” method to create a horizontal bar plot.

- Set the x-axis label to “Total Result Count per Query and per Domain”.

- Set the y-axis label to “Domain Names”.

- Set the tick label size with “tick_params”.

- Used a tight layout with “plt.tight_layout()”.

- Added vertical grid lines with “plt.grid(axis="x")”.

- Displayed the horizontal bar plot.

You can see the relevant output and function call in the VSCode screenshot.

We see that the visual is consistent with the data that we have filtered and summed from our data frame.

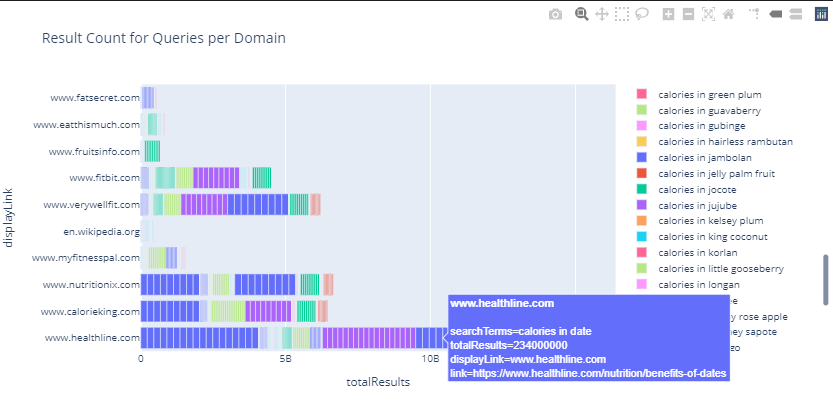

Visualization of the Relevant Result Count per Query for the Top 10 Domains with Plotly

The relevant result count can also be visualized interactively with Plotly. Below, you will see that it takes less code and produces a more descriptive SEO data visualization.

fig = px.bar(top10_df, x="totalResults", y="displayLink", orientation='h',color="searchTerms", hover_data=["totalResults", "displayLink","link","searchTerms"], hover_name="displayLink", title="Result Count for Queries per Domain", height=500, width=1000)

fig.show()

There are only two lines of code here for the same task. The explanation is below.

- We have created a variable named “fig” and assigned the output of the “px.bar()” method to it, choosing our data frame, the x- and y-axis values, and the orientation of the plot.

- We have colored the bars by query with “color="searchTerms"”, and chosen the data and the name to be shown on hover.

- We have set a title and displayed our interactive chart of the total result count per domain and query.

You can see the result below.

To show the outcome in a more descriptive way, we have included both an image and a video. As you can see, we can check every query, the result count for a specific URL from a domain, the total result count, and the search queries’ importance.

We see that the highest result count belongs to Healthline as a source, and to “calories in apple” as a query. Again, this shows the prominence of the source and the query at the same time.

Visualization of Total Relevant Ranked Page Count per Domain

Page count is an indicator of how much content a source has for a specific topic. It shows how well different domains focus on the same topic, or how well they structure their content for its sub-topics. Below, you can see the methodology to extract the page count for these queries per source (domain).

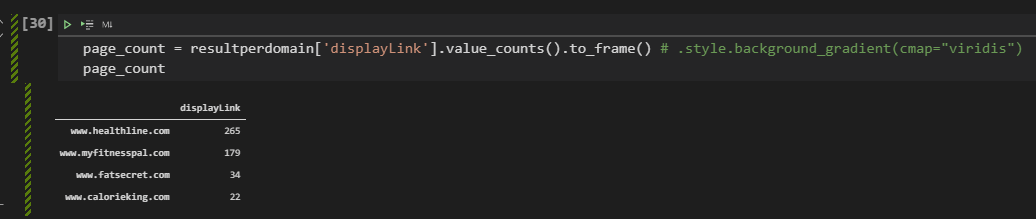

page_count = resultperdomain['displayLink'].value_counts().rename_axis('domain').to_frame(name='displayLink') # .style.background_gradient(cmap="viridis")

page_count

You can see the explanation of the code block below.

- We have created a variable named “page_count”. We have counted how many rows (pages) each domain has in the “displayLink” column of the “resultperdomain” data frame with “value_counts()”, and converted the counts into a data frame whose column is named “displayLink” and whose index holds the domain names.

- We have displayed the data frame.

You can see the result below.

To visualize the total page count for these queries from every source, a pie chart is a good option. Pie charts are usually used to show the share of different sources or competitors in a market or industry. In this case, it will show the sources’ page counts, which indicate the possible content granularity and size of the sources for SEOs. Below, you will see a simple pie chart created with Plotly Express.

fig = px.pie(page_count, values='displayLink', names=page_count.index, title='Relevant Page Count Per Domain')

fig.show()

The explanation of this code block is below.

- We have created a variable, “fig”.

- We have assigned the “px.pie()” outcome to it.

- We have passed the “page_count” data frame.

- We have used the “displayLink” column (the page counts) as the values of the pie chart.

- We have chosen the domain names as the labels with “page_count.index”.

- We have chosen the title of the pie chart, which is “Relevant Page Count Per Domain”.

- We have displayed the pie chart.

Below, you can see the pie chart example that shows the page count of sources for “the culinary fruit queries”.

We see that “Healthline” has more pages than its competitors. Result count, page count, topical coverage, and average ranking appear to correlate with each other here. This doesn’t mean that “more pages” is better; rather, more semantically relevant pages for semantic queries seem to be better. In later sections of this SEO Data Science tutorial, you will see that “Healthline” and “MyFitnessPal” have strategic differences in their websites. Let’s continue.
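Each slice of the pie chart is a domain’s share of the relevant pages, and those shares can also be computed directly. The minimal sketch below uses hypothetical rows; naming the index and the count column explicitly gives the same column names on pandas 1.x and 2.x (pandas 2.x names the “value_counts()” result “count”). The column names “domain”, “page_count”, and “share” are illustrative choices.

```python
import pandas as pd

# Hypothetical toy version of resultperdomain: one row per returned page.
resultperdomain = pd.DataFrame({"displayLink": [
    "www.healthline.com", "www.healthline.com", "www.healthline.com",
    "www.myfitnesspal.com", "www.myfitnesspal.com", "www.webmd.com",
]})

page_count = (
    resultperdomain["displayLink"]
    .value_counts()
    .rename_axis("domain")           # name the index explicitly
    .reset_index(name="page_count")  # and the count column
)
# Share of pages per domain: the same proportions a pie chart draws.
page_count["share"] = page_count["page_count"] / page_count["page_count"].sum()
print(page_count)
```

With this layout, the chart could be drawn with “px.pie(page_count, values="page_count", names="domain")”.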

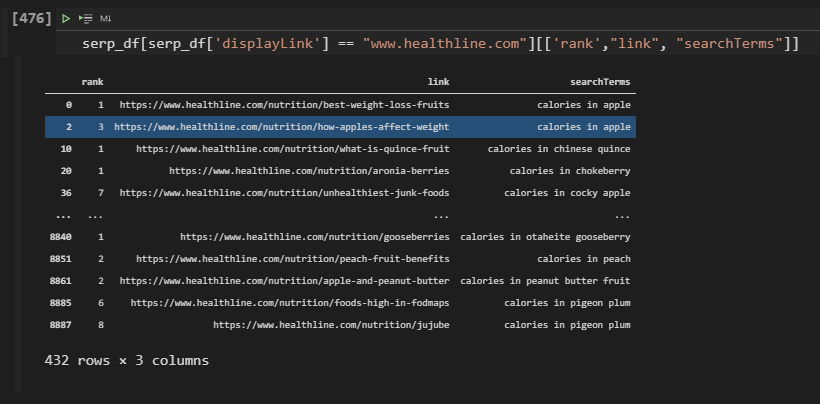

Checking and Visualizing First Three Results for a Specific Domain with Pandas and Plotly

We can check how many rankings each domain has in the first three results. Below, you will see a simple data filtering example for the necessary visualization.

serp_df[serp_df['displayLink'] == "www.healthline.com"][['rank',"link", "searchTerms"]]

We have filtered the rows for “www.healthline.com” and kept only the “rank”, “link”, and “searchTerms” columns. You can see the result below.

Besides filtering the results for only one domain, we can filter all the sources according to a specific Search Engine Results Page ranking range. In this example, we will create a new variable to encapsulate all the results within the first three ranks.

top_3_results = resultperdomain[resultperdomain['rank'] <= 3]

px.bar(top_3_results, x='displayLink', y='rank', hover_name="link", hover_data=["searchTerms","link","title"], color="searchTerms", title="Visualization of Rankings per Query for Competitors: Only for the First Three Ranks",labels={"rank":"Rank", "searchTerms": "Query", "totalResults":"Result Count","displayLink":"Domain Name","link":"URL", "title":"Title"})

#color is optional

You can find the explanation below.

- Created a variable, “top_3_results”.

- Assigned to it the rows whose “rank” value is 3 or lower.

- We have called the “px.bar()” method.

- Determined the titles, labels, x, and y-axis values, hover data, and hover names.

You can see the result below in an interactive way.

We see that Healthline has more results within the first three rankings. Likely reasons are Healthline’s topical authority, topical coverage, content detail level, and content count for the related queries.

Checking and Visualizing 4-10 Results for a Specific Domain with Pandas and Plotly

We can also check the results ranked 4-10 to compare the general ranking situation of different domains with Python and data science. This also lets you indirectly calculate what percentage of a domain’s content is in the first 3 ranks.

_4_10_results = resultperdomain[resultperdomain['rank'] > 3]

px.bar(_4_10_results, x='displayLink', y='rank', hover_name="link", hover_data=["searchTerms","link","title"], color="searchTerms",title="Visualization of Rankings per Query for Competitors: Only for Last Seven",labels={"rank":"Rank", "searchTerms": "Query", "totalResults":"Result Count","displayLink":"Domain Name","link":"URL", "title":"Title"},height=600, width=1000)

Below, you can see the interactive results with Plotly Express. The main difference from the previous code block is the “> 3” filter, which keeps only the results ranked 4-10, instead of “<= 3”.

We see that Healthline’s bar reaches nearly 1200 in the 4-10 rankings; note that the bar height is the sum of the rank values, not the number of pages. Calorieking.com’s content is usually in the 1-3 rankings rather than 4-10. It might be worth looking at their content, since their success rate seems slightly better for these queries despite their low topical coverage.
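To put a number on the 1-3 versus 4-10 comparison, we can compute, for each domain, the fraction of its results that rank in the top three. The sketch below uses hypothetical rows with the same “displayLink” and “rank” columns as “resultperdomain”; it relies on the fact that the mean of a boolean column is the share of True values.

```python
import pandas as pd

# Hypothetical rows standing in for resultperdomain.
resultperdomain = pd.DataFrame({
    "displayLink": ["www.healthline.com"] * 4 + ["www.calorieking.com"] * 3,
    "rank": [1, 2, 5, 8, 1, 3, 6],
})

top3_share = (
    resultperdomain.assign(in_top3=resultperdomain["rank"] <= 3)
    .groupby("displayLink")["in_top3"]
    .mean()  # mean of a boolean column = fraction of True values
    .sort_values(ascending=False)
)
print(top3_share.round(2))
```

The share of results ranked 4-10 for each domain is then simply one minus this value.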

Checking and Visualizing Rankings for Only One Domain

We can check and visualize all of the rankings for only one domain with Plotly Express and Data Science. Below, you will see all the rankings with URL and query information for only “Healthline.com”.

healthline_results = resultperdomain[resultperdomain['displayLink'] == "www.healthline.com"]

fig = px.bar(healthline_results, x="displayLink", y="rank", facet_col="rank", template="plotly_white", height=700, width=1200,hover_name="link", hover_data=["searchTerms","link","title"],

color="searchTerms", labels={"rank":"Rank", "searchTerms": "Query", "totalResults":"Result Count","displayLink":"Domain Name","link":"URL", "title":"Title"})

fig.update_layout(

font_family="Open Sans",

font_color="black",

title_font_family="Times New Roman",

title_font_color="red",

legend_title_font_color="green",

font=dict(size=10)

)

fig.show()

The explanation of how to visualize a domain’s ranking data is below.

- Create a new variable.

- Assign the filtered search results to the variable.

- Create a “figure variable” and assign the “px.bar()” method’s output to that variable.

- Determine the data frame to be visualized, the x- and y-axis values, the hover data and hover name, the color categorization, and the labels.

- Determine font family, color, title font, legend title with “fig.update_layout()”.

- We have determined the “facet_col” as “rank” so that we can categorize the output for different rankings.

- We have used “searchTerms” for the “color” parameter so that we can compare the different SERPs’ situations.

- We have used “plotly_white” as the color theme of the entire plot.

- We have used “searchTerms”, “link”, and “title” as the hover data.

- We have used the “labels” parameter with a dictionary to rename the axis and hover labels.

- Call the figure with “fig.show()”.

We see that Healthline actually has more content in the first three results. Instead of focusing on the height of the bar, you should count the horizontal lines. In a later section, you will see a density heatmap example for a better SEO data visualization.

Visualizing All Ranking Performance for Top 10 Domains with Colors and Bars

To visualize the performance of all top 10 domains, we can use the same methodology, as below.

top10_df.sort_values('rank', ascending=True, inplace=True)

fig = px.bar(top10_df, x="displayLink", y="rank", color="rank", height=500, width=850, hover_name=top10_df["link"], hover_data=["searchTerms","link","title"],labels={"rank":"Rank", "searchTerms": "Query", "totalResults":"Result Count","displayLink":"Domain Name","link":"URL", "title":"Title"})

fig.update_layout(title="Top 10 Domains and their Rankings",xaxis_title="Domain Names", yaxis_title="Ranking of Results Based on Domains with Colors", height=400, width=1200)

fig.show()

#density_heatmap (Optional)

Below is a simplified explanation of how we visualize the ranking situation of every domain on the SERP for these queries.

- We have sorted “top10_df” by the “rank” column in ascending order.

- Determined the data frame, columns, colors, labels, titles, hover data and hover name.

Below, you can see all rankings of the best-performing top 10 domains for the culinary fruits queries in Google Search. Healthline performs better, but “MyFitnessPal” has a higher bar. This is because the bar height is the sum of the rank values, so more results and lower rankings both make a bar taller. Despite this handicap, I find examining the entire SERP easier with a bar chart.

When I hover the mouse over a section of a bar, I can see the query, the rank, and the URL of the landing page. In this example, we have used “rank” for the “color” parameter, which is why we have a kind of color map: deep purple means rank 1 and bright yellow means rank 10. Thus, we can also check the rankings, but we don’t have different colors for different queries. That’s why determining the purpose of the data is important while planning a chart.

The example above is not detailed enough because it is only for the first 6 queries. Below, you will see another example for all of the queries within our semantic search queries example.

As you can see, Healthline and MyFitnessPal again have larger dark areas, because their rankings are generally between 1 and 3. They also have more “horizontal lines” within their bars, which is a signal for the content count. This is a good example of how the amount of data can affect the overall picture.
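The bar heights can be checked numerically. Assuming Plotly Express’s default relative bar mode, where the rows for one domain stack on top of each other, a bar’s height is the sum of its rank values. The sketch below, on hypothetical rows, puts that sum next to the result count and the average rank, which are usually the numbers we actually want to compare.

```python
import pandas as pd

# Hypothetical rows: each row is one stacked segment in px.bar(..., y="rank").
top10_df = pd.DataFrame({
    "displayLink": ["www.healthline.com"] * 2 + ["www.myfitnesspal.com"] * 3,
    "rank": [1, 2, 4, 6, 9],
})

summary = top10_df.groupby("displayLink")["rank"].agg(
    bar_height="sum",  # total height of the stacked bar
    results="count",   # number of ranked results for the domain
    avg_rank="mean",   # lower is better
)
print(summary)
```

In this toy data, the second domain’s bar is more than six times taller even though it has only one more result, because its results rank lower.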

Using Density Map for Organic Search Performance Comparison between Different Domains

If you don’t want to use a bar chart for the general situation of the SERP, you can use a density heatmap. A density heatmap colors each cell according to how many data points fall into it. In this example, if a domain has more ranked results at a rank, its cell will have a brighter color; if it has fewer, the color will be darker. You can see the related code block below.

top10_df.sort_values('rank', ascending=True, inplace=True)

fig = px.density_heatmap(top10_df, x="displayLink", y="rank", height=500, width=850, hover_name=top10_df["link"], hover_data=["searchTerms","link","title"],labels={"rank":"Rank", "searchTerms": "Query", "totalResults":"Result Count","displayLink":"Domain Name","link":"URL", "title":"Title"})

fig.update_layout(title="Top 10 Domains and their Rankings",xaxis_title="Domain Names", yaxis_title="Ranking of Results Based on Domains with Colors", height=400, width=1200)

fig.show()

To create a density heatmap with Python, you can use the instructions below.

- Sort the data frame according to the visualized column (“rank”).

- Create a variable (fig).

- Use “px.density_heatmap()” method.

- Determine the data frame (“top10_df”).

- Determine the x and y values (“displayLink”, “rank”).

- Determine the height and width parameter values.

- Determine the information that will be shown on hover (“searchTerms”, “link”, “title”).

- Determine the name that will be shown on hover (“link”).

- Determine the labels with the “labels” parameter, and the title with the “fig.update_layout()” method.

- Call the figure, with the “fig.show()”.

You can see the interactive result below.

You can see that the overall results are similar to the results for only the first 6 queries. This suggests that the SERPs for the whole query network are semantically connected: topical authority can be felt from the first query to the last one.

You can see that Healthline and MyFitnessPal have the best color balance in terms of organic search performance.
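The counts behind the heatmap can also be listed as a table. A density heatmap without a “z” column counts the rows in each cell, so when each rank falls into its own bin (the “nbinsy” parameter controls the number of bins on the y-axis), the cell values match a cross-tabulation of rank by domain. The sketch below uses hypothetical rows standing in for “top10_df”.

```python
import pandas as pd

# Hypothetical rows standing in for top10_df.
top10_df = pd.DataFrame({
    "displayLink": ["www.healthline.com"] * 3 + ["www.myfitnesspal.com"] * 3,
    "rank": [1, 1, 2, 1, 3, 3],
})

# Rows: rank; columns: domain; cells: number of results at that rank.
counts = pd.crosstab(top10_df["rank"], top10_df["displayLink"])
print(counts)
```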

Visualization for All Domains via Treemap

Treemaps show the overall situation of the SERP in terms of shares: every item gets a rectangle whose size is proportional to its value. In this example, we will use treemap visualizations to show the ranked query count and the total result count of all domains.

average_df = serp_df.pivot_table("rank", "displayLink", aggfunc=["count", "mean"]).sort_values([("count", "rank"), ("mean", "rank")], ascending=[False,True])

average_df

“average_df” is the variable that we created to aggregate the total ranked query count and average ranking. You can see it below.

Below, you will see a simpler data frame, with only the ranked query count per domain, for the data visualization.

ranked_query_count = pd.DataFrame(list(average_df[('count', 'rank')]), index=average_df.index)

column_name = "Ranked_Count"

ranked_query_count.columns = [column_name]

ranked_query_count

We have used the “pd.DataFrame()” method with “list()” on a specific column of the created data frame so that we can avoid the DataFrame constructor error. The new data frame has just the domain names and ranked query counts; we have used the index of the “average_df” data frame that we created in the previous code block. You can see the result below.
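As an alternative sketch, the same one-column data frame can be built without the “list()” step: selecting the (“count”, “rank”) column of the pivot table returns a Series, and “to_frame()” turns it into a data frame with the given column name. The rows below are hypothetical.

```python
import pandas as pd

# Hypothetical toy SERP rows.
serp_df = pd.DataFrame({
    "displayLink": ["www.healthline.com", "www.healthline.com", "www.webmd.com"],
    "rank": [1, 3, 2],
})

average_df = serp_df.pivot_table("rank", "displayLink", aggfunc=["count", "mean"])
# A list of aggfuncs gives MultiIndex columns such as ("count", "rank").
ranked_query_count = average_df[("count", "rank")].to_frame("Ranked_Count")
print(ranked_query_count)
```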

After creating the right data frame, we can visualize the ranked query count per domain to see their topical coverage in terms of SEO.

fig = px.treemap(ranked_query_count, path=[ranked_query_count.index], values="Ranked_Count", height=800, width=1500, title="Ranked Query Count for the Queries per Domain")

fig.show()

We have used the “px.treemap()” method from Plotly Express. The two most important parameters for creating a treemap with Plotly are “path” and “values”: “path” tells Plotly what to show as the name of each section, and “values” tells it what to show as the values. The values determine the sizes of the treemap sections. Below, you can see the result.

Visualization of Ranked Query Count for the Queries Per Domain as a Treemap

Like the ranked query count, we can visualize the total result count per domain. You can see the methodology below.

fig = px.treemap(serp_df, path=["displayLink"], values="totalResults", height=800, width=1500, title="Result Count for the Queries per Domain" )

fig.show()

To visualize the total result count per domain, we have passed “serp_df” directly, used the “displayLink” column for the “path” parameter, and used the “totalResults” column for the “values” parameter, so that each domain’s section size reflects its total result count. You can see the related result below.
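When a “path” is given, Plotly Express sums the “values” column within each path group, so each rectangle’s size is the domain’s total “totalResults”. A minimal pandas sketch of the same aggregation, on hypothetical numbers:

```python
import pandas as pd

# Hypothetical rows standing in for serp_df.
serp_df = pd.DataFrame({
    "displayLink": ["www.healthline.com", "www.healthline.com", "www.webmd.com"],
    "totalResults": [1000, 250, 400],
})

# Per-domain sums: the sizes a treemap with path=["displayLink"] would use.
treemap_sizes = serp_df.groupby("displayLink")["totalResults"].sum()
# Each rectangle's share of the whole treemap.
shares = treemap_sizes / treemap_sizes.sum()
print(shares.round(3))
```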

Visualization of Total Results and Domain Coverage Side by Side

This is one of the most complicated code blocks in the Data Science and Visualization for SEO tutorial. It uses Plotly graph objects and the “make_subplots” method. If you read it line by line, I am sure that you will understand it. Visualizing two different data points side by side helps an SEO understand the data better. Until now, we have visualized the total result count and total ranked query count one by one. Below, you will see how to visualize them side by side.

import plotly.graph_objects as go

from plotly.subplots import make_subplots

import numpy as np

# Creating two subplots side by side (one row, two columns)

fig = make_subplots(rows=1, cols=2, shared_xaxes=True,

shared_yaxes=False, vertical_spacing=0.001)

fig.append_trace(go.Bar(

x=top10_df['totalResults'],

y=top10_df['displayLink'],

marker=dict(

color='rgba(50, 171, 96, 0.6)',

line=dict(

color='rgba(50, 171, 96, 1.0)',

width=1),

),

name='Total Relevant Result Count for the Biggest Competitors',

orientation='h',

), 1, 1)

fig.append_trace(go.Scatter(

x=ranked_query_count['Ranked_Count'][:11], y=ranked_query_count.index[:11],

mode='lines+markers',

line_color='rgb(128, 0, 128)',

name='Best Domain Coverage with Ranked Query Count',), 1, 2)

fig.update_layout(

title='Domains with Most Relevant Result Count and Domains with Better Coverage',

yaxis=dict(

showgrid=False,

showline=False,

showticklabels=True,

domain=[0, 0.85],

),

yaxis2=dict(

showgrid=False,

showline=True,

showticklabels=False,

linecolor='rgba(102, 102, 102, 0.8)',

linewidth=2,

domain=[0, 0.85],

),

xaxis=dict(

zeroline=False,

showline=False,

showticklabels=True,

showgrid=True,

domain=[0, 0.42],

),

xaxis2=dict(

zeroline=False,

showline=False,

showticklabels=True,

showgrid=True,

domain=[0.47, 1],

side='top',

dtick=200,

),

legend=dict(x=0.029, y=1.038, font_size=10),

margin=dict(l=100, r=20, t=70, b=70),

paper_bgcolor='rgb(248, 248, 255)',

plot_bgcolor='rgb(248, 248, 255)',

)

fig.show()

A brief and simple explanation of this code block is below.

- We have created a figure with one row of side-by-side subplots.

- We have used “append_trace” to append the different graphs to the same figure.

- We have used “go.Bar()” to draw the total result count per domain as a horizontal bar plot.

- We have used “go.Scatter()” to draw the ranked query count per domain as a line plot with markers.

- We have determined the positions of the two plots.

- We have used “fig.update_layout()” to determine the layout changes.

- We have displayed the visualization.

You can see the result below.

In the next section, we will try to understand the correlation of the words in the title tags and their rankings.

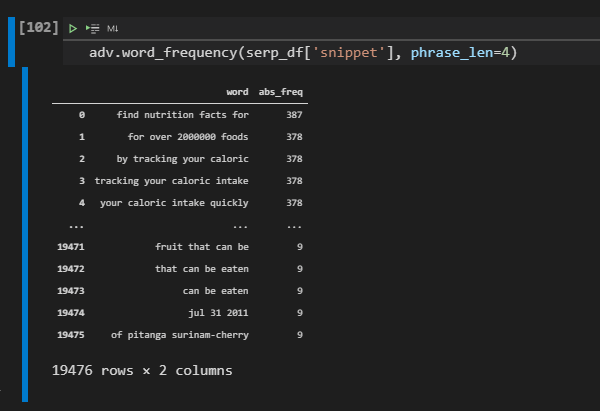

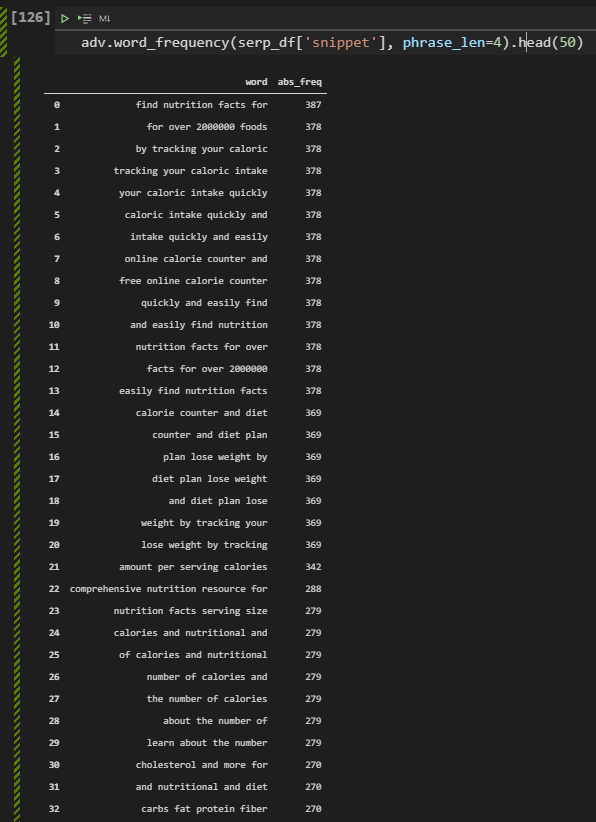

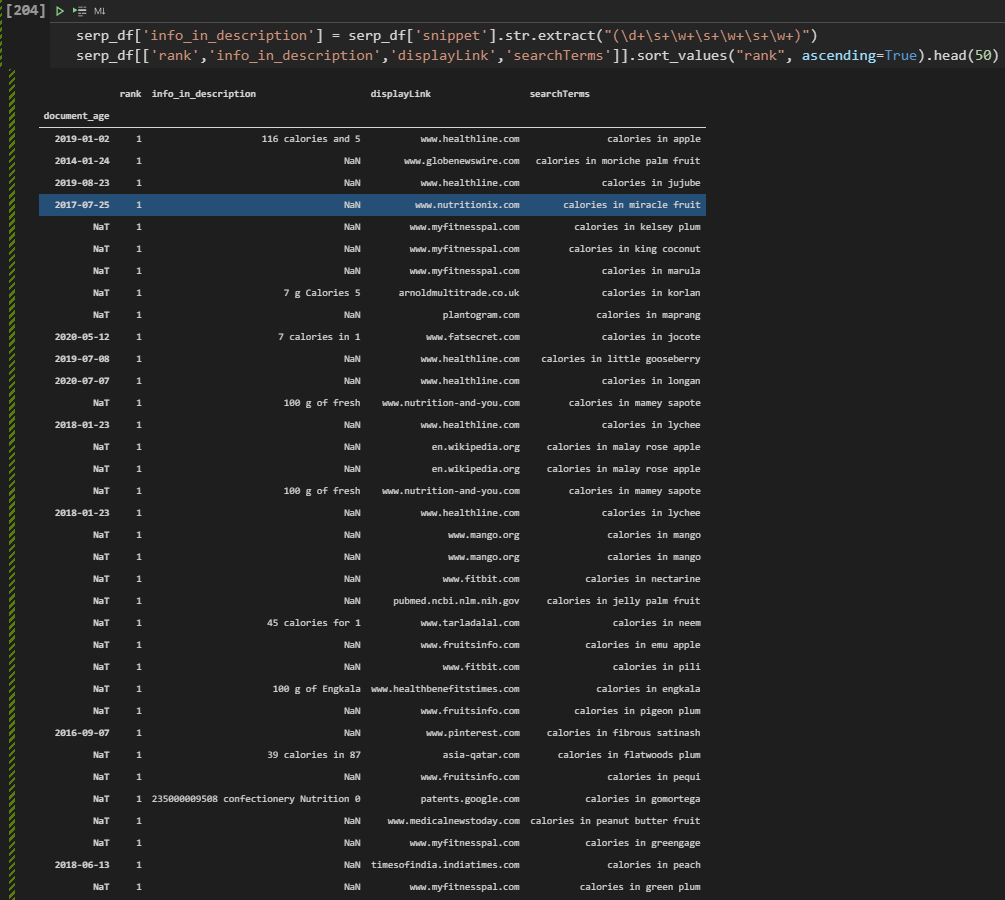

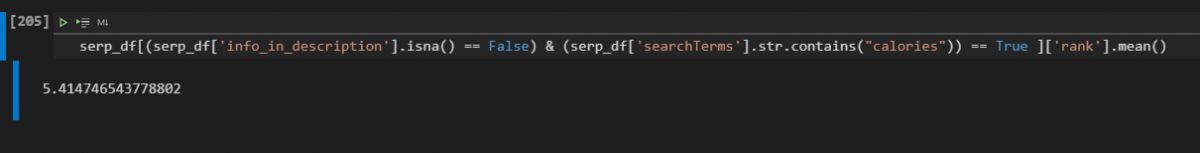

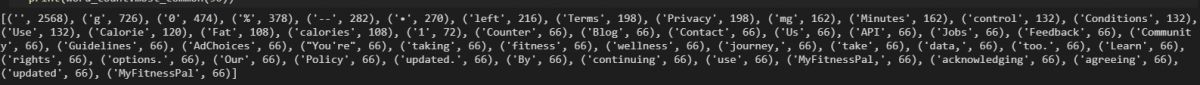

Analyzing the SERP Dimensions with Data Science at a Deeper Level

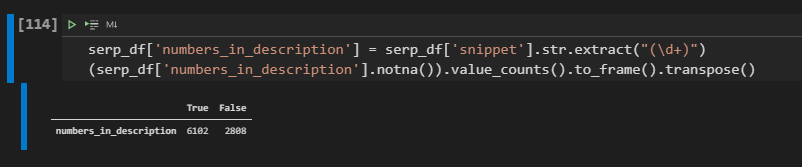

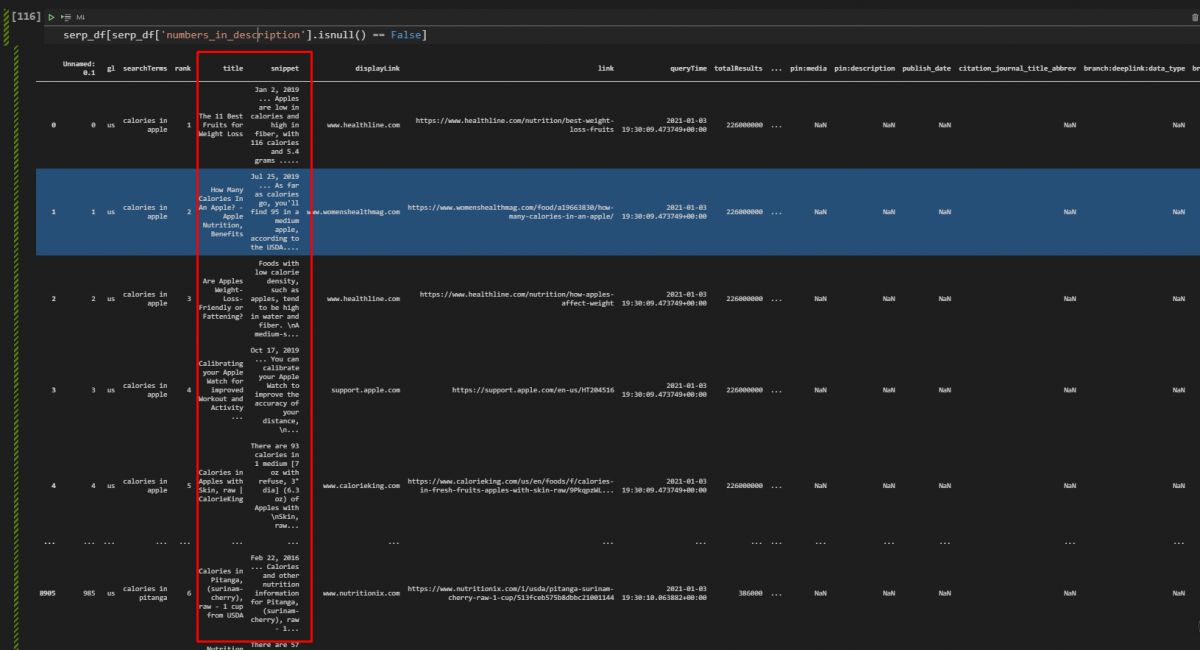

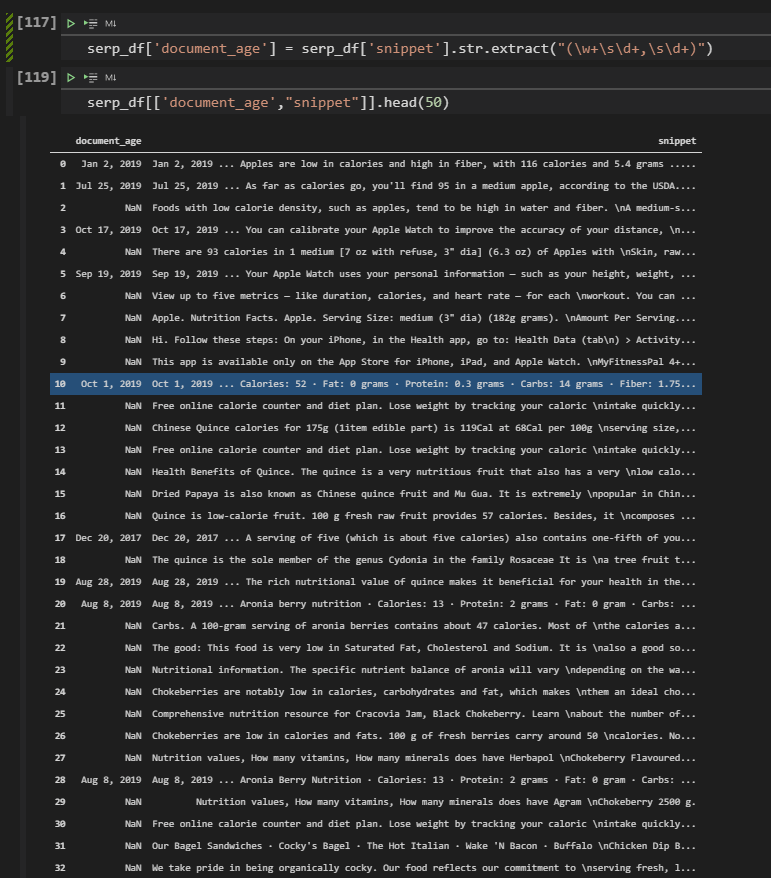

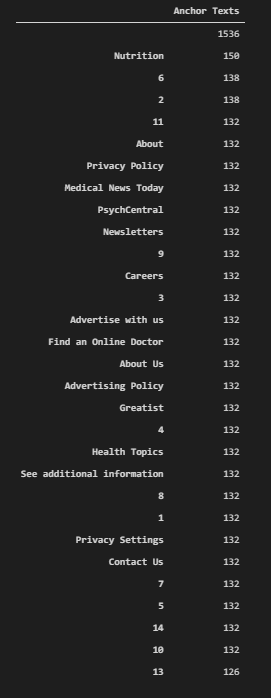

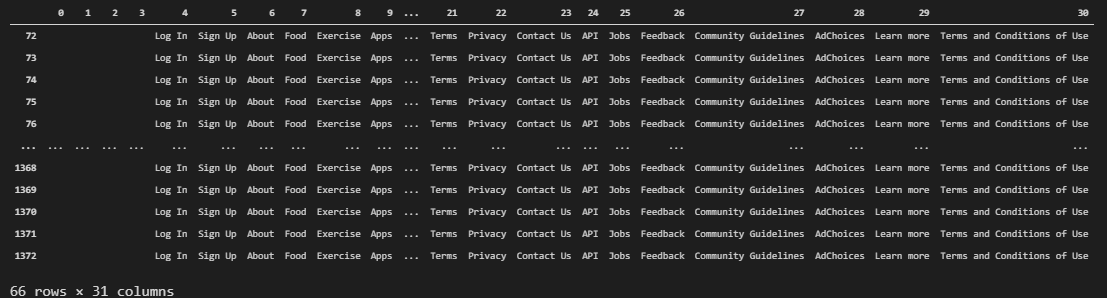

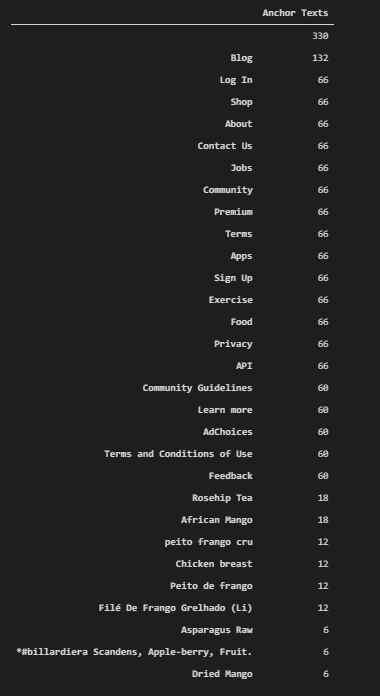

Search Engine Results Page (SERP) data can be gathered from Google and analyzed with Data Science to understand on-page SEO elements. For instance, we can check which words are used most often in title tags, descriptions, and URLs. Below, you will find a list of things to examine in this section.

- Most used anchor texts

- Most used words in the title, the front of the title, and the end of the title.

- Most used words in descriptions and snippets.

- The first words used in the content.

- Entity count within the landing pages’ content.

- Entity types within the landing pages’ content.

There are a few more things to cover in this section, and you will see them along the way.

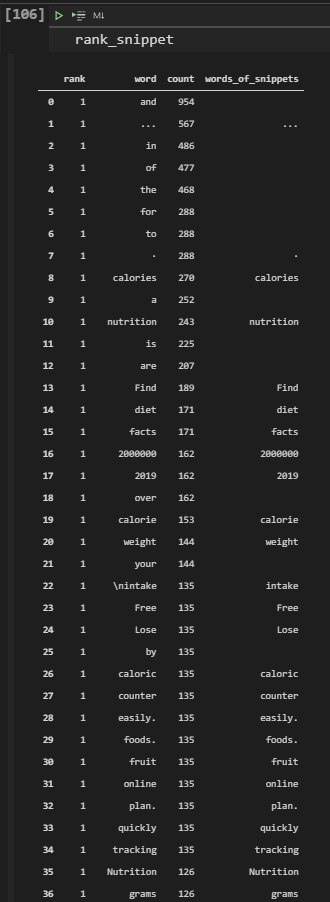

Analyzing Title Tags on the SERP for Understanding Word and Ranking Correlations

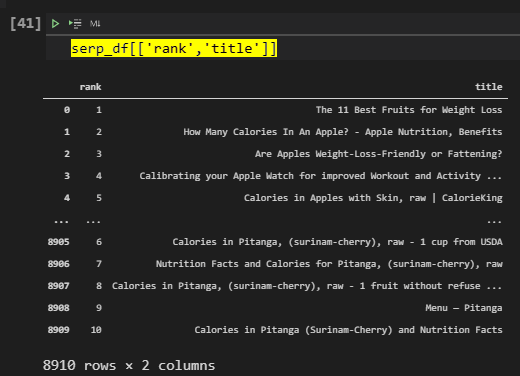

Title tags vary in type and character according to their industry and purpose. They can contain dates, numbers, currencies, exact answers, or power words. They can be eye-catching, descriptive, or phrased as questions. Below, you will see the most used words within the title tags for all of these queries and domains.
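One way to check which of these title types appear on the SERP is to flag them with regular expressions. The sketch below uses a few made-up titles in place of “serp_df['title']”; the patterns and flag names such as “has_year” and “has_currency” are illustrative assumptions, not part of the original notebook.

```python
import pandas as pd

# Hypothetical titles standing in for serp_df['title']
titles = pd.DataFrame({'title': [
    'Health Benefits of Apples in 2021',
    'Is Fruit Good for You?',
    '10 Nutrition Facts About Bananas',
    'Fruit Baskets From $20 | Fruit Shop',
]})

# Illustrative patterns (assumptions); adjust them to the industry you analyze
titles['has_year'] = titles['title'].str.contains(r'\b(?:19|20)\d{2}\b', regex=True)
titles['has_number'] = titles['title'].str.contains(r'\d', regex=True)
titles['has_currency'] = titles['title'].str.contains(r'[$€£]', regex=True)
titles['is_question'] = titles['title'].str.strip().str.endswith('?')

print(titles)
```

If the same flags were added to “serp_df”, they could be grouped by “rank” (for example, “serp_df.groupby('rank')['has_year'].mean()”) to compare how often each title type appears at each position.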

serp_df[['rank','title']]

We have filtered our data frame down to the “rank” and “title” columns to check the titles and the overall row count.

Below, you will see how to calculate the most used words for each rank.

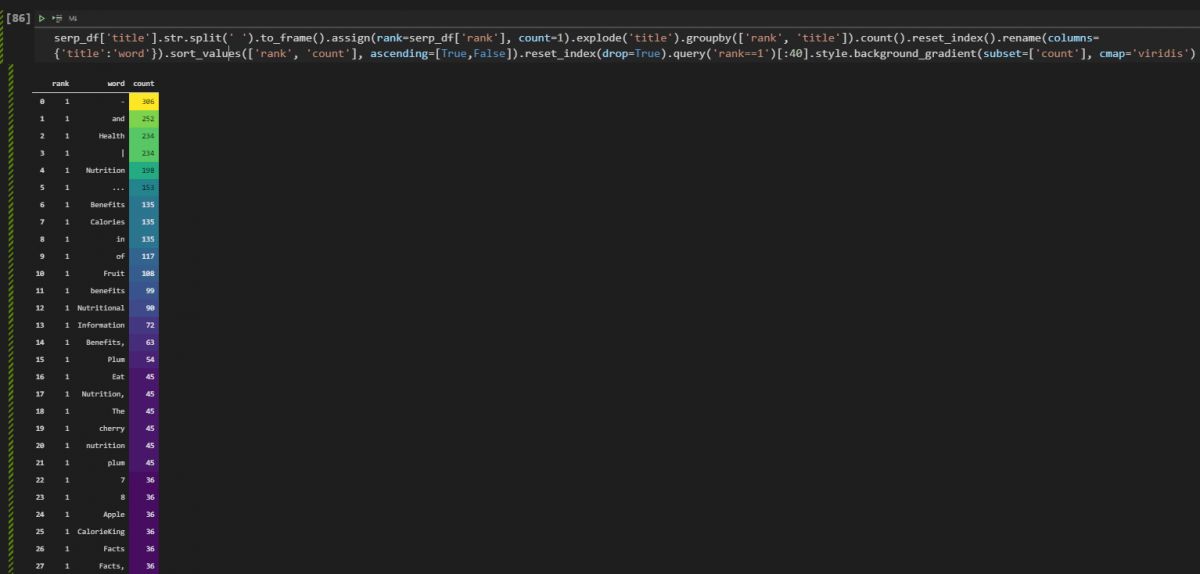

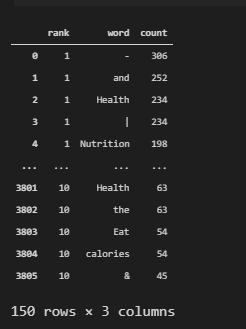

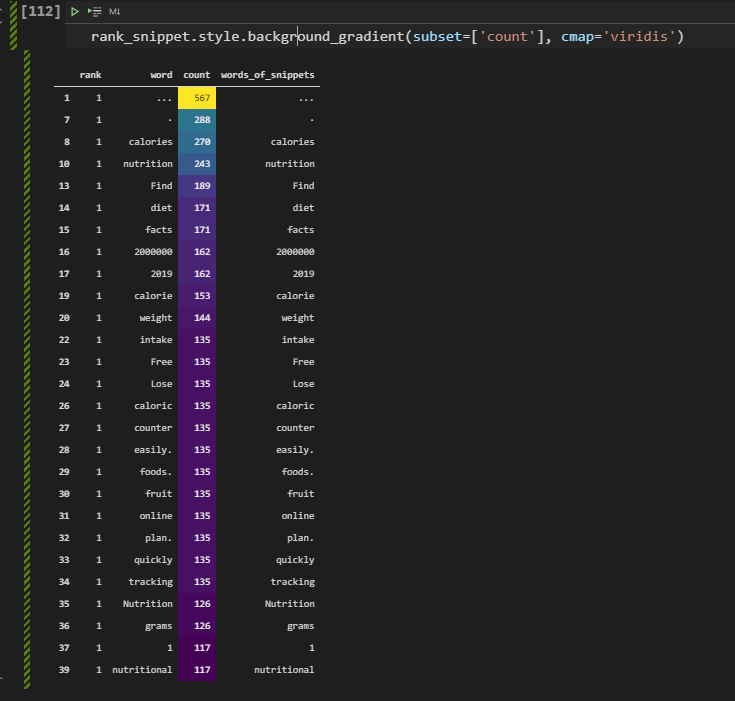

serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==1')[:40].style.background_gradient(subset=['count'], cmap='viridis')

Here is an explanation of this code block.

- Choose the “title” column.

- Use the “str” accessor with the “split” method to split every title into words.

- Turn the result into a data frame with “to_frame()” so that we can add new columns with “assign()”.

- Add a “rank” column and a “count” column with the value 1 for every row.

- Use the “explode()” method to give every word its own row while keeping its original index.

- Use the “groupby()” and “count()” methods to group the data frame by “rank” and by the words within the titles, and count them.

- Reset the index, rename the “title” column to “word”, sort the values by rank and count, and reset the index again.

- Use the “query()” method to take only the first-rank results, and keep the first 40 rows.

- Style the “count” column with a background gradient.
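To see what each step produces, here is the same method chain applied to a tiny, hypothetical “serp_df” with only “rank” and “title” columns. The data is made up; only the chain mirrors the original code, without the final “query()” slicing and styling steps.

```python
import pandas as pd

# Hypothetical mini SERP: two queries, each with a rank 1 and a rank 2 result
serp_df = pd.DataFrame({
    'rank': [1, 2, 1, 2],
    'title': [
        'Apple Health Benefits',
        'Apple Nutrition Facts',
        'Banana Health Benefits',
        'Banana Recipes',
    ],
})

word_counts = (
    serp_df['title'].str.split(' ')           # a list of words for every title
    .to_frame()                               # Series -> DataFrame, so we can assign
    .assign(rank=serp_df['rank'], count=1)    # keep the rank, add a counter of 1
    .explode('title')                         # one row per word, original index kept
    .groupby(['rank', 'title']).count()       # number of rows per (rank, word) pair
    .reset_index()
    .rename(columns={'title': 'word'})
    .sort_values(['rank', 'count'], ascending=[True, False])
    .reset_index(drop=True)
)

print(word_counts.query('rank == 1'))
```

For rank 1, “Health” and “Benefits” get a count of 2 because they appear in both first-ranked titles, while “Apple” and “Banana” get 1.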

You can see the result below.

As you can see, there are stop words and punctuation marks here, and we will need to clean them. But even before cleaning them, you can see that for the first-ranked results of all of these queries, the words “Health”, “Nutrition”, “Benefits”, and “Fruit” were the most used.
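A minimal cleaning step can be sketched under a few assumptions: titles are lowercased, every character that is neither a word character nor whitespace (such as “|”, “-”, or “:”) is replaced with a space, and words in a small, hypothetical “STOP_WORDS” set are dropped before counting. For real analysis, a fuller stop-word list, such as the ones shipped with NLTK or spaCy, would be a better fit.

```python
import pandas as pd

# Hypothetical stop-word set; swap in a complete list for real analysis
STOP_WORDS = {'the', 'of', 'and', 'for', 'in', 'to', 'a', 'is', 'you', 'are'}

serp_df = pd.DataFrame({
    'rank': [1, 1, 2],
    'title': [
        'Health Benefits of Apples | Nutrition',
        'The Health Benefits of Fruit - Guide',
        'Fruit and Nutrition: Facts',
    ],
})

words = (
    serp_df['title'].str.lower()
    .str.replace(r'[^\w\s]', ' ', regex=True)   # punctuation and separators -> space
    .str.split()                                 # split on any whitespace, no empty tokens
    .to_frame('word')
    .assign(rank=serp_df['rank'])
    .explode('word')                             # one row per word
)
words = words[~words['word'].isin(STOP_WORDS)]   # drop stop words

clean_counts = (
    words.groupby(['rank', 'word']).size()
    .reset_index(name='count')
    .sort_values(['rank', 'count'], ascending=[True, False])
    .reset_index(drop=True)
)
print(clean_counts.query('rank == 1'))
```

Note that this pattern also splits contractions such as “don't” into two tokens, so you may want to adjust it for your language and queries.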

Extracting the Most Used Words in Titles with Counts and Rankings for the Top 10 Results

Below, you will see how to extract the most used words within the title tags of landing pages with Pandas for the top 10 search results.

rank1 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==1')[:15] #.style.background_gradient(subset=['count'], cmap='viridis')

rank2 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==2')[:15]

rank3 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==3')[:15]

rank4 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==4')[:15]

rank5 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==5')[:15]

rank6 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==6')[:15]

rank7 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==7')[:15]

rank8 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==8')[:15]

rank9 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==9')[:15]

rank10 = serp_df['title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('title').groupby(['rank', 'title']).count().reset_index().rename(columns={'title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==10')[:15]

united_rank = [rank1,rank2,rank3,rank4,rank5,rank6,rank7,rank8,rank9,rank10]

united_rank_df = pd.concat(united_rank, axis=0, ignore_index=False, join="inner")

united_rank_df

We have extracted the 15 most used words within the titles for each of the first 10 results of the SERP, united the results for every ranking with the “pd.concat()” method, and displayed the data frame as below.

In the next section, we will see how to visualize this data.
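Because the ten “rankN” variables repeat the same chain with only a different “query()” filter, the combined table can also be built in one pass. The sketch below starts from a hypothetical, already-sorted word-count table (the shape the chain produces before “query()”) and compares the per-rank “query()” plus “pd.concat()” approach with “groupby().head()”. “TOP_N” is set to 2 here to keep the toy output short; the tutorial uses 15.

```python
import pandas as pd

# Hypothetical output of the word-count chain: sorted by rank, then by count
counts = pd.DataFrame({
    'rank':  [1, 1, 1, 2, 2, 2, 3],
    'word':  ['Health', 'Benefits', 'of', 'Fruit', 'Nutrition', '|', 'Apple'],
    'count': [5, 4, 3, 6, 2, 1, 3],
})
TOP_N = 2  # the tutorial keeps 15 words per rank

# Original approach: one query per rank, then concatenate
per_rank = [counts.query(f'rank == {r}')[:TOP_N] for r in counts['rank'].unique()]
loop_version = pd.concat(per_rank, axis=0)

# One-pass alternative: the first TOP_N rows of every rank
one_pass = counts.groupby('rank').head(TOP_N)

print(one_pass)
```

“groupby().head()” keeps the original row order, so the two versions match only when the table is already sorted by rank, as it is after the original “sort_values()” call.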

Visualization of the Most Used Words in the Titles for the First 10 Results

Below, you will see how to visualize the most used words within the title tags for the top 10 results with Plotly Express.

fig = px.bar(united_rank_df, x="count", y="word", orientation="h", width=1100, height=2000, color="rank", template="plotly_white", facet_row="rank", labels={"word":"Words", "count":"Count for the Words in the Title"}, title="Words and Their Count per Ranking")

fig.update_layout(coloraxis_showscale=False)

fig.update_yaxes(matches=None, categoryorder="total ascending")

We have created a bar chart with the “px.bar()” method and used “orientation="h"” for horizontal bars. We have set the color scheme, the width and height, and “facet_row” as “rank” so that the data for every ranking is visualized in a separate panel. We have used “update_layout()” to hide the color scale, and “update_yaxes()” with “matches=None” so that every panel shows its own words, ordered by their total count.

We see that the most important and general words appear more often in the titles of the first three ranks, while stop words and separators appear more often in the last seven ranks. To see this change more clearly, we can check the position of the words within the title tags.

First 4 Words in the Title Tags according to Their Rankings

The beginning section of a title and its end section can differ from each other. Thus, we can apply the same method to only one part of the title and again create ten different variables, one for each ranking, to hold the data. Below, you will see how we extract the first four words of every title.

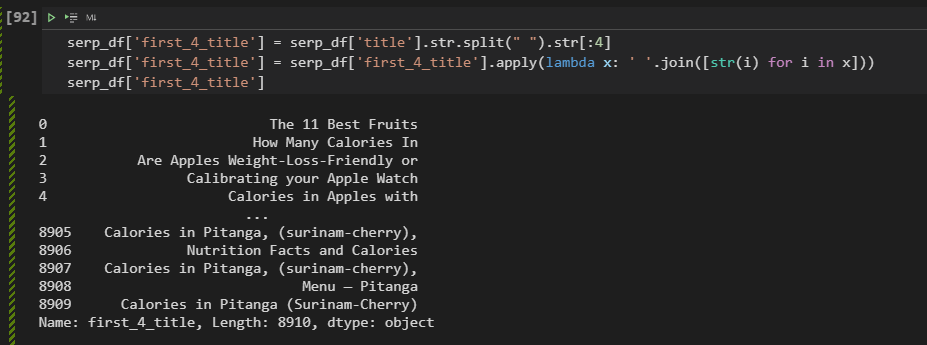

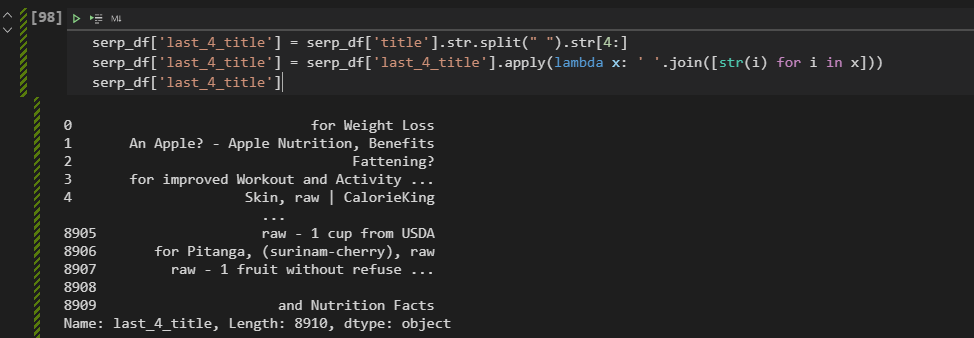

serp_df['first_4_title'] = serp_df['title'].str.split(" ").str[:4]

serp_df['first_4_title'] = serp_df['first_4_title'].apply(lambda x: ' '.join([str(i) for i in x]))

serp_df['first_4_title']

We have taken the titles of every landing page on the SERP and used the “str” accessor with the “split” method to choose the first four words of each title. Then, we have used a lambda function with the “join()” method and a list comprehension to turn these words back into a string, so that the new column contains the first four words of every title. You can see the result below.
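The lambda with a list comprehension works for complete titles, but it raises a “TypeError” if a title is missing (NaN), because a float cannot be iterated. A shorter alternative, sketched below on hypothetical titles, uses pandas’ “.str.join()”, which joins each list and simply returns NaN for missing values. Note that “.str.split()” without an argument splits on any whitespace, so repeated spaces do not create empty words.

```python
import numpy as np
import pandas as pd

serp_df = pd.DataFrame({'title': [
    'Health Benefits of Apples and Pears',
    'Fruit Nutrition Facts',
    np.nan,  # a missing title
]})

# Split on whitespace, keep the first four words, and join them back together
serp_df['first_4_title'] = serp_df['title'].str.split().str[:4].str.join(' ')
print(serp_df)
```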

Taking Frequencies of the Most Used Words in Titles with Their Rankings

After taking the first four words of the title tags of the landing pages, we can check the frequency of these words according to the ranking of their landing pages as below.

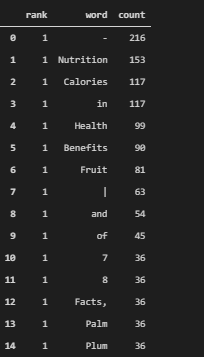

serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==1')[:15]

The methodology here is the same as the one before. We split the first four words of each title, put them into a data frame with the “to_frame()” method, and add the ranking and count columns with the “assign()” method. Then, we explode the words into separate rows, group the data frame by the “rank” and “first_4_title” values, and count them. Finally, we change the column names, reset the index, and sort the values.
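Since the same chain is reused for the “title” and “first_4_title” columns and for every rank, it can be wrapped in a small helper instead of writing one variable per rank by hand. The function name “top_words_by_rank” and its parameters are hypothetical, introduced here for illustration; the body follows the original chain, with a boolean filter in place of “query()”.

```python
import pandas as pd

def top_words_by_rank(df, column, position, n=15):
    """Return the n most frequent words in `column` for one ranking position.

    Hypothetical helper that mirrors the tutorial's chain: split, explode,
    count per (rank, word), sort, then keep one rank.
    """
    return (
        df[column].str.split(' ')
        .to_frame()
        .assign(rank=df['rank'], count=1)
        .explode(column)
        .groupby(['rank', column]).count()
        .reset_index()
        .rename(columns={column: 'word'})
        .sort_values(['rank', 'count'], ascending=[True, False])
        .reset_index(drop=True)
        .loc[lambda d: d['rank'] == position]
        .head(n)
    )

# Hypothetical data with a precomputed first_4_title column
serp_df = pd.DataFrame({
    'rank': [1, 1, 2],
    'first_4_title': ['Apple Health Benefits Guide', 'Apple Nutrition Facts', 'Banana Recipes'],
})

# Ranks 1 and 2 exist in the toy data; the tutorial would loop over 1 to 10
united_first_4_df = pd.concat(
    [top_words_by_rank(serp_df, 'first_4_title', r) for r in (1, 2)]
)
print(united_first_4_df)
```

Looping over ranks 1 to 10 on the real data would produce the same rows as the per-rank variables such as “rank1_first_4”.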

Below, you can see the result.

As we can see, the most used words in the beginning section of the title tags closely match the most used words in the full title tags of the first three results, likely because these words are more relevant to these semantic queries. Below, we will visualize the first four words of the title tags.
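To put a number on this overlap instead of judging it by eye, one option is the Jaccard similarity of the two top-word sets for a rank: the size of their intersection divided by the size of their union. This metric is our assumption, not something the original notebook computes, and the word lists below are hypothetical.

```python
def jaccard(a, b):
    """Share of words two collections have in common: |A ∩ B| / |A ∪ B|."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0

# Hypothetical top words for rank 1
full_title_top = ['Health', 'Benefits', 'Nutrition', 'Fruit', 'of']
first_4_top = ['Health', 'Benefits', 'Fruit', 'Apple']

score = jaccard(full_title_top, first_4_top)
print(round(score, 2))  # 3 shared words out of 6 distinct words -> 0.5
```

With the tutorial’s own variables, the same function could be called as “jaccard(rank1['word'], rank1_first_4['word'])” once “rank1_first_4” has been built below.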

Visualization of the First Four Words in the Titles with their Rankings

We can visualize the first four words of the title tags as before. We will create a variable such as “rank1_first_4” for every rank on the SERP, and then concatenate all of these variables into a single data frame for the visualization.

rank1_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==1')[:15]

rank2_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==2')[:15]

rank3_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==3')[:15]

rank4_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==4')[:15]

rank5_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==5')[:15]

rank6_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==6')[:15]

rank7_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==7')[:15]

rank8_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==8')[:15]

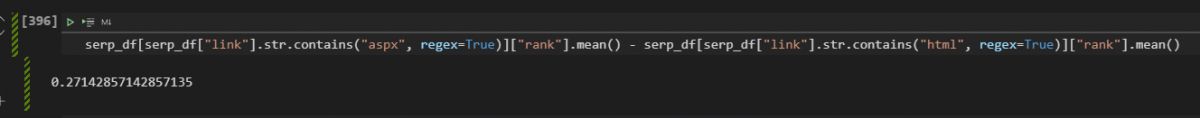

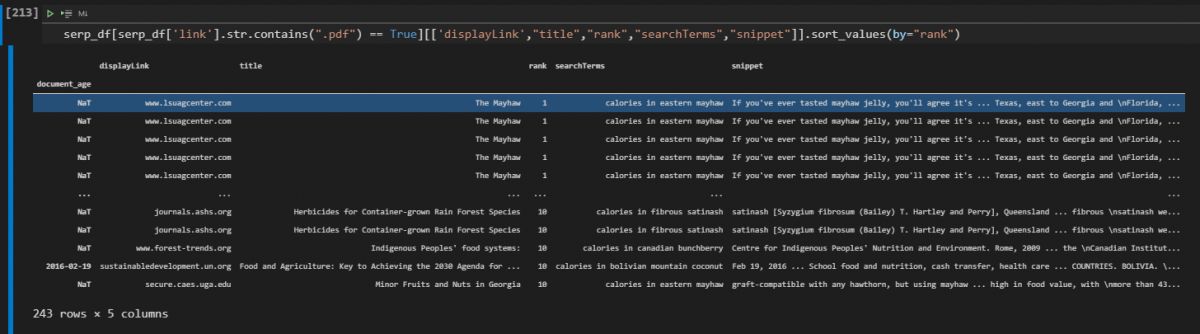

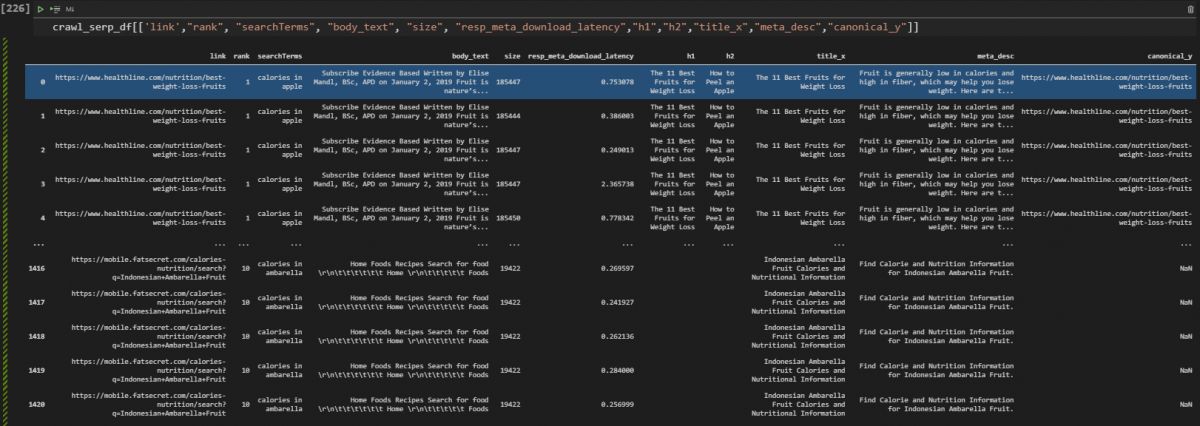

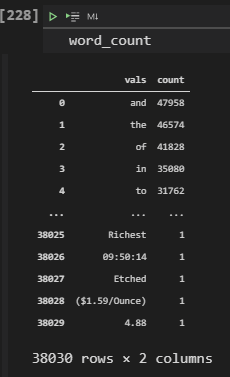

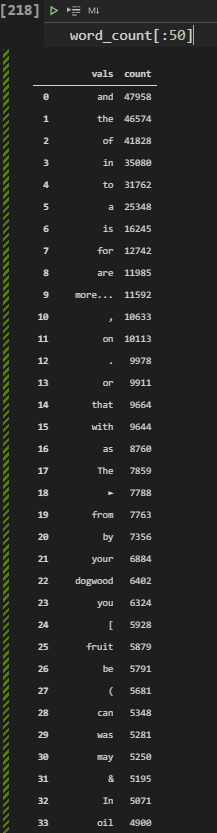

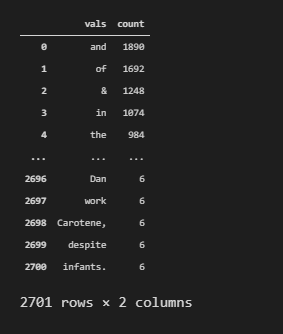

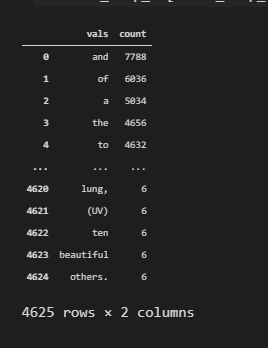

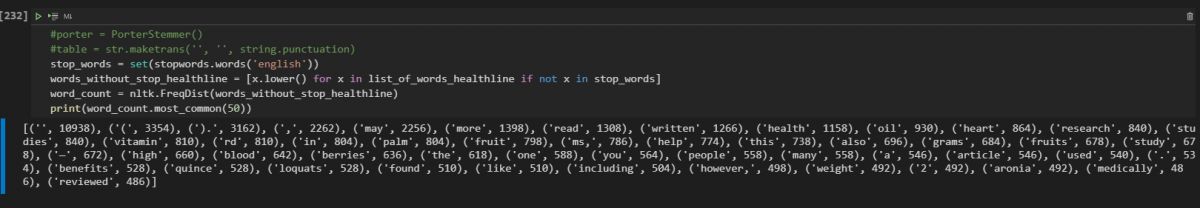

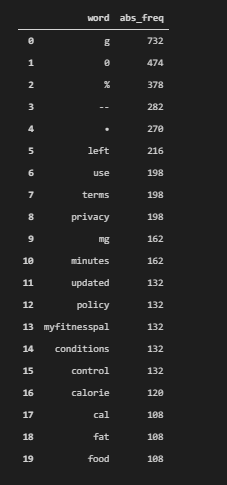

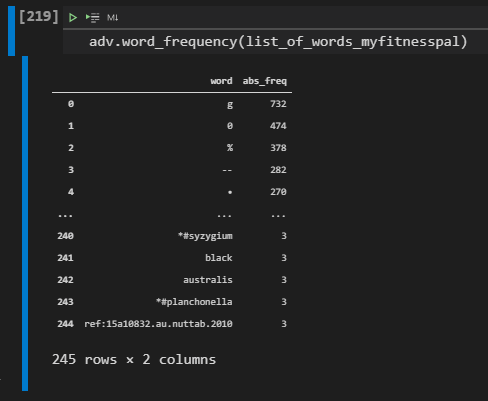

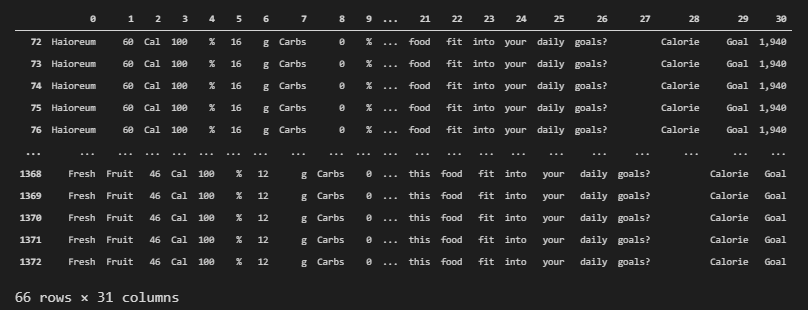

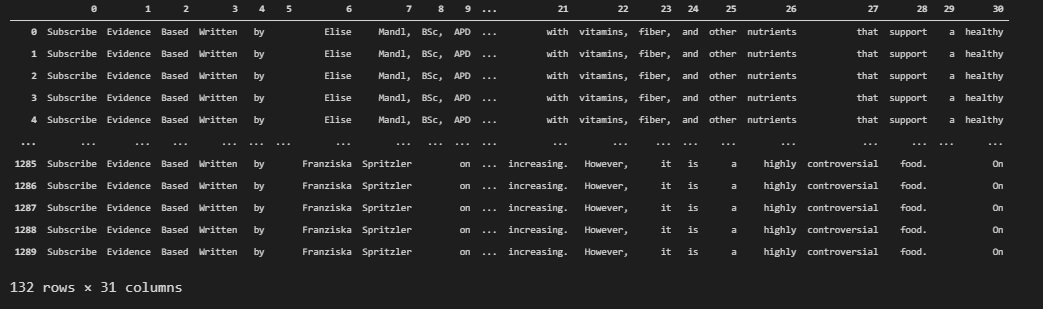

rank9_first_4 = serp_df['first_4_title'].str.split(' ').to_frame().assign(rank=serp_df['rank'], count=1).explode('first_4_title').groupby(['rank', 'first_4_title']).count().reset_index().rename(columns={'first_4_title':'word'}).sort_values(['rank', 'count'], ascending=[True,False]).reset_index(drop=True).query('rank==9')[:15]