OnCrawl is one of the most prestigious Technical SEO crawlers. It scans a website with a single click, reports technical SEO errors and requirements in a data-driven way, visualizes the insights SEO projects need, and provides live tracking through Data Studio. Read one of the most detailed Technical SEO crawler reviews ever written.

During this review, we will also cover Technical SEO and On-Page SEO elements: their definitions, importance, and functions, as seen through OnCrawl's visualizations and data extraction systems. We will run our review on one of the biggest insurance aggregators in Turkey, which lost visibility in the 6 May 2020 Core Algorithm Update, six months ago. Examining a website that lost a core algorithm update makes the review more educational in terms of Technical SEO, since core algorithm updates and Technical SEO are connected on many levels.

So, if you are not yet familiar with Technical SEO terms and Google's algorithmic decision trees, examining how OnCrawl crawls and interprets a site can be a good starting point for SEO enthusiasts of every level.

Let's begin our first crawl with an example and OnCrawl's detailed Crawl Configuration, which lets you adjust the crawl style and purpose to each unique SEO case.

How to Configure a Crawl in OnCrawl’s Crawl Configuration Settings and Options

OnCrawl offers many crawl configuration options, from crawl speed to log file integration and custom extraction.

OnCrawl's crawl settings and options include:

- Start URL

- Crawl Limits

- Crawl Bot

- Max Crawl Speed

- Crawler Behavior

- URL With Parameters

- Subdomains

- Sitemaps

- Crawler IP Address

- Virtual Robots.txt

- Crawl JS

- HTTP Headers

- DNS Override

- Authentication

- SEO Impact Report

- Ranking Report

- Backlink Report

- Crawl over crawl

- Scraping

- Data Ingestion

- Export to

Before going further, let's look at each crawl configuration option in more detail.

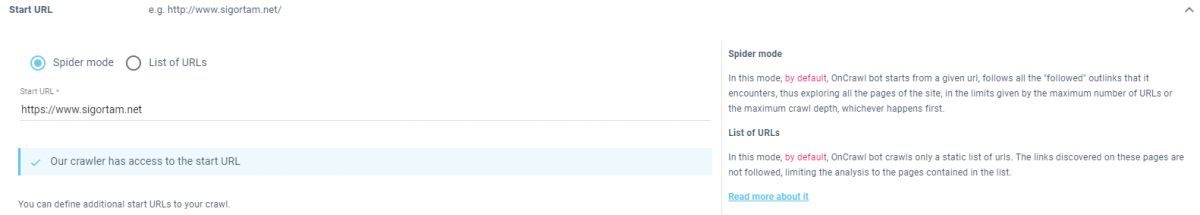

OnCrawl Crawl Configuration Options: Start URL

Start URL determines where the crawl begins. In the Start URL section, you can choose to crawl only a list of URLs from a domain, or to explore, extract, and crawl every URL on the domain.

Why is “Start URL” important?

Choosing which URLs to crawl and where to start lets you crawl for a specific purpose and saves time. For example, you can crawl only “product pages”, or only “blog” pages whose URLs contain a specific string.

OnCrawl Crawl Configuration Options: Crawl Limits

Crawl Limits sets the maximum number of URLs to crawl and their maximum distance from the homepage. The URL limit depends on your OnCrawl plan, but every plan has a large enough URL limit to manage an SEO project.

Why is “Crawl Limits” important?

Limiting URL depth and URL count saves time and makes targeted crawls possible. To get a quick summary of a category and its general problems, a limited number of URLs up to a certain depth is often enough.
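The sketch below makes the two limits concrete. It is a minimal breadth-first crawl in Python that stops at a maximum depth and a maximum URL count. It is not OnCrawl's implementation. The in-memory `site` dictionary stands in for fetching and parsing real pages, and `limited_crawl` is a hypothetical helper name.

```python
from collections import deque


def limited_crawl(links, start, max_depth, max_urls):
    """Breadth-first crawl of an in-memory link graph.

    links maps each URL to the URLs it links to (a stand-in for fetching
    and parsing real pages). Returns {url: click_depth} for at most
    max_urls URLs, none deeper than max_depth.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue and len(depths) < max_urls:
        url = queue.popleft()
        if depths[url] >= max_depth:
            continue  # at the depth limit: keep the URL but don't expand it
        for target in links.get(url, []):
            if target not in depths and len(depths) < max_urls:
                depths[target] = depths[url] + 1
                queue.append(target)
    return depths


# Hypothetical site structure for illustration.
site = {
    "/": ["/car-insurance/", "/travel-insurance/"],
    "/car-insurance/": ["/car-insurance/quote/"],
    "/car-insurance/quote/": ["/car-insurance/quote/step-2/"],
}
print(limited_crawl(site, "/", max_depth=2, max_urls=100))
```

Breadth-first order matters here. URLs are discovered level by level, so a URL limit always keeps the shallowest pages first.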

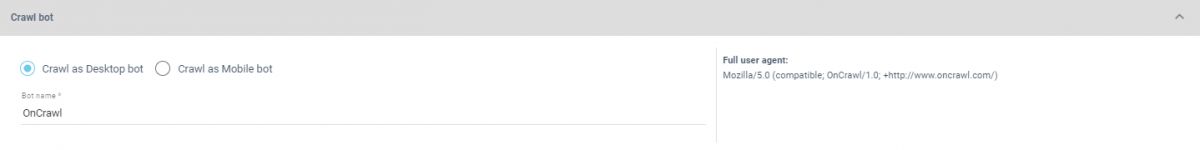

OnCrawl Crawl Configuration Options: Crawl Bots

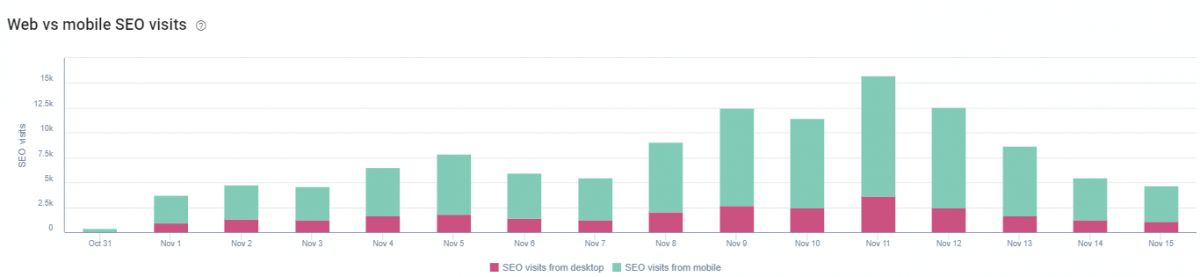

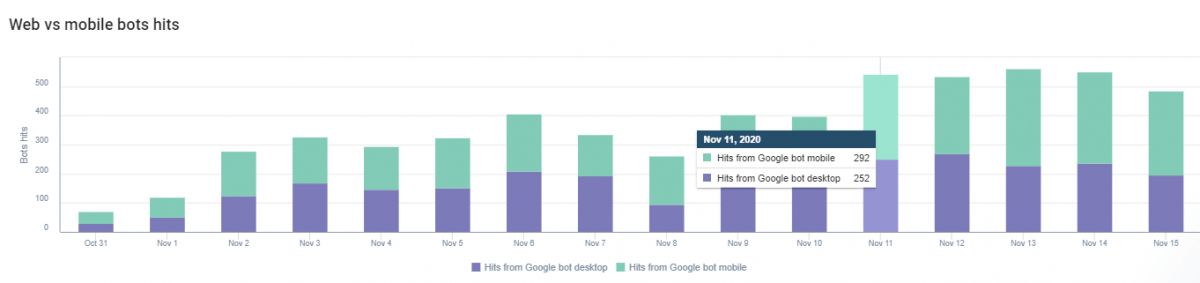

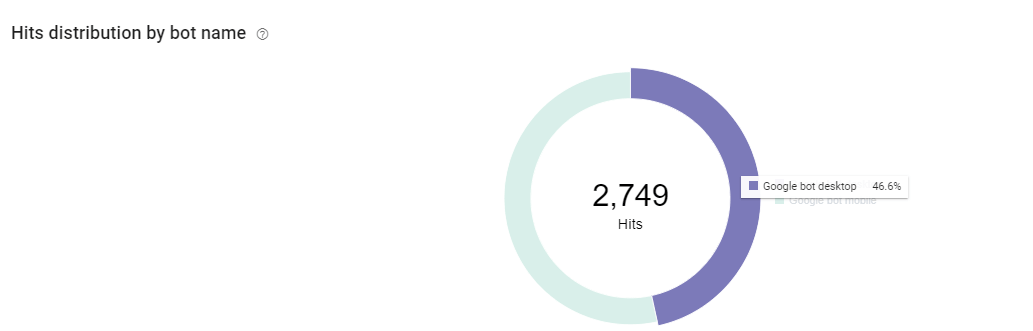

Crawl Bots determines which device type the crawler emulates. Google has announced that from March 2021 it will use “mobile-only indexing” instead of “mobile-first indexing”. That makes the “Crawl as Mobile Bot” option especially important for Technical SEO.

On the right side of the image, you can see the user agent that OnCrawl will use while crawling the URL list or domain you have chosen.

Why is “Crawl Bots” important?

The viewport changes with the device type. Having both desktop and mobile bot options matters because internal links, page speed, status codes, and the amount of content can differ between devices. Being able to see and compare these device-based differences is an advantage for SEO.

To make log analysis easier, you can also change the bot name in the user agent.

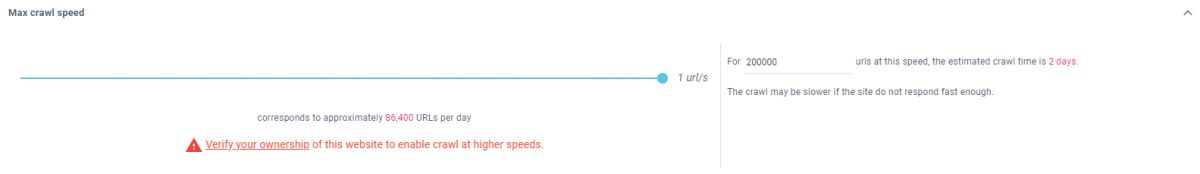

OnCrawl Crawl Configuration Options: Max Crawl Speed

Max Crawl Speed is a relatively rare configuration option for a Technical SEO crawler. Most SEO crawlers pay little attention to the website's server capacity, but OnCrawl tries to crawl one URL per second. It also has a calculator that estimates how long crawling a given number of URLs will take.

To crawl faster, you need to verify that you own the domain, so that you take responsibility for the server's load during the crawl.

You can also slow OnCrawl down to one URL every 5 seconds. A fast crawler is valuable, but so is a responsible one.

Why is “Max Crawl Speed” important?

Max Crawl Speed helps protect the server so it can keep responding to real users. Googlebot has a similar crawl rate setting in Google Search Console. Bing Webmaster Tools lets you set Bingbot's crawl rate for different times of the day.
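The arithmetic behind such a calculator can be sketched as the URL count multiplied by the delay per URL. This is a naive, assumption-laden estimate: it ignores server response time, retries, and parallel fetching. `estimate_crawl_duration` is a hypothetical name, not part of OnCrawl.

```python
from datetime import timedelta


def estimate_crawl_duration(url_count, seconds_per_url):
    """Naive estimate: URLs x delay, ignoring response time, retries, parallelism."""
    return timedelta(seconds=url_count * seconds_per_url)


print(estimate_crawl_duration(3000, 1))  # one URL per second
print(estimate_crawl_duration(3000, 5))  # one URL every 5 seconds
```

At one URL per second, 3,000 URLs take about 50 minutes. At one URL every five seconds, the same crawl takes about four hours and ten minutes.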

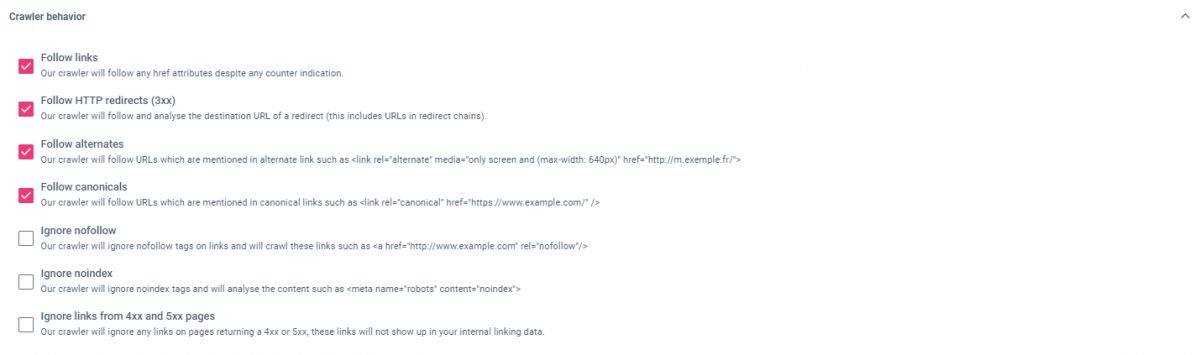

OnCrawl Crawl Configuration Options: Crawler Behavior

Crawler Behavior options determine the personality, purpose, and style of a crawl. Different crawler behaviors suit different types of technical SEO analysis.

OnCrawl's crawler behavior options are:

- Follow links

- Follow HTTP redirects (3xx)

- Follow Alternates

- Follow Canonicals

- Ignore Nofollow

- Ignore Noindex

- Ignore Links from 4xx and 5xx pages

These options are mostly self-explanatory, but here is a short explanation of each.

- Follow Links: extracts and follows every link in the page's rendered DOM.

- Follow HTTP Redirects (3xx): Googlebot stops following a redirect chain after the 6th 301 redirect. I am not sure what the limit is for OnCrawl's crawler. According to its documentation, OnCrawl follows every redirect, covering all 3xx HTTP status code variations.

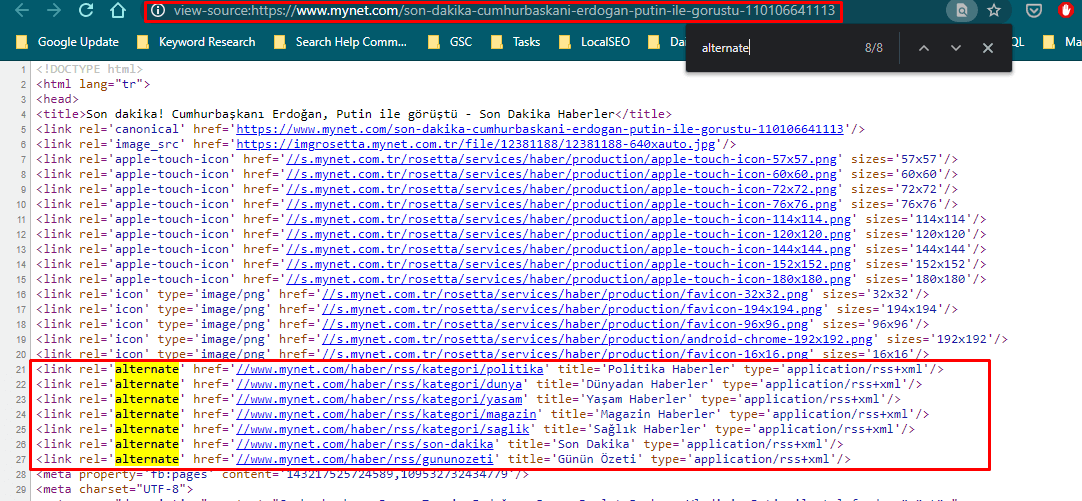

- Follow Alternates: every link with the “rel=’alternate’” attribute is also followed. Especially on older news and e-commerce sites, you may come across legacy link attributes such as “rel=’alternate’ media=’handheld’”. In such cases, OnCrawl can surface links that a less experienced SEO might miss, which saves time.

- Follow Canonicals: OnCrawl's crawler follows every URL declared in a canonical tag.

- Ignore Nofollow: OnCrawl's crawler also crawls links that carry the “nofollow” attribute. Google now treats “nofollow” as a hint rather than a directive, so Googlebot may still follow nofollowed internal links. Some websites still use “nofollow” on internal links, so this option helps you find those links and the pages that contain them.

- Ignore Noindex: OnCrawl's crawler follows and crawls every noindexed page and labels it as such. This matters because noindexed pages are low priority for Googlebot, which doesn't even render JavaScript on them. If important internal links point to noindexed pages, this option lets you find them.

- Ignore Links from 4xx and 5xx Pages: OnCrawl's crawler ignores links found on those pages when building the crawl output. This matters because 4xx pages and some 5xx pages can distort the output data. Many 4xx pages are created by CMS errors, site migrations, and similar problems. This option is necessary for keeping the data about the site's valid pages clean.
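The redirect-following behavior can be sketched in Python as follows. This is a hypothetical helper, not OnCrawl's or Googlebot's code. It walks a `{source: target}` redirect map, stops after `max_hops` hops, and reports loops. The default of 5 hops is an arbitrary assumption.

```python
def resolve_redirects(start, redirects, max_hops=5):
    """Follow a redirect chain through a {source_url: target_url} map.

    Returns (last_url, chain, status), where status is "ok", "loop",
    or "too_many_hops". max_hops=5 is an arbitrary default.
    """
    chain = [start]
    url = start
    while url in redirects:
        if len(chain) - 1 >= max_hops:
            return url, chain, "too_many_hops"  # still redirecting after max_hops hops
        url = redirects[url]
        chain.append(url)
        if url in chain[:-1]:
            return url, chain, "loop"  # the chain revisits a URL
    return url, chain, "ok"


# Hypothetical chain: HTTP -> HTTPS -> trailing slash.
redirects = {
    "http://example.com/a": "https://example.com/a",
    "https://example.com/a": "https://example.com/a/",
}
print(resolve_redirects("http://example.com/a", redirects))
```

Recording the full chain, not just the final URL, is what lets an audit flag internal links that should point directly at the final destination.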

OnCrawl Crawl Configuration Options: URL With Parameters

URL parameters are additions to URLs that serve tasks such as searching, filtering, and grouping data, for example “?s=term” or “?size=L”. They can group products, services, and blog posts. They also help users find what they seek more easily through the search bar or faceted navigation.

Why are URL Parameters important for SEO-based Website Crawling?

Extracting, grouping, and filtering URL parameters helps an SEO understand the character and function of web pages. Imagine comparing two websites' parameters: which one has more parameters for “shoe sizes”, “shoe prices”, or “shoe brands”?

Unnecessary URL parameters can also pollute the crawl output. You may also want to learn how to categorize and interpret URL parameters with Python.
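Below is a minimal sketch of this kind of parameter analysis, using only Python's standard library. `parameter_profile` is a hypothetical name. It counts how many URLs use each query-parameter name, which is a first step toward grouping and filtering them.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def parameter_profile(urls):
    """Count how many URLs use each query-parameter name."""
    counts = Counter()
    for url in urls:
        query = urlsplit(url).query
        # keep_blank_values=True so "?s=" still counts as a use of "s";
        # the set makes a name count once per URL even if it repeats.
        names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
        counts.update(names)
    return counts


crawled = [
    "https://example.com/shoes?size=L&color=red",
    "https://example.com/shoes?size=M",
    "https://example.com/search?s=term",
    "https://example.com/shoes",
]
print(parameter_profile(crawled))
```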

OnCrawl Crawl Configuration Options: Subdomains

The Subdomains option lets the crawler crawl the subdomains of the target domain. A website can have multiple subdomains that aren't obvious at a glance. OnCrawl's crawler can find them and include their overall performance in the output data.

Why are “Subdomains” important?

In my 5 years of SEO experience, I have seen clients forget about their own subdomains. I also know that some “shady” people create subdomains to link to other websites from a domain without the owner's permission. A subdomain can also affect the main domain's crawl budget, crawl quota, or overall organic search performance. So, for Technical SEO, it is important to discover subdomains and group them by purpose.

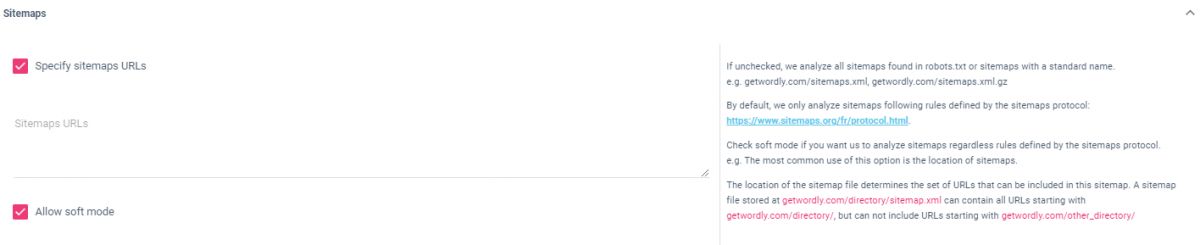

OnCrawl Crawl Configuration Options: Sitemaps

A sitemap is one of the most important elements of Technical SEO. Sitemaps list the URLs that are meant to be indexed by the search engine. A sitemap should contain only canonical URLs that return a 200 HTTP status code.

Why are “Sitemaps” important?

Like sitemaps, canonical tags are hints for Googlebot, not commands. Using sitemaps and canonical tags consistently prevents mixed signals for the search engine's crawling, indexing, and ranking algorithms. So every Technical SEO should check whether the URLs in a sitemap return a 200 status code, are canonical, and are indexed.
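Part of this sitemap check can be automated. The sketch below makes two assumptions. The sitemap is a standard `urlset` in the sitemaps.org 0.9 namespace. The crawl export is shaped as `{url: {"status": ..., "canonical": ...}}`. The export shape and the function names are hypothetical. Indexing status is not covered, since it has to come from Google Search Console.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the <loc> values of a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def audit_sitemap(xml_text, crawl):
    """Flag sitemap URLs that are missing from the crawl, not 200, or not self-canonical.

    crawl is assumed to look like {url: {"status": int, "canonical": str}}.
    """
    problems = {}
    for url in sitemap_urls(xml_text):
        page = crawl.get(url)
        if page is None:
            problems[url] = "not found in crawl"
        elif page["status"] != 200:
            problems[url] = f"status {page['status']}"
        elif page["canonical"] != url:
            problems[url] = f"canonicalized to {page['canonical']}"
    return problems
```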

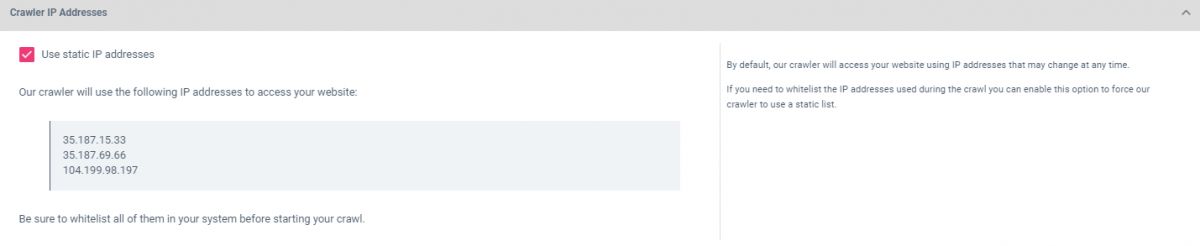

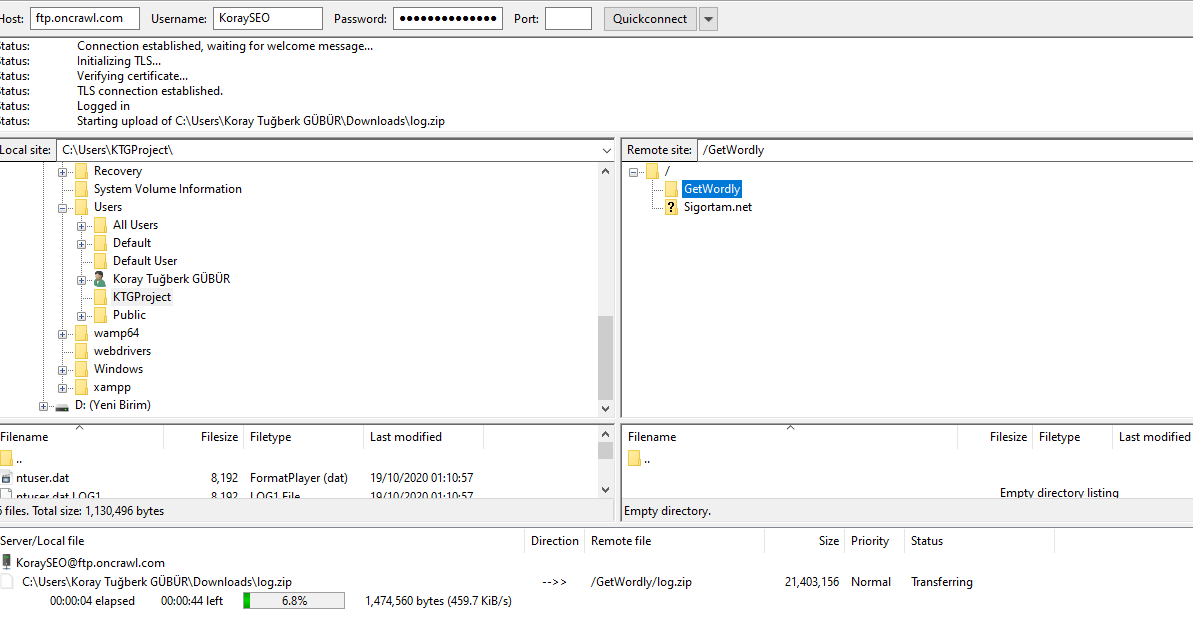

OnCrawl Crawl Configuration Options: Crawler IP Address

Crawler IP Address refers to the IP addresses that OnCrawl's crawler uses while crawling the target site. These IP addresses are:

- 35.187.15.33

- 35.187.69.66

- 104.199.98.197

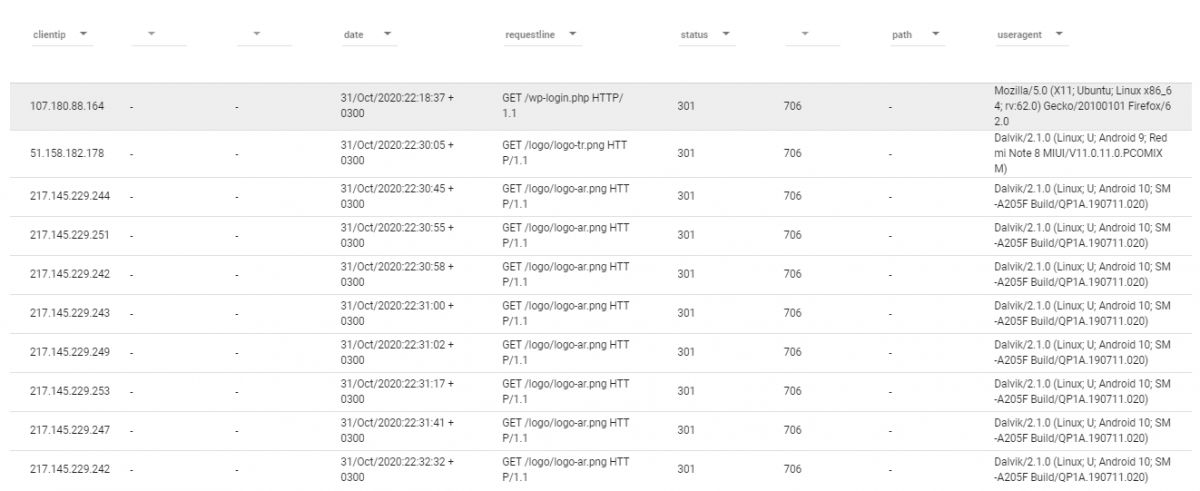

Why is “Crawler IP Address” important?

Knowing these IP addresses helps you identify OnCrawl's hits in your log files during log analysis. Some servers may also block these IP addresses as a security measure. Whitelisting them ensures the crawl produces accurate output.
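For log analysis, a minimal sketch that separates OnCrawl's hits from other traffic could look like the code below. It assumes the client IP is the first space-separated field of each log line, as in the common Apache and Nginx "combined" formats. It uses the three IP addresses listed above, which may change over time. `split_oncrawl_hits` is a hypothetical helper.

```python
import ipaddress

# IP addresses listed in this article; check OnCrawl's documentation for the current list.
ONCRAWL_IPS = {
    ipaddress.ip_address(ip)
    for ip in ("35.187.15.33", "35.187.69.66", "104.199.98.197")
}


def split_oncrawl_hits(log_lines):
    """Split log lines by whether the client IP (first field) belongs to OnCrawl."""
    oncrawl, others = [], []
    for line in log_lines:
        first_field = line.split(" ", 1)[0]
        try:
            ip = ipaddress.ip_address(first_field)
        except ValueError:
            continue  # skip malformed or empty lines
        (oncrawl if ip in ONCRAWL_IPS else others).append(line)
    return oncrawl, others
```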

OnCrawl Crawl Configuration Options: Virtual Robots.txt

Every SEO crawler is also a kind of “scraper”, and scraping a website without its owner's consent can be “unethical”. For this reason, some of OnCrawl's extra settings require verification, and Virtual Robots.txt is the first of these. Virtual Robots.txt lets you crawl web pages that the live robots.txt disallows. To use it, you must verify website ownership, as with the “Max Crawl Speed” option.

Why is “Virtual Robots.txt” Important?

In my experience, the “virtual robots.txt” crawler configuration is unique to OnCrawl. It matters because a Technical SEO may want to see the character, potential, and state of disallowed URLs. Robots.txt files can also specify a “crawl rate” and a “crawl delay”, and a virtual robots.txt lets you change these for OnCrawl.

Virtual Robots.txt can also restrict OnCrawl's crawler to a specific site section. This shortens crawl time and focuses the crawl report on a specific target. It is especially useful for international sites with sections such as “root-domain/tr/” and “root-domain/en/”.

As a related guideline, you may want to read the “How to verify test Robots.txt file with Python” guideline.
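Python's `urllib.robotparser` can illustrate the idea of testing URLs against an alternative, "virtual" rule set before a crawl. This is only a sketch, not how OnCrawl implements the feature. Both robots.txt bodies are made up. Python's parser uses simple prefix matching, and the first matching rule wins. That differs from Google's wildcard and longest-match handling.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt):
    """Parse a robots.txt body from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# Hypothetical live robots.txt: internal search is blocked for every bot.
live = build_parser("""
User-agent: *
Disallow: /search
Crawl-delay: 10
""")

# Hypothetical virtual robots.txt for an audit: allow /search, skip /en/,
# and use a shorter delay.
virtual = build_parser("""
User-agent: *
Disallow: /en/
Crawl-delay: 1
""")

for url in ("https://example.com/search/shoes", "https://example.com/en/page"):
    print(url, live.can_fetch("OnCrawl", url), virtual.can_fetch("OnCrawl", url))
print(live.crawl_delay("OnCrawl"), virtual.crawl_delay("OnCrawl"))
```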

OnCrawl Crawl Configuration Options: Crawl Javascript

Until 2019, Googlebot rendered pages with an outdated renderer based on Chrome 41. As a result, content drawn by modern JavaScript, including links and images, often couldn't be seen by Googlebot unless you used “dynamic rendering”. Since May 2019, Googlebot has been evergreen: it renders JavaScript with an up-to-date version of Chromium. This setting determines whether JavaScript is rendered during the crawl.

Why is “Crawl Javascript” Important?

To see a page the way Googlebot does, you need an SEO crawler that can render JavaScript and measure its effect on the site. You can crawl a website first with JavaScript enabled and then with it disabled. Comparing the two shows the effect of JavaScript on page content, navigation, links, and crawl time.

This lets you estimate your “crawl budget” and how much your SEO performance depends on rendering (“rendering dependency”).

OnCrawl Crawl Configuration Options: HTTP Headers

HTTP headers are another important aspect of Search Engine Optimization. They can determine which variation of a page's content is fetched, and they also affect security and page loading time.

Why is “HTTP Headers” Important?

Being able to set custom request headers for a crawler is an advantage, and a rare one. With request headers, you can observe how a website behaves under different conditions. For example, you can send “cache-control” or “accept-encoding” request headers, check the “vary” response header, and measure the effect on the website's total crawl time.

OnCrawl Crawl Configuration Options: DNS Override

A DNS override lets you crawl different servers of the same website.

Why is “DNS Override” Important?

It matters because a website's content can differ between servers. Imagine a different robots.txt file on each of five servers, or some products and categories missing from some servers. A DNS override also lets you crawl the same website sections on servers in different locations.

OnCrawl Crawl Configuration Options: HTTP Authentication

The HTTP Authentication option lets OnCrawl's crawler crawl a protected pre-production version of a website, such as a test site or a redesigned version.

Why is HTTP Authentication important?

Sometimes it is useful to crawl a website as a logged-in user to scrape “stock information”, “prices”, “page speed”, and “recommended products”. Crawling a test site or a redesigned version of a website is also useful for SEO.

OnCrawl Crawl Configuration Options: SEO Impact Report

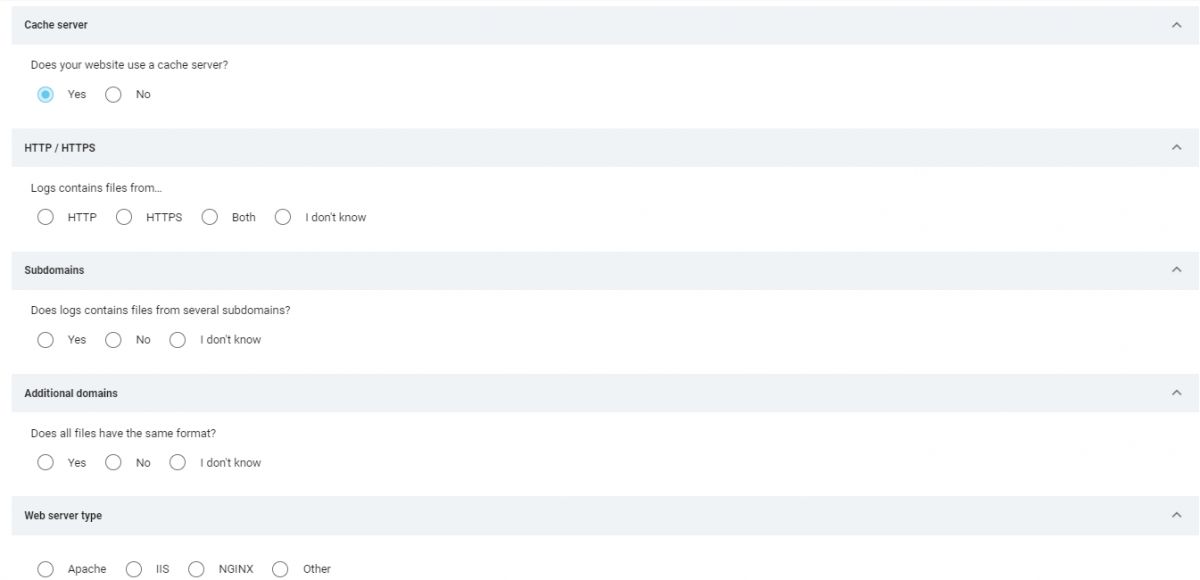

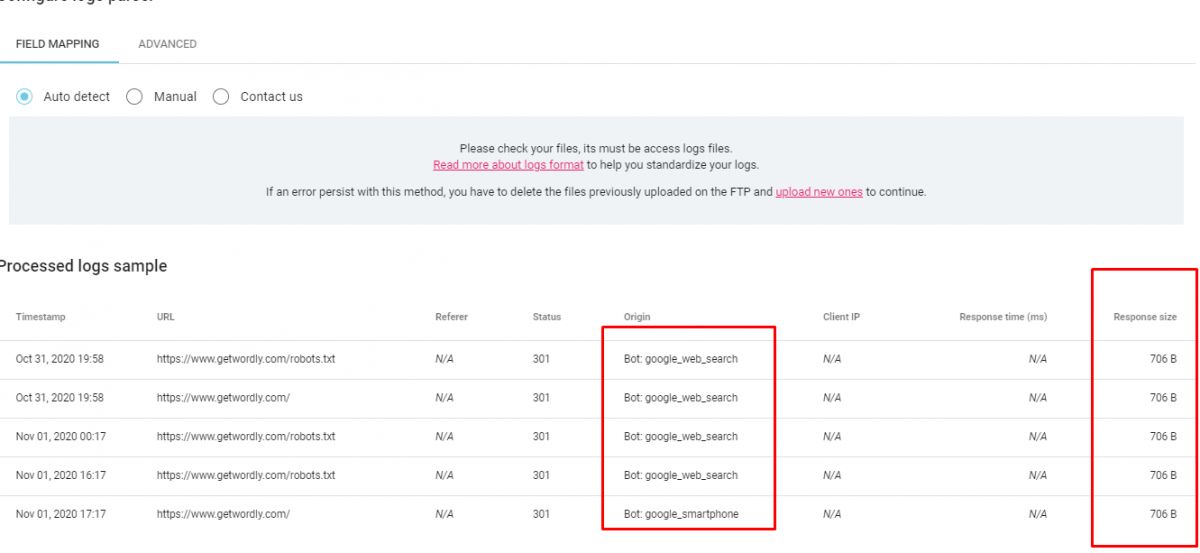

SEO Impact Report is another rare and important feature of OnCrawl's crawling technology and SEO analysis. Crawling a website is important, but it becomes far more useful when the crawl is combined with Google Analytics, Googlebot hits from log files, AT Internet Analytics, and Adobe Analytics data.

Why is SEO Impact Report Important?

Combining data sources is an important task for a crawler. Examples include pages with zero clicks in a month, pages with zero Googlebot hits, heavily linked pages with little organic traffic potential, and pages with a high bounce rate and slow page speed. Every such data combination and correlation related to SEO and UX can be extracted via OnCrawl's “SEO Impact Report”.

A purely “crawl-based” audit sees a website only through its own on-site elements. The SEO Impact Report lets us bring in many more data points to understand a website's SEO situation.
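Here is a minimal sketch of what combining data sources means in practice. It joins crawl data with Googlebot hit counts from logs and organic click counts from an analytics export, all keyed by URL. The input shapes, the `impact_flags` name, and the `min_inlinks=50` threshold are assumptions for the example.

```python
def impact_flags(crawl, googlebot_hits, organic_clicks, min_inlinks=50):
    """Join crawl data with log and analytics counts keyed by URL.

    crawl: {url: {"inlinks": int}}; googlebot_hits / organic_clicks: {url: int}.
    URLs missing from the log or analytics data count as zero.
    """
    flags = {}
    for url, page in crawl.items():
        issues = []
        if googlebot_hits.get(url, 0) == 0:
            issues.append("no Googlebot hits")
        if organic_clicks.get(url, 0) == 0:
            issues.append("no organic clicks")
            if page["inlinks"] >= min_inlinks:
                issues.append("many inlinks, no clicks")
        if issues:
            flags[url] = issues
    return flags
```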

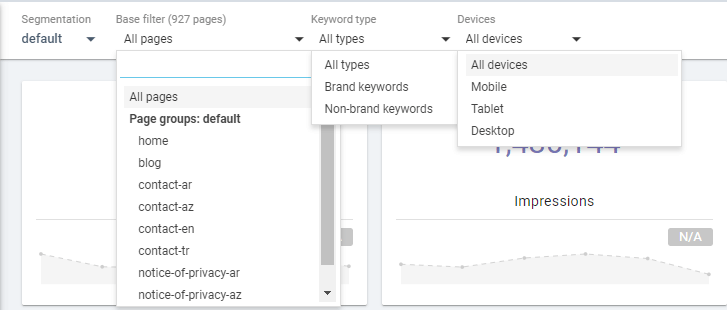

OnCrawl Crawl Configuration Options: Ranking Report

Similar to the SEO Impact Report, the Ranking Report combines Google Search Console data with the crawl. It lets you filter by website and website category, identify branded queries, and filter by country.

Why is the “Ranking Report” Important?

It is useful to combine several data sources: crawl data, log file analysis, structured data (and its effect on CTR), Google Analytics, and Google Search Console. These can be segmented by “branded” queries, target geographies, directories, or subdomains. The Ranking Report option makes several tasks more efficient: studying the decision trees and semantic ranking behavior of Google's algorithms, building a more user-friendly website, and comparing your website with competitors. In the Ranking Report, you can look for correlations, possible causation, and signals related to organic search algorithms. For instance, the effect of structured data can vary by page type or query type. OnCrawl's more detailed view can help you decide whether to change it.

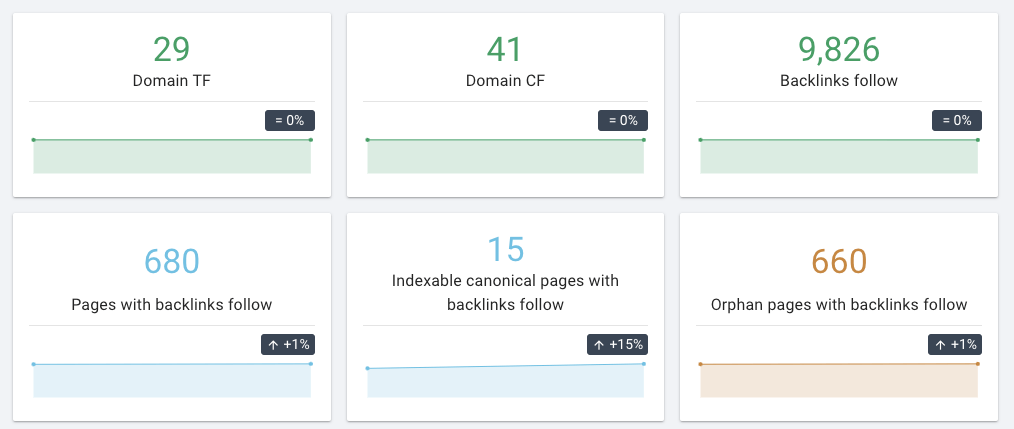

OnCrawl Crawl Configuration Options: Backlink Report

OnCrawl can combine a website's off-page profile with its internal technical and non-technical aspects to produce more SEO insights. For now, it works with Majestic's API, so if you have a Majestic subscription, you can take advantage of this rare option.

Why is the “Backlink Report” Important?

The Backlink Report combines a website's off-page and branding profile with its internal SEO elements and SEO performance data. This makes it easier to understand the website's situation holistically.

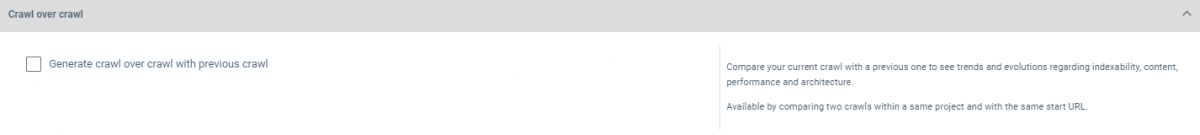

OnCrawl Crawl Configuration Options: Crawl over Crawl

The “Crawl over Crawl” option sets a past crawl as the baseline version of the website, so the new crawl can be compared against it for more insight.

Why is the “Crawl over Crawl” Important?

This option is invaluable for seeing differences, improvements, and declines across both technical and non-technical SEO factors. For example, you can see whether a page is crawled more often by Googlebot after it gets a link from the homepage. You can also check how a page's keyword profile changes after you update its meta title and the anchor texts of links pointing to it. It is also a rare feature among SEO crawlers.

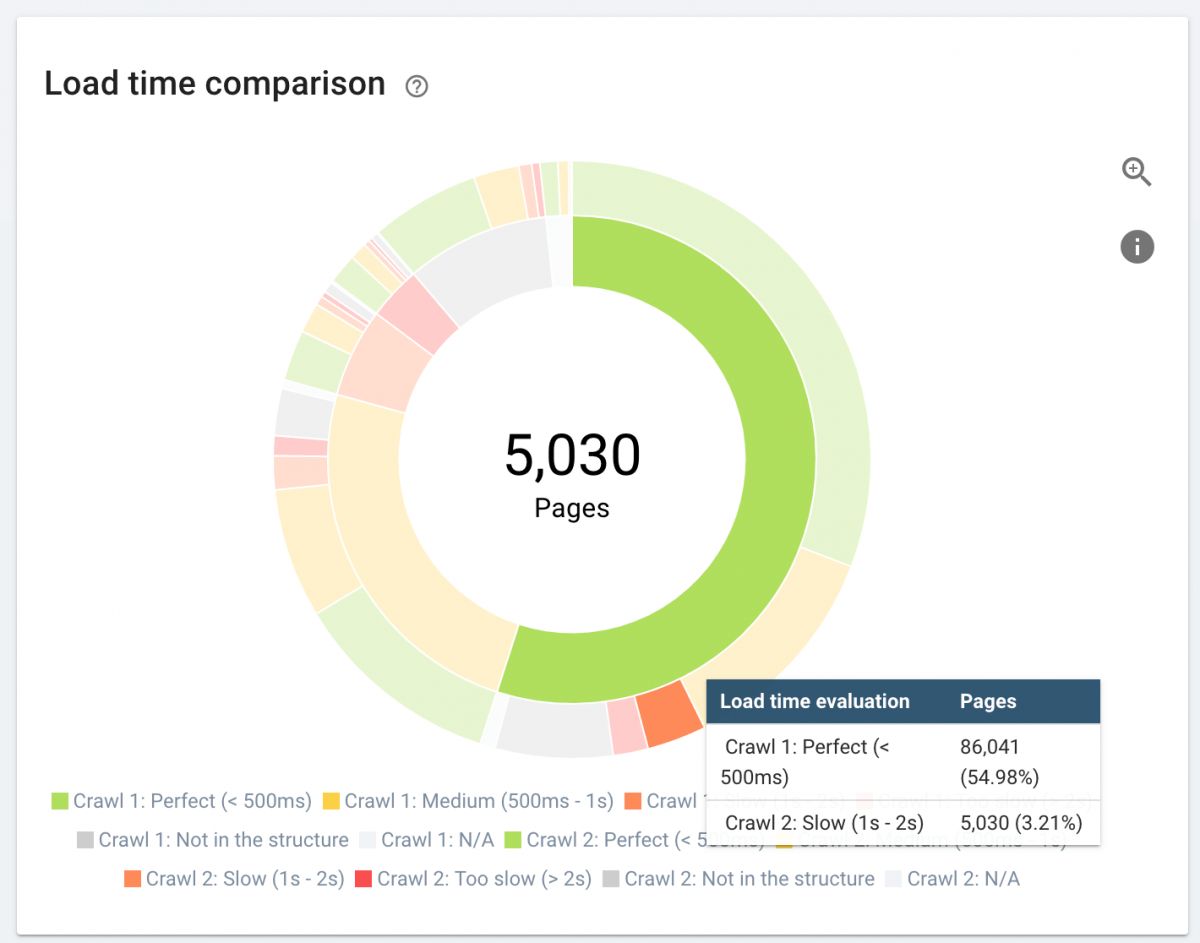

Below is an example of a “Crawl over Crawl” comparison for “load time evaluation”. It shows how the load time distribution and performance of web pages changed between crawl reports.

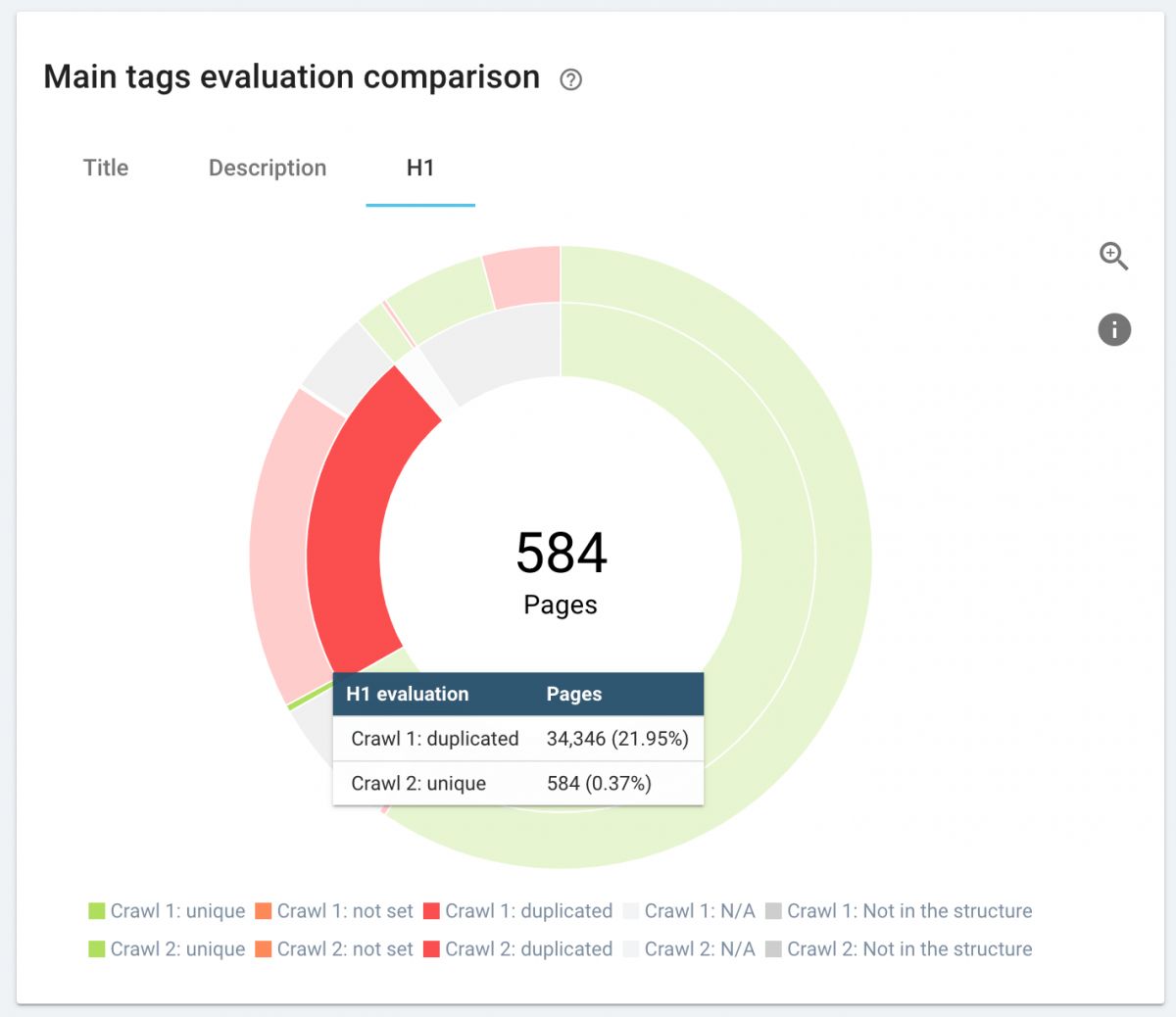

Below is a similar example for “Missing” and “Duplicated” H1 tags.

OnCrawl shows the differences between crawls across every aspect of the website. Webmasters can then understand what they changed and why Google's preferences may have shifted.
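Conceptually, a crawl-over-crawl comparison is a diff between two snapshots keyed by URL. The sketch below shows that idea with hypothetical snapshot dictionaries and field names. It is not OnCrawl's comparison logic.

```python
def compare_crawls(previous, current, fields=("status", "title", "h1")):
    """Diff two crawl snapshots of the form {url: {field: value}}.

    Returns (added_urls, removed_urls, {url: {field: (old, new)}}).
    """
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    changed = {}
    for url in sorted(previous.keys() & current.keys()):
        diffs = {
            field: (previous[url].get(field), current[url].get(field))
            for field in fields
            if previous[url].get(field) != current[url].get(field)
        }
        if diffs:
            changed[url] = diffs
    return added, removed, changed
```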

OnCrawl Crawl Configuration Options: Scraping

Web scraping means extracting information from web pages, here via CSS and XPath selectors. You can extract links, text, image source URLs, or any other component of a web page as a custom metric.

If you want to crawl a website with Python, I recommend reading our related guides.

Why is “Scraping” Important?

Custom scraping makes several analyses possible. You can create a custom metric for ranking performance or check stock information. You can also compare prices, categories, filters, or “comment and review rate” differences between e-commerce sites.

As Rebecca Berbel reminded me, it also offers an important opportunity to check the “publish and update” dates of articles and blog posts.
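OnCrawl's scraping rules are defined with selectors inside the tool. As a stand-in, the sketch below uses Python's standard `html.parser` to pull publish and update dates. It assumes the site exposes them as Open Graph `article:published_time` and `article:modified_time` meta tags, which not every site does. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class ArticleDateParser(HTMLParser):
    """Collect article:published_time / article:modified_time meta values."""

    WANTED = {"article:published_time", "article:modified_time"}

    def __init__(self):
        super().__init__()
        self.dates = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)  # attribute names arrive lowercased
        prop = attributes.get("property")
        if prop in self.WANTED and attributes.get("content"):
            self.dates[prop] = attributes["content"]


def extract_article_dates(html):
    parser = ArticleDateParser()
    parser.feed(html)
    parser.close()
    return parser.dates
```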

OnCrawl Crawl Configuration Options: Data Ingestion

Data Ingestion lets you use JSON and CSV files to integrate data from Google Search Console, Google Analytics, Adobe Analytics, AT Internet Analytics, and log files.

You can also add data from SEMRush, Ahrefs, Moz, or any other source to your SEO Scan and Analysis project so that you can create your own SEO Metrics and Analysis Template via OnCrawl’s Data Ingestion Option.

Why is “Data Ingestion” Important?

Data Ingestion can improve privacy, since only the information you choose is shared with the tool. It also makes it possible to audit sites you have no GSC, GA, or Adobe Analytics user access to, using CSV or JSON files in a ZIP archive.

And since you can add data from any source, you can build more “creative” SEO analyses based on your own hypotheses. You can then compare them to see which gives the most consistent results for the project.
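Below is a minimal sketch of the underlying idea: merging an external CSV into crawl rows by URL. The `merge_csv_metrics` helper and the sample columns are hypothetical, and this is not OnCrawl's ingestion format. Values read from a CSV arrive as strings.

```python
import csv
import io


def merge_csv_metrics(crawl_rows, csv_text, key="url"):
    """Attach columns from an external CSV to crawl rows that share the same key."""
    extra = {row[key]: row for row in csv.DictReader(io.StringIO(csv_text))}
    merged = []
    for row in crawl_rows:
        combined = dict(row)
        for column, value in extra.get(row[key], {}).items():
            if column != key:
                combined[column] = value  # CSV values stay strings
        merged.append(combined)
    return merged


rows = merge_csv_metrics(
    [{"url": "/a", "status": 200}, {"url": "/b", "status": 404}],
    "url,clicks,referring_domains\n/a,120,14\n/c,5,1\n",
)
print(rows)
```

Rows from the CSV with no matching crawled URL (here `/c`) are simply ignored. Crawled URLs with no CSV data keep their original fields.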

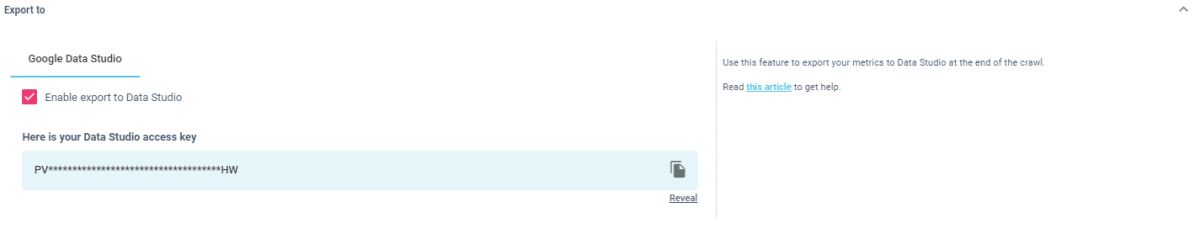

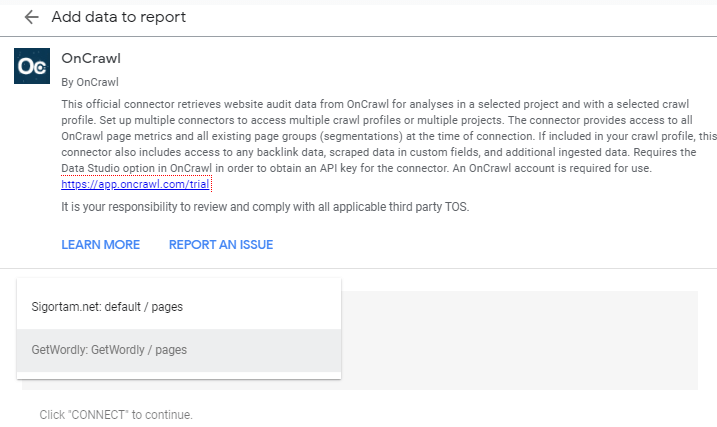

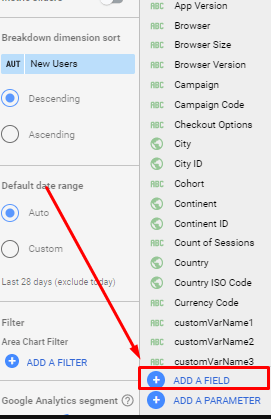

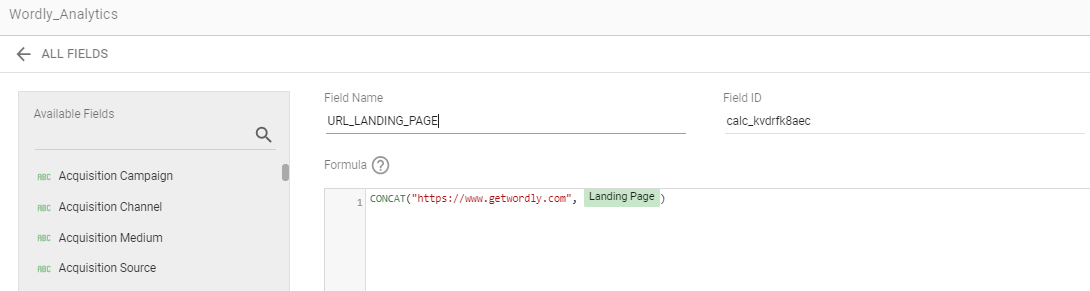

OnCrawl Crawl Configuration Options: Export to

The “Export to” option determines whether the crawl output is exported to Data Studio.

Why is “Export to” Important?

“Export to” is the last OnCrawl configuration option. It lets you use OnCrawl's Data Studio Connector to build well-visualized charts of SEO insights to share with clients. A paginated, customizable Data Studio report that can be combined with other data sources is far more effective than a PDF file.

These are all of OnCrawl's crawl configuration options. We have walked through each option and its benefits, along with some important notes about Googlebot and Technical SEO audits.

Now, let’s see how this information will be gathered and visualized by OnCrawl.

What are the Insights and Sections of an Example Crawl of OnCrawl for a Technical SEO?

When the crawl finishes, the main project screen shows an output summary as above. It includes the crawl duration, the number of URLs crawled, whether the data was exported to Data Studio, and a “SHOW ANALYSIS” button.

The OnCrawl crawl report has three main sections:

- Crawl Report

- Ranking Report

- Tools

During the review, we will focus on these three main sections and their sub-sections. We will try to understand why each one is important and why it belongs in an SEO report.

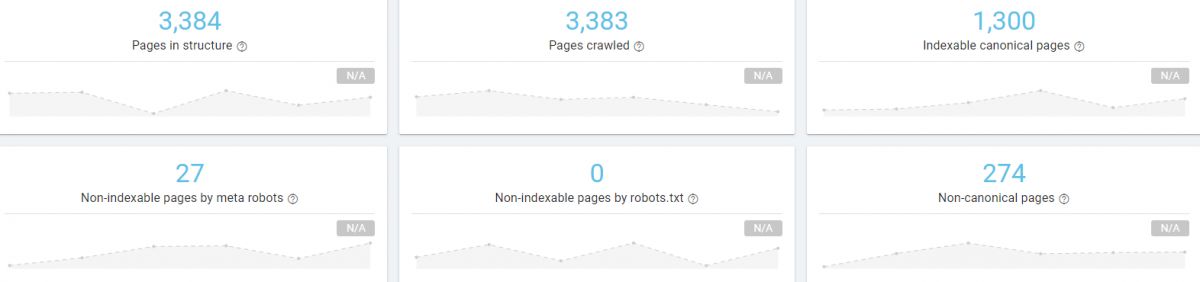

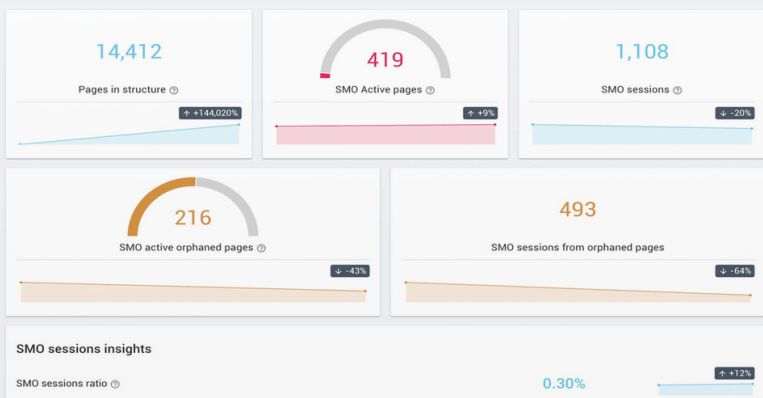

Summary of Crawl Report

The summary is the first section of OnCrawl's crawl output and gives the general data we need. You will see an example below.

Here is a quick summary of our crawl output:

- We crawled more than 3,000 pages.

- 1,300 of these URLs were canonical URLs, meaning the URLs we want indexed.

- 27 pages are “non-indexable” because of meta robots directives.

- 274 pages are “non-canonical”, which might mean we have some unnecessary pages with wrong canonicals.

- We didn’t encounter any URLs that can’t be indexed because of robots.txt.

When you hover over a section here, OnCrawl invites you to launch a new crawl to see whether this metric has changed, so you can extract more insights.

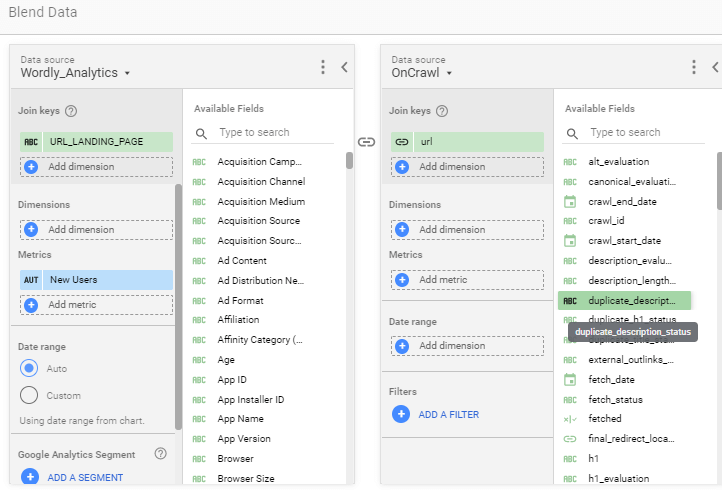

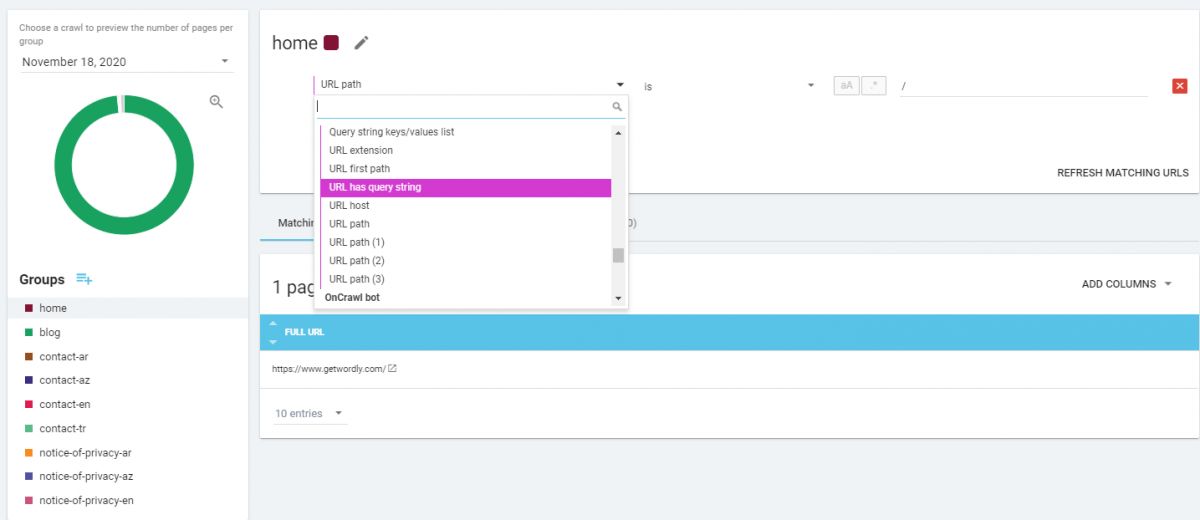

Grouping Pages According to Their Content and Target

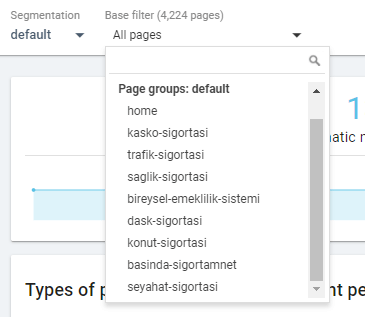

During the crawl, OnCrawl can distinguish web pages from each other and group them by content or purpose. This makes analyzing a website segment by segment very effective. One section of a website can perform well while another fails to satisfy users. A problem in one section can also harm the performance of other sections. So grouping pages and checking them semantically is important.

Below is the segmentation for our example crawl.

We have 9 different segments here. Based on their shared characteristics, these segments correspond to the website's biggest “folders”, which act as “categories”.

OnCrawl categorizes pages by URL structure rather than by breadcrumbs. Breadcrumbs often have incorrect internal link schemes, so I consider this a safer and more consistent methodology.
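Segmenting by URL folder can be sketched in a few lines. The function below assigns each URL to its first path folder when that folder is a known segment, and to "Homepage" or "Other" otherwise. The folder names in the sample are hypothetical, and OnCrawl's own segmentation rules are more configurable than this.

```python
from collections import Counter
from urllib.parse import urlsplit


def segment_by_folder(urls, known_segments):
    """Count URLs per first path folder; unknown folders go to "Other"."""
    counts = Counter()
    for url in urls:
        path = urlsplit(url).path.strip("/")
        if not path:
            counts["Homepage"] += 1
            continue
        folder = path.split("/")[0]
        counts[folder if folder in known_segments else "Other"] += 1
    return counts


urls = [
    "https://example.com/",
    "https://example.com/car-insurance/quote",
    "https://example.com/car-insurance/faq",
    "https://example.com/health-insurance/plans",
    "https://example.com/about",
]
print(segment_by_folder(urls, {"car-insurance", "health-insurance"}))
```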

According to OnCrawl, our page segmentation is as follows:

| Page Segment | Number of Pages |
|---|---|
| Homepage (Naturally) | 1 |
| Car Accident Insurance | 436 |
| Traffic Insurance | 139 |
| Health Insurance | 72 |
| Personal Pension | 89 |
| House Insurance | 42 |
| Press for the Brand | 0 |
| Earthquake Insurance | 31 |
| Travel Insurance | 53 |
| Other | 437 |

You can also export this data as a CSV or PNG and switch the chart to a logarithmic scale. OnCrawl has a detailed and flexible data extraction and export system for SEO crawl analysis, which we will cover in more detail in the following sections.

For more detail, see the Data Export section of OnCrawl's API Reference: http://developer.oncrawl.com/#Export-Queries

These export options are also available for every other chart in OnCrawl's SEO crawl report.

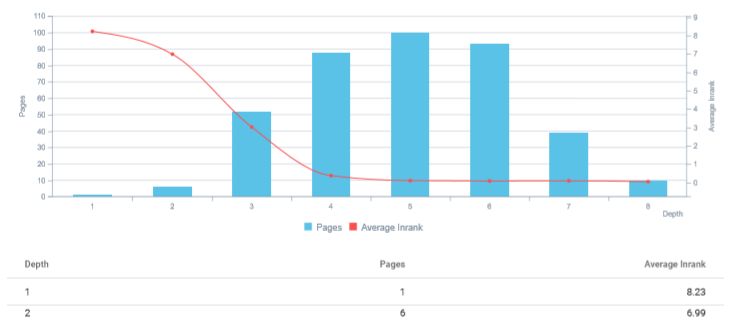

Page Groups by Depth

The Page Groups by Depth section groups pages by segment and analyzes their distance from the Start URL (the URL where you started your SEO crawl). The homepage is the most authoritative and important page of a website. After reading the robots.txt file, Googlebot starts its crawl from the homepage. So URLs that are easy to reach from the homepage are treated as more important. The main categories and main revenue-generating pages should be linked from the homepage.

Below is the click depth (distance from the homepage) for every website segment.

Average Inrank by Depth

Average InRank by Depth shows a ranking signal, similar to “PageRank” or “link juice”, that comes from the internal link structure. The comparison to PageRank or link juice is a loose, subjective one here.

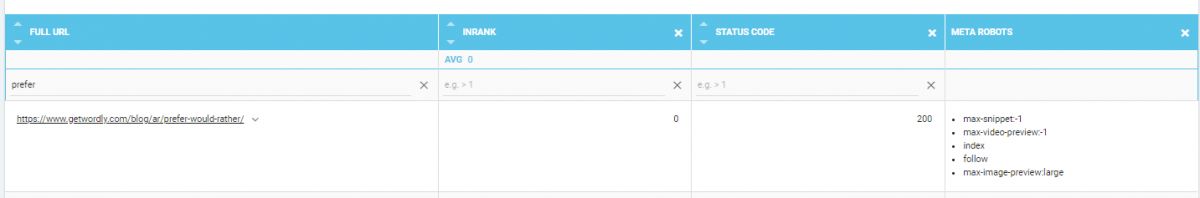

InRank is a metric and formula developed by OnCrawl's engineers to evaluate a website based on its internal link structure. Its purpose is to show how important a URL or page segment is within internal navigation.

I learned that OnCrawl's InRank metric is based on PageRank's original formula. It has been refined over time with data from millions of SERPs and rankings to reflect Google's current understanding of PageRank. Many thanks to Rebecca Berbel for this information. The only similar metric I remember is “Inlink Rank” from SEO PowerSuite, but it isn't based on or adjusted for PageRank. So this is another unique OnCrawl feature.
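InRank itself is proprietary, so the sketch below is not InRank. It is the textbook PageRank algorithm, computed by power iteration over an internal link graph. It is included only to show the kind of calculation a PageRank-based metric starts from. The damping factor of 0.85 and the 50 iterations are conventional assumptions, and the link graph is hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank by power iteration.

    links: {url: [linked urls]}. Pages without outlinks ("dangling")
    spread their rank evenly over all pages, so ranks always sum to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


site = {"/": ["/a", "/b"], "/a": ["/"], "/b": ["/", "/a"], "/c": ["/a"]}
for url, score in sorted(pagerank(site).items(), key=lambda item: -item[1]):
    print(f"{url}: {score:.3f}")
```

In this toy graph, `/c` has no inbound links, so it keeps only the minimum teleport share. The homepage, linked from every other page that has links, ends up with the highest score.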

Below is the average InRank by depth for our example site's pages.

Status Codes

Every URL in the internal link structure should return a 200 status code. URLs that don't should be removed from the website's internal links. Exceptions can exist briefly, for example during A/B tests, but keeping status codes clean is important.

Internal links to 301 URLs and 301 redirect chains lengthen crawl time and make crawling harder for search engine crawlers.

404 URLs should also be removed from internal navigation. Where the content is permanently gone, they should be switched to a 410 status code.

Seeing the scale of these issues across a website is useful for a Technical SEO.
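The status code check can be sketched as follows. It takes an internal link map and the status code of each crawled URL; both input shapes are hypothetical. It tallies link targets by status and lists the links that point to non-200 URLs.

```python
from collections import Counter


def internal_link_status_audit(links, status):
    """Find internal links that point to non-200 URLs.

    links: {source_url: [target_url, ...]}
    status: {url: http_status_code}; uncrawled targets map to None.
    """
    summary = Counter()
    to_fix = []
    for source, targets in links.items():
        for target in targets:
            code = status.get(target)
            summary[code] += 1
            if code != 200:
                to_fix.append((source, target, code))
    return summary, to_fix
```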

Below, you can see our status code profile for our crawl output.

This is also a reminder of OnCrawl's SEOs for Education charity campaign.

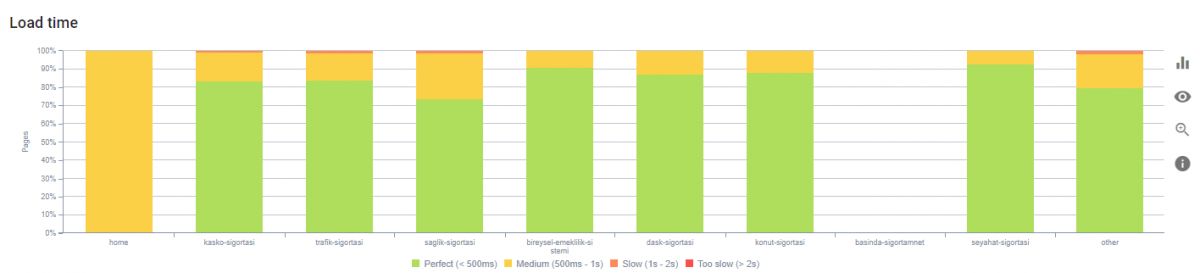

Load Time

Load time is another important SEO metric. It is useful to see how many URLs have problematic load times and what share of all URLs they represent.

With a single click, you can see all slow-loading pages with their internal link profile, InRank, and status code. You can also see the attributes and status codes of the links pointing to them.

Below is the load time information from our example crawl.

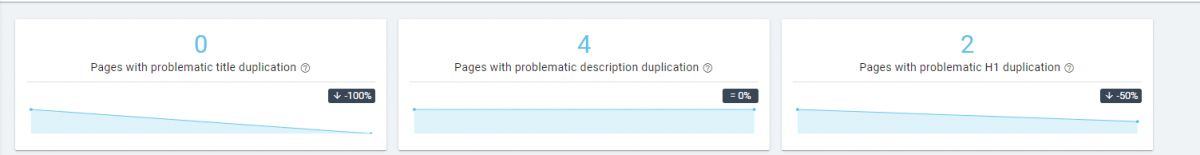

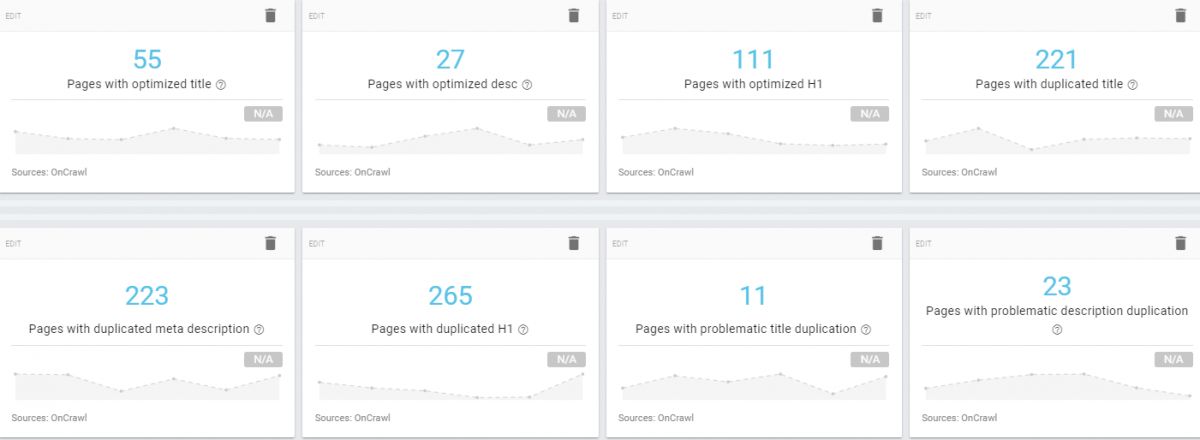

SEO Tag Duplication

In the SEO Tag Duplication section of our SEO crawl report, we can see duplicated SEO meta tags and their share of all URLs.

This section covers the title tag, meta description, and H1 tag. OnCrawl also distinguishes “problematic duplication” from “managed duplication”. Two web pages that declare the same canonical URL can share a title tag, and this is treated as managed duplication. Pages that compete with each other for the same query group with duplicated SEO tags are the truly problematic ones.

Missing and unique title tags are also shown.
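The managed versus problematic distinction can be approximated in Python. This sketch groups pages by normalized title. It treats a group as "managed" when every page in it declares the same canonical URL. The input shape and the lowercase normalization are assumptions, and OnCrawl's exact rules may differ.

```python
from collections import defaultdict


def classify_title_duplicates(pages):
    """pages: {url: {"title": str, "canonical": str}}.

    A duplicate group is "managed" when every page in it declares the same
    canonical URL, otherwise "problematic". Unique titles are skipped.
    """
    groups = defaultdict(list)
    for url, page in pages.items():
        groups[page["title"].strip().lower()].append(url)
    result = {"managed": [], "problematic": []}
    for urls in groups.values():
        if len(urls) < 2:
            continue
        canonicals = {pages[u]["canonical"] for u in urls}
        kind = "managed" if len(canonicals) == 1 else "problematic"
        result[kind].append(sorted(urls))
    return result
```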

Below is the output for our example website.

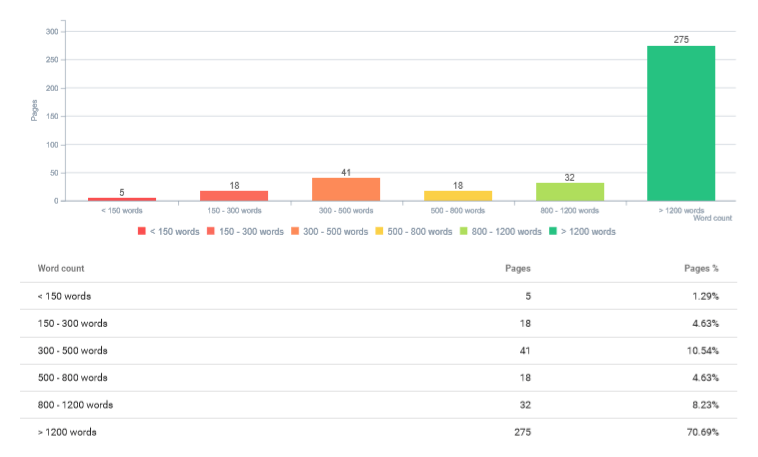

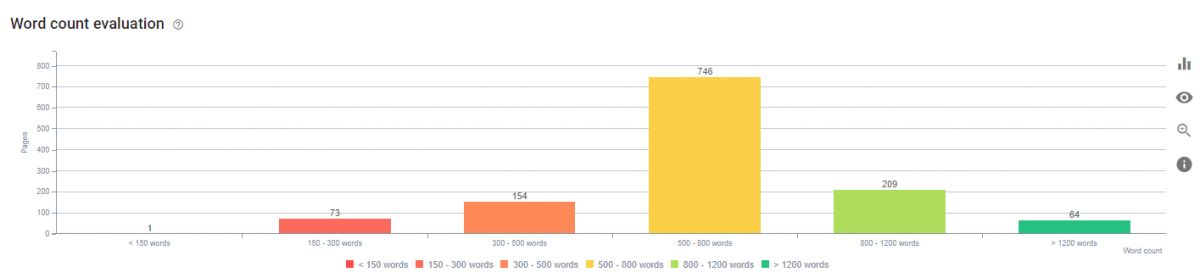

Word Count Evaluation

Word Count Evaluation assesses web pages by how many words they contain, which is useful for detecting thin content. It also works with page segmentation, so you can compare word counts and success rates across website segments. For deeper word analysis, you may want to learn “absolute and weighted word frequency calculation with Python”.
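A thin-content check by segment might look like the sketch below. The 300-word threshold is an arbitrary assumption, since there is no universal "thin" cutoff. The input is assumed to be already-extracted visible text, and `\w+` is only a rough word tokenizer.

```python
import re
from collections import defaultdict

WORD = re.compile(r"\w+")  # rough tokenizer: runs of letters, digits, underscores


def thin_pages_by_segment(pages, threshold=300):
    """pages: {url: (segment, visible_text)}. Returns {segment: [thin urls]}."""
    thin = defaultdict(list)
    for url, (segment, text) in pages.items():
        if len(WORD.findall(text)) < threshold:
            thin[segment].append(url)
    return dict(thin)
```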

Duplicate Content Detection

Problematic Duplicate Content Per Page and Site-wide Duplicate Content Types are two separate metrics in OnCrawl's SEO crawl reports. They detect web pages that are duplicated by their content or by their SEO-related HTML tags. They also show what share of all URLs these pages represent.
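OnCrawl doesn't spell out its duplicate detection algorithm here. The sketch below therefore swaps in a common, generic technique: word shingles compared with Jaccard similarity. The shingle size of 3 and the 0.8 threshold are assumptions. Comparing every pair is quadratic, so large sites usually need approaches such as MinHash instead.

```python
def shingles(text, size=3):
    """Set of overlapping word n-grams (a text shorter than size gives one shingle)."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(max(len(words) - size + 1, 1))}


def jaccard(a, b):
    """Share of shingles two sets have in common (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, size=3):
    """Return (url_a, url_b, score) for every pair at or above the threshold."""
    sets = {url: shingles(text, size) for url, text in pages.items()}
    urls = sorted(sets)
    pairs = []
    for i, u in enumerate(urls):
        for v in urls[i + 1:]:
            score = jaccard(sets[u], sets[v])
            if score >= threshold:
                pairs.append((u, v, round(score, 2)))
    return pairs
```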

Indexability Section of SEO Reports on OnCrawl

Indexability, crawlability, and render cost are the key terms of Technical SEO. If a page is not Googlebot-friendly, it may not be rendered. If it is not crawlable, it may not be indexed. If there are wrong or mixed indexing signals, it may get indexed when it shouldn't be.

We have five main metrics for indexability.

These are Indexable Pages, Indexable Canonical Pages, Non-indexable pages by meta robots, Non-indexable pages by robots.txt, Non-canonical pages.

Above, you can see figures such as “-3%” or “-4%” in the right corner of the information boxes. They show how much the “Indexable Pages” or “Indexable Canonical Pages” count has changed since the last crawl. At this point I had re-run our crawl, so OnCrawl shows the change since the previous crawl of the example website.

Pages by state of Indexation

The Pages by State of Indexation section shows the number and status of indexable and non-indexable pages in an interactive chart.

This chart also shows pages with 404 and 301 status codes. Clicking one of the bars takes you to the tool's Data Explorer section, as below. As mentioned before, Data Explorer is a unique, tool-wide feature that lets you drill into any combination of data.

Indexability Breakdown of Pages

As mentioned earlier, OnCrawl segments all pages by category and shared characteristics. Indexability Breakdown of Pages shows the indexability status distribution of the pages in each segment. You can see an example below.

You can see the indexability status of every URL segment and site section. The same chart exists for “noindex” pages and “non-canonical” pages. This lets you identify a website's main indexable content groups and check the non-indexable pages in any section, as below.

Clicking any part of these stacked bars shows the URLs OnCrawl has filtered for you. Data Explorer, filtering data with logical operators, and OnCrawl's unique page segmentation will be covered in detail later.

In this example, most of these URLs return a 301 status code, but they can still be discovered through the internal links the crawler follows.

Rel Alternate URLs in a Web Site

As far as I know, Rel Alternate URL extraction and comparison is another metric unique to OnCrawl. Most websites don’t pay as much attention to their “rel” attributes as they should. For instance, you may see many inaccurate “rel alternate” attributes on a single URL. This creates a “mixed signal” for search engine crawlers, since “rel alternate” declares an alternative version of this URL. You will see an example below.

In this example, a Turkish news site declares many inaccurate “alternate” video URLs with “RSS” type attributes for its news articles. This information in the source code is simply not correct.

OnCrawl can extract these “rel=’alternate’” URLs and also can segment and categorize them.
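As a rough illustration of what such an extraction involves, this Python sketch uses the standard library’s `html.parser` to collect `<link>` elements by `rel` value and to bucket `rel="alternate"` links by whether they carry `hreflang` or a MIME `type` (like the RSS-type links in the news-site example). The helper names and bucket labels are hypothetical; OnCrawl’s own extractor is not public.

```python
from html.parser import HTMLParser

# Hypothetical set of rel values this sketch tracks.
WATCHED_RELS = {"canonical", "alternate", "prev", "next"}


class RelLinkExtractor(HTMLParser):
    """Collects <link> elements whose rel value is one we care about."""

    def __init__(self):
        super().__init__()
        self.links = []  # (rel, href, hreflang, type) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        a = dict(attrs)
        # rel may hold several space-separated values, e.g. rel="alternate nofollow".
        for rel in (a.get("rel") or "").lower().split():
            if rel in WATCHED_RELS:
                self.links.append((rel, a.get("href"), a.get("hreflang"), a.get("type")))


def extract_rel_links(html: str) -> list:
    parser = RelLinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


def categorize(link) -> str:
    """Bucket rel="alternate" links by what distinguishes them (assumed labels)."""
    rel, _href, hreflang, mime = link
    if rel != "alternate":
        return rel
    if hreflang:
        return "alternate:hreflang"
    if mime:
        return f"alternate:{mime}"  # e.g. RSS feeds declared with a MIME type
    return "alternate:other"
```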

We have “matching” and “non-matching” values for our “rel=’alternate’” links, showing whether the canonical URLs and the “rel=’alternate’” URLs match. We also see that our “rel=’alternate’” values have not changed since our last crawl.

We have metrics below for our “rel=’alternate’” section.

- Pages with canonical: Shows the canonical URLs that are consistent with the “rel=’alternate’” URLs.

- Pages linked in pagination (prev / next): shows the URLs with “link rel=’prev’” or “link rel=’next’” URLs.

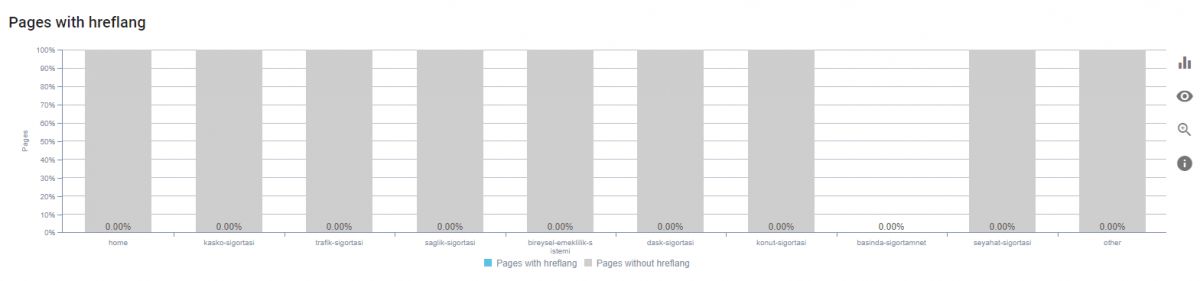

- Pages with hreflang: shows the URLs in hreflang values.

- Non-indexable pages declared as hreflang: Since hreflang URLs also act as a canonicalization signal, their indexing status is important.

- Pages with missing hreflang declarations: Shows the missing or non-mutual hreflang declarations.

- Non-canonical pages: Shows the non-canonical pages that “rel=’alternate’” URLs point to.

- Content duplicated pages with canonical issue: Shows the URLs with content duplication problems, along with the “rel=’alternate’” URLs that point to them.

Canonical Evaluation

This section shows the canonical and “rel=’alternate’” attribute profile of the URLs for each segment of the website, as below.

We see that our “other” section has lots of URLs that don’t match their own canonical URLs. You may see them below as an example.

We also have special filters and dimensions thanks to OnCrawl’s highly customizable Data Explorer section.

Pages Linked in Pagination (next / prev)

This section shows the rel=’next’ and rel=’prev’ URLs in the source code of the pages. Google doesn’t use rel=’next’ and rel=’prev’, but Bing and Yandex use them to follow paginated URLs and cluster them together. So, if you approach SEO holistically, this information still matters.

Below, you may see how OnCrawl extracts and visualizes this data for you.

In the example above, there is no actual count, since our example website doesn’t use “link rel next/prev”. Google stopped using these pagination attributes because most websites implemented them incorrectly, and they realized they were spending more resources because of this attribute.

Hreflang URLs and Their Data Visualization in OnCrawl

Hreflang tags are used to symmetrically declare the different geographic and language versions of the same content so that search engines can cluster and evaluate these versions together. They also make it easier for search engines to find the site sections targeted at other regions and languages.

OnCrawl has the metrics below for Hreflang Issues that matter for International SEO Projects.

- Missing declarations: Missing hreflang tags for multi-language and multi-regional contents.

- Missing self declaration: The page has no hreflang tag pointing to itself.

- Duplicated hreflang declarations: Repeatedly used hreflang tags for the same URL.

- Conflicting x-default declarations: Conflicting declarations for the default version of the same content.

- Incorrect language code: Language codes that don’t conform to the ISO 639-1 format.

- Pages with too many hreflang: Usually the page is too large or the hreflang URLs are irrelevant.

- Pages are part of a cluster containing pages declared as hreflang that couldn’t be found in the crawl: Non-existent or unfound URLs in the hreflang attributes. This happens, for example, if you use subdomains for different languages: if you crawl only one subdomain, OnCrawl will report that the specified hreflang URLs were not found, because the crawl configuration prevents the other subdomains from being discovered.

OnCrawl shows the hreflang URLs and their errors as below.

Also, the URLs with these errors and non-indexable hreflang URLs can be found easily in the same section.
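The hreflang checks listed above can be approximated with a few set operations. This Python sketch assumes a hypothetical crawl export mapping each URL to the `(hreflang, href)` pairs it declares; it reads “duplicated” as the same language code declared twice, and its language-code check only validates the shape of the code. It is not OnCrawl’s implementation.

```python
import re
from collections import Counter

# Shape check only: "ll" or "ll-RR" (ISO 639-1 language plus optional
# ISO 3166-1 alpha-2 region) or "x-default". It does not confirm that the
# codes exist, and it rejects script subtags such as "zh-Hant".
LANG_RE = re.compile(r"^(?:[a-z]{2}(?:-[a-z]{2})?|x-default)$", re.IGNORECASE)


def hreflang_issues(annotations):
    """annotations: hypothetical map of crawled URL -> list of (hreflang, href)."""
    issues = {name: set() for name in (
        "missing_self", "missing_return", "duplicated",
        "conflicting_x_default", "bad_code", "not_in_crawl")}
    for url, pairs in annotations.items():
        codes = [code.lower() for code, _ in pairs]
        hrefs = [href for _, href in pairs]
        if pairs and url not in hrefs:
            issues["missing_self"].add(url)
        # Assumption: "duplicated" = the same hreflang code declared twice.
        if any(count > 1 for count in Counter(codes).values()):
            issues["duplicated"].add(url)
        x_default = {href for code, href in pairs if code.lower() == "x-default"}
        if len(x_default) > 1:
            issues["conflicting_x_default"].add(url)
        if not all(LANG_RE.match(code) for code in codes):
            issues["bad_code"].add(url)
        for href in set(hrefs) - {url}:
            if href not in annotations:
                # Not crawled: e.g. another language subdomain outside the crawl.
                issues["not_in_crawl"].add(url)
            elif url not in {h for _, h in annotations[href]}:
                # Hreflang must be reciprocal: the target has to link back.
                issues["missing_return"].add(url)
    return issues
```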

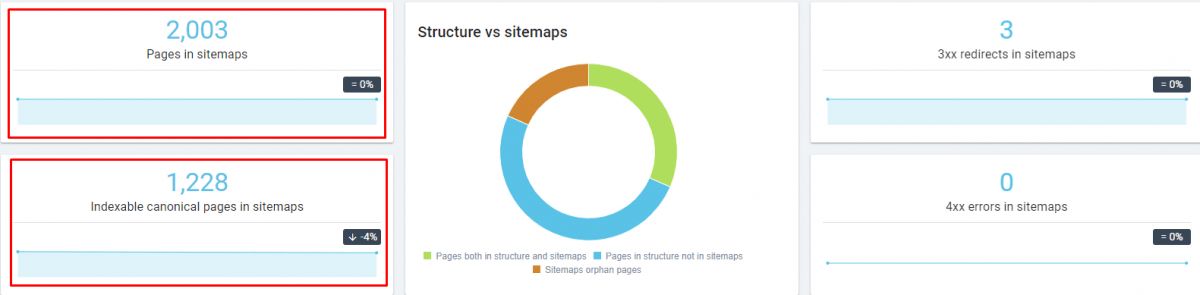

Sitemaps Section of the OnCrawl’s Crawl Output

Sitemaps are one of the basic elements of Technical SEO. Every URL in a sitemap should be a canonical URL that returns a 200 status code and is consistent with the “hreflang” and other “alternate” URLs. These URLs should be indexable, and they shouldn’t cannibalize each other through overly similar or duplicate content.

Above, we see that we have 2,003 URLs in our sitemap, but only 1,228 of them are actually indexable. We also have 3 redirected URLs in our sitemaps. We can also evaluate the URLs in terms of canonicalization and indexability with the interactive visualization from OnCrawl’s crawl reports, as below.
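A sitemap audit like this boils down to joining the sitemap’s `<loc>` values with crawl data. The sketch below parses a standard `<urlset>` sitemap with Python’s `xml.etree.ElementTree` and checks each URL against a hypothetical crawl export of `(status, indexable, canonical)` tuples; the report keys are made up for the example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list:
    """Return the <loc> values of a standard XML sitemap (<urlset>)."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def audit_sitemap(urls, crawl) -> dict:
    """crawl: hypothetical export mapping URL -> (status, indexable, canonical)."""
    report = {"redirected": [], "not_indexable": [], "non_canonical": [], "not_crawled": []}
    for url in urls:
        if url not in crawl:
            report["not_crawled"].append(url)
            continue
        status, indexable, canonical = crawl[url]
        if 300 <= status < 400:
            report["redirected"].append(url)
        elif not indexable:
            report["not_indexable"].append(url)
        elif canonical and canonical != url:
            # Sitemaps should list canonical URLs only.
            report["non_canonical"].append(url)
    return report
```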

Sitemap Types

There are different types of sitemaps, such as image sitemaps, video sitemaps, news sitemaps, and standard XML sitemaps. OnCrawl can differentiate these sitemap types and show their status as below.

Since we don’t have any of these sitemap types for our current crawl, the numbers are 0. The “= 0%” sections show the change amount between different crawl reports from the same crawl profile.

Sitemap and Structured Data

Structured data is one of the best ways to communicate the purpose of a web page and how each of its parts contributes to the page as a whole. Structured data on the URLs in the sitemap can show their purpose more clearly. OnCrawl can detect these structured data features on the URLs in the sitemaps and show them to you.

Since we don’t have any video sitemap or news sitemap, the numbers are 0.

Pages in Sitemaps

This section is also important: it shows the gaps in the sitemaps, that is, how many pages from each segment are missing from the sitemap. You may see the interactive visualization below.

We see that more than half of the website’s pages are not in the sitemaps. You can also correlate this information with log files and Google Search Console data to see whether being in the sitemap is connected to the URLs’ performance.

Sitemaps orphan pages

This section shows the web pages that are in the sitemap but not in the internal website navigation. If you don’t create a proper internal link structure that reflects the importance of your site sections and pages, Google may ignore orphaned pages even if they are in the sitemap. “Signal collection” is the process by which a search engine gathers signals to understand a website, and the number of internal links is one of those signals.

So, if you tell Google to “index these pages” by putting them in the sitemap but don’t link to them enough, Google will conclude that these pages are not important, even though they are in the sitemap. We call this a “mixed signal”. Always send search engines “meaningful” and “consistent” signals.

Thus, you can see which web pages lack the internal links they need to be crawled, evaluated, and discovered by search engine crawlers.
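Both the “Pages in Sitemaps” gaps and sitemap orphan pages are, at their core, set differences between sitemap URLs and URLs reachable through internal links. In this Python sketch, the `top_folder` segmentation rule is a hypothetical stand-in for OnCrawl’s page groups; in practice you would probably restrict `linked` to indexable pages.

```python
from collections import Counter
from urllib.parse import urlparse


def top_folder(url: str) -> str:
    """Hypothetical segmentation rule: the first directory of the URL path."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    return parts[0] if parts else "homepage"


def sitemap_gaps(in_sitemap: set, linked: set):
    """Compare sitemap URLs with the URLs reachable through internal links."""
    orphans = in_sitemap - linked   # listed in the sitemap, never linked internally
    missing = linked - in_sitemap   # linked internally, absent from the sitemap
    missing_by_segment = Counter(top_folder(u) for u in missing)
    return orphans, missing, missing_by_segment
```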

Pages in sitemaps by state of indexation

This section shows the URLs’ indexability situation in the sitemap. If a URL is not indexable, it shouldn’t be in the sitemap. By clicking any of these bars, you may see the specified vertical and its URLs to clean and increase the effectiveness of the sitemap.

Status Codes Report of the OnCrawl

Status codes show how the URLs respond to the crawler. If, during the crawl, a crawler encounters more 301, 404, 410, 302, or 307 status codes than 200 responses, it might be a problematic situation. Imagine you run a search engine and want to crawl a website to find useful content for users’ queries, but to do so, you have to put up with lots of unnecessary URLs and waste your crawling resources. Would you keep trying to understand that website?

That’s why a clean status code structure is important in a website. Let’s examine our example website that lost the 6 May Core Algorithm update for its status codes.

We see that it has more non-200 URLs than 200 URLs in its internal navigation: 179 4xx pages along with 1,569 3xx pages. Such a response code distribution creates problems for crawl efficiency.

OnCrawl also categorizes the 3xx URLs with the following criteria:

- Pages with single 3xx redirect to final target

- Pages in 3xx chains

- Pages in 3xx chains with too many redirects

- Pages inside a 3xx loop

- Pages in crawl that are 3xx final targets

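Googlebot follows only a limited number of redirect hops before giving up (Google’s documentation currently cites up to 10), and a redirect loop never resolves at all. OnCrawl also reports the number of follow and nofollow links pointing to the pages in each of these categories. You may see them below.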

- Our example website has 1,279 single redirects, and these URLs are linked 17,191 times from our web pages.

- We have internal nofollow links pointing to these redirect chains.

- We have 10 redirect loops, and we link to these loops 170 times.

- We have 323 pages that are final targets of 301 redirects, with 48,784 internal links.

- We have 280 pages in redirect chains, with 578 followed internal links.

- We have no redirect chains with “too many” redirects.

By clicking any of these numbers, you may see the URLs with their own internal link structure and other SEO-related features.
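The redirect categories above can be reproduced by following each redirect until it reaches a non-redirecting URL or revisits one. This Python sketch assumes a dict mapping each 3xx URL to its `Location` target; the `too_many=5` threshold is an arbitrary assumption, since the text does not state OnCrawl’s cutoff. Incidentally, the figures above appear to be mutually exclusive: 1,279 single redirects + 280 pages in chains + 10 loops = 1,569, the 3xx total reported earlier.

```python
def classify_redirect(start: str, redirects: dict, too_many: int = 5) -> str:
    """Follow redirects from start.

    redirects: hypothetical map of each 3xx URL -> its Location target.
    too_many: assumed cutoff for "too many redirects" (not OnCrawl's value).
    """
    path = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in path:
            # We came back to a URL already on this path: it never resolves.
            return "loop"
        path.append(current)
    hops = len(path) - 1
    if hops == 0:
        return "not a redirect"
    if hops == 1:
        return "single redirect"
    return "too many redirects" if hops > too_many else "chain"
```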

Target status of 3xx pages

This section shows an interactive visualization of our 301 Redirection Chains and their targeted URLs.

Status Codes Breakdown According to the Site Segmentation

This section shows the status code distribution for the website’s different segmentations. You may see an example below.

In this example, we have completely redirected the category for the “press” section. We also have a 404 pages cluster in our “Car Accident Insurance” category. Most of the “health insurance” section is being redirected to somewhere else.

This picture means that some sections of this website are harder to crawl than others, and crawl health varies across these sections.

Status Codes Breakdown According to the Distance from Homepage

This section shows the status code distribution according to the click depth. Thus, you may understand the problematic sections of a website in a better way.

Looking at depth, our website has more problematic status codes from the “second depth” on, peaking at the fifth level. Also, pages from the fourth level onward tend to be crawled by Googlebot about half as often.
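Click depth is usually computed with a breadth-first search from the start URL, since BFS reaches every page first through its shortest click path. This Python sketch does that on a hypothetical link dictionary and then counts status codes per depth. It counts the homepage as depth 1 (`start_depth=1`), which appears to match the article’s “second depth” wording, and it does not model details such as skipping links on redirected pages.

```python
from collections import Counter, defaultdict, deque


def click_depths(links: dict, home: str, start_depth: int = 1) -> dict:
    """Breadth-first search from the homepage; depth = fewest clicks to reach a page.

    links: hypothetical map of page -> list of internally linked pages.
    start_depth: assumed depth of the homepage (1 here).
    """
    depth = {home: start_depth}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS order is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def status_by_depth(depth: dict, status: dict) -> dict:
    """Count HTTP status codes at each depth."""
    table = defaultdict(Counter)
    for url, d in depth.items():
        table[d][status.get(url, "unknown")] += 1
    return dict(table)
```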

Link Flow and Internal Link Structure in OnCrawl’s Crawl Reports

This section is about the internal and external link profile of the crawled website, and it has some of the most unique and insightful graphs in the industry. OnCrawl uses InRank, a metric it developed itself, to show the popularity of a URL within the website’s internal structure.

Below, you will see the Average InRank distribution for a website according to the depth.

We see that we have 286 pages at the second depth, with an average InRank of 2.86. This means we can use the second depth for the special pages with organic revenue potential. At the fourth depth, we have 329 pages with an InRank of 0.89. You may compare these values with your competitors’ and correlate them with search rankings to find the best balance.
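OnCrawl does not publish InRank’s exact formula; the text only describes it as an internal popularity score on a 0-10 scale. As a rough stand-in, this Python sketch runs classic PageRank power iteration over an internal link graph and then min-max scales the scores to 0-10. Both the damping factor of 0.85 and the linear scaling are assumptions, so treat the numbers as relative, not as InRank values.

```python
def internal_pagerank(links: dict, damping: float = 0.85, iterations: int = 50) -> dict:
    """Classic PageRank power iteration over a hypothetical internal link graph."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pages without outlinks ("dangling") spread their score evenly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            for target in targets:
                new[target] += damping * rank[page] / len(targets)
        rank = new
    return rank


def scale_0_10(rank: dict) -> dict:
    """Min-max scale scores to 0-10 (an assumed scaling; InRank's is not public)."""
    lo, hi = min(rank.values()), max(rank.values())
    if hi == lo:
        return {p: 10.0 for p in rank}
    return {p: round(10 * (r - lo) / (hi - lo), 2) for p, r in rank.items()}
```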

Page Groups by Depth

This section shows the distribution of internal page groups according to their distance from the homepage, a.k.a. click depth.

We see that most of the second-depth URLs belong to the “other” section, which consists of blog posts. It would be better to use the second depth for product and service pages with actual conversion value, and to collect all informational content under a “blog” section. That way, InRank and page segmentation can easily distinguish transactional pages from informational ones.

InRank Distribution

In this section, we can see the InRank distribution from 0 to 10 for different page segments. Let’s start.

We see that the “other” section is as important as the homepage. Also, the pages with an InRank of 9 consist solely of “other” URLs. This means the pages are not segmented according to their purpose and users’ intent, and their URLs and internal link structure are not organized well enough. The “other” segment can also appear if you specify multiple Start URLs for your SEO crawl, each with few pages spread over many subfolders: since these groups are too small to form segments of their own, they are grouped as “other”.
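To see how an “other” bucket can arise, here is a minimal Python sketch of folder-based segmentation with a minimum group size. The rule itself (first directory, `min_size=3`, flat URLs sent to “other”) is a hypothetical example, not OnCrawl’s default; it shows why flat blog URLs such as `/what-is-casco` and tiny folders can end up grouped together.

```python
from collections import Counter
from urllib.parse import urlparse


def first_folder(url: str):
    """First directory of the path, or None for flat URLs such as /what-is-casco."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    return parts[0] if len(parts) > 1 else None


def segment_urls(urls, min_size: int = 3) -> dict:
    """Hypothetical rule: group by first folder; small or folder-less groups -> "other"."""
    folders = {u: first_folder(u) for u in urls}
    sizes = Counter(f for f in folders.values() if f)
    segments = {}
    for url, folder in folders.items():
        if urlparse(url).path in ("", "/"):
            segments[url] = "homepage"
        elif folder and sizes[folder] >= min_size:
            segments[url] = folder
        else:
            segments[url] = "other"
    return segments
```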

InRank Flow

This chart is one of the best ways to show internal link popularity according to the direction of the links, that is, from which segment to which segment.

It shows that most of our internal links go from the “other” URL segment to the “other” URL segment, which makes the site harder for search engines to understand. Also, the most valuable pages in terms of conversion and organic traffic value don’t get enough links to be considered important by search engine crawlers.

Rebecca Berbel from OnCrawl kindly taught me another nice feature of the InRank Flow chart: you can see how InRank would be distributed if a particular segment of the website did not exist. So, with InRank and internal link popularity metrics, you have the chance to better consolidate your website’s ranking power.

Average InRank by Depth

This section shows the average InRank values for the different page groups from the different click depths. In this example, we see that the most valuable pages in terms of transactional value are “Traffic Insurance” and “Health Insurance”. It might seem correct at the beginning of our analysis. But if you click one of these dots, you will see the information below.

We see that the pages with the most InRank are actually blog content pages instead of the service pages with real organic traffic value. For example, the “Traffic Penalties” content has more InRank than its parent “Traffic Insurance” pages. To fix this situation, you can use OnCrawl’s Data Explorer as I did.

Average Link Facts for a Website in OnCrawl’s Reports

From the same section, we can also see average values for the links on a website, such as their status codes, average links per page, average external links per page, and average followed links, broken down by page segment or click depth. This information may help you optimize your website for better PageRank distribution, user-friendly navigation, and a more semantic, crawlable, and meaningful site tree.

We see that since our last crawl, our average number of external nofollow links has increased by more than 140%. We have 2.34 external nofollow links and 31 internal links per page. Google has at times said that 100 to 150 links on a page can be acceptable, but also that beyond a certain point, Googlebot may stop crawling and evaluating the links on pages with an excessive number of links.

Thus, with OnCrawl you can see the industry norms for average link metrics, and you can optimize your website’s internal and external link structure more sensibly while auditing it.
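The per-page averages in this section are simple ratios: total links of each kind divided by the number of crawled pages. This Python sketch assumes a hypothetical list of `(source, target, nofollow)` tuples and treats any other hostname, including other subdomains, as external.

```python
from collections import Counter
from urllib.parse import urlparse


def average_links_per_page(pages, links, site_host: str) -> dict:
    """links: hypothetical (source, target, nofollow) tuples from the crawled pages.

    Any hostname other than site_host (other subdomains included) counts as external.
    """
    totals = Counter()
    for _source, target, nofollow in links:
        kind = "internal" if urlparse(target).hostname == site_host else "external"
        totals[f"{kind} {'nofollow' if nofollow else 'follow'}"] += 1
    keys = ("internal follow", "internal nofollow", "external follow", "external nofollow")
    return {k: totals[k] / len(pages) for k in keys}
```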

Links Status Code Breakdown for a Web Site

With OnCrawl, we can see the status code breakdown of links along with the status codes of their target URLs in the same report section.

Links Insights

Complementing the previous section, we can see some key facts about the links on a website:

- Pages with only 1 follow link

- Pages with less than 10 follow links

- Total number of follow links

- And their interactive visualization

In the breakdown, we can see the external nofollow links, internal nofollow links, and more.
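The link insights above reduce to counting followed inlinks per page. This Python sketch reads “pages with only 1 follow link” as pages receiving a single followed internal link, which is an assumption about what the metric counts; the `(source, target, nofollow)` tuples are hypothetical crawl data.

```python
from collections import Counter


def follow_inlink_insights(pages, links) -> dict:
    """links: hypothetical (source, target, nofollow) tuples for internal links.

    Assumes the metrics count incoming followed links per page.
    """
    inlinks = Counter(target for _source, target, nofollow in links if not nofollow)
    counts = {page: inlinks.get(page, 0) for page in pages}
    return {
        "pages_with_only_1_follow_link": sorted(p for p, c in counts.items() if c == 1),
        "pages_with_less_than_10_follow_links": sorted(p for p, c in counts.items() if c < 10),
        "total_follow_links": sum(inlinks.values()),
    }
```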

Links Flow

In this section, we can see the actual number of links and their target URL segments. The previous chart showed the InRank flow; link flow and InRank flow shouldn’t be confused with each other.

We see that most of the links point to the “other” section from the minor product and service pages. Since the important sections of this website are actually its products and services, linking excessively to informational blog posts can harm the website’s categorization and profiling: it might be seen as an informational source rather than an actual brand.

This might be why they lost in the 6 May Core Algorithm Update, since they lost traffic mainly on their product and service pages; it fills in the blanks of the SEO puzzle.
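Link flow is essentially a segment-to-segment link count. This Python sketch builds that matrix from hypothetical `(source, target)` pairs and a URL-to-segment mapping, and adds an InRank-style variant in which each page splits a score evenly across its outlinks. That weighting is one plausible way to model the flow, not OnCrawl’s documented method.

```python
from collections import Counter, defaultdict


def link_flow(links, segment_of: dict) -> Counter:
    """Count internal links for each (source segment, target segment) pair."""
    return Counter((segment_of[s], segment_of[t]) for s, t in links)


def weighted_flow(links, segment_of: dict, score: dict) -> dict:
    """Assumed InRank-style variant: each page splits its score evenly across its outlinks."""
    outdegree = Counter(s for s, _ in links)
    flow = defaultdict(float)
    for s, t in links:
        flow[(segment_of[s], segment_of[t])] += score[s] / outdegree[s]
    return dict(flow)
```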

Average Followed Internal Links by Groups and Depth

This section shows the Average Internal Followed Links according to their web pages’ click depth and page groups.

The homepage receives 816.75 followed internal links on average, while pages in the “other” section receive 66.37 on average. The transactional pages with organic traffic value receive only between 6.81 and 12.12 internal links on average, and most of these links don’t target the truly important pages of these groups.

Don’t you think it clearly shows what the problem is?

Average Followed Outlinks for Every Web Page

This section shows the average number of followed outgoing links per page for every page group.

We have the results below (average followed outgoing internal links per page):

- Homepage: 94

- Traffic Accident Insurance: 32.50

- Traffic Insurance: 25.34

- Health Insurance: 14.91

- Personal Pension: 25.34

- Earthquake Insurance: 25.21

- House Insurance: 33.15

- Travel Insurance: 67.15

- Other: 34.78

I will not write a single mathematical calculation here, but very simply, we see that the most important page groups get 5 or 6 links on average, while the less important ones get 30 to 67 links on average.

The “Other” section, which is less important and to some extent keeps the click depth data from being semantically meaningful to search engines, receives two links for every link it sends to another website section. I leave both graphs below for a better understanding of the situation.

It is now even clearer.

HTML Tags in the OnCrawl’s SEO Reports

The HTML Tags report covers the SEO-related code snippets in the pages’ source code. OnCrawl’s main focus in these reports is duplication, missing tags, and their distribution across the website. These are especially common problems for old blogs with thousands of posts or e-commerce sites with very similar products.

We can see duplicate title tags, description tags, and H1 tags, along with their percentage change since the latest crawl. This section shows duplication problems and their types with interactive visuals for every vertical.

“Managed with hreflangs”, “managed with canonicals”, “no management strategy”, “hreflang errors”, “all duplicate content” sections are the other options here. Seeing all the On-Page SEO Elements’ duplication problems according to their page segments is possible.

We can also see the distribution of “too long”, “perfectly long”, and “too short” meta tags in an interactive way.
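Detecting duplicate tags mostly means normalizing their text and grouping URLs by it, and the length buckets are simple thresholds. In this Python sketch, the normalization (case and whitespace) and the 30/60-character title thresholds are assumptions; OnCrawl’s own rules and limits may differ.

```python
from collections import defaultdict


def duplicate_groups(tags: dict) -> dict:
    """tags maps URL -> title (or description/H1); returns groups sharing a value."""
    groups = defaultdict(list)
    for url, text in tags.items():
        key = " ".join(text.split()).casefold()  # assumed: ignore case and extra whitespace
        if key:  # empty values are "missing", not duplicates
            groups[key].append(url)
    return {k: sorted(v) for k, v in groups.items() if len(v) > 1}


def length_bucket(text: str, min_len: int = 30, max_len: int = 60) -> str:
    """Character-length bucket; the 30/60 defaults are assumed, not OnCrawl's."""
    n = len(text.strip())
    if n < min_len:
        return "too short"
    if n > max_len:
        return "too long"
    return "good length"
```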

The number of H1 and H2 tags per page can also be visualized. This information is important because it shows how well the pages follow HTML rules and how granular the information they give users is. OnCrawl reports show the average number of each heading tag across the whole website.

We see that we have three H2 tags per page on average; you may use this information and methodology to understand industry norms.

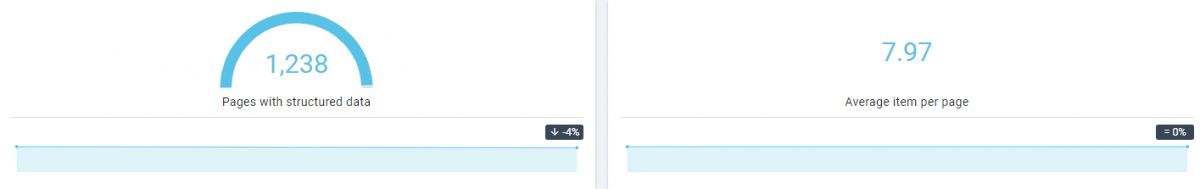

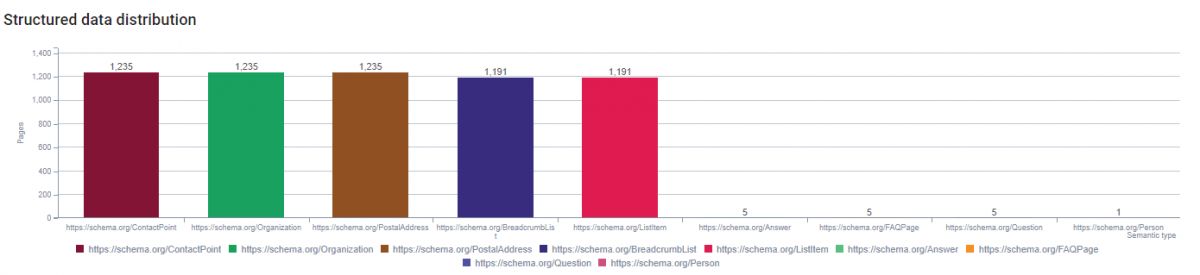

Structured Data Reports in OnCrawl’s Reports

OnCrawl also extracts the Structured Data from the web pages and visualizes them according to their amount and type through page segments and click depth. We will have the metrics below for the Structured Data report.

- Page Amount with Structured Data

- Average Item per page

- Structured Data Distribution by Type

- Pages with Structured Data According to the Site Sections

We see that most of our website’s pages with a 200 status code have structured data, with an average of 7.97 items per page. Below, you will see the Structured Data Distribution by type.

Also, we can see the Structured Data’s distribution by page segments.

You may use OnCrawl’s SEO Insights to identify incorrect structured data usage and to examine the structured data profile per page and per website segment. We see that all of the page segments have structured data at 100%, except the “press” section, which is completely redirected to the main category page.
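For JSON-LD, counting structured data items by type can be sketched with Python’s standard library: collect `<script type="application/ld+json">` blocks, parse them, and walk every nested object for `@type`. This sketch ignores Microdata and RDFa, counts nested items (such as a `Question` inside an `FAQPage`) separately, and is only an approximation of what OnCrawl reports.

```python
import json
from collections import Counter
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def _types(node):
    """Yield every @type found in a parsed JSON-LD tree, including nested items."""
    if isinstance(node, list):
        for item in node:
            yield from _types(item)
    elif isinstance(node, dict):
        t = node.get("@type")
        if isinstance(t, str):
            yield t
        elif isinstance(t, list):
            yield from (x for x in t if isinstance(x, str))
        for value in node.values():
            yield from _types(value)


def structured_data_types(html: str) -> Counter:
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    counts = Counter()
    for block in parser.blocks:
        try:
            counts.update(_types(json.loads(block)))
        except json.JSONDecodeError:
            counts["invalid JSON-LD"] += 1  # hypothetical label for broken blocks
    return counts
```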

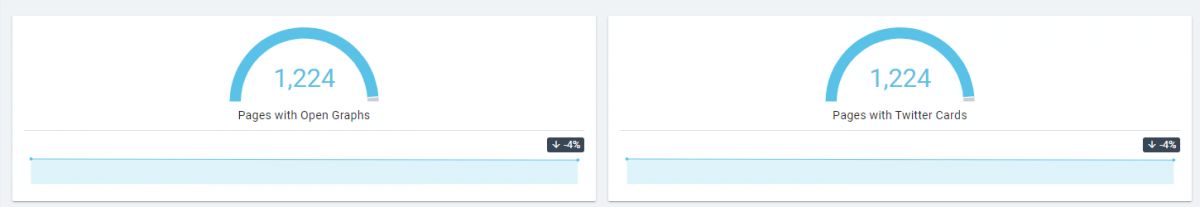

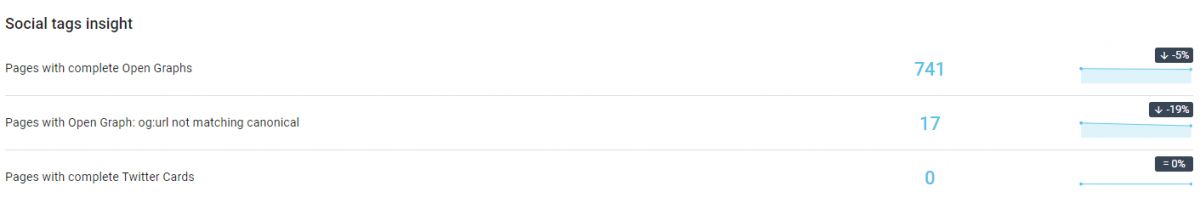

Social Tags in OnCrawl Reports

OnCrawl also extracts data for social tags such as Open Graph and Twitter Cards. These tags are used by Facebook and Twitter crawlers so that posts are displayed correctly on social sharing platforms. Googlebot may also check whether the general information on the web page and the information in the social tags are consistent.

We see that all of the indexable pages have both Twitter Cards and Open Graph tags.

The “Pages with Open Graph: og:URL not matching canonical” section is an important Technical SEO detail here; as far as I know, it might be a unique feature in the industry. You can also see which Open Graph properties are missing, as below.

You also can check the Open Graph Types and optional metadata usage as below.

Checking the Open Graph Data Usage by page segments is also possible.

All of this information can be found also for the Twitter Cards and their own types via OnCrawl’s report methodology.
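The og:url-versus-canonical check and the missing-property check can be sketched as follows in Python. The list of required properties (`og:title`, `og:type`, `og:image`, `og:url`) comes from the Open Graph protocol’s basic metadata; the issue labels and the decision to accept either `property` or `name` attributes are assumptions for the example.

```python
from html.parser import HTMLParser

# The four properties the Open Graph protocol lists as required for every page.
REQUIRED_OG = ("og:title", "og:type", "og:image", "og:url")


class SocialTagExtractor(HTMLParser):
    def __init__(self):
        super().__init__()
        self.og, self.twitter, self.canonical = {}, {}, None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta":
            # Assumption: accept either property= or name= for both tag families.
            key = (a.get("property") or a.get("name") or "").lower()
            if key.startswith("og:"):
                self.og[key] = a.get("content")
            elif key.startswith("twitter:"):
                self.twitter[key] = a.get("content")
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonical = a.get("href")


def social_tag_issues(html: str) -> list:
    """Return hypothetical issue labels for one page's social tags."""
    p = SocialTagExtractor()
    p.feed(html)
    p.close()
    issues = [f"missing {prop}" for prop in REQUIRED_OG if not p.og.get(prop)]
    og_url = p.og.get("og:url")
    if og_url and p.canonical and og_url != p.canonical:
        issues.append("og:url not matching canonical")
    if not p.twitter.get("twitter:card"):
        issues.append("missing twitter:card")
    return issues
```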

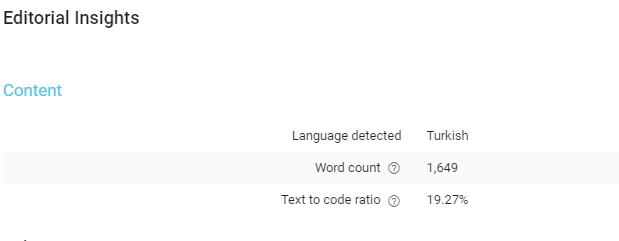

Content Audits with OnCrawl’s Crawl Systems

During the crawl, OnCrawl’s crawlers can extract the content of web pages to give insights to webmasters. It is a little surprising, but I had never seen an SEO crawler that can perform N-Gram analysis before. OnCrawl can.

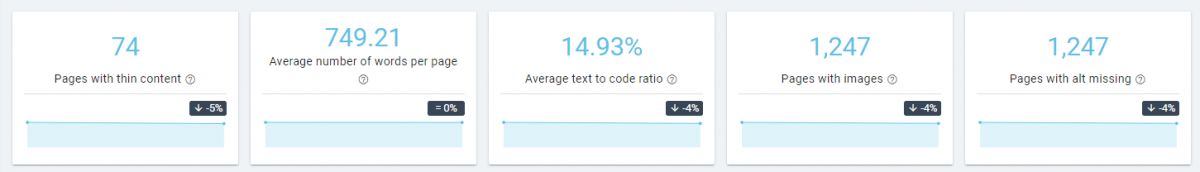

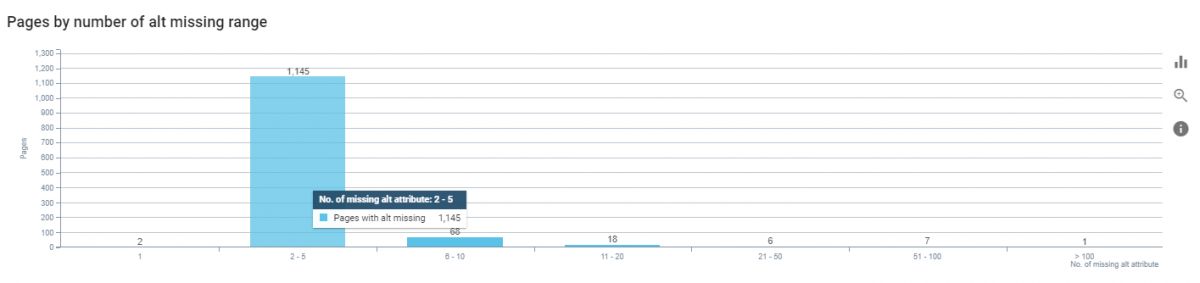

We can see the pages with thin content, average words per page, code/text ratio, pages with at least one image, and pages with missing alt tags. By simply comparing this information with that of the main competitors, as a data scientist would, we can see the main differences between websites.

We also have the number of web pages by content length. For instance, 73 of these web pages don’t have enough content: they have fewer than 150 words. 154 of the web pages have between 300 and 500 words. You can simply check these pages’ rankings and traffic performance against their competitors to see whether more detailed and granular content can help. N-Gram analysis can also help an SEO detect keyword stuffing and fix it according to Google’s Quality Guidelines.
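Word counts and length buckets like the ones above can be approximated from raw HTML. This Python sketch strips `<script>` and `<style>` content, counts `\w+` tokens, and defines code/text ratio as visible-text characters divided by HTML characters, which is an assumed definition. The bucket edges (150, 300, 500, 800, 1200) are inferred from the ranges mentioned in this review and may not match OnCrawl’s exactly.

```python
import re
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def content_stats(html: str):
    """Return (word count, assumed text-to-HTML character ratio) for one page."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())
    words = re.findall(r"\w+", text)
    return len(words), (len(text) / len(html) if html else 0.0)


def word_bucket(count: int, edges=(150, 300, 500, 800, 1200)) -> str:
    """Assign a word count to a length bucket (edges are inferred, not OnCrawl's)."""
    lower = 0
    for upper in edges:
        if count < upper:
            return f"{lower}-{upper}"
        lower = upper
    return f"{lower}+"
```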

Also, remember that thanks to Data Explorer, you can combine all of this information in any way you want. It is completely up to you: imagine that OnCrawl is your data scientist, and you only need to decide what data to extract to understand users and search engine algorithms.

Check the content length and word count by click depth.

Check the content length and word count by page segment.

In short, most of our content is between 500 and 800 words, and some of our page segments have less content than the others. I believe that in an industry such as insurance, web pages should have deeper, more detailed content with multi-layered granularity, along with sensible internal links.

And we see that most of the content doesn’t actually satisfy industry and user demands, whether viewed by page group or by depth.

Being able to analyze content length and depth by website segment matters especially in industries with varied needs. For example, a news site may need investigative journalism articles to be longer than 1,200 words, while “Breaking News” stories may need fewer than 500 words. It is important to see how search engine algorithms respond to such differences in needs. OnCrawl’s ability to show the effect of content length on organic performance by website segment is something few SEO crawlers can do.

Many thanks to Rebecca Berbel from OnCrawl for reminding me of this subtle and important detail.

N-Gram Analysis of the OnCrawl

N-Gram analysis examines sequences of N consecutive elements (here, words) in a text. Bigram, trigram, or four-gram charts can be used in different analyses. OnCrawl performs N-Gram analysis for sequences of up to six words, which is a really unique and useful feature for an SEO crawler. It lets you understand what the content is actually about instead of just looking at average numbers by click depth or page group.

You can also see how many times each N-gram occurs site-wide. This helps you understand the repetitive terms used on the website, and it is quite helpful for finding unique terms by comparing against competitors.

We also see long phrases repeated thousands of times without any optimized context. And when you compare two different website segments, or different websites in the same niche, you may see differences in the expertise and detail that the publishers and service providers cover. If the content is not detailed, you will probably see more “stop words” in the N-Gram analysis, while more informative content will show more “related concepts and details”. Thus, N-Gram analysis can be a sign of “expertise”. Don’t you agree? I recommend checking at least Google’s “Predicting Site Quality” patent, which shows how Google might use N-Gram analysis to predict site quality.
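Site-wide N-gram counting is straightforward to sketch: tokenize each page’s text, slide a window of N words over it, and tally the sequences. This Python example goes up to six words to mirror the limit described above; it does no stop-word filtering, so common words will dominate short N-grams, and it is not OnCrawl’s implementation.

```python
import re
from collections import Counter


def ngrams(tokens, n):
    """Sliding window of n consecutive tokens."""
    return zip(*(tokens[i:] for i in range(n)))


def site_ngrams(texts, n_max: int = 6, top: int = 10) -> dict:
    """Most frequent 1- to n_max-word sequences across all page texts."""
    counts = {n: Counter() for n in range(1, n_max + 1)}
    for text in texts:
        tokens = re.findall(r"\w+", text.casefold())
        for n, counter in counts.items():
            counter.update(" ".join(g) for g in ngrams(tokens, n))
    return {n: counter.most_common(top) for n, counter in counts.items()}
```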

You can also check how many images are missing alt tags.

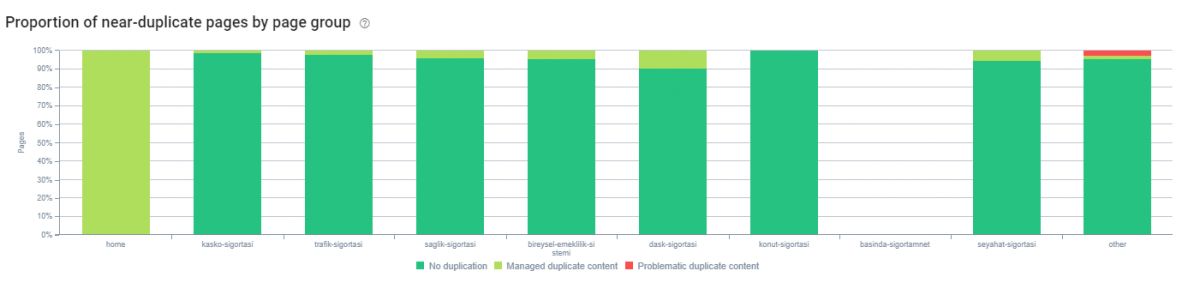

Duplicate Content Reports on OnCrawl

In OnCrawl, you can also use duplicate content reports to see the problematic sections of the website. You can use the filter to see different sections of a website for duplication problems.

You may see the quick statistics for duplication problems within a web page.

Naturally, this section has some report types similar to those in the “SEO Tags” section. But I believe this section of OnCrawl’s reports focuses fully on content rather than HTML tags.

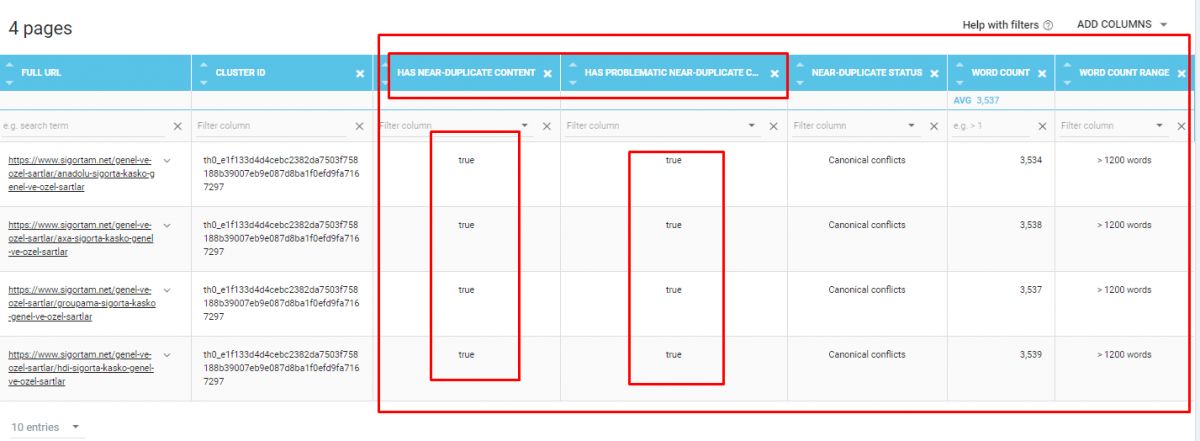

Also, in this section, we can see the duplication types on pages, as above, along with how often they occur on the website. We also have duplicate content management reports that include “canonical conflict”, “managed with canonicals”, and “all duplicate content” metrics.

It seems that we have 42 pages with completely duplicate content, 13 canonical conflicts, and 29 duplicates managed with canonicals. You may see the duplicate and near-duplicate content distribution below.

We also have a similarity report for the duplicate contents.

It shows the similarity of duplicate content and the distribution of duplicate content across page groups. You may check these pages and repurpose them to reduce their similarity.

Content Duplication, Similarity, and Content Clusters on OnCrawl Reports

Google clusters similar content to pick a representative version, using canonicalization signals. OnCrawl has a similar content management report for duplication problems.

You can change the cluster size and similarity percentage as you want to filter the duplicate contents. You may see how many URLs there are in the clusters. If you click one of these clusters, you will get the Data Explorer view below.

You may see the near duplicate and duplicate content problems with OnCrawl easily, also from the Data Explorer section, you may go to the URL Details by clicking one of these results as we will show in the end section of this review.
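The review does not detail OnCrawl’s similarity algorithm, so this Python sketch swaps in a common textbook approach: word-shingle sets compared with Jaccard similarity, with pages linked into clusters via union-find when they reach a threshold. `threshold` and `min_size` play roughly the role of the similarity percentage and cluster size filters described above; the pairwise comparison is O(n²) and only suits small samples.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Set of k-word shingles (overlapping word sequences)."""
    words = re.findall(r"\w+", text.casefold())
    return {" ".join(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def similarity_clusters(pages: dict, threshold: float = 0.8, min_size: int = 2) -> list:
    """Union-find clustering of pages whose shingle Jaccard similarity >= threshold."""
    urls = list(pages)
    sh = {u: shingles(pages[u]) for u in urls}
    parent = {u: u for u in urls}

    def find(u):
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path halving
            u = parent[u]
        return u

    for a, b in combinations(urls, 2):  # O(n^2) pairs: fine for a sketch only
        if jaccard(sh[a], sh[b]) >= threshold:
            parent[find(a)] = find(b)
    clusters = {}
    for u in urls:
        clusters.setdefault(find(u), []).append(u)
    return [sorted(c) for c in clusters.values() if len(c) >= min_size]
```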

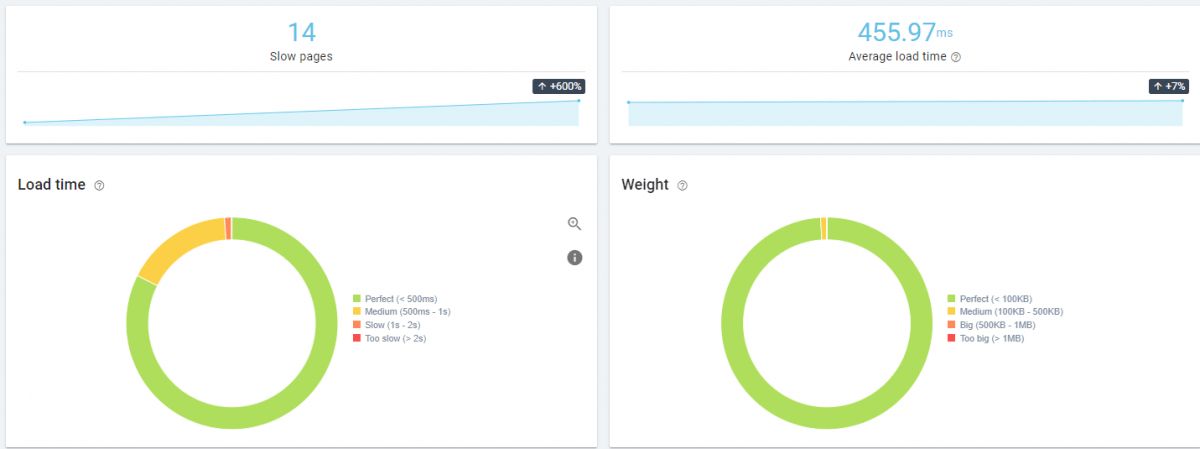

Payload Report of OnCrawl’s Crawl Process

Like in other sections, you may filter the web pages as you want to see their web page performance metrics. Thanks to OnCrawl, you can see the slow pages, the average size of web pages, and the average load time of web pages.

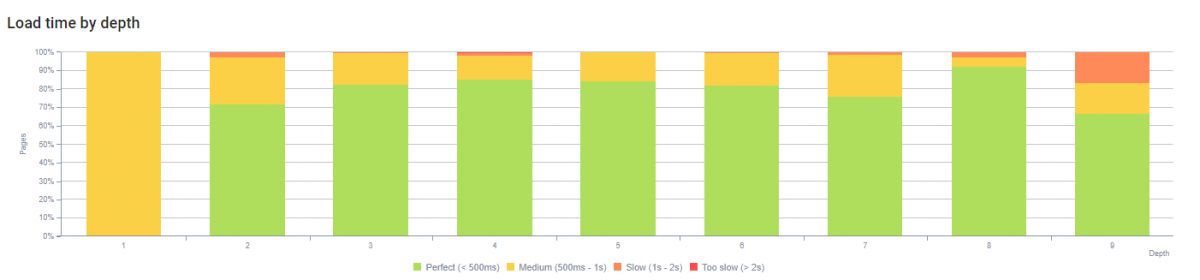

You may check the change trend and direction for the web page performance metrics with the Crawl over Crawl feature.

You can check the Load Time by page segments as below.

You can check load time by page groups.

You can check the load time by click depth.

You can check page weight or source size by click depth.

You can use OnCrawl as your own web page performance calculator and data scientist at the same time. Checking industry norms or top-performing websites will help you understand the general situation. You may check the winners of the 6 May Core Algorithm Update in the same niche and geography to see the differences and set a performance budget with the IT team.

And, using the Crawl over Crawl feature, you can track improvements while filtering the problematic pages with the help of OnCrawl’s Data Explorer and URL Details sections.

You may want to read our Page Speed Optimization Guidelines to better understand the web page loading performance metrics in SEO crawl reports.

- What is Largest Contentful Paint?

- How to Optimize Total Blocking Time?

- What is Cumulative Layout Shifting?

- How to Optimize Time to Interactive?

OnCrawl Ranking Report via Google Search Console Data

OnCrawl’s Ranking Report acts as a personal data scientist for an SEO by combining unique charts with Google Search Console data. You can evaluate Google Search Console data by page group, website section, and click depth. Thanks to URL Details and Data Explorer, you can directly see how many keywords a URL ranks for, how these queries can be grouped, and how they relate to Technical SEO issues, along with the URL’s log analysis.

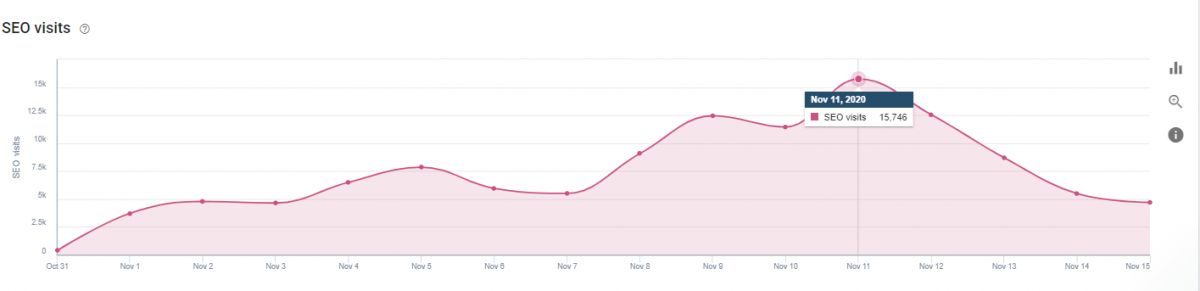

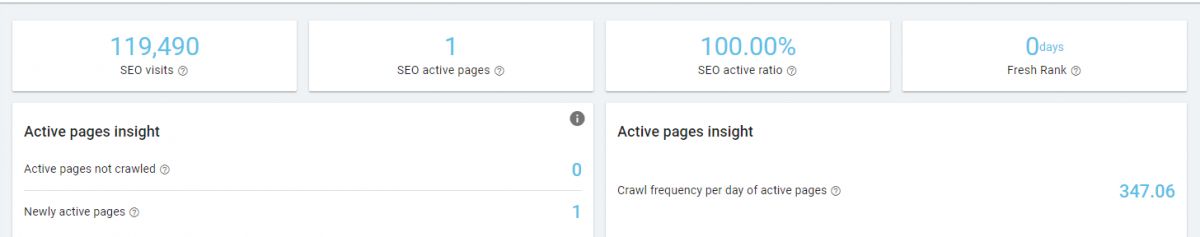

In the Ranking Report section, I will use a domain that is only 3 months old, to protect the information of the company we have used so far in this review. This domain reached the 100,000 organic traffic mark within 3 months by using Semantic SEO principles, with content added in a proper hierarchical structure and with contextual links, without any off-page or branding work.

You may also want to read the guidelines and articles below about domains to better understand what we mean by a “brand-new domain” or just a “domain”.

Thus, the importance of Technical SEO and Quality content can be seen in a better way.

Since this is a new domain, 242 of our pages appear in the SERP without getting any clicks. With OnCrawl, we can see how pages that receive traffic differ from those that don’t, and we can optimize these pages with OnCrawl as our data scientist.

In total, the 387 pages we are able to rank in Google have 1,430,144 impressions and 50,238 clicks.

OnCrawl can analyze Google Search Console data according to our web pages’ position data, along with the N-Gram profile of the queries.

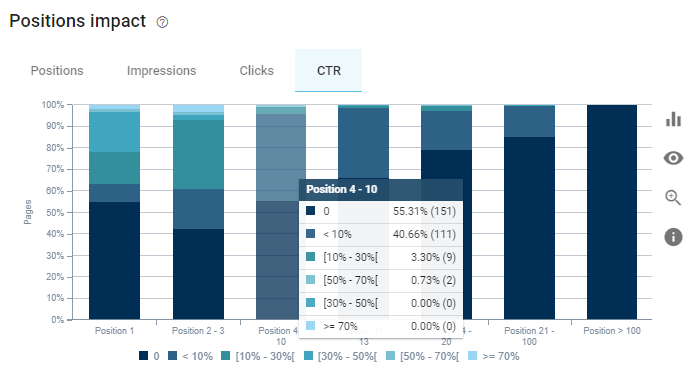

The first one is called Position Impact.

Position Impact groups the queries the website ranks for by position and extracts the clicks, impressions, CTR, and number of keywords for each group. We rank first for 60 queries, but they generate only 20,000 impressions. This suggests that most of them are “long-tail queries” with little competition.

We can also see that most of our impressions come from queries ranking in positions 4-10, with over 1,000,000 impressions, while queries in positions 2-3 bring only 114,897 impressions.

This means that our “brand-new domain” can become an active new player in the market thanks to a very efficient SEO project, but it still has a way to go and needs more authority. Our click data shows the same potential.

We get more clicks from the queries in positions 4-10 than from the queries in position 1. So if we strengthen the website a little more with better topical coverage and Semantic SEO principles, along with Technical SEO, it should show its real potential.
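To make the Position Impact logic concrete, here is a minimal Python sketch that groups Google Search Console query rows by average position and totals the queries, clicks, impressions, and CTR per group. It assumes rows shaped like a GSC query export (`query`, `position`, `clicks`, `impressions`) and uses made-up sample rows; the 1, 2-3, and 4-10 ranges follow the ones discussed above, while the `11-20` and `21+` ranges are hypothetical, and OnCrawl’s own bucketing may differ.

```python
from collections import defaultdict


def position_bucket(position):
    """Map an average GSC position to a range label.

    Edges sit halfway between integers, so 3.4 counts as "2-3" and 3.5 as "4-10".
    The "11-20" and "21+" ranges are illustrative assumptions.
    """
    if position < 1.5:
        return "1"
    if position < 3.5:
        return "2-3"
    if position < 10.5:
        return "4-10"
    if position < 20.5:
        return "11-20"
    return "21+"


def position_impact(rows):
    """Total queries, clicks, impressions and CTR for each position range."""
    stats = defaultdict(lambda: {"queries": 0, "clicks": 0, "impressions": 0})
    for row in rows:
        bucket = stats[position_bucket(row["position"])]
        bucket["queries"] += 1
        bucket["clicks"] += row["clicks"]
        bucket["impressions"] += row["impressions"]
    for bucket in stats.values():
        # CTR from the bucket totals (clicks / impressions), not a mean of per-query CTRs.
        imps = bucket["impressions"]
        bucket["ctr"] = bucket["clicks"] / imps if imps else 0.0
    return dict(stats)


# Made-up sample rows for illustration.
rows = [
    {"query": "car insurance quote", "position": 1.2, "clicks": 30, "impressions": 100},
    {"query": "travel insurance", "position": 6.0, "clicks": 10, "impressions": 400},
    {"query": "insurance", "position": 7.4, "clicks": 5, "impressions": 600},
]
print(position_impact(rows)["4-10"])
# {'queries': 2, 'clicks': 15, 'impressions': 1000, 'ctr': 0.015}
```

Computing CTR from the summed clicks and impressions keeps high-volume queries from being drowned out by many tiny ones, which matches how a per-range CTR is usually read.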

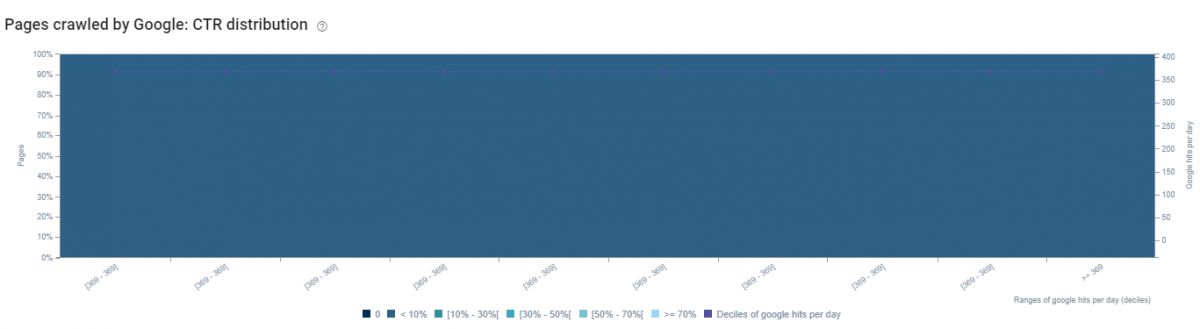

Our CTR data shows that most of our web pages have a CTR below 10%. This tells us where to focus and what to improve. If you click any of the colored segments on the bar plot, you will get the URLs behind the data.

You can easily see these URLs and their positions. On the left of this screen, other columns show complementary information.

Keyword Insights with N-Gram Analysis

Thanks to OnCrawl, you can combine Position Impact data with N-Gram Analysis and consolidate it with Google Search Console data. Thus, you can see which types of queries bring more clicks and impressions, and which types of queries your website needs to improve on.

You can also combine the N-Gram Analysis of your Google Search Console queries with the N-Gram Analysis of your website’s content. Is there any correlation between the site-wide N-Gram Analysis and GSC performance? You can also run the N-Gram Analysis on a single site section to see whether its terms correlate with its GSC performance for those words.
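As a rough illustration of combining query N-grams with content N-grams, the Python sketch below sums GSC impressions per query N-gram and then keeps only the N-grams that also occur in a page or section text. The row shape, the simple lower-case whitespace tokenization, and the sample data are assumptions; OnCrawl’s own tokenization and weighting may differ.

```python
from collections import Counter


def ngrams(text, n):
    """Word n-grams of a lower-cased, whitespace-tokenized text."""
    words = text.lower().split()
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


def query_ngram_impressions(rows, n):
    """Sum GSC impressions per n-gram; each n-gram counts once per query."""
    totals = Counter()
    for row in rows:
        for gram in set(ngrams(row["query"], n)):
            totals[gram] += row["impressions"]
    return totals


def overlap_with_content(query_grams, page_text, n):
    """Keep only the query n-grams that also occur in a page or section text."""
    content_grams = set(ngrams(page_text, n))
    return {gram: imps for gram, imps in query_grams.items() if gram in content_grams}


# Made-up sample data for illustration.
rows = [
    {"query": "car insurance quote", "impressions": 100},
    {"query": "cheap car insurance", "impressions": 50},
]
bigrams = query_ngram_impressions(rows, 2)
print(bigrams.most_common(1))  # [('car insurance', 150)]
print(overlap_with_content(bigrams, "Compare car insurance prices", 2))  # {'car insurance': 150}
```

Query N-grams with many impressions that never appear in the section’s content are candidates for the content gaps mentioned above.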

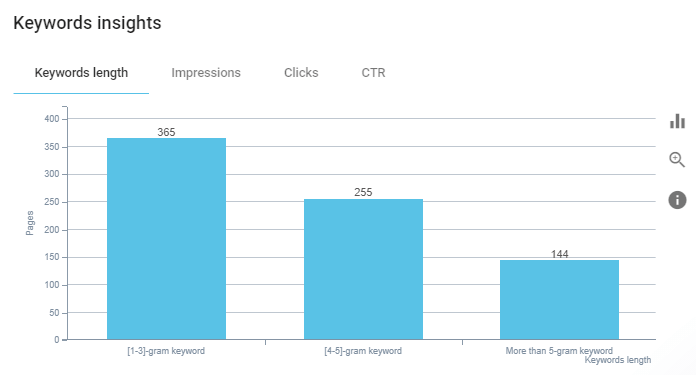

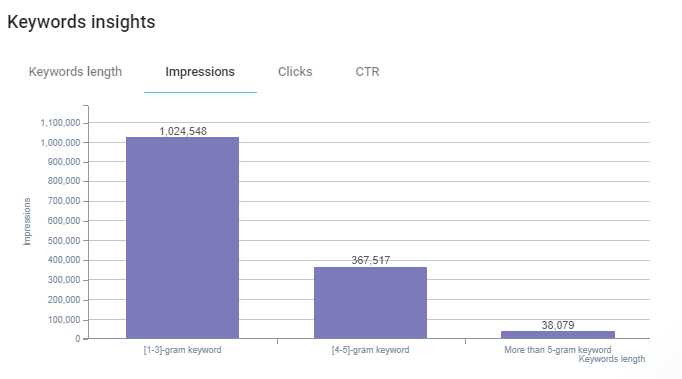

You may see the distribution of our queries according to their length and their N-Gram Analysis. This data shows that most of our queries are short-tail.

Most of our impressions come from queries with 1-3 words, followed by queries with 4-5 words. By clicking one of these bars, you can also check which URLs rank for which queries by query length, and how their performance correlates with query length.

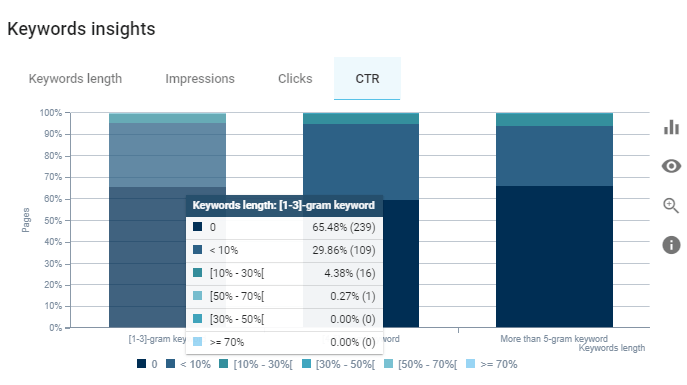

The Click-Through Rate (CTR) data broken down by query length can be found below.

We also see that our website doesn’t cover many long-tail queries. Queries with 5 or more words are usually satisfied by forum or Question & Answer content, but a website can also satisfy these types of queries with its comment section and multi-granular content.

Since this brand-new domain has no authority or historical data compared to its competitors, we can say that it is definitely successful and has big potential. You can analyze the most used words in 5-gram keywords and in URLs with 0% CTR to make them more responsive to the Search Engine Results Page. OnCrawl’s data assistance gives enough opportunity for this task.
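The query-length breakdown can be approximated from a GSC query export with a short Python sketch like the one below, which groups queries by word count (5 or more words are pooled as `5+`) and totals clicks, impressions, and CTR per group. The row shape and sample data are assumptions for illustration, not OnCrawl’s internal format.

```python
from collections import defaultdict


def length_bucket(query):
    """Word-count bucket for a query; 5 or more words are grouped as "5+"."""
    words = len(query.split())
    return "5+" if words >= 5 else str(words)


def performance_by_query_length(rows):
    """Queries, clicks, impressions and CTR per query-length bucket."""
    stats = defaultdict(lambda: {"queries": 0, "clicks": 0, "impressions": 0})
    for row in rows:
        bucket = stats[length_bucket(row["query"])]
        bucket["queries"] += 1
        bucket["clicks"] += row["clicks"]
        bucket["impressions"] += row["impressions"]
    for bucket in stats.values():
        imps = bucket["impressions"]
        bucket["ctr"] = bucket["clicks"] / imps if imps else 0.0
    # Sorting the string keys gives "1", "2", "3", "4", "5+".
    return dict(sorted(stats.items()))


# Made-up sample rows for illustration.
rows = [
    {"query": "insurance", "clicks": 1, "impressions": 100},
    {"query": "car insurance", "clicks": 4, "impressions": 80},
    {"query": "how to cancel car insurance online", "clicks": 0, "impressions": 20},
]
for length, s in performance_by_query_length(rows).items():
    print(length, s["queries"], s["impressions"], round(s["ctr"], 3))
```

The `5+` rows with a CTR of 0 are the long-tail queries the paragraph above suggests reviewing first.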

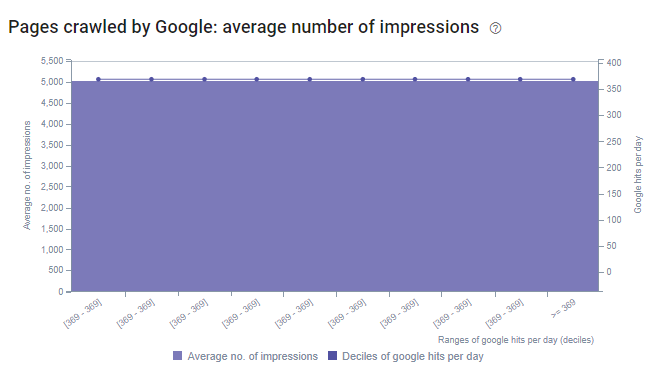

Average Number of Impressions and Clicks

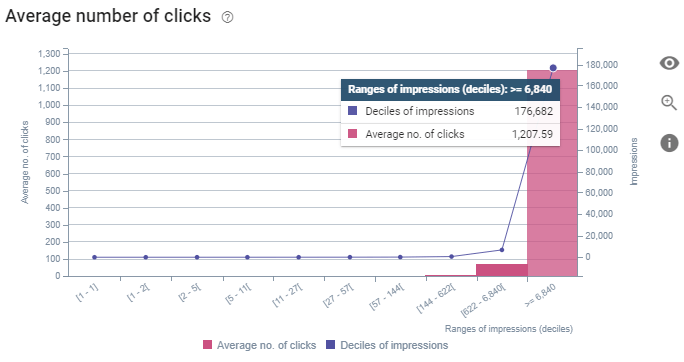

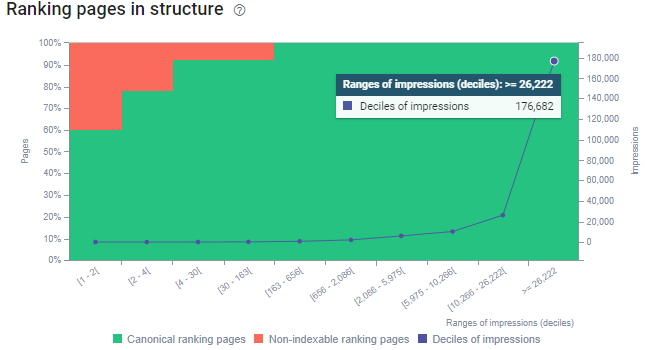

The Average Number of Impressions and Clicks section shows the organic traffic value per web page by comparing a website’s pages with high impressions and clicks against its pages with low impressions and clicks.

The average is 33,606 impressions for the most successful pages in terms of impressions. Below, you will see another example for the average clicks and deciles of impressions for the same segment.

In the graph here, each bar represents 10% of the total number of web pages. Grouping web pages by impression share rate is useful for seeing which part of the website gets more traffic.

For example, these graphs show that a small part (30%) of the website provides over 80% of the total impressions and clicks, while the rest of the site contributes far less. Thus, you can identify the most productive section of your website and analyze its pages to find patterns and differences.

However, a healthy website and SEO project should produce value from every segment of the website as much as possible. A distribution like this one suggests that a lot of SEO potential is being missed.
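The decile view described above can be reproduced with a small Python sketch: sort pages by impressions, split them into 10 groups of (nearly) equal page count, and compute each group’s share of total impressions. The impression values below are hypothetical, and OnCrawl may split pages slightly differently when the page count is not a multiple of 10.

```python
def decile_shares(impressions):
    """Share of total impressions held by each tenth of the pages.

    Pages are sorted by impressions (highest first) and split into 10
    groups of (nearly) equal page count, like the bars in the decile chart.
    """
    values = sorted(impressions, reverse=True)
    total = sum(values)
    n = len(values)
    shares = []
    for d in range(10):
        group = values[d * n // 10:(d + 1) * n // 10]
        shares.append(sum(group) / total if total else 0.0)
    return shares


def top_share(shares, k):
    """Cumulative share of the top k deciles, e.g. k=3 for the top 30% of pages."""
    return sum(shares[:k])


# Hypothetical site: 3 strong pages and 7 weak ones.
shares = decile_shares([900, 700, 500, 40, 30, 20, 10, 5, 3, 2])
print(f"Top 30% of pages: {top_share(shares, 3):.0%} of impressions")
# Top 30% of pages: 95% of impressions
```

A healthier distribution would show the cumulative share rising more gradually across the deciles.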

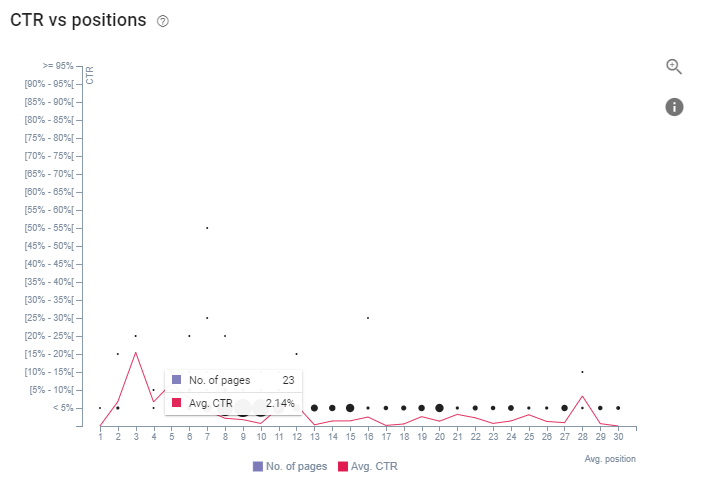

CTR and Position Correlations

The CTR and Position Correlation charts in OnCrawl’s reports are another insightful view that can show you which sections of the website to focus on.

For a more granular view within rows and columns, you may use the graph below.

It shows the average CTR of URLs at each ranking position, along with the pages whose CTR is below or above that average. By filtering the URLs with a single click, you can look for what these pages have in common in terms of Technical SEO and CTR. Thus, you can see the difference between pages with efficient and inefficient outcomes.
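The idea behind this chart can be sketched in Python: compute each URL’s CTR, average it per rounded position, and label every URL as above or below the average for its position. The page fields and sample data are hypothetical, and the average here is a plain (unweighted) mean of page CTRs, which may not be how OnCrawl computes it.

```python
from collections import defaultdict


def ctr_vs_position(pages):
    """Average CTR per rounded position, plus an above/below label per URL.

    `pages` are dicts with 'url', 'position', 'clicks' and 'impressions'.
    The average is the plain mean of page CTRs at that position (unweighted).
    """
    by_position = defaultdict(list)
    for p in pages:
        ctr = p["clicks"] / p["impressions"] if p["impressions"] else 0.0
        by_position[int(p["position"] + 0.5)].append((p["url"], ctr))  # round half up

    averages = {pos: sum(c for _, c in items) / len(items) for pos, items in by_position.items()}
    labels = {
        url: "above" if ctr >= averages[pos] else "below"  # "above" includes ties
        for pos, items in by_position.items()
        for url, ctr in items
    }
    return averages, labels


# Made-up sample pages for illustration.
pages = [
    {"url": "/a", "position": 2.2, "clicks": 20, "impressions": 100},
    {"url": "/b", "position": 1.8, "clicks": 10, "impressions": 100},
    {"url": "/c", "position": 7.0, "clicks": 1, "impressions": 50},
]
averages, labels = ctr_vs_position(pages)
print(labels)
```

The "below" group at a given position is the one worth checking for shared title, description, or structured data issues.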

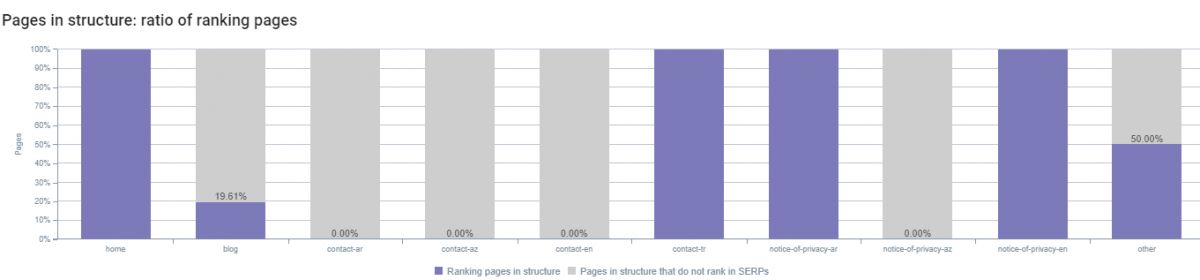

Average Position Distribution for Different Page Segments

When looking for the difference between successful and unsuccessful URLs, keep in mind that they may also be concentrated in specific website segments with specific profiles and features. Not surprisingly, OnCrawl has a solution for this as well.

If we used the previous website as an example, we could see in more detail the difference between the successful and unsuccessful sections of the website for various types of insurance. However, since we only have segments like “blog” and “homepage” in this example, the graph might look a little shallow.

In such cases, OnCrawl offers enough opportunity to divide the website into sections with its Custom Segmentation feature.

Besides position data, the segments can also be clustered by impression and click data.

We see that, in the blog segment, we get more clicks from pages ranking in positions 4-10.
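A segment-by-position breakdown like this one can be sketched in Python with URL-path rules. The rules below (`homepage`, `blog`) and the sample pages are hypothetical; OnCrawl’s Custom Segmentation is configured in its own interface, not with this code.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical segmentation rules, checked in order (first match wins).
SEGMENT_RULES = [
    ("homepage", lambda path: path in ("", "/")),
    ("blog", lambda path: path.startswith("/blog/")),
]


def segment_of(url):
    """Return the name of the first rule matching the URL path, else "other"."""
    path = urlparse(url).path
    for name, matches in SEGMENT_RULES:
        if matches(path):
            return name
    return "other"


def position_range(position):
    """Coarse ranges matching the ones discussed in this review."""
    if position < 3.5:
        return "1-3"
    if position < 10.5:
        return "4-10"
    return "11+"


def clicks_by_segment(pages):
    """Sum clicks for every (segment, position range) pair."""
    totals = defaultdict(int)
    for p in pages:
        totals[(segment_of(p["url"]), position_range(p["position"]))] += p["clicks"]
    return dict(totals)


# Made-up sample pages for illustration.
pages = [
    {"url": "https://example.com/", "position": 1.0, "clicks": 50},
    {"url": "https://example.com/blog/a", "position": 5.0, "clicks": 30},
    {"url": "https://example.com/blog/b", "position": 8.0, "clicks": 20},
    {"url": "https://example.com/blog/c", "position": 2.0, "clicks": 10},
]
print(clicks_by_segment(pages))
```

Adding more rules (for example, one per insurance type) gives the more granular segmentation described for the previous website.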

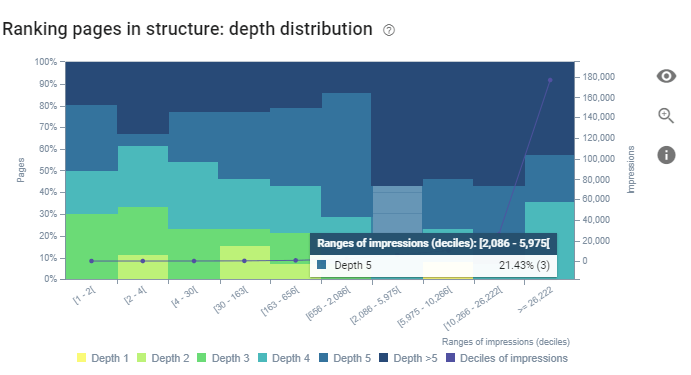

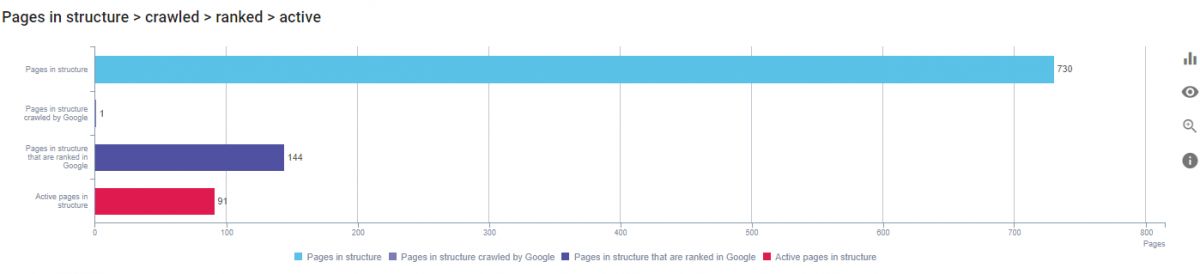

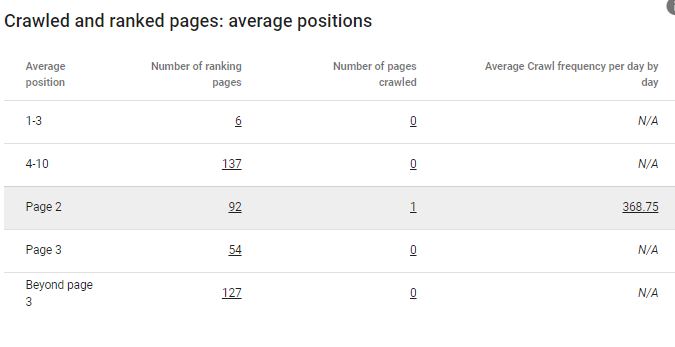

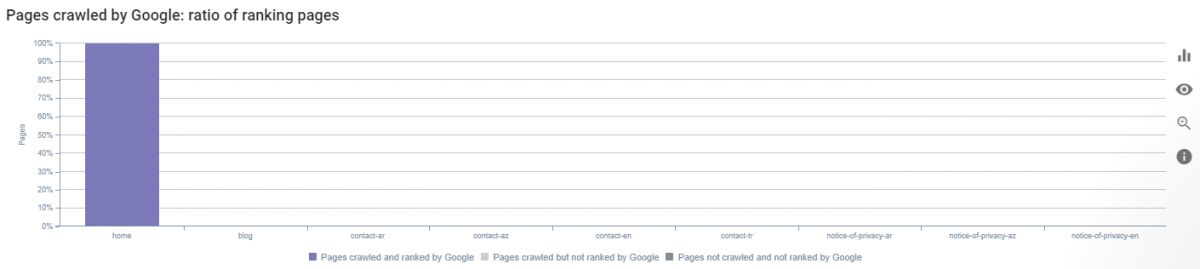

Ranking Indexation Report of OnCrawl

The Ranking Indexation report is a section where GSC data and Technical SEO data are correlated to create insights. By showing how many of the ranking URLs have enough internal links and how many of them are canonical URLs, this section helps you better understand the potential of the website.

We have 677 pages on our website, and only 387 of them have impressions.

250 ranking pages do not have enough internal links and 127 of them are canonical URLs.

By clicking these URLs, you can also see suitable internal linking opportunities.

You may see the same information with a more comparative visual as above.

As in the previous example, you can also segment the website by averaging impression and click data with position data. We also see that some of our non-indexable pages are being indexed, which suggests that our internal link structure, robots.txt file, or canonicalization signals may be used in the wrong way.

We also see that both canonicalized URLs and non-canonical URLs appear in the SERP with impressions and clicks. These can also be fixed through Technical SEO. As in the previous examples, we can also aggregate this information by website segment, as below.
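The cross-check behind a ranking/indexation view can be sketched by joining crawl data with GSC impressions, as in the Python below. The input shapes, the sample data, and especially the `min_inlinks` threshold for “enough” internal links are hypothetical; OnCrawl’s own definition of “enough” is not stated here.

```python
def ranking_indexation_summary(crawl, impressions, min_inlinks=10):
    """Cross crawl data with GSC impressions, roughly like a ranking/indexation view.

    crawl:       {url: {"inlinks": int, "canonical": url or None}}
    impressions: {url: impressions from Google Search Console}
    min_inlinks: hypothetical threshold for "enough" internal links.
    """
    ranking = sorted(u for u, imps in impressions.items() if imps > 0)
    crawled = [u for u in ranking if u in crawl]
    return {
        "pages": len(crawl),
        "ranking_pages": len(ranking),
        "few_inlinks": [u for u in crawled if crawl[u]["inlinks"] < min_inlinks],
        # Canonical = no canonical tag, or a self-referencing one.
        "canonical": [u for u in crawled if crawl[u]["canonical"] in (None, u)],
        # Ranking URLs whose canonical points to another URL (canonicalized pages).
        "canonicalized": [u for u in crawled if crawl[u]["canonical"] not in (None, u)],
    }


# Made-up sample data for illustration.
crawl = {
    "/a": {"inlinks": 2, "canonical": None},
    "/b": {"inlinks": 15, "canonical": "/b"},
    "/c": {"inlinks": 1, "canonical": "/b"},
    "/d": {"inlinks": 5, "canonical": None},
}
impressions = {"/a": 10, "/b": 300, "/c": 5, "/d": 0}
print(ranking_indexation_summary(crawl, impressions))
```

Canonicalized URLs that still earn impressions, like `/c` here, are the mixed canonicalization signals described above.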

My favorite chart in this section is actually the one showing “Orphaned Pages” and their share of impressions by position.

Here, the orange pages are the orphaned pages, while the blue pages are the pages in the site structure. As we can see, there is a clear split between successful and unsuccessful pages in terms of InRank and position.

Just by linking these orphaned pages, you can increase your organic traffic, as the data shows.
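The orphaned-page comparison can be sketched in Python by comparing the URLs reached through internal links with the URLs that have GSC data. This sketch assumes a simple definition (a URL with GSC impressions that the link crawl never reached); OnCrawl can also detect orphans from other sources such as log files, and the sample data is made up.

```python
def orphan_report(linked_urls, impressions):
    """Compare orphaned and linked pages by their GSC impressions.

    linked_urls: URLs reached by following internal links from the start URL.
    impressions: {url: impressions} from Google Search Console.
    Here an orphaned page is a URL with GSC data that the link crawl never reached.
    """
    linked = set(linked_urls)
    orphans = {u: imps for u, imps in impressions.items() if u not in linked}
    in_structure = {u: imps for u, imps in impressions.items() if u in linked}

    def average(values):
        return sum(values) / len(values) if values else 0.0

    return {
        "orphans": sorted(orphans),
        "avg_orphan_impressions": average(list(orphans.values())),
        "avg_linked_impressions": average(list(in_structure.values())),
    }


# Made-up sample data for illustration.
report = orphan_report(
    ["/", "/blog/a"],
    {"/": 1000, "/blog/a": 200, "/old-post": 4, "/landing": 6},
)
print(report)
```

The `orphans` list is the set of URLs to link from relevant pages before re-crawling and comparing again.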

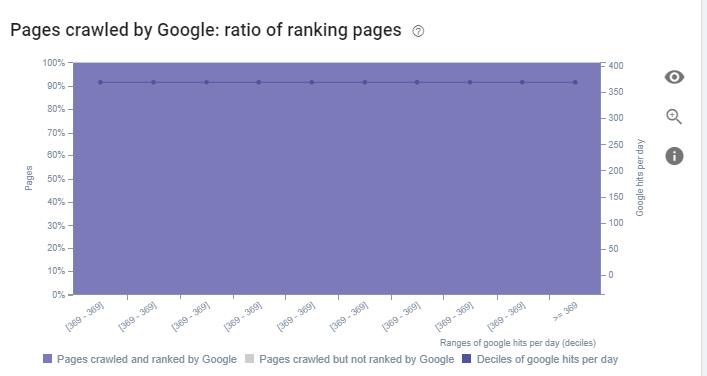

Characteristics of Ranking Pages based on GSC and SERP Data

OnCrawl has another awesome section about ranking factors. By examining web pages and their SERP performance together with their position in the website, content, on-page SEO elements, and technical SEO situation, you can identify a handful of likely ranking factors and turn them into insights.

The Ranking Pages report includes only the pages with 1 or more impressions, so 290 of our 677 URLs (nearly half) won’t be included in these report sections. The Characteristics of Ranking Pages in Structure chart shows the number of web pages along the following data columns:

- Ranking Pages

- Ranking Pages in Structure

- Average Depth

- Average InRank

- Average Number of Inlinks

- Average Load Time

- Average Words

- With an Optimized Title

From this chart, we can see that:

- Our pages on the SERP’s third page are slower, and their content is shorter than that of the others.

- Pages in positions 1-3 have the highest InRank.

- Pages on the SERP’s third page do not have enough InRank.

- Pages in positions 4-10 are not close enough to the homepage.
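A table like this one can be approximated from a crawl export joined with GSC data. The Python sketch below keeps only ranking pages (1 or more impressions), assigns each one to a SERP page assuming 10 organic results per page, and averages a few crawl metrics per SERP page. The column names (`depth`, `inrank`, `inlinks`, `load_time_ms`, `words`) are hypothetical stand-ins for the chart’s columns.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical column names for a crawl export joined with GSC data.
METRICS = ("depth", "inrank", "inlinks", "load_time_ms", "words")


def serp_page(position):
    """SERP page for an average position, assuming 10 organic results per page."""
    return int((position - 1) // 10) + 1


def characteristics_by_serp_page(pages):
    """Average crawl metrics of ranking pages (>= 1 impression), per SERP page."""
    groups = defaultdict(list)
    for p in pages:
        if p["impressions"] >= 1:
            groups[serp_page(p["position"])].append(p)
    report = {}
    for page_no, members in sorted(groups.items()):
        row = {m: mean(p[m] for p in members) for m in METRICS}
        row["ranking_pages"] = len(members)
        report[page_no] = row
    return report


# Made-up sample pages for illustration; the last one has no impressions.
pages = [
    {"position": 2, "impressions": 50, "depth": 1, "inrank": 9, "inlinks": 40, "load_time_ms": 800, "words": 1500},
    {"position": 5, "impressions": 20, "depth": 3, "inrank": 5, "inlinks": 10, "load_time_ms": 1200, "words": 900},
    {"position": 24, "impressions": 3, "depth": 5, "inrank": 2, "inlinks": 4, "load_time_ms": 2500, "words": 400},
    {"position": 40, "impressions": 0, "depth": 6, "inrank": 1, "inlinks": 1, "load_time_ms": 3000, "words": 200},
]
for page_no, row in characteristics_by_serp_page(pages).items():
    print(page_no, row)
```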

To support these correlations and insights, OnCrawl also has individual charts for every intersection between the columns and rows.

Below, you can see the correlation between ranking pages and their distance from the homepage, as well as the distribution of InRank in terms of impressions.

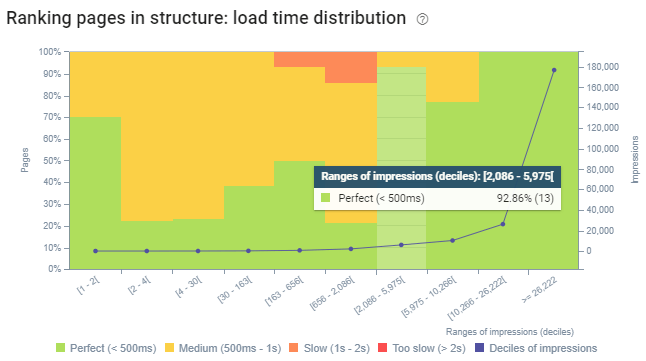

Load Time and Performance Distribution can be seen below.

We see that slow pages generally also have fewer impressions. So improving web page performance can change this chart: you can apply the quick fixes for web page load time and experience, and then use the Crawl over Crawl feature to see the differences.

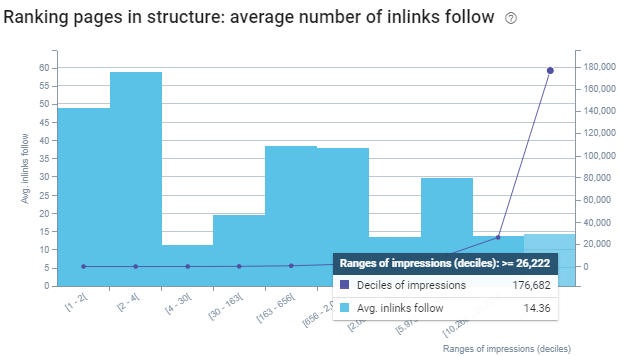

In terms of the number of inlinks, we see that the pages with the most impressions have fewer internal links than the others. Examining the internal links’ characteristics, positions, and types can help in this situation.

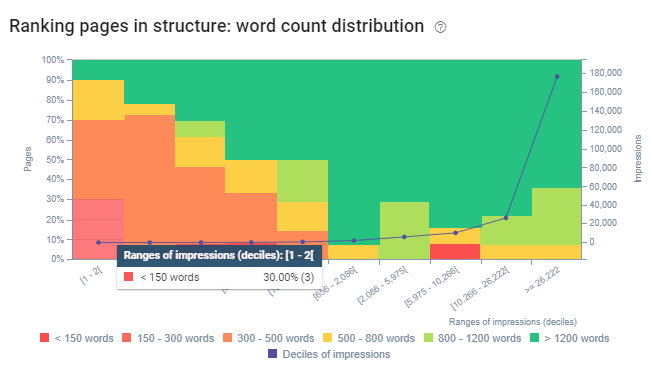

I think “word count distribution” is one of the strongest signals so far. We know that content length is not a ranking factor, but there can be a correlation between content granularity, level of detail, and word count.

Let me open a small parenthesis here: there is a difference between a factor and a signal. Word count is not a ranking factor, but it is a related, helper signal that ranking and quality algorithms can use when determining quality, because the amount of information in a piece of content usually correlates with its word count. That’s why OnCrawl reports “Word Count”.
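To check whether word count and impressions move together on your own site, you can compute a correlation coefficient from a crawl export joined with GSC data. The Python sketch below implements the Pearson coefficient with the standard library only; the sample numbers are hypothetical, and a high value shows correlation, not that word count causes rankings.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / sqrt(vx * vy)


# Hypothetical word counts and impressions for four ranking pages.
word_counts = [400, 900, 1500, 2200]
impressions = [30, 120, 400, 650]
print(f"r = {pearson(word_counts, impressions):.2f}")
```

Because impressions are usually heavily skewed, a rank-based coefficient (Spearman) is often a sturdier choice on real data; Pearson is shown here only because it is the simplest to write out.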

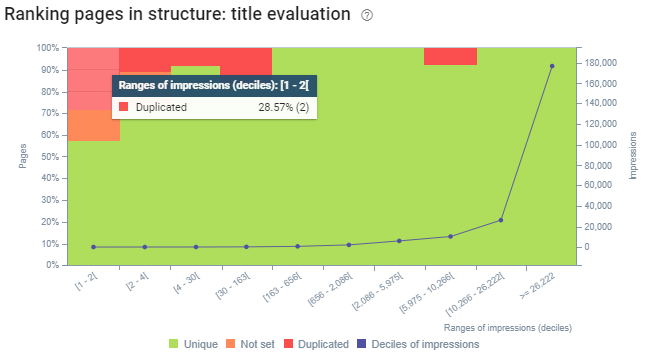

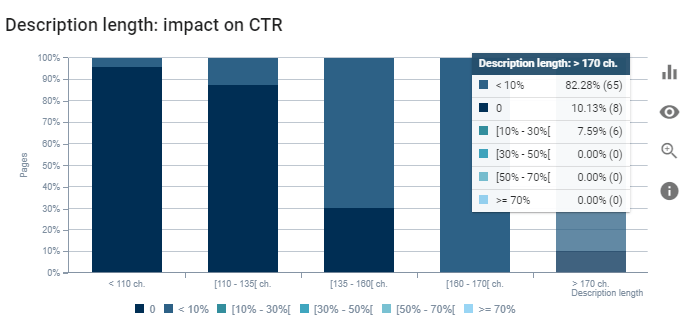

We see that pages with optimized titles have better ranking performance. In OnCrawl, you can measure the correlation between meta tag length and CTR over repeated crawls.

We also see that shorter meta descriptions may be related to lower CTR. By clicking these bars, you can check the characteristics of these URLs. You can also check the correlation between CTR and structured data, as below.

We see that pages without structured data have a lower CTR. We can also group the high-CTR pages with and without structured data for a deeper comparison, along with the Structured Data Type and Organic Traffic Performance charts I showed before.

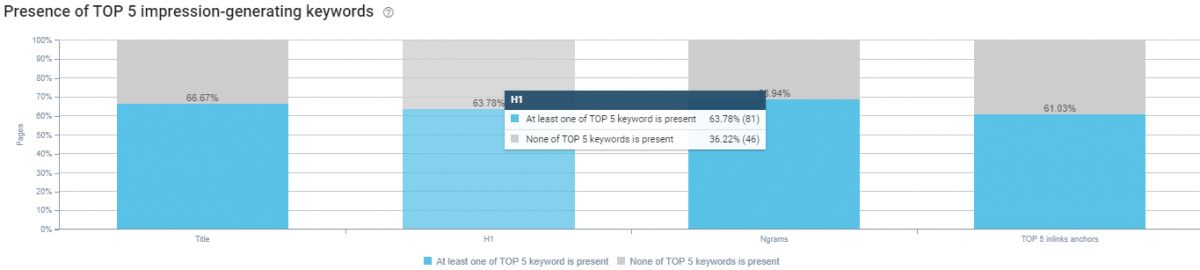

We can also see the top 5 impression-generating queries in the anchor texts, title tags, H1 tags, and N-Gram Analysis, so you may find more queries to gain better visibility.

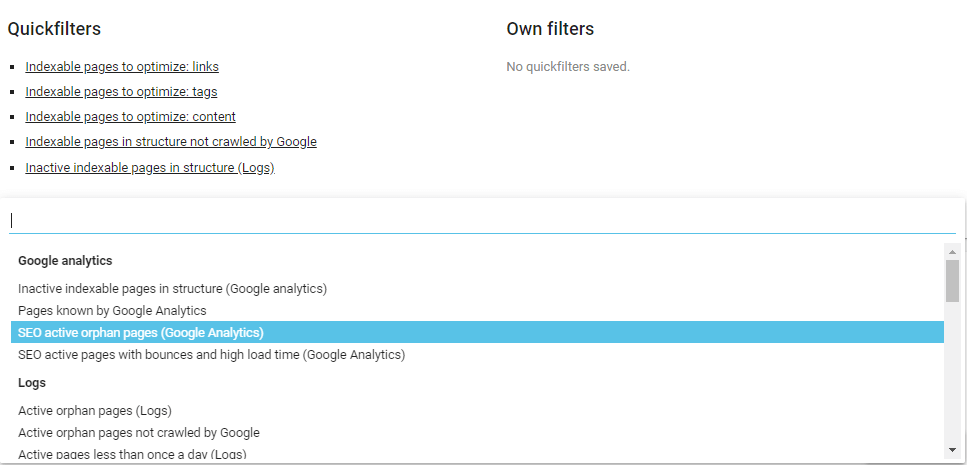

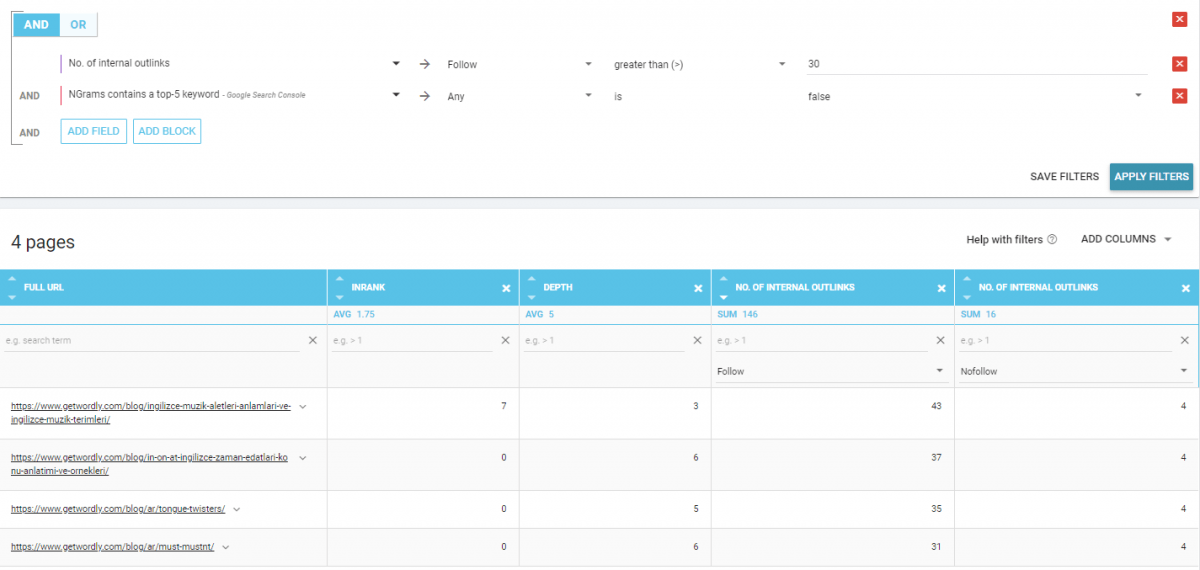

OnCrawl Data Explorer and Single URL Examination Attributes

All the reports I mentioned during this OnCrawl review are crawl and correlation reports of three types: site-wide, segment-wide, and URL-based. It is a great advantage to switch quickly between all these views, down to individual URLs, with different columns, filters, and quick filters specific to on-page, Technical SEO, and GSC data. In addition, OnCrawl allows filtering different types of URLs and their related data with the “and” and “or” logical operators.

OnCrawl also follows an important principle for helping SEOs: “You need to have access to all the data for your crawl analysis.” In other words, via the Data Explorer, you can export your data as a CSV or spreadsheet with unlimited rows, and you can work with probably more than 300 data columns for your analysis. I recommend checking OnCrawl’s Data Explorer and its unlimited “Export” option for a broad SEO analysis.
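Once the data is exported, the same “and”/“or” filtering logic can be reproduced outside OnCrawl. The Python sketch below combines row predicates with AND/OR helpers and writes the selected columns back to CSV. The helper names `all_of` and `any_of`, the column names, and the sample rows are hypothetical; this is not OnCrawl’s filter syntax.

```python
import csv
import io


def all_of(*preds):
    """Combine predicates with logical AND."""
    return lambda row: all(p(row) for p in preds)


def any_of(*preds):
    """Combine predicates with logical OR."""
    return lambda row: any(p(row) for p in preds)


def export_csv(rows, columns):
    """Write the selected columns of every row to CSV text (extra keys are ignored)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical exported rows.
rows = [
    {"url": "/a", "indexable": True, "inlinks": 2, "clicks": 10},
    {"url": "/b", "indexable": True, "inlinks": 20, "clicks": 0},
    {"url": "/c", "indexable": False, "inlinks": 1, "clicks": 0},
    {"url": "/d", "indexable": True, "inlinks": 20, "clicks": 5},
]
# indexable AND (fewer than 5 inlinks OR zero clicks)
weak = all_of(lambda r: r["indexable"], any_of(lambda r: r["inlinks"] < 5, lambda r: r["clicks"] == 0))
selected = [r for r in rows if weak(r)]
print(export_csv(selected, ["url", "inlinks"]))
```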

Custom Dashboard Builder of OnCrawl

With OnCrawl, you can create your own custom data extraction dashboard. This lets you get clearer insights by combining data taken from different points. Below you will see an example for indexing and crawling.

For instance, out of the many SEO terms we have mentioned, we can collect all the “orphaned pages”, “less crawled pages”, and “zero-click pages” together with every relevant data correlation graph to profile them better within a single report screen.

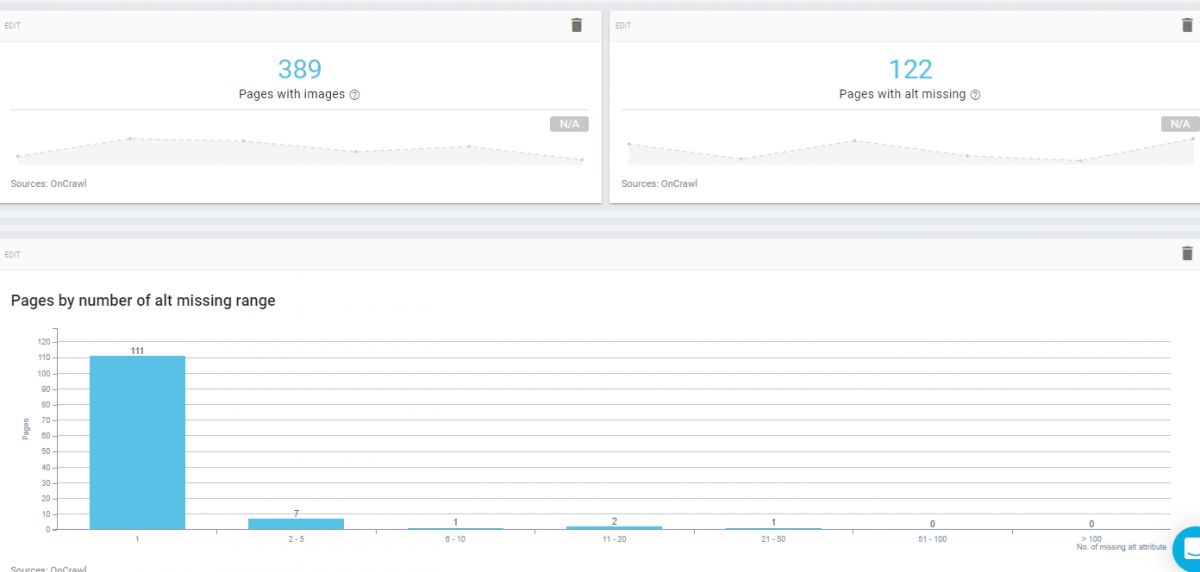

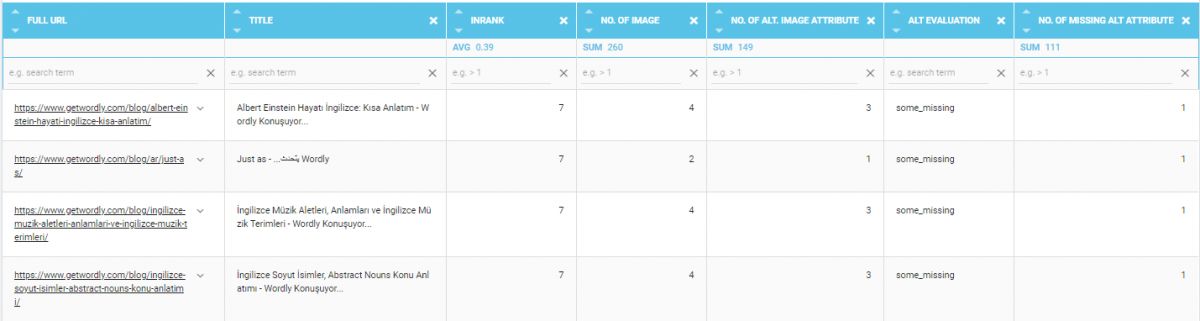

After you check the data you need, you can clear the dashboard and select another section from the left sidebar. For instance, if you just want to see information about image tags, you can see it clearly.

111 of our pages have at least one image without an alt tag. It might be the author photo or the featured image of the post; you can find out just by clicking the bar of the bar plot. Below is an example of the pages with one image missing its alt tag.
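If you want to spot-check this count on a few pages yourself, a small Python sketch with the standard library’s `html.parser` can count `<img>` tags that have no `alt` attribute at all. It assumes you already have each page’s HTML as a string (the sample pages are made up), and it deliberately does not flag `alt=""`, which is valid for decorative images.

```python
from html.parser import HTMLParser


class MissingAltCounter(HTMLParser):
    """Count <img> tags that have no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = 0

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> also arrives here via the default handle_startendtag.
        if tag == "img" and "alt" not in dict(attrs):
            self.missing += 1


def pages_with_missing_alt(pages):
    """pages: {url: html}. Return {url: missing_count} for pages with at least one."""
    result = {}
    for url, html in pages.items():
        parser = MissingAltCounter()
        parser.feed(html)
        parser.close()
        if parser.missing:
            result[url] = parser.missing
    return result


# Made-up sample pages for illustration.
pages = {
    "/a": '<img src="author.jpg"><img src="chart.png" alt="Chart">',
    "/b": '<img src="logo.png" alt="Logo" />',
    "/c": '<p>Text</p><img src="hero.jpg" alt="" /><img src="w.png">',
}
print(pages_with_missing_alt(pages))
```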

If you click the SEO Tags from the sidebar, you will see another “Quick Diagnostic Dashboard” as below.

We see that we have 265 duplicate H1s and 223 duplicate meta descriptions, but only some of them are actually problematic.
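One way to separate problematic duplicates from harmless ones is sketched below in Python: URLs are grouped by a normalized H1 or meta description, and pages whose canonical points to another URL are skipped. That skip rule, the field names, and the sample data are hypothetical; OnCrawl’s own duplicate detection may use different criteria.

```python
from collections import defaultdict


def duplicate_groups(pages, field):
    """Group URLs that share the same normalized value of `field` (e.g. "h1").

    Values are lower-cased with whitespace collapsed. Pages whose canonical
    points to another URL are skipped, as one hypothetical way to keep only
    the potentially problematic duplicates.
    """
    groups = defaultdict(list)
    for p in pages:
        value = " ".join((p.get(field) or "").lower().split())
        canonical = p.get("canonical")
        if not value or (canonical and canonical != p["url"]):
            continue
        groups[value].append(p["url"])
    return {value: urls for value, urls in groups.items() if len(urls) > 1}


# Made-up sample pages for illustration.
pages = [
    {"url": "/a", "h1": "Car Insurance", "canonical": None},
    {"url": "/b", "h1": "car  insurance ", "canonical": "/b"},
    {"url": "/c", "h1": "Car Insurance", "canonical": "/a"},
    {"url": "/d", "h1": "Travel Insurance"},
]
print(duplicate_groups(pages, "h1"))
```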

You can also look at the Dashboard Builder’s sidebar and option hierarchy to better understand what you can extract from it.

You can blend data from log files, Google Search Console, Google Analytics, Majestic, and AT Internet. Each section has its own customizable sub-sections. Since I didn’t connect Google Analytics or any data source other than Google Search Console for this crawl, I will show just an example from Google Search Console.

As you can see, I selected a minor sub-section from the GSC option of the Dashboard Builder and used it for the Keyword Insights that I showed before.

How To Download a Report from OnCrawl?