There are many Python modules that can be used to crawl a website thoroughly and at scale. Custom scripts written with modules such as Scrapy, BeautifulSoup, and Requests are sufficient for this. However, there was no free Python module or script that could crawl any website in as much detail as Screaming Frog. Elias Dabbas changed this situation. Advertools, which we have covered in many guidelines for Holistic SEOs in our Python SEO category, can help you do better than a third-party crawler with its “crawl()” function.

In this article, we will show you how to crawl a website with Advertools and Python, and how to evaluate the data in ways that paid third-party tools do not offer.

Before going further: if you do not have much coding experience, it may be useful to read our other articles showing what can be done with Advertools for Python SEO.

How to Crawl a Website and Examine It via Python

We will use Advertools' “crawl” function to crawl a website and load the crawled data into a data frame. First, we will import the necessary libraries.

import pandas as pd

from advertools import crawl

import advertools as adv  # the later examples use the "adv." prefix

We have chosen a small web entity so that our example stays easy to follow and cheap in terms of time and cost.

crawl('https://encazip.com', 'encazip.jl', follow_links=True)

enczp = pd.read_json('encazip.jl', lines=True)

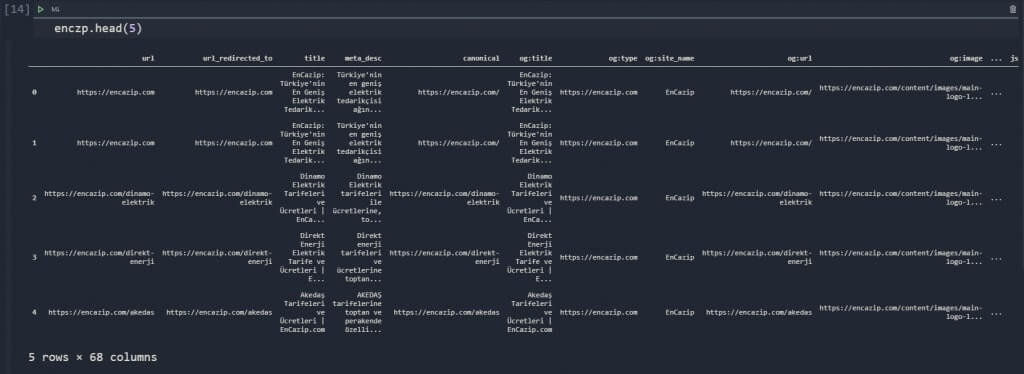

enczp.head(5)

- The “crawl()” call crawls “Encazip.com”, a company that helps electricity consumers switch their electricity distribution company. We saved the output in “jl” (JSON Lines) format. The “follow_links=True” parameter makes the crawler follow the links it finds on each page.

- We created a variable named “enczp” and read the “encazip.jl” output with “lines=True” (one JSON object per line), so that the crawled data is loaded into a data frame.

- We displayed the first five rows of the data frame.

You may see the output below.

We have 68 columns. Just look at the column names to see how much data the crawl gives us.

enczp.columns

You can see our column names in the screenshot below:

Since listing all 68 column names at once may look cluttered, you can see the relevant points grouped below.

- Status Code

- URL, Title

- Description

- Canonical

- Open Graph

- Twitter Cards

- Response Size

- Heading Tags

- Body Text

- Internal Links

- Crawl Time

- Response Headers

- Structured Data

- Request Headers

- Image Alt Tags

- Nofollow Links

- Anchor Texts

- Response Latency

- Image Sources

Also, Advertools lets us add more columns through its “css_selectors” and “xpath_selectors” parameters. We will show an example later.

It is possible to get all of this data without any paid SEO crawler. Also, thanks to Python modules and libraries and a Holistic SEO vision, this free and unlimited crawler developed by Elias Dabbas gives us far more opportunities for our SEO projects than the use cases of third-party crawlers.

You can crawl two separate websites and compare them visually on many points such as content, headings, titles, canonicals, URLs, response headers, structured data, latency and more. Or you can compare their content with NLP algorithms, analyze what percentage of their titles are sarcastic, or perform entity extraction and relationship visualization. With Python, you can work on everything from site speed and security to on-page SEO elements with unlimited flexibility and creativity.

To learn more about Python SEO, you may read the related guidelines:

- How to resize images in bulk with Python

- How to perform DNS Reverse Lookup thanks to Python

- How to perform TF-IDF Analysis with Python

- How to perform text analysis via Python

- How to test a robots.txt file via Python

- How to Compare and Analyse Robots.txt File via Python

- How to Categorize Queries via Python?

- How to Categorize URL Parameters and Queries via Python?

How to Check whether there is a missing title (and more) on our website via Python?

We can use the “isna()” and “value_counts()” methods.

enczp['title'].isna().value_counts()

OUTPUT>>>

False 98

Name: title, dtype: int64

In this example, we don't have any missing title tags. You can use the same method for canonical tags, image alt tags, heading (h1 to h6) tags, structured data, Open Graph and Twitter Card tags. We chose meta titles just as an example to keep the article brief.
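Instead of repeating this check column by column, you can count the missing values of several on-page columns at once. The sketch below runs on a small made-up data frame; the column names (“title”, “meta_desc”, “canonical”, “h1”, “og:title”) are assumptions modeled on the crawl output, so check “enczp.columns” for the exact names in your own crawl. The helper “missing_report” is hypothetical.

```python
import pandas as pd

# Tiny stand-in for a crawl data frame (hypothetical values)
crawl_df = pd.DataFrame({
    'url': ['https://example.com/', 'https://example.com/a', 'https://example.com/b'],
    'title': ['Home', 'Page A', None],
    'meta_desc': ['Welcome', None, None],
    'canonical': ['https://example.com/', 'https://example.com/a', 'https://example.com/b'],
    'h1': ['Home', 'A', 'B'],
})


def missing_report(df, columns):
    """Count missing values (and their share) for each requested column that exists in df."""
    present = [col for col in columns if col in df.columns]
    missing = df[present].isna().sum()
    return pd.DataFrame({'missing': missing, 'share': (missing / len(df)).round(2)})


report = missing_report(crawl_df, ['title', 'meta_desc', 'canonical', 'h1', 'og:title'])
print(report)
```

Columns that don't exist in the data frame (here “og:title”) are skipped instead of raising a KeyError, which is handy because a crawl may not contain every column.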

How to Check whether there is a missing meta description on our website via Python?

We can use the same “isna()” and “value_counts()” methods for other columns as well.

enczp['meta_desc'].isna().value_counts()

OUTPUT>>>

False 97

True 1

Name: meta_desc, dtype: int64

We have one missing description in this case. So, how can we see it?

How to find Missing Values in the Columns?

Once we know that a column has missing values, we can pull the rows that contain them.

enczp[enczp['meta_desc'].isna()]

You may see the result below.

We don't have a meta description for this URL because it returns a 404 status code. So, why is it in our data frame? Because the Encazip website has broken internal links, which led the crawler to these unnecessary URLs.

This reminds us that we should also check the status codes.

How to check Status Codes of URLs from a Website with Python

We will use the same methodology. Choosing the “status” column and using the “value_counts()” method will give the data we want.

enczp['status'].value_counts()

OUTPUT>>>

200 97

404 1

Name: status, dtype: int64

We have only one 404 URL, and it is the one with the missing description.
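Since an error page explains the missing description, it can be useful to list every non-200 URL directly and check whether those URLs account for the missing values. A minimal sketch on made-up rows; with your real crawl, you would use “enczp” instead of the hypothetical “crawl_df”.

```python
import pandas as pd

# Hypothetical crawl rows; with a real crawl, use enczp instead of crawl_df
crawl_df = pd.DataFrame({
    'url': ['https://example.com/', 'https://example.com/old', 'https://example.com/about'],
    'status': [200, 404, 200],
    'meta_desc': ['Welcome', None, 'About us'],
})

# All URLs whose HTTP status code is not 200
non_200 = crawl_df.loc[crawl_df['status'] != 200, ['url', 'status']]
print(non_200)

# Are all pages without a description among the non-200 URLs?
missing_desc_urls = set(crawl_df.loc[crawl_df['meta_desc'].isna(), 'url'])
print(missing_desc_urls.issubset(non_200['url']))
```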

How to Check Average Meta Title Length of a Web Site via Python?

To perform this task, we need to combine the “str” accessor with the “len()” and “mean()” methods. In the same way, we can find the shortest and longest titles and their character counts. We can use this information to compare the general state of different web entities. You can also apply this data science perspective to SERP analysis.

enczp['title'].str.len().mean()

OUTPUT>>>

50.8469387755102

Our average title length is about 50.85 characters.
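The same “str.len()” result also gives the shortest and longest titles. A minimal sketch on hypothetical titles, using “idxmin()” and “idxmax()” to look up the titles themselves and “describe()” for a quick summary:

```python
import pandas as pd

# Hypothetical meta titles
titles = pd.Series([
    'Electricity bill calculation',
    'Home',
    'How to switch your electricity supplier step by step',
])

lengths = titles.str.len()
print(lengths.describe())  # count, mean, std, min, quartiles and max in one call

# idxmin/idxmax return the index label of the extreme value
shortest = titles[lengths.idxmin()]
longest = titles[lengths.idxmax()]
print(f'Shortest ({lengths.min()} chars): {shortest}')
print(f'Longest ({lengths.max()} chars): {longest}')
```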

How to Learn How Many Title Tags Are Longer than 60 Characters via Python?

To learn how many title tags are longer than 60 characters, we combine the “str.len()” method with the “>” comparison operator. You can see an example below.

(enczp['title'].str.len() > 60).value_counts()

False 92

True 6

Name: title, dtype: int64

Only 6 of our meta titles are longer than 60 characters.

How to Find Meta Titles That Consist of a Certain Number of Words

We can also check which meta titles consist of a specific number of words. 45% of Google searches consist of queries with four words, so using more descriptive and relevant words in a meta title can help. Can Screaming Frog show meta titles according to their word count? No, but Python can!

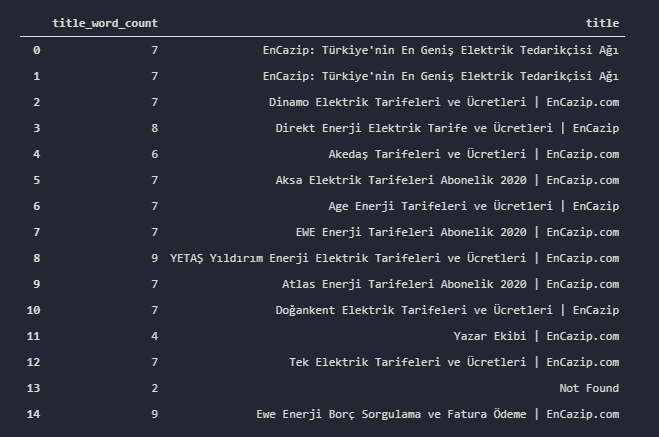

enczp['title_word_count'] = enczp['title'].str.count(' ') + 1

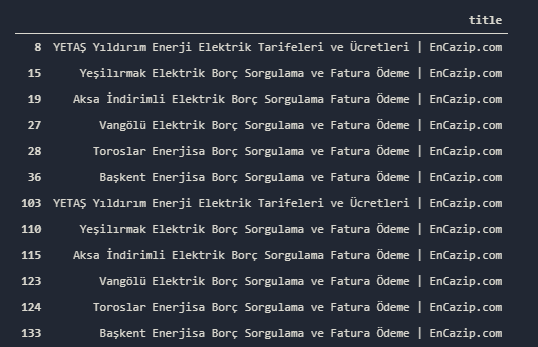

enczp.filter(['title_word_count', 'title']).head(15)

- We counted a specific character, the space, in the “title” column (a pandas Series).

- We added 1 to every result, because a title with n spaces between its words contains n + 1 words, and assigned the results to a new column.

- We filtered these two columns and displayed the first 15 rows.

You may see the result below:

We see that there is also an error page in our result. Because we counted the “ ” characters, we actually counted the spaces between words, so extra spaces or separators such as “|” can inflate the count; you may need to strip or clean the text before this kind of calculation. You can also use this method for descriptions, anchor texts or URLs, by counting the “-” signs between words instead of spaces.
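Counting spaces is fragile: repeated spaces, separators such as “|”, and missing titles all distort the result. A slightly more robust sketch, on hypothetical data, splits on whitespace after removing the separator, and counts the hyphen-separated words in the last URL segment (the slug). Treating “|” as the only separator is an assumption; add others your titles use.

```python
import pandas as pd

# Hypothetical titles and URLs (the last row simulates a page without a title)
titles = pd.Series(['Pay Your Electricity Bill | Encazip', '  Home  ', None])
urls = pd.Series(['https://example.com/elektrik-fatura-odeme', 'https://example.com/', None])

# Remove the "|" separator, then split on any run of whitespace;
# str.split() without arguments also ignores leading and trailing spaces
title_word_count = titles.str.replace('|', ' ', regex=False).str.split().str.len()

# Take the URL path, keep its last segment (the slug) and count its hyphen-separated words
paths = urls.str.replace(r'^https?://[^/]+', '', regex=True).str.strip('/')
slugs = paths.str.split('/').str[-1]
slug_word_count = slugs.str.findall(r'[^-]+').str.len()

print(pd.DataFrame({'title': titles, 'title_words': title_word_count,
                    'url': urls, 'slug_words': slug_word_count}))
```

Missing titles stay as NaN instead of being counted as one word, and the homepage gets a slug word count of 0.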

You can also use AI and data science to see whether certain types of words in meta titles earn more clicks or impressions, or attract more queries. These are just a few options from Python's endless world for SEO.

How to Pull the Meta Titles that are longer than 60 Characters via Python?

We can pull the rows that meet a certain condition, as we did in the “Missing Description” section.

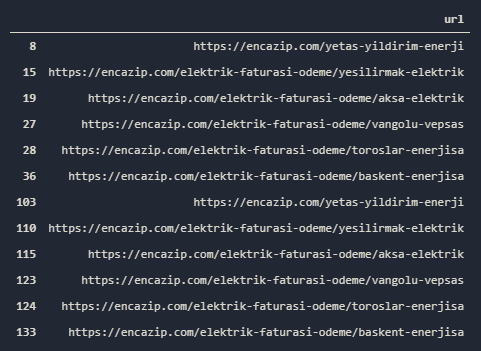

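pd.set_option('display.max_colwidth', 155)

enczp[enczp['title'].str.len() > 60].url.to_frame()

We first widened the column display so that long URLs are not cut off, then pulled the URLs whose titles are longer than 60 characters. You may see the result below.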

How to Calculate Pixel Length of Meta Titles or Descriptions via Python?

We can also calculate the pixel length. If a Holistic SEO knows Python, Screaming Frog simply can't beat them. The pixel length of a meta title and a meta description shouldn't be longer than 580px and 920px, respectively. To calculate pixel length, we will use “PIL”, the “Python Imaging Library” (today maintained as Pillow). We have used Python's PIL before in our “image optimization via Python” guideline. With it, we can also calculate pixel coordinates, pixel length or image size, write text on images, or do almost anything you can imagine. You may see the methodology for pixel calculation via Python below.

from PIL import ImageFont

font = ImageFont.truetype('Roboto-Regular.ttf', 20)

def text_size(text):  # ImageFont.getsize() was removed in Pillow 10; getbbox() replaces it
    left, top, right, bottom = font.getbbox(text)
    return (right - left, bottom - top)

enczp['title_pxlength'] = [text_size(x) for x in enczp['title']]

enczp.filter(['title', 'title_pxlength'])

- In the first line, we imported the necessary module.

- We created a variable named “font”, loaded the “Roboto-Regular.ttf” font file and set the font size to 20 pixels. The font file must be available in your working directory.

- We created a new column named “title_pxlength”. For each meta title, we calculated the pixel width and pixel height from the text's bounding box and stored them as a (width, height) tuple via a list comprehension.

- We filtered the “title” and “title_pxlength” columns to see our titles' pixel lengths.

You may see the result below.

We now have the pixel length and pixel height of our meta titles. We can use the same method for anchor texts, descriptions, URLs and so on. You may ask why we used “Roboto Regular” at 20 pixels for the calculation: because Google uses Roboto Regular at 20px for its meta titles.

How to find Meta Titles which are longer than 580px via Python?

Some SEOs may need help filtering rows whose cells contain tuples, so we will show that here too. Pandas offers “itertuples()” for iterating over rows as tuples, but we will use an easier method.

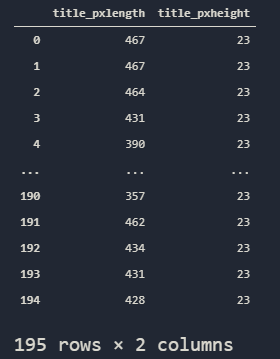

enczp[['title_pxlength', 'title_pxheight']] = pd.DataFrame(enczp['title_pxlength'].tolist(), index=enczp.index)

enczp[['title_pxlength', 'title_pxheight']]

We created two columns, “title_pxlength” and “title_pxheight”. We turned the tuples in the “title_pxlength” column into a list, built a data frame from that list using our data frame's index, and assigned each tuple element to one of the new columns. Note that this overwrites the original tuple column with the widths. You may see the result below:

Now we can find the titles that are longer than 580px.

enczp[enczp['title_pxlength'] > 580]['title'].to_frame()

We filtered the titles that are longer than 580 pixels and put them into a frame. You may see the result below.
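As an alternative to splitting the tuples into separate columns, pandas' “.str” accessor can index into tuples directly. A minimal sketch with made-up (width, height) values:

```python
import pandas as pd

# Hypothetical (width, height) tuples, like those produced by the pixel measurement above
df = pd.DataFrame({
    'title': ['Short title', 'A much longer title that would be truncated in the results'],
    'title_pxlength': [(98, 19), (604, 19)],
})

# .str[0] returns the first element of each tuple, i.e. the width
too_long = df[df['title_pxlength'].str[0] > 580]['title'].to_frame()
print(too_long)
```

This keeps the tuple column intact; splitting it into two columns is still handier if you want to sort or plot by width.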

If you need to repeat these steps often, you can also wrap them in a custom function as below:

def pxlength(df):
    from PIL import ImageFont
    font = ImageFont.truetype('Roboto-Regular.ttf', 20)
    # getbbox() returns (left, top, right, bottom) for each title
    boxes = [font.getbbox(x) for x in df['title']]
    df['title_pxlength'] = [right - left for left, top, right, bottom in boxes]
    df['title_pxheight'] = [bottom - top for left, top, right, bottom in boxes]
    return df[df['title_pxlength'] > 580]['title'].to_frame()

You can use this function with any crawl output produced by “advertools.crawl()”, as long as it is loaded into a data frame with a “title” column. Next, we will apply some text analysis methods to our meta tags.

How to Find Most Frequently Used Words in Meta Tags and Other On-Page Elements via Python

You can use the same method for every on-page element, such as anchor texts, heading tags or meta tags. If you want to learn more about text analysis via Python, you can read our related articles before going further.

Now we can run a “word frequency” test on meta titles as an example.

title_words = str(enczp['title'].tolist()).replace("|","")

#enczp['title'].str.replace('|', '') this line of code has been suggested by the Expert Elias Dabbas. You may see the difference.

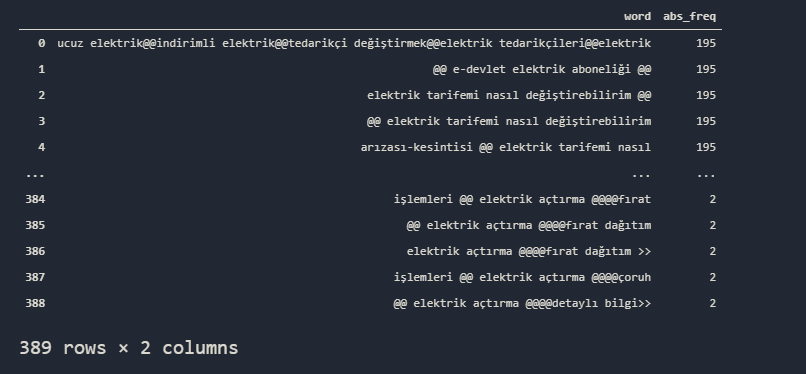

adv.word_frequency(title_words, rm_words=adv.stopwords['turkish']).head(20)- We have assigned all of the “title” row values into a variable by turning them into a list and stripping them from some unnecessary words and signs.

- We have used the “adv.word_frequency()” method with “Turkish stop words” so that some words such as “and, by, via, etc.” shall not be calculated.

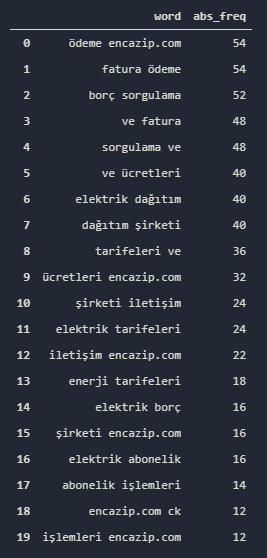

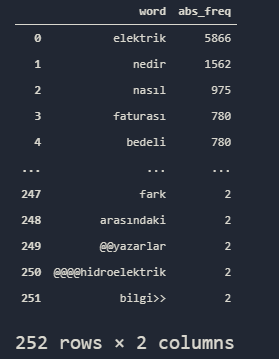

You may see the result below:

We have some dates in our result, along with some important insights. We can easily see our most important queries and how often they are used. If we increase the phrase length, we can get more insight.

adv.word_frequency(enczp['title'].str.replace('|', '', regex=False), rm_words=adv.stopwords['turkish'], phrase_len=2).head(20)

With the “phrase_len=2” parameter, we set the phrase length to 2. Some stop words may appear now, because they keep the context of the two-word phrases. The result is below.

We see that our titles mainly target “electric bill calculation” and “electric bill querying”. We also have some “electric distribution company” pages, since 22 different title tags contain the word “communication”. If you want, you can use the same method for URLs, descriptions or other components.
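To make “phrase_len” less of a black box, here is a minimal pure-Python sketch of counting one- and two-word phrases across titles. It is not Advertools' implementation (which has its own tokenization and weighting options); it only illustrates what counting phrases of length 2 means, and it mimics the behaviour described above by removing stop words only when counting single words. The function name “phrase_counts” is hypothetical.

```python
from collections import Counter


def phrase_counts(texts, phrase_len=1, stop_words=frozenset()):
    """Count every run of `phrase_len` consecutive words across a list of texts."""
    counts = Counter()
    for text in texts:
        words = text.lower().replace('|', ' ').split()
        if phrase_len == 1:
            # Stop words are dropped only for single words, so phrases keep their context
            words = [w for w in words if w not in stop_words]
        for i in range(len(words) - phrase_len + 1):
            counts[' '.join(words[i:i + phrase_len])] += 1
    return counts


titles = [  # hypothetical titles
    'Electricity bill calculation | Encazip',
    'Electricity bill payment | Encazip',
    'Switch your electricity supplier',
]
print(phrase_counts(titles, stop_words={'your'}).most_common(3))
print(phrase_counts(titles, phrase_len=2).most_common(2))
```

One simplification: phrases can span the removed “|” separator (for example “calculation encazip”), which a more careful tokenizer might avoid.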

How to Create a WordCloud from a Web Site’s Title Tags?

To see the most used words in title tags, we can also use word clouds. Does Screaming Frog have this? I don't know, but thanks to the Python (Advertools) crawler, we can have it if we want! To create a word cloud from our “title_words”, we will use a library called “wordcloud”, and to visualize it, we will use “Matplotlib”.

from wordcloud import WordCloud, STOPWORDS

import matplotlib.pyplot as plt

stopwords = set(STOPWORDS)

wordcloud = WordCloud(width = 800, height = 800,

background_color ='white',

stopwords = stopwords,

min_font_size = 10).generate(title_words)

plt.figure(figsize = (8, 8), facecolor = None)

plt.imshow(wordcloud)

plt.axis("off")

plt.tight_layout(pad = 0)

plt.show()

- We imported “WordCloud” and “STOPWORDS” from the “wordcloud” library: “WordCloud” creates the word cloud and “STOPWORDS” is its built-in (English) stop word list.

- We imported “pyplot” from Matplotlib as “plt”.

- We assigned the stop words to a variable as a set.

- We set the word cloud's size, minimum font size and background color, generated it from “title_words”, and assigned the result to a variable.

- We created the figure with “pyplot”; “facecolor=None” keeps Matplotlib's default figure background color.

- We turned the axes off.

- We removed the padding around the image with “tight_layout(pad=0)”.

- Finally, we displayed the word cloud with “plt.show()”.

You can see the word cloud built from the website's title tags below.

Since the titles are in Turkish and “STOPWORDS” only covers English, the result may look a little messy; for a Turkish site, you could pass a Turkish stop word list instead. On an English website, it works as is. Also, comparing competitors' title tags in word clouds can help with a quick analysis of their query targeting strategy.

How to Categorize URLs of a Web Site via Python?

Screaming Frog doesn't categorize URLs or give insights based on them, but you can analyze a web entity quite deeply using only its URLs. If you wonder how to perform a content strategy and publishing trend analysis through sitemaps and URLs via Python, you may read our article. In this guideline, we will show two different methods for URL categorization.

Also, you may read our “URL Parameter and Query Categorization via Python to Understand a Web Entity” article to see how to categorize URLs with Python's “urllib” module. Now, let's categorize our URLs with a simple method.

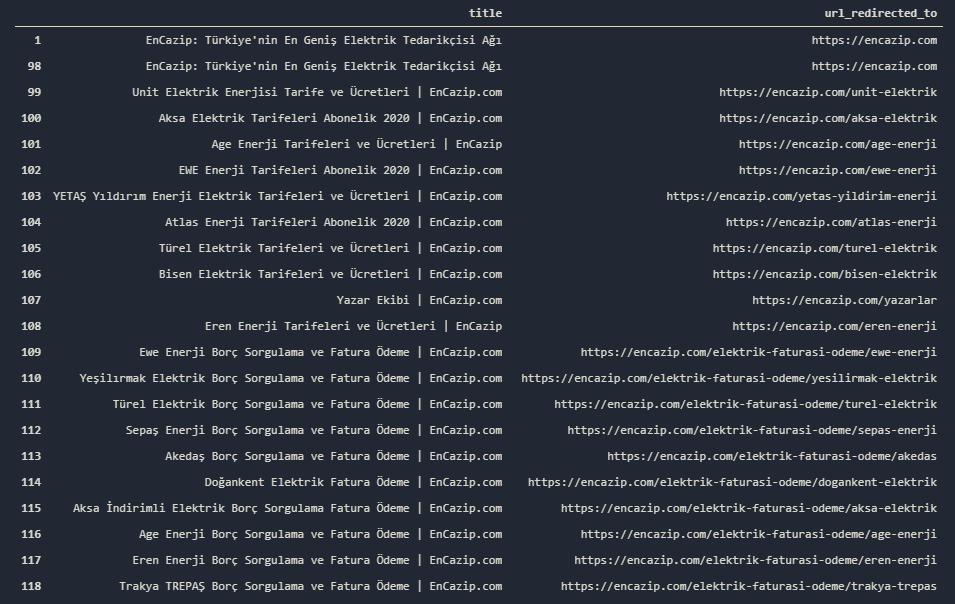

In our crawl output, more than one column contains URL information, so we should know the difference between them.

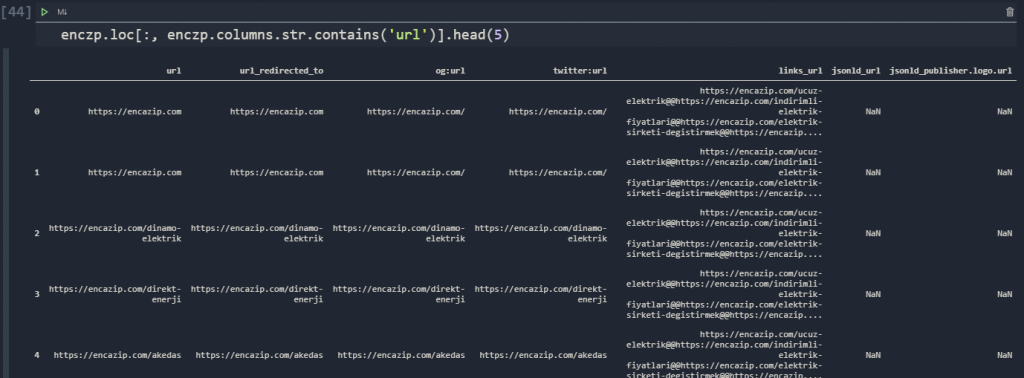

enczp.loc[:, enczp.columns.str.contains('url')]

We selected all of the columns that have the “url” string in their names. You may see the result below:

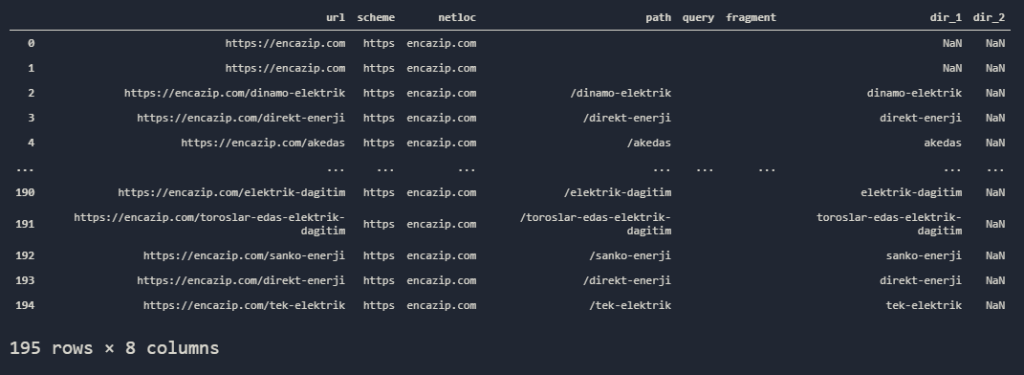

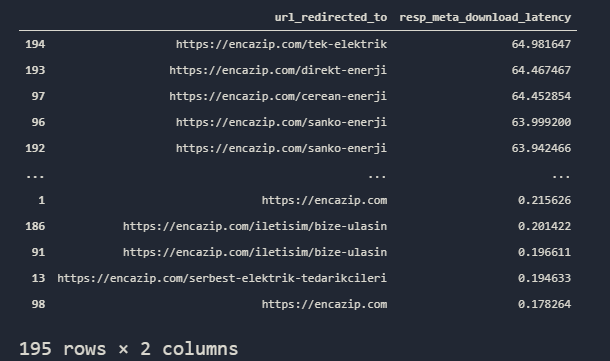

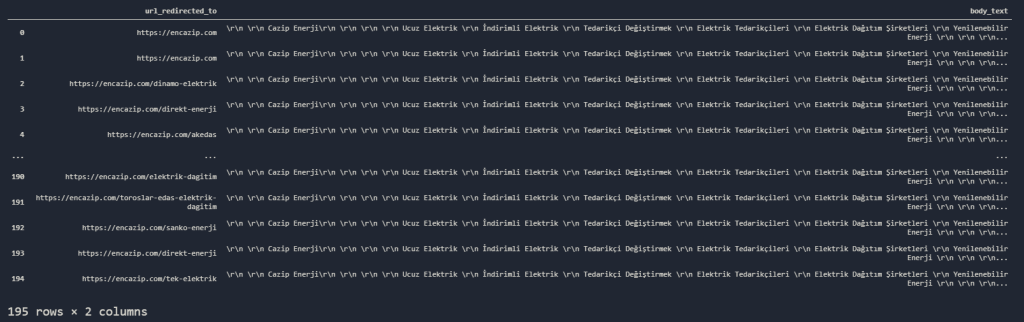

We can see that we have “url” and “url_redirected_to” columns. To categorize URLs, we need to choose the right URL column. In Advertools, the “url” column contains the URLs we requested, while the “url_redirected_to” column contains the URLs we were redirected to. In this case, we need to use the “url_redirected_to” column, because it contains the final destination URLs that returned a “200” status code.

Note: The “links_url” column contains the internal and external links found on each page; we will also use it in later sections.

Now, we may continue our categorization as below.

enczp['mcat']=enczp['url_redirected_to'].str.split('/').str[3]

enczp['scat']=enczp['url_redirected_to'].str.split('/').str[4]

enczp['scat2']=enczp['url_redirected_to'].str.split('/').str[5]

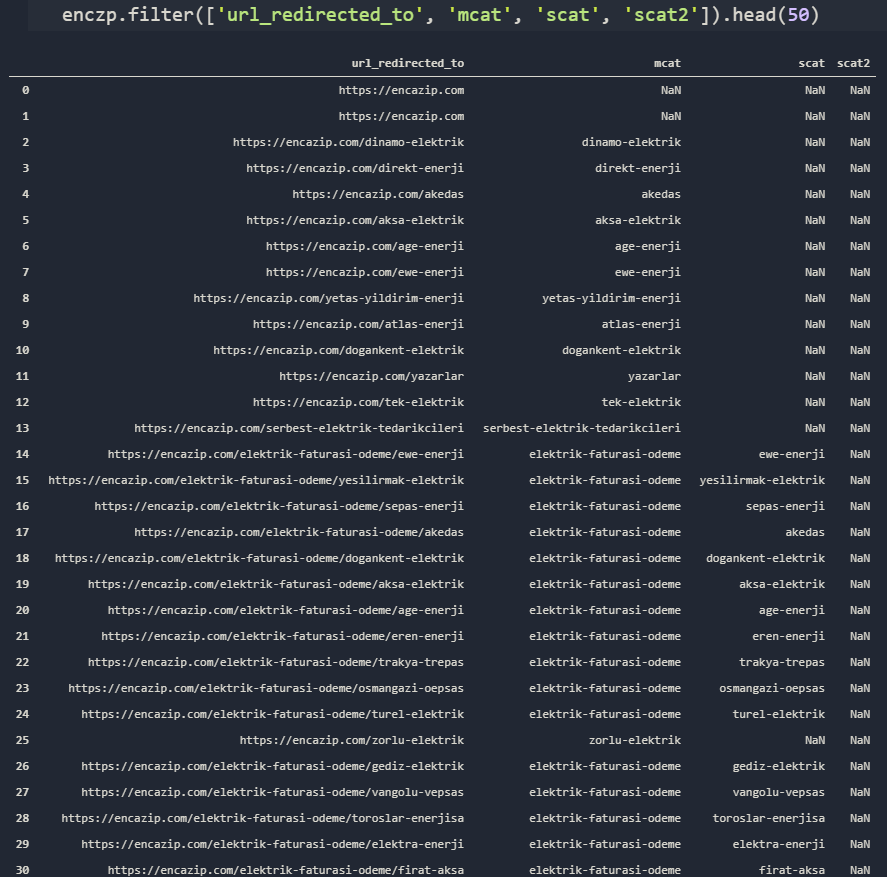

enczp.filter(['url_redirected_to', 'mcat', 'scat']).head(50)

- We created a new column, “mcat”, for the main URL category, by splitting each URL on “/” with “str.split()” and taking the element at index 3 (the first directory after the domain).

- We created another column, “scat”, for the sub-category (index 4).

- We also created “scat2” for the next URL segment (index 5).

- We filtered the original URL column and the new columns to see the results.
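To see why indices 3, 4 and 5 pick out the directory levels, it helps to look at what “split('/')” returns for one URL. A small sketch with a made-up URL:

```python
import pandas as pd

url = 'https://example.com/electricity-bills/payment'  # hypothetical URL
parts = url.split('/')
print(parts)
# Index 0 is the scheme, 1 is the empty string between the two slashes, 2 is the domain,
# 3 is the first directory (mcat) and 4 is the second one (scat)

# For shallow URLs, index 5 (scat2) does not exist and pandas returns NaN instead of failing
urls = pd.Series([url, 'https://example.com/about'])
scat2 = urls.str.split('/').str[5]
print(scat2)
```

This also explains why a column like “scat2” can end up full of NaN values on a site with shallow URLs.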

You may see the result below:

Now, we can categorize our URLs with the second method, which comes from Advertools: the “adv.url_to_df()” function. Since Advertools uses the “urllib.parse” module for this, the columns and logic are quite similar. Our biggest win here is getting a quick data frame of split and decoded URL components for free.

adv.url_to_df(enczp['url_redirected_to'], decode=True)

With a single line of code, we can split every URL of the crawled web entity into its components. You may see the result below.

You may see that every URL has been decoded, and that the queries, parameters, fragments and directories (sub-categories) are in separate columns. We can also learn how many URLs each category has.
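Since Advertools relies on Python's “urllib.parse” here, the sketch below shows the pieces that module exposes for a single URL; they roughly correspond to the scheme, netloc, path, query, fragment and “dir_” columns, though that mapping to Advertools' exact columns is our assumption. The URL is made up.

```python
from urllib.parse import urlsplit, unquote

url = 'https://example.com/elektrik-faturas%C4%B1/sorgula?il=istanbul#sss'  # hypothetical URL
parts = urlsplit(url)
print(parts.scheme, parts.netloc, parts.path, parts.query, parts.fragment)

# Decode percent-encoded characters and split the path into directory levels
directories = [unquote(segment) for segment in parts.path.strip('/').split('/')]
print(directories)
```

Decoding matters for Turkish URLs: “%C4%B1” is the UTF-8 encoding of “ı”, so the decoded directory is human-readable.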

How to See How Many URLs Each Category Has via Python?

If a directory or category contains 250 pieces of content, a search engine can easily see that this category may be more important than others; or, on the contrary, it may just be an ordinary, unpopular press release category. We can get this kind of information with a single line of code. As far as I remember, Screaming Frog doesn't offer this either.

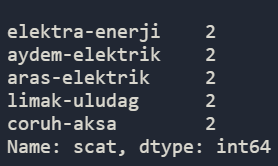

We have our main categories and sub-categories. We could also use the “word_frequency()” function here, after turning URLs into a human-readable version with “replace('-', ' ')”, to see the most used words in our URLs. Instead, we will use the “value_counts()” and “nlargest()” methods to see the biggest URL categories and paths.

enczp['mcat'].value_counts().nlargest(5)

We listed the 5 most frequent values in our main URL category column.

As in our meta title analysis with “word_frequency()”, “electric bill paying” is the top value.

enczp['scat'].value_counts().nlargest(5)

We listed the 5 most frequent values in our sub-category column.

We also checked the “scat2” values, but that column seems to be completely empty (full of “NaN” values).

We can also use “Advertools” for the same purpose as below.

url_df = adv.url_to_df(enczp['url_redirected_to'], decode=True)

url_df.groupby('dir_1').count().sort_values(by='url', ascending=False)

- We assigned the result of “adv.url_to_df()” to a variable.

- We grouped the rows by the first directory (“dir_1”), counted them, and sorted the groups by their “url” count in descending order.

You may see the result below:

We get the same result as with the previous method. We have covered many “how to” topics so far, but we haven't touched on duplicates yet.

How to find duplicate Titles or Descriptions from a Web Site via Python

To find duplicated values, we can use the pandas “duplicated()” method. Advertools also uses this method, together with “drop_duplicates()”, to find and drop duplicate values in the “url” column and keep only unique URLs in the data frame; since the crawler collects links found on the web pages, this is a necessary step.

To find the duplicated titles on a website via Python, you can use the single line of code below.

enczp[enczp.duplicated('title')][['title','url_redirected_to']].head(50)

We selected the rows whose “title” value already appeared earlier in the data frame (exact duplicates only, not near-duplicates), kept the title and URL columns, and displayed the first 50 rows.

You may see the duplicated Titles here. As we said before, you may use this methodology for also other web site components.
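One detail of “duplicated()” is worth knowing: by default (“keep='first'”) it flags only the second and later occurrences of a value, so the first page of each duplicate group is not shown. A minimal sketch on made-up data showing “keep=False”, which flags every page in a duplicate group:

```python
import pandas as pd

# Hypothetical pages and titles
df = pd.DataFrame({
    'url': ['/a', '/b', '/c', '/d'],
    'title': ['Contact', 'Contact', 'Pricing', 'Contact'],
})

later_copies = df[df.duplicated('title')]             # default keep='first': /b and /d
all_dupes = df[df.duplicated('title', keep=False)]    # every page in a duplicate group: /a, /b, /d
print(later_copies)
print(all_dupes)
print(all_dupes['title'].value_counts())              # size of each duplicate group
```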

How to Find Non-Self-Referencing Canonical Tags via Python?

As always, we can find non-self-referencing canonical tags with a single line of code. Canonical tags give search engines a clear signal about which page is the original version of duplicate, repetitive or similar content. This can help speed up indexing while reducing the risk of cannibalization. To learn more, you may read our “What is Canonical Tag” article. To find non-self-referencing canonical tags, you can use the method below.

enczp[enczp['canonical'] != enczp['url_redirected_to']].filter(['url_redirected_to', 'canonical'])

- We selected all rows where the “canonical” and “url_redirected_to” values are not equal to each other.

- We kept only the related columns, “url_redirected_to” and “canonical”.

You may see the result below:

We see that the canonical tag of the homepage is not correct. We also see that some web pages point their canonical to 404 URLs. The same method can be applied to custom extracted values, for example comparing the author in the structured data with the author name shown on the page. You can also extract articles or tables from web pages with CSS selectors.
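A plain “!=” comparison also flags rows where the canonical is missing (NaN never equals anything) and rows that differ only by a trailing slash. A minimal sketch on made-up data that separates these cases; treating trailing-slash variants as self-referencing is our own assumption here (strictly speaking, they are different URLs), and the helper “strip_slash” is hypothetical.

```python
import pandas as pd

# Hypothetical final URLs and canonical values
df = pd.DataFrame({
    'url_redirected_to': ['https://example.com/', 'https://example.com/a',
                          'https://example.com/b', 'https://example.com/c'],
    'canonical': ['https://example.com', 'https://example.com/a/', None, 'https://example.com/a'],
})


def strip_slash(urls):
    """Drop a trailing slash so '.../a' and '.../a/' compare as equal (NaN stays NaN)."""
    return urls.str.rstrip('/')


missing = df['canonical'].isna()
points_elsewhere = ~missing & (strip_slash(df['canonical']) != strip_slash(df['url_redirected_to']))

print(df[missing])           # pages without a canonical tag
print(df[points_elsewhere])  # pages whose canonical points to a different URL
```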

How to Scrape Custom Values and Data from Websites via Python

Advertools offers the “css_selectors” and “xpath_selectors” options for scraping custom values. You can scrape author names, sidebar links or any other on-page elements you want, such as breadcrumbs or tables of contents. This custom scraping option makes it easy for a Holistic SEO to understand many points at scale. You may see a custom value scraping example below.

selectors = {

'related_links':'.clear'

}

crawl('https://encazip.com/elektrik-sirketi-degistirmek', 'custom_value3.jl',follow_links=False, css_selectors=selectors)

# custom_settings={'DEPTH_LIMIT': 1} was removed from the call, because it is not needed with follow_links=False (only the given URL is crawled).

# This correction was also made by Elias Dabbas.

The values matched by each selector are stored in a column named after its dictionary key, here “related_links”.

How to See the Average and General Size of a Website's Web Pages via Python?

Unlike Screaming Frog, we can also see the sizes of all web pages. This shows us the average size of the web pages and lets us compare different web entities by page size. You can also find the heaviest web pages and filter them for optimization. Bulk and automated page speed tests are also possible with Python: you can run page speed tests on these pages and correlate the results with their sizes to see the connection between the two metrics.

Below is an example of visualizing the sizes of all web pages from a website.

import plotly.graph_objects as go

data = [go.Bar(x=enczp['url_redirected_to'], y=enczp['size'])]

layout = go.Layout(title='Average and General Size', height=600, width=1500)

go.Figure(data=data, layout=layout)

You may see the result below:

Most of our web pages are under 500 KB, but some are larger. Some pages are also very small; they may be 404 pages or other unimportant pages.

How to Pull and Filter Web Pages According to their Sizes via Python

To see which page is the biggest and which is the smallest, or to pull web pages according to their size, we can also use Python. First, let's get the average page size with the pandas “mean()” method.

enczp['size'].mean()

OUTPUT>>>

239181.54358974358

enczp['size'].mean().astype(int)

239181

Our average page size is 239,181 bytes (about 239 KB). Let's see how many URLs are above the average.

(enczp['size'] > 239181).value_counts()

OUTPUT>>>

True 116

False 79

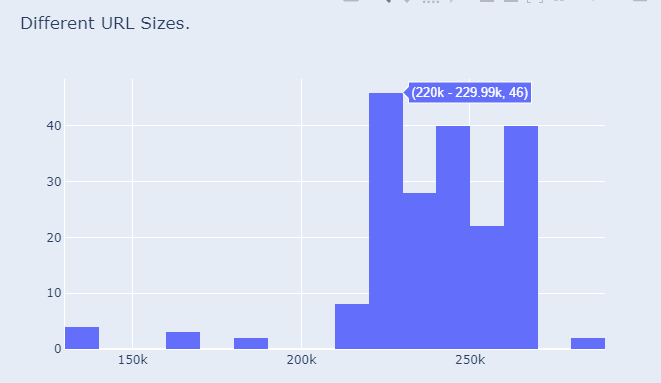

Name: size, dtype: int64Elias Dabbas also suggested here to use a “Histogram Graph”. You can see visually how many URLs are there from different size ranges?

fig = go.Figure()

fig.add_histogram(x=enczp['size'])

fig.layout.title='Different URL Sizes.'

fig.layout.paper_bgcolor='#E5ECF6'

fig.show()

Most of our URLs are above the average, which means a few web pages with a very small size pull the average down. Let's sort our URLs by size.

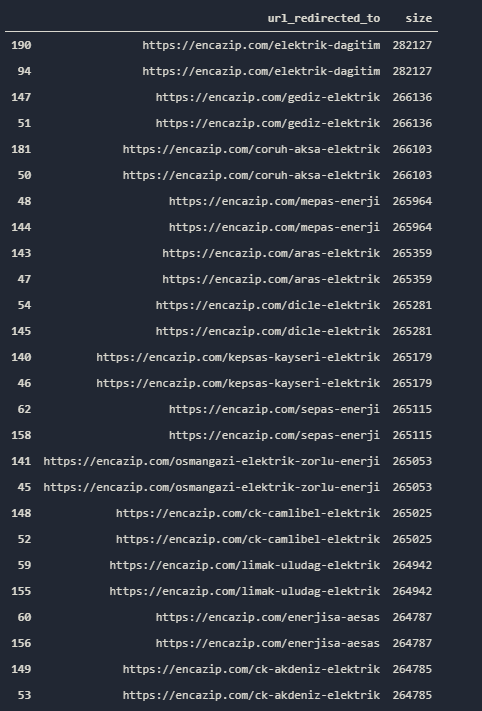

enczp[['url_redirected_to','size']].sort_values(by='size', ascending=False).head(45)

We see that our heaviest pages follow the same pattern: they come from the same path or share the same marketing intent. Most of them are brand pages about electricity distribution companies, created for marketing and for giving more information about them. To filter URLs within a certain size range, you can use the code below.

((enczp['size'] > 190000) & (enczp['size'] < 232000)).value_counts()

OUTPUT>>>

False 141

True 54

Name: size, dtype: int64

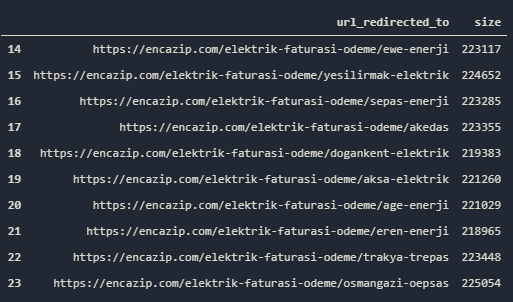

enczp[(enczp['size'] > 190000) & (enczp['size'] < 232000)][['url_redirected_to', 'size']].head(10)

First, we checked how many URLs have a size between 190,000 and 232,000 bytes; 54 of them fall into this range. With the second command, we displayed the first 10 of these URLs. You may see the result below.
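Instead of checking one size range at a time, you can bucket every page into size ranges at once with “pd.cut()”. A sketch with made-up byte sizes; the range boundaries are an arbitrary choice for illustration, and each bin includes its upper edge.

```python
import pandas as pd

sizes = pd.Series([12_000, 95_000, 210_000, 230_000, 480_000, 620_000])  # hypothetical sizes in bytes

bins = [0, 100_000, 250_000, 500_000, float('inf')]
labels = ['up to 100 KB', '100-250 KB', '250-500 KB', 'over 500 KB']
size_range = pd.cut(sizes, bins=bins, labels=labels)  # right edge of each bin is inclusive

counts = size_range.value_counts().sort_index()
print(counts)
```

Adding “size_range” as a column next to the URL category columns also makes it easy to compare size ranges per category.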

You can also categorize the web pages by URL and compare their sizes according to their category intent, or perform the same process for different competitors. Now, let's continue with the response headers.

How to Check a Web Site’s Site-wide Used Response Headers via Python?

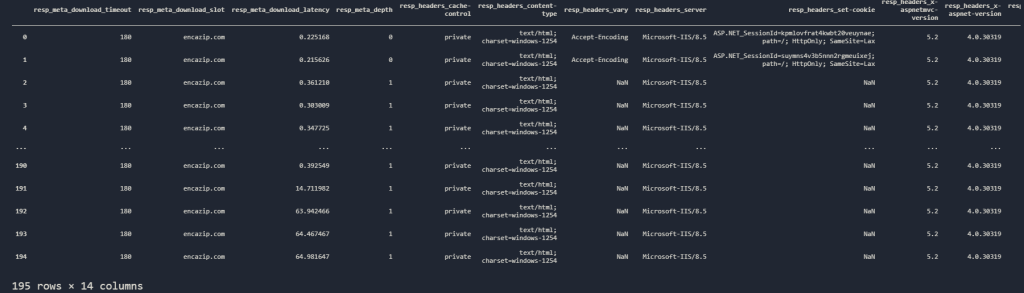

Imagine creating a Python function that checks whether a website uses the necessary response headers for security or page speed. We won't do that in this article, but thanks to Advertools, we can check which response headers are or are not used on a website. To see the response header columns, we need to filter the columns whose names include “resp”.

If you wonder how to filter a pandas data frame's columns by their names, see below.

enczp.loc[:, enczp.columns.str.contains('resp')]

We used the “loc” indexer to select all rows and only the columns whose names contain “resp”.

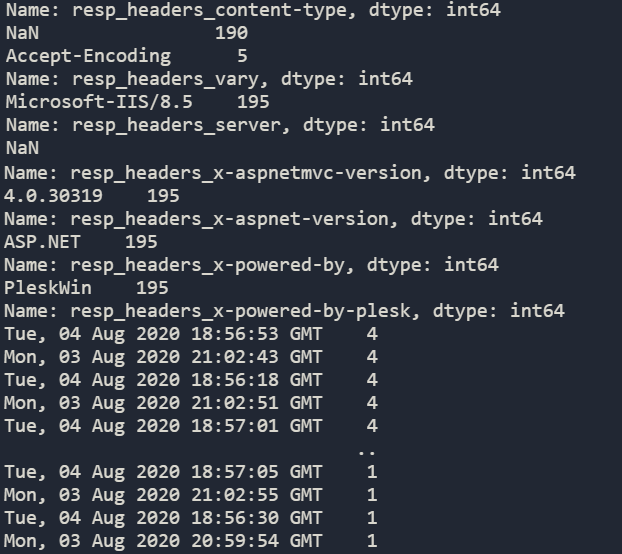

We have 14 columns with response information. We also see that not every response header is used on every web page. Let's see what we can do with this information.

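How to Check Download Latency (Time to First Byte) for an Entire Website via Python?

Thanks to the “resp_meta_download_latency” column, we can see a TTFB-like metric for the HTML documents themselves. Strictly speaking, Scrapy (which Advertools is built on) measures download latency as the time spent fetching the response since the request was sent, so it approximates TTFB rather than measuring it exactly. TTFB is one of the most important page speed metrics related to the back end and server structure, so comparing and analyzing it for HTML documents can be useful.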

Average TTFB for HTML Documents is below:

enczp['resp_meta_download_latency'].mean()

OUTPUT>>>

3.085272743763068

enczp['resp_meta_download_latency'].describe() #also has been suggested for the same purpose by Elias Dabbas.

OUTPUT>>>

count 195.000000

mean 3.085273

std 10.603454

min 0.178264

25% 0.295340

50% 0.323820

75% 0.361688

max 64.981647

Name: resp_meta_download_latency, dtype: float64

Our average latency is about 3 seconds. This may seem very high, but the median is only about 0.32 seconds and 75% of the pages are under 0.37 seconds, so the mean is pulled up by a few very slow responses (the maximum is about 65 seconds). Also remember that we didn't use “custom_settings={'DOWNLOAD_DELAY': 2}” or any similar setting. This means the target domain's server had to handle many connections at the same time, so if you want to analyze this metric, set “DOWNLOAD_DELAY” to 2 seconds or more. Still, you can compare competitors without this option, since they are crawled under the same conditions.
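Because a few very slow responses can dominate the mean, it can help to flag outliers explicitly. The sketch below uses the common interquartile-range (Tukey) rule on made-up latency values; the 1.5 multiplier is a statistical convention, not an SEO threshold.

```python
import pandas as pd

# Hypothetical download latencies in seconds, indexed by URL path
latency = pd.Series(
    [0.30, 0.32, 0.29, 0.36, 0.33, 0.31, 0.34, 0.35, 12.4, 64.9],
    index=[f'/page-{i}' for i in range(10)],
)

q1, q3 = latency.quantile(0.25), latency.quantile(0.75)
upper_fence = q3 + 1.5 * (q3 - q1)  # Tukey's rule for unusually high values

print(f'mean = {latency.mean():.2f}s, median = {latency.median():.2f}s')
outliers = latency[latency > upper_fence].sort_values(ascending=False)
print(outliers)
```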

Do you wonder what our maximum TTFB is?

enczp['resp_meta_download_latency'].max()

OUTPUT>>>

64.98164701461792

And which URLs have the highest TTFB?

enczp[['url_redirected_to','resp_meta_download_latency']].sort_values(by='resp_meta_download_latency', ascending=False)

You may see the result below.

You may see that TTFB is higher on similar web pages. Is this a real problem, or is it because they were at the end of the crawl queue? To find out, you should run an experiment.

How to Print the Columns That Meet a Certain Condition via Python?

To see which other response headers we should test, we can print all the columns whose names contain the “resp” string with a for loop, as below.

for x in enczp.columns:
    if str.__contains__(x, 'resp'):
        print(x)

OUTPUT>>>

resp_meta_download_timeout

resp_meta_download_slot

resp_meta_download_latency

resp_meta_depth

resp_headers_cache-control

resp_headers_content-type

resp_headers_vary

resp_headers_server

resp_headers_set-cookie

resp_headers_x-aspnetmvc-version

resp_headers_x-aspnet-version

resp_headers_x-powered-by

resp_headers_x-powered-by-plesk

resp_headers_date

The “str.__contains__(string, substring)” method returns True when the substring is found in the string, so it can be used for any substring check; it is what Python's “in” operator calls behind the scenes (“'resp' in x”). Before moving on to structured data, we can also test whether the site uses the “set-cookie” response header, which is related to security, correctly.
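The same column filter can be written more idiomatically. A small sketch on an empty data frame with hypothetical column names, showing the “in” operator and pandas' “DataFrame.filter(like=...)”:

```python
import pandas as pd

# Empty data frame with hypothetical crawl column names
df = pd.DataFrame(columns=['url', 'title', 'resp_meta_download_latency', 'resp_headers_server'])

# "'resp' in col" calls str.__contains__ behind the scenes
resp_cols = [col for col in df.columns if 'resp' in col]

# DataFrame.filter(like=...) keeps the columns whose label contains the substring
resp_df = df.filter(like='resp')

print(resp_cols)
print(list(resp_df.columns))
```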

To test which response headers are or are not used, we will select specific response header columns and analyze them with the “value_counts(dropna=False)” method; “dropna=False” makes it count missing (NaN) values too.

enczp['resp_headers_set-cookie'].value_counts(dropna=False)

OUTPUT>>>

NaN 192

ASP.NET_SessionId=kpmlovfrat4kwbt20veuynae; path=/; HttpOnly; SameSite=Lax 1

ASP.NET_SessionId=suymns4v3b5nnn2rgmeuixej; path=/; HttpOnly; SameSite=Lax 1

ASP.NET_SessionId=rt1xctnrnjinvvzz4vrucpwi; path=/; HttpOnly; SameSite=Lax 1

Name: resp_headers_set-cookie, dtype: int64

It seems that 192 of our web pages don't have a “set-cookie” response header.

How to Check the Usage of All Response Headers via Python?

A Holistic SEO may want to check all of the response headers with a single piece of code. To check all response header columns in our crawled data, we can use a for loop as below.

#i = 0
#c = []
for x in enczp.columns:
    if str.__contains__(x, 'resp'):
        #c.append(x)
        a = enczp[x].value_counts(dropna=False)
        print(a)
        #print(f'{c[i]} {a.index} : {a.values}')
        #i += 1

In the for loop, there are five commented-out lines (starting with “#”). They are for changing the style of the output: with string formatting, you can print a different kind of output. We haven't used them in this example. We used the “__contains__()” method again in an “if” statement to check for columns containing the “resp” string, assigned the “value_counts()” result to a variable, and printed it. You may see the “NaN” values below:

Since we have 195 rows, you can check each result against that number: the “NaN” count in a column is the number of pages that do not send that header. Since we have covered some points in the response header columns, we can quickly check the structured data columns with the same method.
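To turn those “NaN” counts into a single overview, you can count how many pages send each header. A minimal sketch on made-up header values; it assumes the header columns share the “resp_headers” prefix, as in the column list above.

```python
import pandas as pd

# Hypothetical response header values for four pages (None = header not sent)
df = pd.DataFrame({
    'url': ['/a', '/b', '/c', '/d'],
    'resp_headers_server': ['Microsoft-IIS/10.0'] * 4,
    'resp_headers_set-cookie': [None, 'ASP.NET_SessionId=abc123; path=/; HttpOnly', None, None],
    'resp_headers_cache-control': ['private', 'private', None, 'private'],
})

header_cols = df.filter(like='resp_headers').columns
present = df[header_cols].notna()
coverage = pd.DataFrame({
    'pages_with_header': present.sum(),
    'share': present.mean().round(2),
}).sort_values('pages_with_header')
print(coverage)
```

The least-used headers come first, which makes gaps (such as a header sent on only one page) easy to spot.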

How to check a web site’s Structured Data Usage via Python?

Advertools’ “crawl” function also pulls the data related to structured data usage. As with the response headers, we can select the columns that are related to JSON-LD.

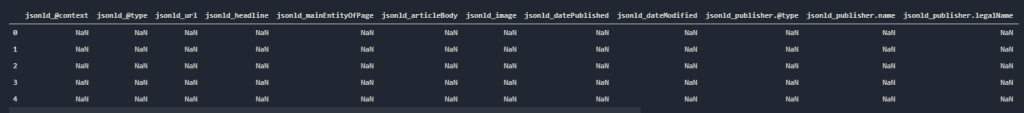

enczp.loc[:, enczp.columns.str.contains('json')].head(5)

You may see our columns below.

At first glance, the first five rows suggest that our sample web site doesn’t have any JSON-LD structured data. To be sure, we can check which types of JSON-LD Schema markup are actually used.

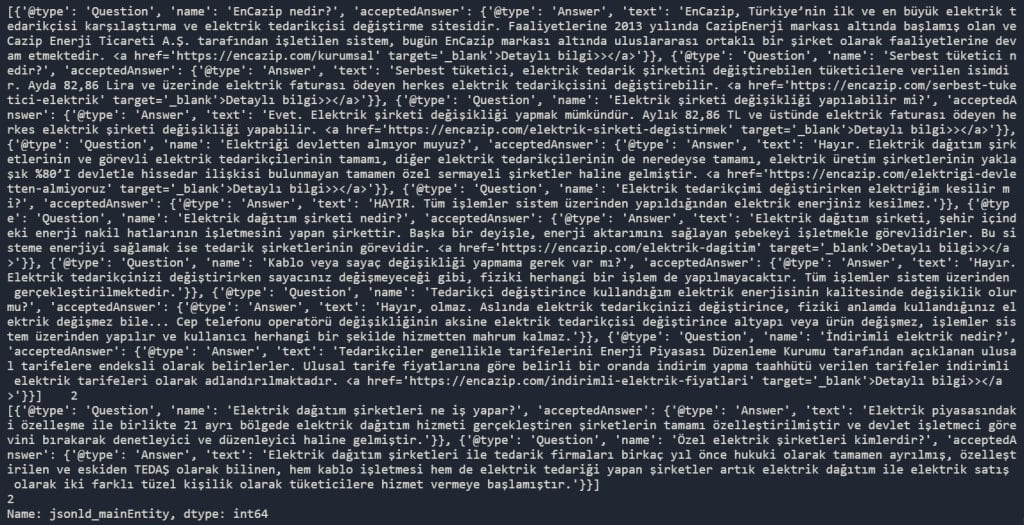

enczp['jsonld_mainEntity'].value_counts()

“mainEntity” in JSON-LD indicates the main object or thing that the web page is about. It can also define the purpose of the web page or a component of it. You may see the result below.

You may see that we have two FAQ structures with “acceptedAnswer” and “Question” items. With Python, you can also check JSON-LD code for errors. To validate or test structured data, you may use “PyLD”, a Python package focused on JSON-LD processing. In this article, we won’t do the validation process for now, but if you know the JSON-LD structure, you can find obvious errors even by eye.
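As a lighter alternative to full JSON-LD processing with PyLD, a few structural checks can already catch obvious FAQ errors. The sketch below uses only the standard library and a hypothetical FAQPage snippet; it checks that each “mainEntity” item is a “Question” with a “name” and an “acceptedAnswer” that has “text”. This is a hand-written check, not PyLD and not an official validator.

```python
import json

# Hypothetical FAQPage snippet; in practice you would take it from the page source
raw = """{
  "@context": "https://schema.org",
  "@type": "FAQPage",
  "mainEntity": [
    {"@type": "Question", "name": "Q1?", "acceptedAnswer": {"@type": "Answer", "text": "A1"}},
    {"@type": "Question", "name": "Q2?"}
  ]
}"""


def faq_problems(doc):
    """Return human-readable problems found in an FAQPage object."""
    problems = []
    if doc.get("@type") != "FAQPage":
        problems.append("@type is not FAQPage")
    entities = doc.get("mainEntity", [])
    if isinstance(entities, dict):  # a single Question may be given without a list
        entities = [entities]
    for i, item in enumerate(entities):
        if item.get("@type") != "Question":
            problems.append(f"mainEntity[{i}] is not a Question")
        if not item.get("name"):
            problems.append(f"mainEntity[{i}] has no name")
        answer = item.get("acceptedAnswer") or {}
        if isinstance(answer, list):  # acceptedAnswer may also be a list
            answer = answer[0] if answer else {}
        if not answer.get("text"):
            problems.append(f"mainEntity[{i}] has no acceptedAnswer text")
    return problems


problems = faq_problems(json.loads(raw))
print(problems)
```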

Note: If the web site had no JSON-LD structured data, these columns wouldn’t exist in the first place.
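Because the JSON-LD columns only exist when some page has structured data, a quick way to see which pages have none is to check each row across all “jsonld” columns. The frame below is a hypothetical sample that reuses the column names seen in this section.

```python
import numpy as np
import pandas as pd

# Hypothetical sample using the column names seen above
enczp = pd.DataFrame(
    {
        "url": ["https://example.com/", "https://example.com/faq", "https://example.com/news"],
        "jsonld_@type": [np.nan, "FAQPage", "NewsArticle"],
        "jsonld_mainEntity": [np.nan, "[{'@type': 'Question'}]", np.nan],
    }
)

# If the site had no JSON-LD at all, this selection would simply be empty
jsonld_cols = enczp.filter(like="jsonld")

# A page "has JSON-LD" when at least one jsonld column is filled on its row
has_jsonld = jsonld_cols.notna().any(axis=1)

print(f"{has_jsonld.sum()} of {len(enczp)} pages have JSON-LD")
pages_without = enczp.loc[~has_jsonld, "url"].tolist()
print(pages_without)
```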

If you find this output too complicated, you may try the code below.

enczp['jsonld_@type'].value_counts()

OUTPUT>>>

FAQPage 4

NewsArticle 2

Name: jsonld_@type, dtype: int64

It shows that our test subject has six structured data items: four of them are “FAQPage” and two of them are “NewsArticle”.

How to check whether the links on a web site’s pages use a “nofollow” attribute via Python?

We can also check whether a web site uses the “nofollow” attribute on the links of its web pages. In Screaming Frog, we don’t actually have this option unless we use custom “scraping” via “CSS Selectors or XPath”.

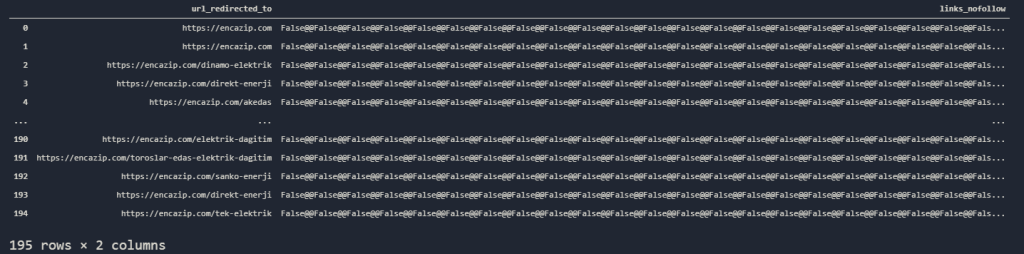

enczp.loc[:, ['url_redirected_to', 'links_nofollow']]

You may see the result below:

When an element occurs more than once on a page, Advertools’ crawl function puts the values side by side in one cell, separated by “@@” signs. So, in this example, the “False@@False…” pattern means that those URLs don’t have any nofollowed links. You can also split these cells at the “@@” divider to create separate rows or data frames.
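Splitting on “@@” makes it possible to count nofollowed links per URL instead of scanning the joined strings by eye. This sketch assumes the “links_nofollow” cells hold “True”/“False” strings joined by “@@”, as in the output above; the two-row frame is hypothetical.

```python
import pandas as pd

# Hypothetical sample with the same "@@"-joined format as the crawl output
enczp = pd.DataFrame(
    {
        "url_redirected_to": ["https://example.com/a", "https://example.com/b"],
        "links_nofollow": ["False@@False@@False", "False@@True@@True"],
    }
)

nofollow_per_url = (
    enczp.set_index("url_redirected_to")["links_nofollow"]
    .str.split("@@")      # "False@@True" -> ["False", "True"]
    .explode()            # one row per link, URL repeated as the index
    .eq("True")           # True where the link is nofollowed
    .groupby(level=0)     # back to one row per URL
    .sum()
)

print(nofollow_per_url)
```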

How to pull all the Image URLs from an entire web site via Python?

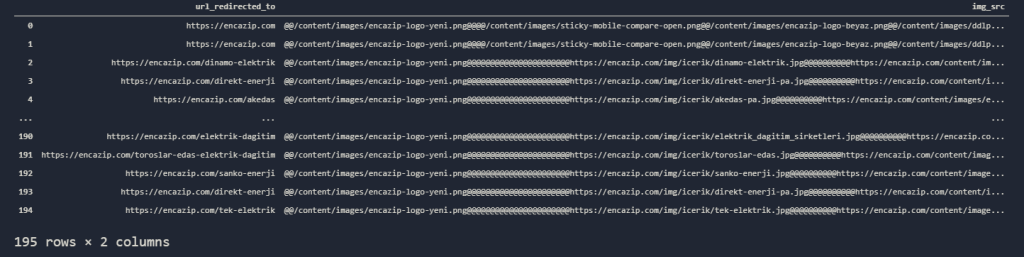

As in Screaming Frog, we also have the image URLs, so you can download all of those images and optimize them via Python. With the same methodology as in the “nofollow” section, we can pull all the image URLs.

enczp.loc[:, ['url_redirected_to', 'img_src']]

You may see the results below.

Also, if you want to pull only the unique images for each URL, you can write a short Pandas expression to create a new column.
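One way to build that column is sketched below. It assumes “img_src” cells are “@@”-joined strings (or NaN when a page has no images) and keeps the first occurrence of each image in page order; the function name “unique_images” and the sample rows are hypothetical.

```python
import pandas as pd

# Hypothetical sample; a logo repeated in header and footer appears twice
enczp = pd.DataFrame(
    {
        "url_redirected_to": ["https://example.com/a", "https://example.com/b"],
        "img_src": ["/logo.png@@/hero.jpg@@/logo.png", None],
    }
)


def unique_images(value):
    """Split an '@@'-joined img_src string and keep each image once, in page order."""
    if not isinstance(value, str):
        return []
    # dict.fromkeys drops duplicates while preserving insertion order
    return list(dict.fromkeys(value.split("@@")))


enczp["img_src_unique"] = enczp["img_src"].apply(unique_images)
enczp["img_count_unique"] = enczp["img_src_unique"].apply(len)

print(enczp[["url_redirected_to", "img_count_unique"]])
```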

How to Find Duplicate, Missing, and Multiple Heading Tags via Python?

We have already shown you the necessary methodologies, but before closing, we also want to show a few things related to heading tags. You may see the code below, in order.

In the “Duplicate Title” section, we used Pandas’ “duplicated()” method. It also shows excessively similar title tags that are close to each other. In this example, we will use “value_counts()” to find exact-match copies.

enczp['h1'].value_counts()

OUTPUT>>>

\r\n @@\r\n En Cazip Elektrik Tarifesini Bulun\r\n 3

Bingöl, Elazığ, Malatya, Tunceli GTŞ'si Fırat Aksa Elektrik 2

Enerjisa Toroslar Elektrik 2

Türel Elektrik İndirimli Tarifeleri 2

Toroslar Elektrik Dağıtım A.Ş. | Toroslar EDAŞ 2

..

Sepaş Enerji Elektrik Fatura Ödeme 2

Dicle Elektrik Perakende Satış A.Ş. | Dicle EPSAŞ 2

Kayseri Elektrik Perakende Satış A.Ş. | KEPSAŞ 2

Gediz Elektrik Perakende Satış A.Ş. 2

2

Name: h1, Length: 97, dtype: int64

As you may see, we have H1 tags that are exact-match copies.
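“value_counts()” tells us which H1 texts repeat, but not where. A sketch with “duplicated(keep=False)”, which flags every member of a duplicate group rather than only the later copies, can list the URLs that share each H1. The URLs below are hypothetical; the H1 texts are borrowed from the output above.

```python
import pandas as pd

# Hypothetical URLs paired with H1 texts taken from the output above
enczp = pd.DataFrame(
    {
        "url": ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
        "h1": [
            "Enerjisa Toroslar Elektrik",
            "Enerjisa Toroslar Elektrik",
            "Gediz Elektrik Perakende Satış A.Ş.",
        ],
    }
)

# keep=False marks all rows of a duplicate group, not just the repeats
dupes = enczp[enczp["h1"].duplicated(keep=False)]

# One row per duplicated H1 with the list of URLs that share it
urls_by_h1 = dupes.groupby("h1")["url"].apply(list)
print(urls_by_h1)
```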

How to find out whether there is a missing heading tag on a URL or not?

enczp['h1'].value_counts(dropna=False)

OUTPUT>>>

\r\n @@\r\n En Cazip Elektrik Tarifesini Bulun\r\n 3

Bingöl, Elazığ, Malatya, Tunceli GTŞ'si Fırat Aksa Elektrik 2

Enerjisa Toroslar Elektrik 2

Türel Elektrik İndirimli Tarifeleri 2

Toroslar Elektrik Dağıtım A.Ş. | Toroslar EDAŞ 2

..

Sepaş Enerji Elektrik Fatura Ödeme 2

Dicle Elektrik Perakende Satış A.Ş. | Dicle EPSAŞ 2

Kayseri Elektrik Perakende Satış A.Ş. | KEPSAŞ 2

Gediz Elektrik Perakende Satış A.Ş. 2

2

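Name: h1, Length: 97, dtype: int64

Thanks to the “dropna=False” option, a “NaN” row would be added if any page had no H1. The output still has 97 unique values, the same as without “dropna=False”, so there is no “NaN” value. We can also double-check it.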

enczp['h1'].isna().value_counts()

OUTPUT>>>

False 195

Name: h1, dtype: int64

So, none of the 195 pages is missing an “h1” tag.
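A missing value is not the only way an H1 can be useless: the value counts above also contain an entry that looks blank. A minimal sketch, assuming that whitespace-only H1 text should be treated like a missing H1, could combine both cases. The sample frame is hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical sample: one real H1, one missing, one whitespace-only
enczp = pd.DataFrame(
    {
        "url": ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
        "h1": ["Gediz Elektrik", np.nan, "   \r\n  "],
    }
)

h1_missing = enczp["h1"].isna()

# Treat NaN and whitespace-only text (spaces, \r, \n) as empty
h1_blank = enczp["h1"].fillna("").str.strip().eq("")

blank_urls = enczp.loc[h1_blank, "url"].tolist()
print(blank_urls)
```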

How to find out whether there are multiple heading tags on a URL via Python?

enczp['h1'].str.contains('@@').value_counts()

OUTPUT>>>

False 192

True 3

Name: h1, dtype: int64

Since the ‘@@’ signs separate repeated occurrences of an element on a URL, we can use them to detect multiple H1 tags. We have three pages with more than one H1 tag. You can also list those specific URLs with the code below.

enczp[enczp['h1'].str.contains('@@', na=False)]

How to check all the Anchor Texts on an entire web site via Python?

Unlike Screaming Frog, we can check all the anchor texts of a web site to see whether they are contextual or repetitive. We can also see which anchor texts are used on which pages to point to which URLs, and compare our anchor texts with our competitors’. You can see all the anchor texts of every crawled page below.

enczp['links_text'].to_frame()

Some of those links come from the header and footer areas, so there is repetition, but you can still clean this data to see only certain anchor texts. You can also run a quick word frequency test on the anchor texts.
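To separate header and footer links from in-content links, one option is to count on how many pages each whole anchor text appears. The sketch below assumes “links_text” holds “@@”-joined anchor texts per page; the 90% threshold for calling an anchor “sitewide” is an arbitrary, hypothetical choice, and the sample rows are invented.

```python
import pandas as pd

# Hypothetical sample of "@@"-joined anchor texts per page
enczp = pd.DataFrame(
    {
        "url": ["https://example.com/a", "https://example.com/b", "https://example.com/c"],
        "links_text": [
            "Home@@Tariffs@@Contact",
            "Home@@Tariffs@@Compare prices",
            "Home@@Contact",
        ],
    }
)

# One row per (page, anchor text) pair
anchors = (
    enczp[["url", "links_text"]]
    .assign(anchor=enczp["links_text"].str.split("@@"))
    .explode("anchor")
)
anchors["anchor"] = anchors["anchor"].str.strip()

# How many distinct pages use each anchor text
pages_per_anchor = anchors.groupby("anchor")["url"].nunique().sort_values(ascending=False)

# Anchors present on (almost) every page are likely navigation, not content
share = pages_per_anchor / enczp["url"].nunique()
sitewide = share[share >= 0.9].index.tolist()

print(pages_per_anchor)
print(sitewide)
```

Dropping the “sitewide” anchors before running a word frequency test leaves mostly the contextual links.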

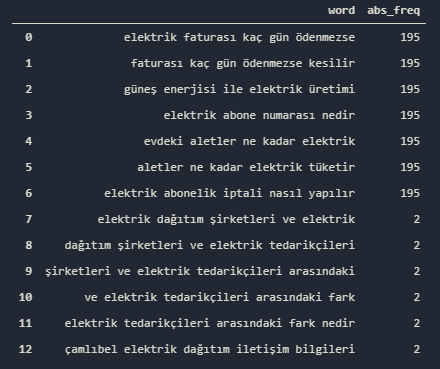

import advertools as adv

adv.word_frequency(enczp['links_text'].str.strip('@@@'), phrase_len=5)

Note: Elias Dabbas has suggested a better code and methodology for this section, which uses the “explode” method. “explode” turns each element of a list-like value into its own row, repeating the original index value. Thus, we can calculate every word’s frequency as if each anchor text were a separate text snippet. Also, the “split” method separates the anchor texts at the ‘@@’ signs, whereas “strip” only removes ‘@’ characters at the start and end of each string. I will leave both methodologies here to show different levels of expertise.

adv.word_frequency(enczp['links_text'].str.split('@@').explode(), phrase_len=5)

You may see the result below.

We see many repetitive anchor texts in this example, and this can cause an over-optimization penalty from Google’s algorithms. You can run the same analysis with “phrase_len=1” as well.

adv.word_frequency(enczp['links_text'].str.strip('@@@'), phrase_len=1, rm_words=['@@', '@@@'])

So, at a glance, Google or any other search engine can clearly categorize the web site, from its URLs to its anchor texts. The important task here is to optimize them in a natural way, together with better UX, page speed, navigation, site structure, layout, and design.

How to Pull All of The Content from a Web Site via Python?

Thanks to Python, we can also scrape all of the content from a web site. This includes boilerplate content, but it still gives enough information about the site’s content structure. With custom selectors (the “css_selectors” and “xpath_selectors” parameters of “crawl”), you can also scrape only the actual content without any data pollution. You may see an example below.

pd.set_option('display.max_colwidth', 500)

enczp.filter(['url_redirected_to', 'body_text'])

The “\r\n” sequences here are line breaks (a carriage return followed by a new line). They help us see the visual hierarchy between text snippets, and the “print” function renders them as actual line breaks. Let’s pull the content of only one URL.

enczp['body_text'][1]

You may see it below.

Elias Dabbas suggested using the “print” function here, so that those new-line characters are displayed as actual new lines. You may see a different angle below.

print(enczp['body_text'][1])

These methods can also be used for TF-IDF analysis with Python and for comparing the results between different competitors. The important part here is cleaning the data. This can also be done for all the sites that rank for a certain search query.
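As a starting point for such a TF-IDF comparison, here is a minimal pure-Python sketch. It uses one common variant (term count divided by document length, multiplied by log(N / document frequency)); libraries such as scikit-learn use smoothed variants, so their numbers will differ. The texts and URLs are hypothetical placeholders for cleaned “body_text” values, and the simple tokenizer also splits on the “\r\n” sequences.

```python
import math
import re
from collections import Counter

# Hypothetical cleaned body texts, one per page (or per competitor)
docs = {
    "https://example.com/a": "electricity tariff compare electricity prices",
    "https://example.com/b": "electricity bill payment online",
    "https://example.com/c": "compare tariff offers",
}


def tokenize(text):
    """Lowercase and split on non-word characters (this also removes \\r\\n)."""
    return [t for t in re.split(r"\W+", text.lower()) if t]


def tf_idf(docs):
    tokens = {url: tokenize(text) for url, text in docs.items()}
    n_docs = len(tokens)
    # Document frequency: in how many documents each term appears
    df = Counter(term for terms in tokens.values() for term in set(terms))
    scores = {}
    for url, terms in tokens.items():
        counts = Counter(terms)
        total = len(terms)
        scores[url] = {
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        }
    return scores


scores = tf_idf(docs)
page_a = scores["https://example.com/a"]
top_term_a = max(page_a, key=page_a.get)
print(top_term_a)
```

A term that appears on every page gets log(1) = 0, which is why repeated boilerplate words drop out of the ranking.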

Last Thoughts on Holistic SEO and Crawling, Analyzing a Web Entity via Python

We have demonstrated how to do a better SEO analysis of a website via Python in less time. We also showed some opportunities and ideas for expansion that even Screaming Frog can’t offer for the moment. Python is not a must for an SEO. But if you are a Holistic SEO, Python can help you analyze lots of different data columns, all in one place. A Holistic SEO can also create his or her own Python functions or scripts to make this process even shorter and more efficient.

This guideline leaves out many points and has a lot of room for expansion. With time, we will improve our guideline on crawling a web site via Python; if you want to contribute, you may contact us.


Hi, on running the crawl() function I am receiving an error “FileNotFoundError: [WinError 2] The system cannot find the file specified”

Hello Nishit,

Thanks for reading.

The WinError 2 happens if you give a wrong path for your output file, or if you do not have permission to access the path of the output file.